AI: Exponential Expectations

...how to frame AI tech growth curves

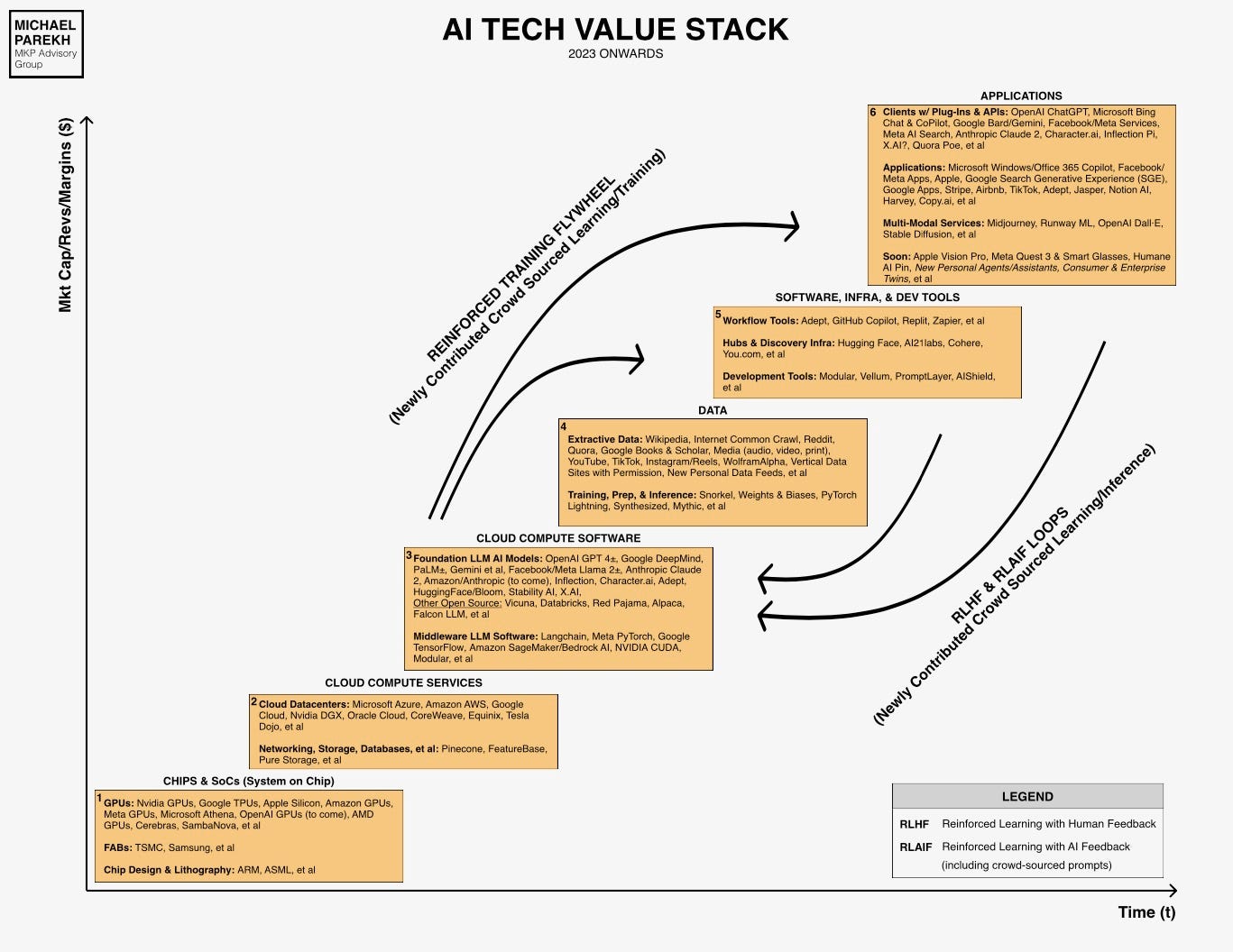

Ask the leading founders and technologists at the main LLM AI companies, and one comes away with their collective conviction about the exponential growth ahead for AI tech capabilities, in both software and hardware, built on top of ever more power-hungry, AI ‘Compute’-heavy data centers. These include many of the smartest people in AI hardware and software (OpenAI, Inflection, Anthropic, Nvidia et al), who are predicting multi-fold, exponential improvements in LLM AI capabilities over the next one to three years.

For now, this dramatically outpaces Moore’s law, which promised, and for the most part delivered, a doubling of computing hardware capabilities at ever lower prices every two years or so. That cadence has largely held for over half a century and counting.
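To make that compounding concrete, here is a quick worked example. It assumes a constant doubling period of exactly two years, which is a simplification since the real-world cadence has varied over time:

```latex
G_{\text{Moore}}(t) = 2^{t/2}
\quad\Rightarrow\quad
G_{\text{Moore}}(50) = 2^{25} = 33{,}554{,}432 \approx 3.4 \times 10^{7}
```

In other words, a steady two-year doubling compounds to roughly a 34-million-fold gain over half a century.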

AI technologies, now driven by ever more massive foundation LLM AI models, and ever more massively parallel GPUs running the brute-force math of their training and ‘reinforcement learning’ loops at unimaginable counts, are currently in their massive ‘exponential growth spurt’ phase, like a toddler growing into a six-foot-plus teenager.

So all this bodes well, for at least the next three to five years, for AI infrastructure growth and for the eventual deployment of the hundreds of billions of dollars being committed to the industry by public and private companies globally. It is of course also good for ongoing investor confidence in AI, as long as investors continue to watch both the financial AND the secular technology cycles in this early AI Tech Wave.

But there are nuances that may be important in framing our expectations, so let me unpack them a bit here.

First, as I’ve stated before, it’s important to remember the sometimes multi-year gaps between hardware progress and software progress. We’ve seen this in EVERY tech cycle, from mainframes to PCs to client-server to the Internet, Cloud, Mobile, and now of course AI. There is always a lag, and sometimes it’s years, even decades.

As I explained in a piece just a few days ago, we’ve seen that gap play out in self-driving cars, for example.

In the case of AI right now, there are a plethora of smart people talking about exponential jumps in BOTH AI hardware and software over the next two or three years.

One example of this confidence, besides the most senior folks at OpenAI, Nvidia and others, is Mustafa Suleyman, currently co-founder and CEO of the multi-billion-dollar AI ‘smart agents’ company Inflection, and before that a co-founder of DeepMind, which is now a key part of Google’s LLM AI assets. Suleyman, who just published a book on AI titled ‘The Coming Wave’, has been saying that AI training models will be "1,000x larger in three years":

“DeepMind (now part of Google) co-founder and Inflection CEO Mustafa Suleyman predicts that AI will continue its exponential progress, with orders-of-magnitude growth in model training sizes over the next few years.”

“In 2022, having left both DeepMind and Google, he then co-founded Inflection AI with the goal of leveraging "AI to help humans 'talk' to computers". Inflection has launched a chatbot named Pi, which stands for Personal Intelligence, able to "remember" past conversations and get to know its users over time, offering people emotional support when needed. The company has also built a supercomputer with 22,000 NVIDIA H100 GPUs, one of the largest in the industry.”

“Suleyman began by talking about his new book, The Coming Wave: Technology, Power, and the Twenty-first Century's Greatest Dilemma, published this week and described as "an urgent warning of the unprecedented risks that AI and other fast-developing technologies pose to global order, and how we might contain them while we have the chance".”
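To put the "1,000x larger in three years" figure next to Moore’s law, here is a back-of-the-envelope sketch in Python. It assumes smooth, constant compounding in both cases (neither real-world curve is that tidy), and note that Suleyman’s figure refers to model training size, not capability. The function and variable names are illustrative, not from any library.

```python
import math

def annual_factor(total_growth: float, years: float) -> float:
    """Constant yearly multiplier that compounds to `total_growth` over `years`."""
    return total_growth ** (1.0 / years)

def moores_law_growth(years: float, doubling_period: float = 2.0) -> float:
    """Cumulative growth under a simple 'double every doubling_period years' rule."""
    return 2.0 ** (years / doubling_period)

YEARS = 3
AI_GROWTH = 1_000  # Suleyman's "1,000x larger in three years" (training size)

ai_per_year = annual_factor(AI_GROWTH, YEARS)           # roughly 10x per year
ai_doubling_months = 12 * YEARS / math.log2(AI_GROWTH)  # implied doubling time in months
moore_total = moores_law_growth(YEARS)                  # about 2.83x over 3 years

print(f"AI: ~{ai_per_year:.1f}x per year, doubling every ~{ai_doubling_months:.1f} months")
print(f"Moore's law over {YEARS} years: ~{moore_total:.2f}x")
print(f"Gap after {YEARS} years: ~{AI_GROWTH / moore_total:.0f}x")
```

Run as-is, it reports roughly 10x per year (a doubling about every 3.6 months) versus about 2.83x for Moore’s law over the same three years, a gap of a few hundred fold.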

OpenAI has been focusing on a similar exponential growth path for AI capabilities, toward artificial general intelligence (AGI) and eventually ‘Superintelligence’.

Nvidia’s next chips and components are focused on multi-fold improvements in both the capabilities of the GPUs AND the surrounding AI networking and data transfer infrastructure. This is the foundation of founder/CEO Jensen Huang’s ‘New Computing Strategy’, driven in part by the company’s DGX ‘accelerated computing’ data center technologies that I’ve talked about in earlier pieces.

So these are the SECULAR technology growth curves that the founder/CEOs of the top AI companies are excited about over the next three years at least.

The thesis is that the brute-force improvement from the big jump in hardware will lead to a 10x-plus brute-force improvement in LLM AI software, which will then deliver the big jumps toward AGI and Superintelligence that many of these leaders are hyper-focused on.

From my perspective, I think they’re right, and there will be an exponential, multi-fold spurt in hardware and software LLM AI capabilities over the next three years. Then, like most tech ‘S’ and ‘J’ growth curves, the gains may slow down a tad as industry participants and customers digest and start to implement the technologies.
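An ‘S’ curve of this kind is often modeled with a logistic function, which grows almost exponentially at first and then flattens toward a ceiling. The Python sketch below uses made-up parameters (a ceiling of 1,000, a growth rate of 1.5 per year, a midpoint at year 5) purely for illustration; they are not a forecast of AI capabilities. It prints the year-over-year multiplier, which starts near e^1.5 (about 4.5x) and falls toward 1x as the curve saturates.

```python
import math

def logistic(t: float, capacity: float = 1000.0, rate: float = 1.5, midpoint: float = 5.0) -> float:
    """S-curve: near-exponential growth early, flattening toward `capacity` later.
    Parameter defaults are hypothetical, chosen only to illustrate the shape."""
    return capacity / (1.0 + math.exp(-rate * (t - midpoint)))

def growth_ratios(years: int) -> list[float]:
    """Year-over-year multiplier logistic(t + 1) / logistic(t) for t = 0..years-1."""
    return [logistic(t + 1) / logistic(t) for t in range(years)]

# Early ratios sit close to e**rate; later ones shrink toward 1.0 as growth saturates.
for year, ratio in enumerate(growth_ratios(10)):
    print(f"year {year} -> {year + 1}: x{ratio:.2f}")
```

The multiplier shrinks every year even while absolute gains are still large around the midpoint, which is one way an "exponential spurt" and a later slowdown can both be true of the same curve.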

However, I’m NOT sure there will be an EQUAL jump in the way regular people USE and ACCESS AI capabilities. As I’ve discussed in several earlier pieces, it will take a while for customers, both businesses and consumers, to figure out how to implement these technologies at scale, and for the industry to figure out how to build sustainable business models on top of them at scale. And there is of course the matter of finding the right ‘product-market fit’ for these rapidly evolving technologies, and of what customers will ultimately pay.

The next wave of development, in AI ‘smart agents’, multimodal AI services driven by voice and vision, and deeper integration of AI with traditional software and data sources, will of course help work out some of these growth curves in the near term. But true mainstream adoption of AI at scale, with sustained changes in user habits, will take time.

Today, AI still needs a lot of well-designed prompts, often in a SERIES of questions, to get really impressive results. This means that regular people may not see the improvements as much as early adopters will.

This is where regular users may perceive the GAP as longer. Much depends on how the industry innovates on the ways people can access and use AI. As excited as I am about the multimodal voice capabilities in ChatGPT and in other AI services from Google, Amazon, Apple, and others, I’m not sure voice access alone closes that gap in the first versions.

For investors, though, it will still be a good enough progress report to sustain the excitement around AI momentum, even if it’s far less than a thousandfold improvement.

And again, remember that it still beats Moore’s law, which promised a doubling every two years or so. The key is to frame these exponential growth curves with realistic expectations of execution and adoption. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)