AI: Feeling our way to AI UI/UX

...the quest for AI 'product-market-fit'

In the ten months since OpenAI’s ‘ChatGPT moment’, and after $200 billion plus in AI infrastructure investments, the world is still trying to figure out how AI will be used at scale in all its many possible incarnations. That includes the many ways for users to interact and experiment with it, or ‘UI/UX’ in Silicon Valley speak.

These include of course the ChatGPT style ‘ChatBot’, with variations coming in celebrity ‘Smart Agent’ flavors by Meta and others. And they include behind-the-scenes ‘For You’ feed algorithms by TikTok, which endlessly provide highly scrollable, entertaining and informative video content generated by tens of millions of ‘Creators’ in the new ‘Creator Economy’, and soon by AI generated creators too.

And they include AI software like Microsoft’s Copilot, which humbly started out as an assistant over your shoulder to help coders, and soon will be at the beck and call of hundreds of millions of Windows 11 and Office 365 users worldwide, ‘augmenting’ them with helpful AI knowledge boosts at work and play.

Apple is hard at work as outlined here and here, finding bottom up ways to integrate AI into its many devices, apps and services, including its next generation Vision Pro platform in early 2024, even at its ‘historical bargain’ price of $3499. Recent reports have their AI head ‘JG’ looking hard at everything from Siri, to search delivered by Apple products using both its own technologies and Google.

But as Sam Altman of OpenAI himself said not too long ago, AI has yet to find more ‘Product-Market-Fit’, Silicon Valley speak for user interactions and experiences that can be delivered via software and hardware at scale, and then monetized with a combination of advertising, transactions and/or subscriptions.

It’s Big Business that can eventually be worth trillions, in the perennial quest for countless ‘Unicorns’ (Silicon Valley speak for private companies valued at $1 billion plus).

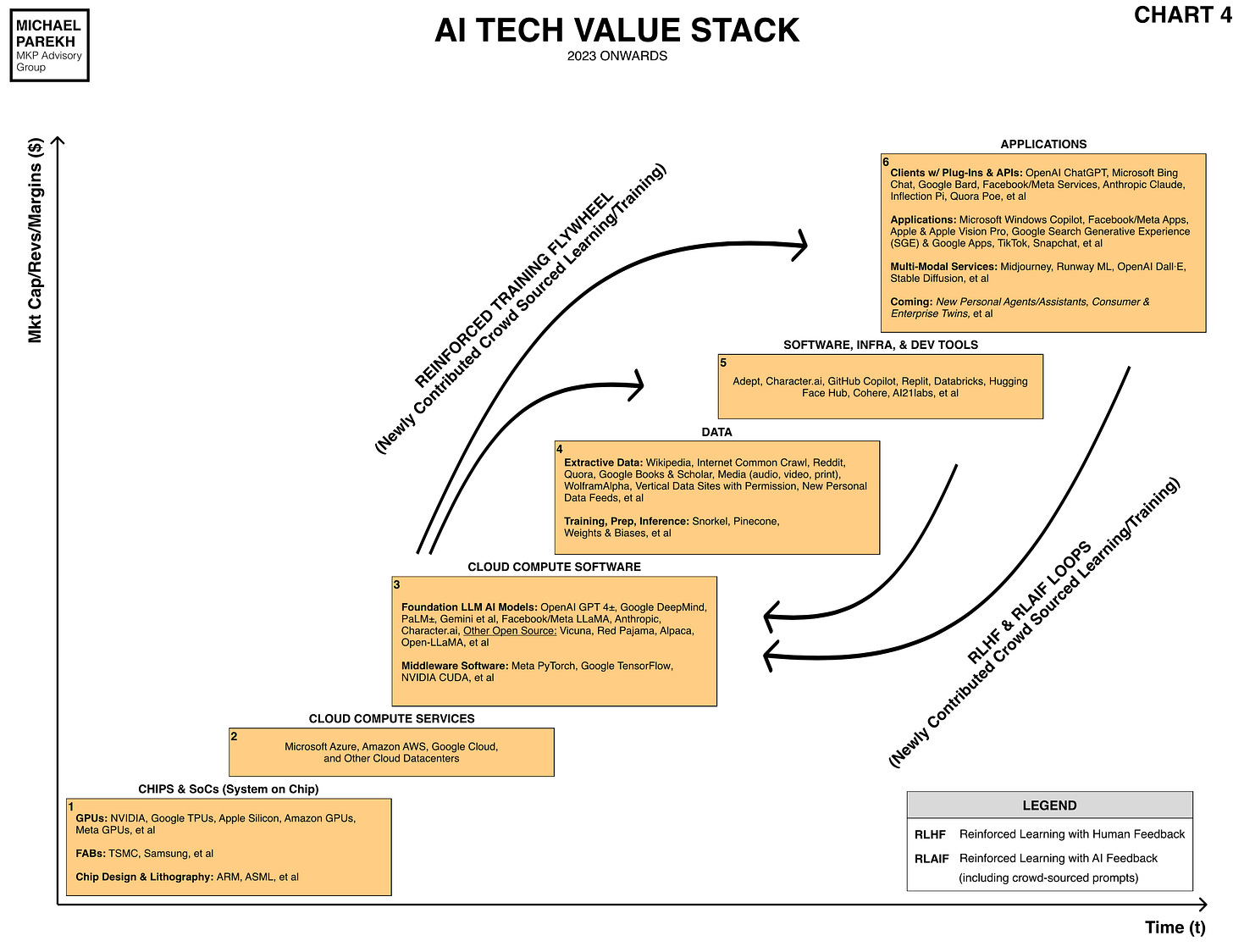

There is no lack of venture backed companies trying to figure out the endless ways to deliver AI to billions. It’s Box 6 in the AI Tech Wave chart above. After all, as I’ve explained, AI software is a new type of math and fast GPU driven algorithmic approach to turbocharge and supplement traditional software, to do more useful things for society than have been delivered to us over the last 75 plus years.

The main challenge and opportunity is HOW to deliver AI to the masses, and how fast. It’s different from traditional software in that its output is endlessly qualitative in its variety, and in its ability to help users Create and Reason based on the cornucopia of human knowledge and data stored on the internet to date and beyond. That data is being constantly mined, and then endlessly ‘reinforced’ with learning loops based on user queries (aka ‘Prompts’) to increase reliability, relevancy, and of course safety. And that can be done in ways far beyond the humble ‘text prompts’ of ChatGPT.

As I’ve outlined before, AI is going ‘multimodal’, where single queries will generate LLM AI responses in Images, Voice, Video, Code, and many more ‘modalities’ to come. All our senses are going to be used to the max, beyond the Sight and Touch of our smartphones, to Voice and beyond.

This whole issue was spotlighted a few days ago by reports that OpenAI’s Sam Altman and Apple’s vaunted former Design chief Jony Ive were spit-balling new ways to deliver AI to the masses, funded with over a billion dollars from the ever-ready Masayoshi Son of Softbank in Japan.

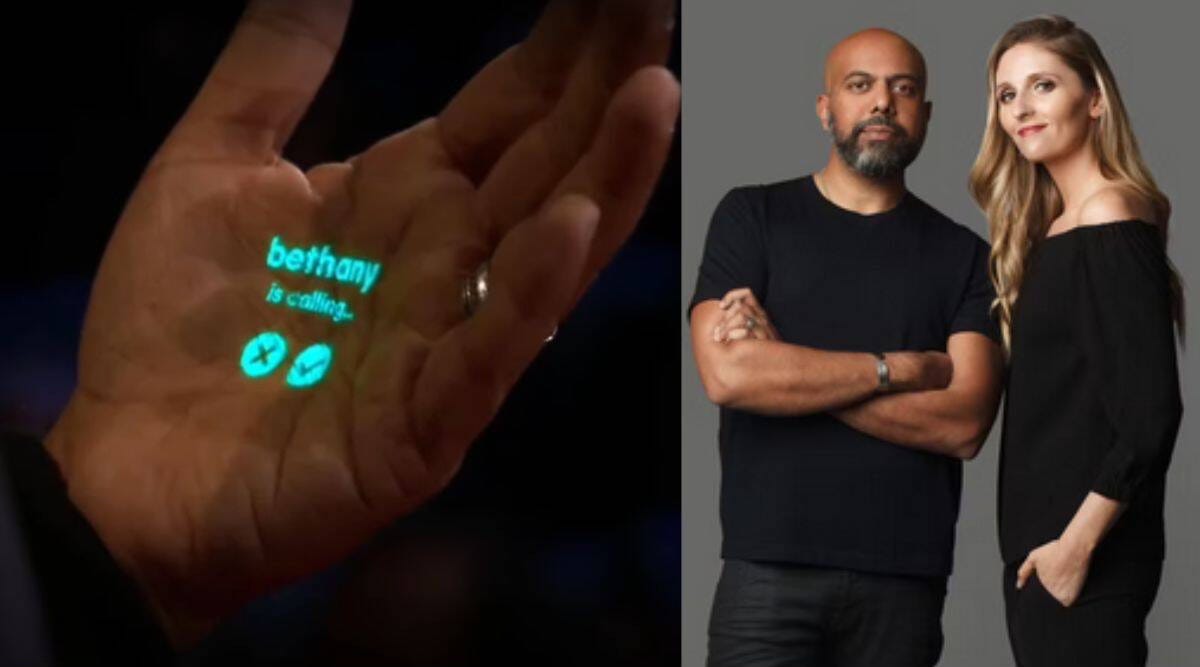

Sam Altman is also an investor in another startup led by a former Design couple, Imran Chaudhri and Bethany Bongiorno, veterans of Apple, that has been toiling away since 2018 on a way to deliver AI without screens or smartphones.

Called Humane AI, the company hinted at its product and its possibilities at a TED talk a few months ago, and this week gave another peek at a Paris fashion show with Naomi Campbell and others showing it off. A broader showcasing is coming in November. Its current form of AI delivery is a lapel pin that can project images onto surfaces like your hand. The pin is activated by a touch with one’s hand, like in Star Trek from the 1960s of course.

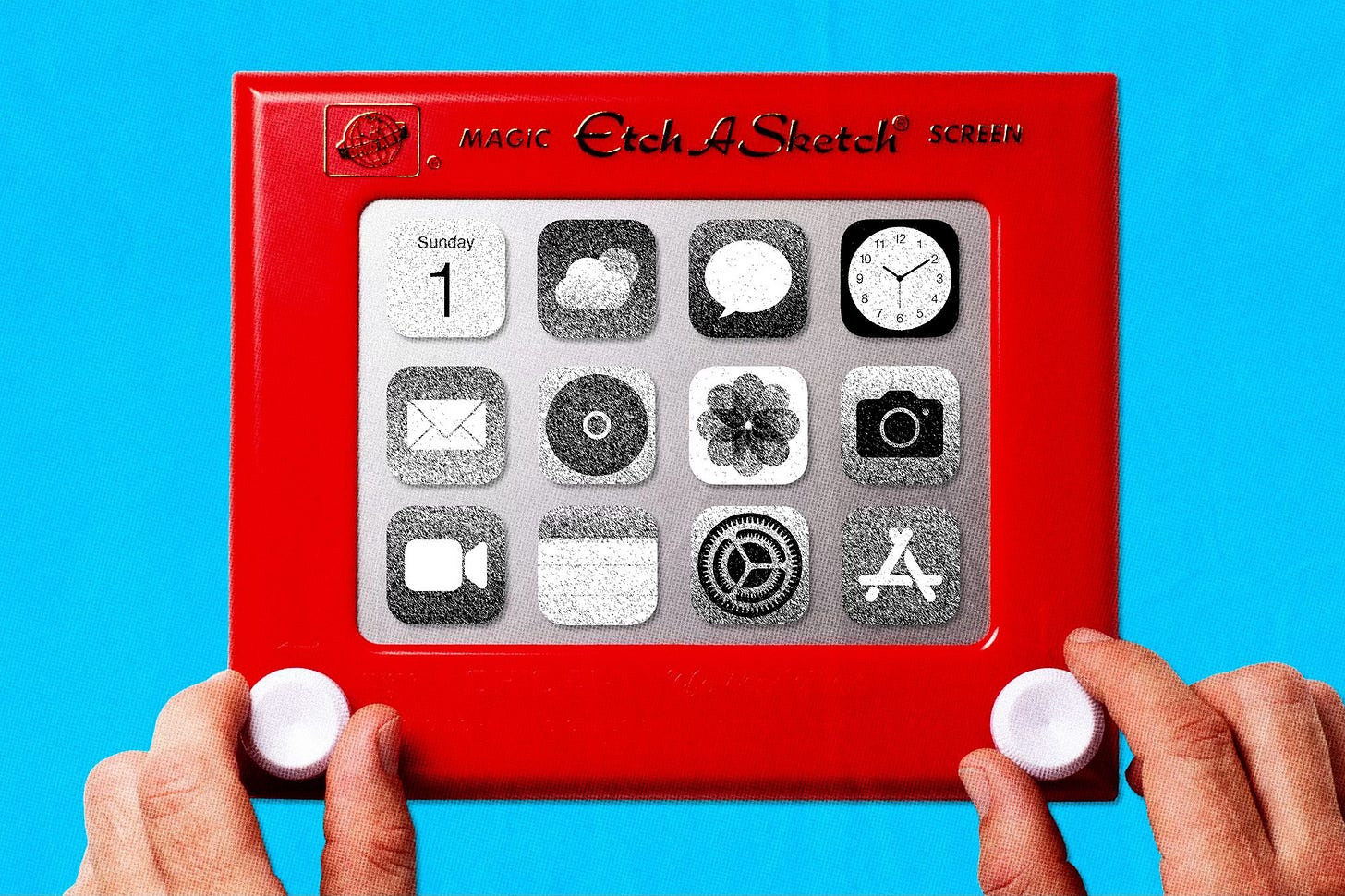

Since the explosive popularity of the all-touch smartphone pioneered at scale by Steve Jobs and Apple in 2007, over four billion people around the world are now addicted to these devices for hours a day. That was helped also of course by Google’s Android, and its many derivatives in Asia that make up a big percentage of the world’s smartphones. So we’ve all been thoroughly trained in daily habits to touch our way across glass screens to get things done.

That has of course carried over to our cars, led by Tesla with its iPad sized screens in the center console of its cars, and now copied by every car manufacturer in the world. And Apple and Google are trying to make sure their software operating systems rule that ‘platform’ as well with CarPlay, Android Auto, and Google’s other automotive software efforts. And all those systems of course are getting revamped with LLM and Generative AI makeovers as fast as possible. Apple’s latest version of CarPlay is an entire dashboard of screens. We’re already seeing production versions of similar screen driven cars from Mercedes, BMW and many others.

The same of course is going on with the other way we’ve all been trained to get machine learning driven information: ‘Voice Assistant’ devices in the hundreds of millions, like Amazon’s Echo and Alexa, Apple’s HomePods and Siri, Google’s Nest and Assistant, and countless others. And as I’ve also documented here, these efforts are also being ‘augmented’ and revamped with a healthy dollop of LLM and Generative AI.

There are lots of pros and cons with bringing our habits from touchscreens and voice assistants to cars, as pithily discussed in this WSJ piece by Nicole Nguyen:

“Screens, on the other hand, need our full attention, which is why using them can be frustrating when we are doing something else, like driving.”

“‘Although we call them touch screens, they require sightedness,’ explained Rachel Plotnick, associate professor of media studies at Indiana University Bloomington.”

“Plotnick has a name for this frustration: the ‘rage poke.’ When people rage poke, they’re exasperated by the screens’ lack of interactiveness, she said.”

“Voice-enabled commands were supposed to help, but using AI assistants, which don’t always understand correctly or perform the right task, can be just as maddening.”

It’s a human trait to want touch to extend beyond screens, to include knobs and switches again.

Meta this past week also showed off its next generation VR/AR/MR (virtual reality, augmented reality, and mixed reality) headset, the Quest 3 ($499+), and the second generation of ‘Smart Glasses’ in a partnership with Ray-Ban ($299+). In addition to taking photos and videos and sending them to Facebook and Instagram, the glasses now also allow ‘Live Streaming’ to Meta’s Reels platforms, and can do voice driven AI searches using Meta AI.

Meta AI is of course Meta’s AI service based on their open-source Llama 2 LLM AI, and real-time Search capabilities in partnership with Microsoft’s Bing service.

All this is to indicate that companies large and small are investing billions and working hard to help humans use ALL their senses to access AI driven content and services. Sight, Voice, Hearing, Touch, and I’m sure senses like Taste are not far off.

We know typing text into a chatbot, even if it’s to play Dungeon Master with Snoop Dogg himself on Meta’s services, doesn’t quite do it in terms of UI/UX down the line at scale.

It’s all in a rapid state of exploration and experimentation, to leverage human desires, emotional and rational, to live a richer life. And we’re again the guinea pigs. Both with our spending dollars, and of course our Attention. At least the early adopters amongst us. And those early adopters, in a world of over 4 billion smartphone users, represent a big, potent market for AI exploration, WAY BEFORE there is true ‘product-market-fit’. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)