ChatGPT: About those Plugins.

Work in Progress.

OpenAI Founder/CEO Sam Altman: ChatGPT Plugins “don’t have Product Market Fit”

For some months now, the industry has been excited about rapidly extending LLM AI models into tons of vertical apps and services, led of course by OpenAI, which launched ChatGPT Plugins in March.

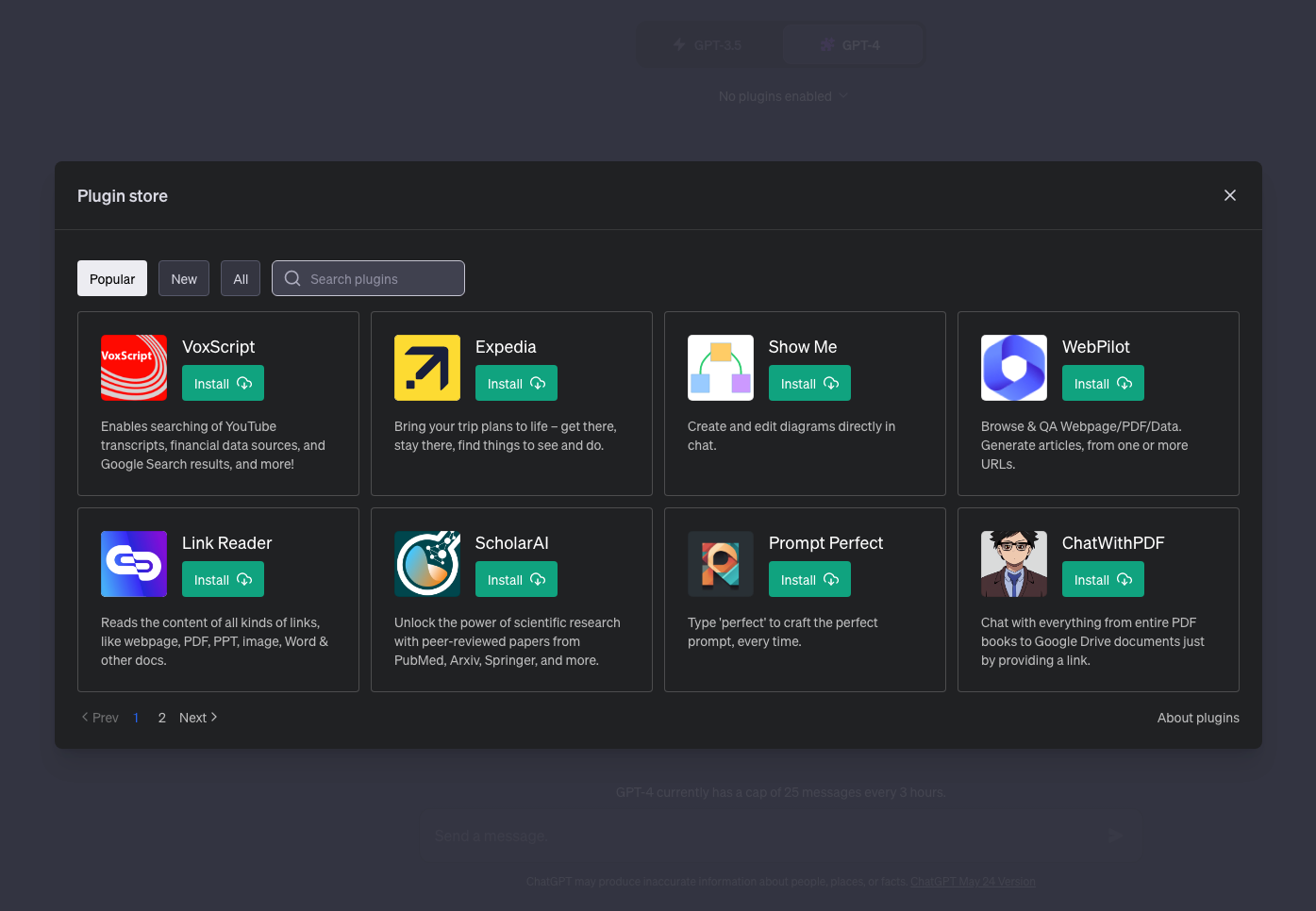

OpenAI and Microsoft in particular made back-to-back announcements over the last couple of weeks on expanding support to hundreds if not thousands of third-party apps and services. Note that only ChatGPT Plus subscribers, paying $20/month, get access to the Plugins, while Microsoft plans to roll out hundreds and eventually thousands of Plugins to users of Bing Chat, presumably starting with users of the Microsoft Edge browser.

In the developer conference interview this past week, mentioned in the previous post, Sam Altman did, however, temper expectations on this front.

The key point:

“Plugins ‘don’t have Product Market Fit (PMF)’ and are probably not coming to the API anytime soon.

A lot of developers are interested in getting access to ChatGPT plugins via the API but Sam said he didn’t think they’d be released any time soon. The usage of plugins, other than browsing, suggests that they don’t have PMF yet. He suggested that a lot of people thought they wanted their apps to be inside ChatGPT but what they really wanted was ChatGPT in their apps.”

We looked around to see how tech bloggers were reviewing ChatGPT plugins. A typical take came from Andy Stapleton, a well-known tech blogger, in a representative YouTube review.

The money quote comes at the 7:45 mark: “Plugins on ChatGPT are JUST SO ANNOYING TO USE.” That’s the headline.

My nephew Neal (a third-year Computer Science & Design major at UT Austin), who’s helping me with AI matters this summer, went through these reviews and had this to add:

“The biggest downside as of now has to do with only being able to use 3 plugins at a time, which means you will have to spend a lot of time trying several plugins for the same task.”

Source: OpenAI

Tedious.

Tom’s Guide also reviewed some of the ChatGPT Plugins and found a few to like and recommend. But the experience it describes still comes across as a work in progress.

So Sam was right, and candid. NO PRODUCT MARKET FIT so far.

It all feels patched together, not put together. For now. It will get better.

PAPERS, PAPERS, PAPERS

Finally, I wanted to leave you all with some notable AI papers for the weekend. They continue to come fast and furious every week, and they highlight that we’re at the Beginning of the Beginning of AI research, innovation, and eventual capabilities.

This VentureBeat article on a new paper is worth noting. The researchers involved, from MIT and elsewhere, are one of several groups making headway in getting smaller LLM AI models to perform as well as larger ones. This could make LLM AI more accessible, Open or Closed, at far lower compute costs. The paper itself can be found here.

Also this week, OpenAI announced meaningful improvements in training with what it calls “process supervision”: rewarding each correct step of a model’s reasoning, rather than only the final answer (“outcome supervision”). It seems to move the needle on alignment, producing more interpretable reasoning. A great goal to keep working towards.

Next up is a paper on AudioGPT, a multi-modal AI system that connects ChatGPT with audio foundation models. More details in this story by MarkTechPost.

And Meta has an approach to smaller models that could obviate the need for large foundation LLM AI models. Link to the paper here.

That’s it for now.

Enjoy your weekend, all. Lots more to come this week, starting with Apple kicking off its WWDC 2023 developer conference in Cupertino tomorrow. We’ll likely hear AI mentioned at least a few times at the event. Stay tuned.