AI: Tech Speed Limits

...figuring out the acceleration

AI: In my View, September 10 2023

In this Sunday’s ‘AI: In my View’, I’d like to make the case that we raise the ‘Speed Limit’ for trying out AI technologies at Scale. Not to 100-plus mph, as many ‘Accelerationists’ (aka ‘e/acc’, effective accelerationism) might argue for, but certainly above the 55 mph we seem to be driving at as a society. We haven’t yet settled on 60 or 65 mph as a safe but more efficient speed limit for society at large, especially given the unique set of Fears attached to AI versus previous technologies like the PC or the Internet. Let me explain.

First, some background again on the unprecedented overhang of Fear and Safety concerns around AI, which is tempering societal enthusiasm for AI technologies both top-down and bottom-up, in specific sectors like Finance, Healthcare and beyond. All this while we still don’t really know how these newfangled AI technologies work.

Most nations are figuring out their comfortable speed limits for AI, with the US currently leaning towards a higher limit (White House-sponsored ‘Red Teams’ in Vegas), and the EU towards a slower one, especially with its pending EU AI Act. This Fall will see additional deliberations in the US Congress, with Senator Schumer’s ‘AI Insight Forum’ kicking off this week on September 13th (Links in quote mine):

“The September 13 event will involve Google CEO Sundar Pichai and former Google CEO Eric Schmidt; Meta CEO Mark Zuckerberg, OpenAI CEO Sam Altman; Microsoft CEO Satya Nadella; Nvidia CEO Jensen Huang; and Elon Musk, CEO of X, the company formerly known as Twitter.”

So it will be an eventful discussion indeed.

The White House has been actively focused on this front, as I’ve written about, and those efforts will see further developments this year. On a bottom-up basis, recent surveys indicate heightened fear about AI; this study put that figure at over 60% earlier this year.

The NY Times this week highlights “The Technology Facebook and Google didn’t dare release”:

“Engineers at the tech giants built tools years ago that could put a name to any face but, for once, Silicon Valley did not want to move fast and break things.”

The piece echoes another one, surprisingly out of China, this week via the WSJ: “After feeding explosion of Facial Recognition, China moves to rein it in”:

“Authorities propose limits on when to use the technology, even as they leave national-security exceptions”.

Both pieces are worth reading for the intricate issues and nuances. There is lots of debate, and there are real issues, on both sides.

And the issue goes beyond facial recognition, into areas that LLMs and Generative AI are particularly well suited for. As I highlighted a few weeks ago, Zoom, the online videoconferencing company, got embroiled in a controversy as it explored how its technologies could be augmented with AI features. Other companies are working on similar AI features that summarize attendees and conversations on video calls (presumably with the participants’ permission).

The irony is that many of these ‘controversial’ applications are things that humans already do, very inefficiently, every day. We go to cocktail parties and networking events to ‘network’ and socialize. Then we try for hours to figure out ‘who was that person I met/saw/talked to at that event?’, searching LinkedIn, Google and Facebook to jog our memory, and asking friends, family and associates for days afterward.

Or we take inefficient notes in video and audio meetings, or have an assistant do it, then spend hours and days editing those notes for follow-ups and the like. These are things computers were invented to do better, and LLM AI tech can now really accelerate the possibilities. AI software can turbocharge traditional software to do far better.

Other applications, of course, are in areas like Healthcare, where we have guarded our personal digital health data with a warren of rules, laws and regulations, even though that data, both in aggregate and in the particular, can help us find ailments earlier and fine-tune better treatments than we could without it. The speed limits on technology here simply make everything take longer to reach the eventual possibilities and results.

One could go on with examples in Education, Financial Services, Legal Services, and so many corners of our workplaces. AI and related technologies are in their infancy, and we’re still a long way from realizing these possibilities.

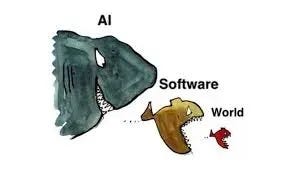

We need to figure out the right Acceleration or Speed Limit, whether or not we embrace ‘Accelerationism’ all the way, as technology luminaries like Marc Andreessen have done. Yes, he of “Software is eating the World” fame, and now an AI enthusiast, to say the least.

On the other side, another AI luminary, DeepMind co-founder Mustafa Suleyman, now a co-founder of Inflection AI, a new ‘Smart Agents’ unicorn, argues “for lawmakers to seize the opportunities and mitigate the potentially catastrophic risks of AI” in a new book, as detailed in this NY Times piece. The Times specifically asked him where technologists stand on the ‘speed limits’:

“How have your peers responded to your ideas?”

“There are lots of different clusters in Silicon Valley. People like Satya [Nadella, CEO of Microsoft] are very forward thinking about these things and definitely lean into the responsibility that the companies have to do the right thing.”

“But there are definitely skeptics. Marc Andreessen, the venture capital investor, just thinks that there’s not going to be much of a downside. It’s all going to be fine and dandy. I’m as much of an accelerationist as Andreessen, but I’m just more wide-eyed and comfortable talking about the potential harms, and I think that is a more intellectually honest position.”

On the acceleration ‘Speed Limit’, I’m closer to Marc Andreessen than to his peers. The core issue for me is that these technologies can’t be regulated top-down, especially since many of the core technologies to come haven’t been invented yet. They’re ‘Unknowable’ from where we sit today. We just don’t know what we don’t know.

Yes, we need to be careful, as the folks who built the Large Hadron Collider beneath the Franco-Swiss border were when, facing unknowables, they had to be confident the incredible device wouldn’t blow up the world. But we will never learn not to put our hand on a hot stove if we haven’t done it once first.

Its benefits, scientific, economic and beyond, are still being debated, calculated and figured out, even though it has already contributed to discovering the famous Higgs boson and confirming its place in the Standard Model of physics. As the BBC highlighted eight years ago, the discovery “proved the existence of an invisible process that performs the fundamentally important role of giving all other particles their mass or substance”.

And LLM AI today has just as many unknowables about how we can create and reason better as humans, using software and hardware advancing at the speed of Moore’s Law and beyond. Its ‘emergent’ behaviors only come to light if we build mammoth computing data centers costing tens, and likely hundreds, of billions of dollars, and figure out how to pay for them, privately and/or publicly, through experiments grand and small, top-down or bottom-up.

We’ve got to go through the Looking Glass. As I’ve discussed before, all technologies since the dawn of fire have had bad and good applications. Society’s role is finding the right balance and speed to adopt them. In these days of AI, a little bit more pedal to the metal might be more good than bad.

A higher speed here is likely better for society than not, especially when it comes to retraining our limbic brains, which drive our fast, emotional reactions more than our considered decisions. Those come, of course, from our neocortex, where rational decisions eventually emerge after a fair bit of reasoning. We seldom do what’s good for us until we’ve done what’s bad for us for a long time. That applies to us as individuals, as tribes and as nations, as we eventually figure out the right habits for society at large.

Good habits take a long time to learn and adopt, especially for society at scale. We need to give ourselves and our machines more permission to do things differently than before. Walk into a meeting, online or in real life, and expect that everything seen, said and heard is a matter of public record.

Or go into a social function expecting to be identified by anyone with any interest. We already experience these things every day, and always have, with the expectation that they will happen, just more slowly and in fewer numbers and circles. Technology today simply makes it happen faster, in potentially larger numbers and circles. Maybe that’s more good than bad. Just look at how, as a society, we’ve learned to preen and put ourselves out there on the Instagrams, Facebooks, YouTubes and TikToks. Yes, I know very well there have been societal harms, and we’re still figuring out the right speeds.

Past fears may be overtaken by greater societal opportunities and efficiencies. The sooner we come to that conclusion and accelerate a bit, the more augmented we will all be, together. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)