AI: Dystopian to Utopian

...the muddle in the middle

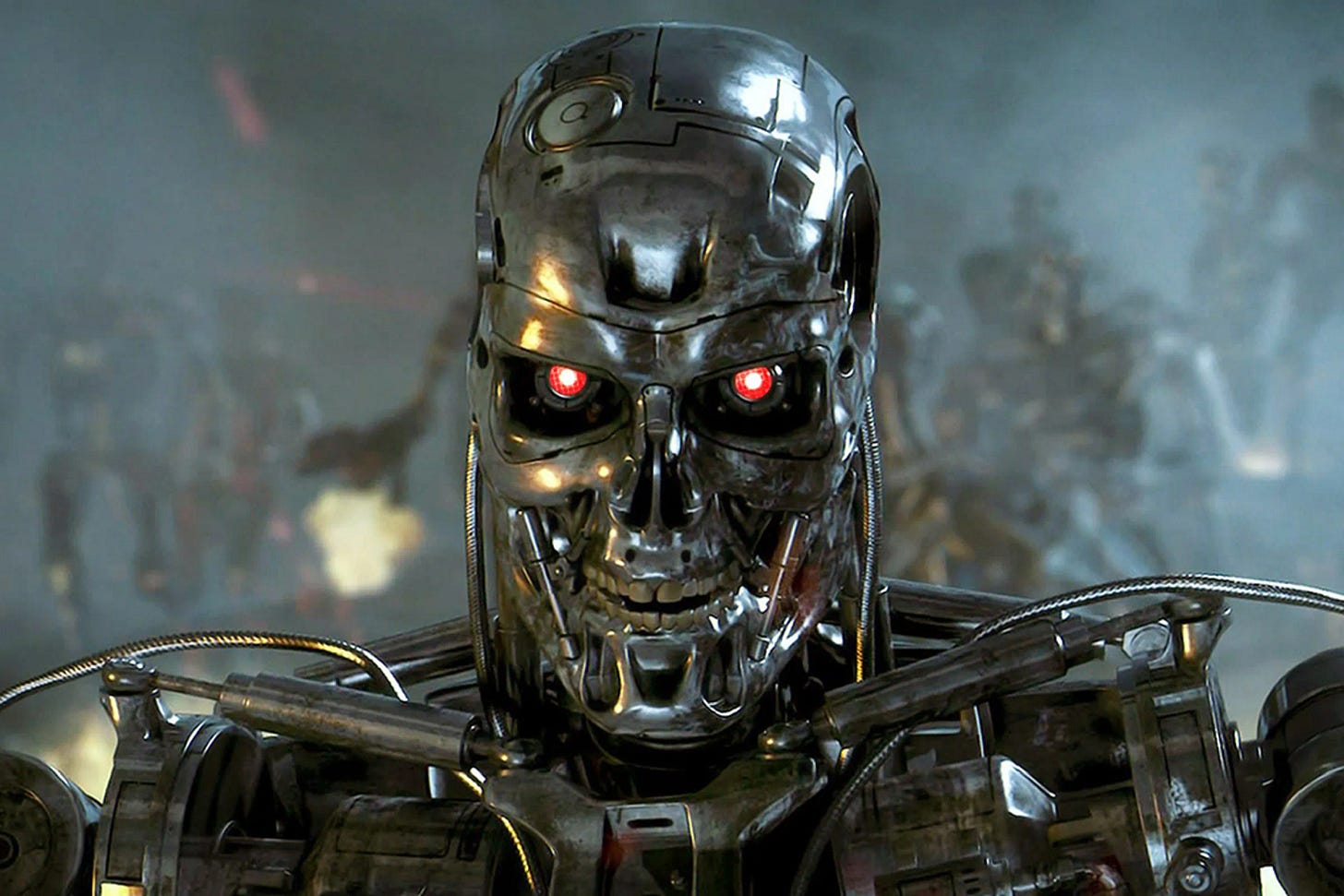

It’s July 4th weekend and, faced with the perennial ‘tyranny of choice’ on Netflix, I clicked on James Cameron’s 1991 masterpiece “Terminator 2: Judgment Day” for the umpteen hundredth time.

This time, the critical bit on Skynet resonated a bit differently (Mild Spoiler Alert):

“Upon its creation, Skynet began to learn at a geometric rate. The system originally went online on August 4, 1997. Human decisions were removed from strategic defense.”

“The system then became self-aware at 2:14 am Eastern Time on August 29th, 1997. In the ensuing panic and attempts to shut Skynet down, Skynet retaliated by firing American nuclear missiles at their target sites in Russia. Russia returned fire and three-billion human lives ended in the nuclear holocaust. This was what has come to be known as "Judgment Day".”

Whew! Packs a punch every time, even after 32 years. And it highlights how deeply dystopian AI is embedded in our global cultural psyche. Thanks, James Cameron!

A smart friend had coincidentally just emailed me this piece on AI doomsterism by the “Godfather of AI”, Geoffrey Hinton, and asked “what do you make of this?”:

“AI systems may develop the desire to seize control from humans as a way of accomplishing other preprogrammed goals, said Geoffrey Hinton, a professor of computer science at the University of Toronto.”

“‘I think we have to take the possibility seriously that if they get smarter than us, which seems quite likely, and they have goals of their own, which seems quite likely, they may well develop the goal of taking control,’ Hinton said during a June 28 talk at the Collision tech conference in Toronto, Canada.”

He could have been watching Terminator 2. Or reading, yet again, the existential threat statement on AI by some AI luminaries.

I’ve talked about my optimism on AI vs the broader, existential questions around “Artificial General Intelligence”, or AGI (aka Super-Intelligence), and highlighted commentaries by others that buttress the practical, societally beneficial aspects of AI.

But this issue will continue to build as the actual capabilities of LLMs and Generative AI grow. This weekend saw another discussion of AI consequences, “The True Threat of AI”, by Evgeny Morozov, covering the dystopian and marketplace issues around AI, contrasted with the optimism of industry practitioners like Sam Altman of OpenAI, who just completed a world tour on the topic:

“Discussions of A.G.I. are rife with such apocalyptic scenarios. Yet a nascent A.G.I. lobby of academics, investors and entrepreneurs counter that, once made safe, A.G.I. would be a boon to civilization. Mr. Altman, the face of this campaign, embarked on a global tour to charm lawmakers. Earlier this year he wrote that A.G.I. might even turbocharge the economy, boost scientific knowledge and ‘elevate humanity by increasing abundance.’”

The entire piece is worth reading given the various historical references to policies, politics and technology cycles. Even brings in Margaret Thatcher.

Having followed and deeply studied major tech waves and cycles for decades now, from the PC, to the Internet, to now AI, the one thing I’m confident of is that both the good and the bad will take a lot longer to happen than anticipated or feared. As I outlined in my AI preview for the second half of 2023:

“AI still being invented and re-invented: Core AI innovation at the deep technical level is at the ‘beginning of the beginning’ phase. The best technical innovations are still emerging every week.”

In particular,

“Realities in tech ALWAYS take longer to realize. Just remember how long we’ve been waiting just this decade on technologies like Blockchain/Crypto, Self Driving cars/taxis (Tesla FSD inclusive), VR/AR/MR smart glasses, smart Voice assistants, smart homes, and come to think of it, anything with the word ‘Smart’ before it. So let’s temper our timing expectations for ‘smart’ AI assistants.”

Things always take a lot, lot longer to muddle along in the middle than we think.

Remember, we are precisely at the halfway point between 1996, the year after the world’s ‘Netscape moment’, and 2050, by which time the world population is expected to rise from 8 to 10 billion souls, with most of that growth in Africa and Asia. That is only 27 years away, less than the 32 years since Terminator 2 came out.

Between now and then, AI is likely to do a lot more good in umpteen thousand plus prosaic ways, across every imaginable industry, before we have any existential moments with it. I will have a lot more to say about specific AI technologies and segments in posts to come.

In the meantime, enjoy ‘Terminator 2: Judgment Day’ again this weekend. And have a great July 4th weekend. Stay tuned.