AI: More powerful GPUs & Models

...”They just want to Learn”

One thing we can count on for some decades now, is technology getting better and cheaper over time. The AI Tech wave, even in its current early days, is following the template of the PC and Internet waves in that regard.

This time though, it needs to be fed with ever more Data from our world, in forms that we can barely imagine. To ingest, learn via reinforcement loops, while keep getting better in serving our needs with safety and reliability. A lot of moving parts.

We saw news on the AI tech front this week in terms of coming AI hardware and software upgrades. The two core drivers of LLM AI technologies, the GPU chips and the Foundation LLM AI models, keep getting better, more powerful, and cheaper at scale.

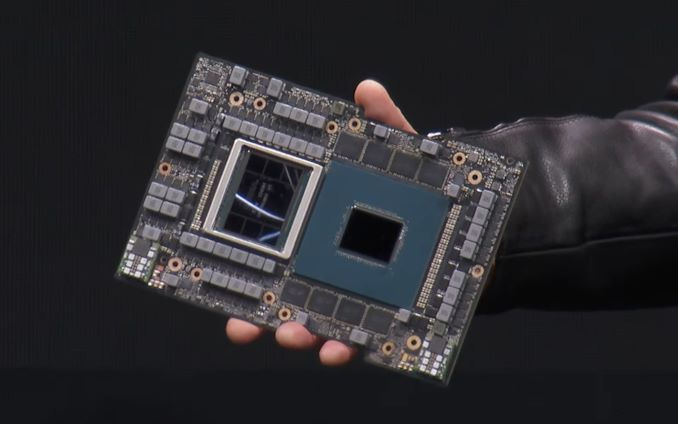

First, with Nvidia announcing it’s next generation GH200 GPU superchips at a big tech trade show, and second, LLM AI Unicorn Anthropic founder, giving color on the race towards bigger LLM AI models by his company and others.

Here’s more on the Nvidia announcement via Bloomberg (my notes and links in Bold):

“Nvidia Corp. announced an updated AI processor that gives a jolt to the chip’s capacity and speed, seeking to cement the company’s dominance in a burgeoning market.

The Grace Hopper Superchip, a combination graphics chip and processor, will get a boost from a new type of memory, Nvidia said Tuesday at the Siggraph conference in Los Angeles. The product relies on high-bandwidth memory 3, or HBM3e, which is able to access information at a blazing 5 terabytes per second.”

“The Superchip, known as GH200 [vs their current top chip H100, which sells at $30,000 plus per chip when available], will go into production in the second quarter of 2024, Nvidia said. It’s part of a new lineup of hardware and software that was announced at the event, a computer-graphics expo where Founder/CEO Jensen Huang spoke.”

“Nvidia has built an early lead in the market for so-called AI accelerators, chips that excel at crunching data in the process of developing artificial intelligence software. That’s helped propel the company’s valuation past $1 trillion this year, making it the world’s most valuable chipmaker. The latest processor signals that Nvidia aims to make it hard for competitors like Advanced Micro Devices Inc. and Intel Corp. to catch up.”

The second set of news around Foundation LLM AI models, was from an interview of Anthropic founder/CEO Dario Amodei, who was earlier at OpenAI, before striking out to build his own LLM AI company.

There’s a transcript of the interview by Dwarkesh Patel here. I’ve written about Anthropic’s Claude 2 LLM AI models, which goes up against OpenAI’s GPT4 and ChatGPT LLM AI products, as well as Google’s Bard and upcoming Gemini LLM AI models. Anthropic is also known for their keen focus on AI model alignment, safety and reliability.

The whole interview is worth reading, but the key item continues to be how LLM AI technologies are still relatively ‘inexplicable’ in how they work. Especially relative to other hardware and software technologies that have come before. Specifically, this opening exchange by interviewer Dwarkesh Patel kicks it off (again, my comments and links in bold):

“First question. You have been one of the very few people who has seen [LLM AI] scaling coming for years. As somebody who's seen it coming, what is fundamentally the explanation for why scaling works? Why is the universe organized such that if you throw big blobs of compute at a wide enough distribution of data, the thing becomes intelligent?”

“Dario Amodei (00:02:24 - 00:04:04):

I think the truth is that we still don't know. It's almost entirely an empirical fact. It's a fact that you could sense from the data and from a bunch of different places but we still don't have a satisfying explanation for it.”

In my piece last month titled “AI: Can’t see how it works”, I quoted another AI luminary as saying:

“If we open up ChatGPT or a system like it and look inside, you just see millions of numbers flipping around a few hundred times a second,” says AI scientist Sam Bowman. “And we just have no idea what any of it means.”

“Bowman says that because systems like this essentially teach themselves, it’s difficult to explain precisely how they work or what they’ll do. Which can lead to unpredictable and even risky scenarios as these programs become more ubiquitous.”

The Anthropic founder echoes much of that distinction with LLM AI models, despite the hundreds of millions and billions being invested in their development going forward. The whole piece is worth perusing, if only to understand how this tech wave is fundamentally different from all other software waves that have come before.

Not from a ‘being afraid’ perspective, but from the fact that we are working on technologies that are fundamentally processing data and information in a totally different way than what we’ve done in computing for over half a century. And we’re just at the very beginning of the beginning here, both in terms of the underlying hardware at the chip level, and certainly the software.

The other point that the Anthropic CEO discussed in his interview, was on the uncertainty of where new Data will come from for the insatiable appetite of tomorrow’s more powerful LLM AI models:

“Dwarkesh Patel (00:11:04 - 00:11:09):”

“You mentioned that Data is likely not to be the constraint. Why do you think that is the case?”

Dario Amodei (00:11:09 - 00:11:25):

“There's various possibilities here and for a number of reasons I shouldn't go into the details, but there's many sources of Data in the world and there's many ways that you can also generate data. My guess is that this will not be a blocker.”

Maybe it would be better if it was, but it won't be.”

As I discussed yesterday, a more immediate source for additional Data is likely going to be video. Google is working on this front with YouTube in recent weeks. As are Meta, TikTok, Elon, and others.

Peering pragmatically at the near term, and as I outlined in my second half 2023 AI and ‘Next Three Year’ outlook pieces recently, we still have three high probability items to look forward to on the AI front:

Short Supplies & High Demand: GPU (Graphical Processing Unit) chips from Nvidia, which currently supplies over 70% plus of the core GPU hardware and software infrastructure that make Foundation LLM (Large Language) AI models work, will be in short supply and in great demand at least into 2025. Lots of companies old and new are in the fray, and ultimately, there will be more options and supply.

Billions invested in Foundation LLM AI Compute Capex: Over a dozen big tech ventures are each going to invest tens and hundreds of millions building larger and more capable Foundation LLM AI models rivaling OpenAI’s GPT4. They would include the obvious public companies like Meta, Microsoft, Google, Amazon, Apple amongst others in the US, and China’s Alibaba, Tencent, and Baidu, as well as private ‘Unicorns’ like Anthropic, Inflection, and others. The capex of the big tech companies are going to be in the billions per quarter for the next 2-3 years at least.

Accelerating AI Compute at Scale: The Chips and the Models would get ever larger, and more efficient at scale. A lot of that is going to be driven by software innovations, especially in terms of how the “reinforcement learning” loops are processed around Foundation LLM AI models. These loops are what drive the increasing functionality, reliability and ‘emergent’ AI capabilities that have so enthralled everyone around the world. Both for consumers and businesses.

Beyond the pragmatic near term issues, I’d like to end with this longer-term insight by the co-founder and Chief Scientist of OpenAI. It came up in the Anthropic interview above by Founder/CEO Amodei (Bold text mine):

“It was just before OpenAI started that I met Ilya Sutskever, (OpenAI co-founder & Chief Scientist), who you interviewed. One of the first things he said to me was —

“Look. The models, they just want to learn. You have to understand this. The models, they just want to learn.”

“And it was a bit like a Zen Koan. I listened to this and I became enlightened.”

The AI hardware and software improvements just underway in these early days, it is just for the models to learn. Also to be fed constantly with Data. And then be given a lot of interaction and reinforcement feedback loops from us of course. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here).

great coverage, thank you!