One of the hardest things for regular folks to get their head around is how AI software is different from traditional software. Why is AI eating software? And why if we’ve had computers serve us faithfully for over half a century, we can’t expect them to do the same going forward. Why is there even this question of existential risks around AI? Why are the brilliant folks who invented this stuff running around saying the sky may fall on us all in a few years? Movies notwithstanding. Why do the AI researchers say they don’t know how it really works, even when they see ‘Sparks’ of amazing capabilities?

I've been wanting to address these questions for a while, and today’s piece is meant to focus on some key ideas around these critical questions. It’s longer than my usual posts, but I think it may be worth a full read on a Sunday.

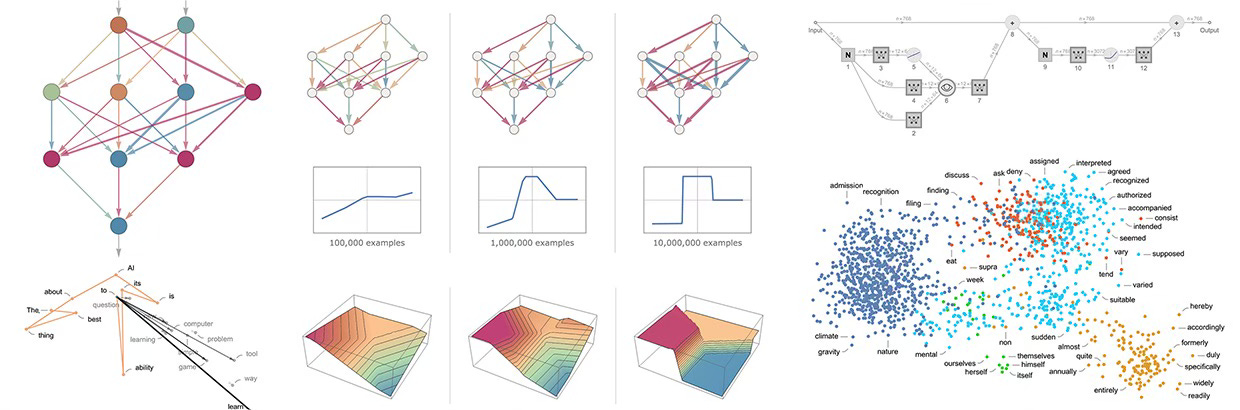

The way I’ve tried to explain AI software before is to highlight that traditional software is based on deterministic code vs AI software being run as probabilistic code. Of course on super fast parallel chips (GPUs), using massive amounts of reinforcement learning feedback loops, constantly feeding on all our Data ever put online, to make the eventual results of the Foundation LLM AI models more reliable and relevant. Less hallucinatory.

And how all this makes this AI Tech wave far more potent in human opportunities than any other tech wave before it like the PC or the Internet.

But it may be worth hearing directly on this from a deep practitioner of the LLM AI arts, Sam Bowman, who is an AI academic and practitioner at NYU, and has written important AI papers, on why these results are not like traditional software. Why even the coders do not know how all this AI code works:

“If we open up ChatGPT or a system like it and look inside, you just see millions of numbers flipping around a few hundred times a second,” says AI scientist Sam Bowman. “And we just have no idea what any of it means.”

“Bowman is a professor at NYU, where he runs an AI research lab, and he’s a researcher at Anthropic, an AI research company. He’s spent years building systems like ChatGPT, assessing what they can do, and studying how they work.”

“He explains that ChatGPT runs on something called an artificial neural network, which is a type of AI modeled on the human brain. Instead of having a bunch of rules explicitly coded in like a traditional computer program, this kind of AI learns to detect and predict patterns over time.

“But Bowman says that because systems like this essentially teach themselves, it’s difficult to explain precisely how they work or what they’ll do. Which can lead to unpredictable and even risky scenarios as these programs become more ubiquitous.”

It’s worth going into the tech weeds with him on how this really works in plain words. He goes on:

“So the main way that systems like ChatGPT are trained is by basically doing autocomplete. We’ll feed these systems sort of long text from the web. We’ll just have them read through a Wikipedia article word by word. And after it’s seen each word, we’re going to ask it to guess what word is gonna come next. It’s doing this with probability. It’s saying, “It’s a 20 percent chance it’s ‘the,’ 20 percent chance it’s ‘of.’” And then because we know what word actually comes next, we can tell it if it got it right.”

“This takes months, millions of dollars worth of computer time, and then you get a really fancy autocomplete tool. But you want to refine it to act more like the thing that you’re actually trying to build, act like a sort of helpful virtual assistant.”

“There are a few different ways people do this, but the main one is reinforcement learning. The basic idea behind this is you have some sort of test users chat with the system and essentially upvote or downvote responses. Sort of similarly to how you might tell the model, “All right, make this word more likely because it’s the real next word,” with reinforcement learning, you say, “All right, make this entire response more likely because the user liked it, and make this entire response less likely because the user didn’t like it.”

Then he puts a bow on it:

“So there’s two connected big concerning unknowns. The first is that we don’t really know what they’re doing in any deep sense. If we open up ChatGPT or a system like it and look inside, you just see millions of numbers flipping around a few hundred times a second, and we just have no idea what any of it means. With only the tiniest of exceptions, we can’t look inside these things and say, “Oh, here’s what concepts it’s using, here’s what kind of rules of reasoning it’s using. Here’s what it does and doesn’t know in any deep way.” We just don’t understand what’s going on here. We built it, we trained it, but we don’t know what it’s doing.”

“Yes. The other big unknown that’s connected to this is we don’t know how to steer these things or control them in any reliable way. We can kind of nudge them to do more of what we want, but the only way we can tell if our nudges worked is by just putting these systems out in the world and seeing what they do. We’re really just kind of steering these things almost completely through trial and error.”

And this despite the leading AI company out there, OpenAI, just having its Chief Scientist commit up to 20% of their very expensive and scarce ‘Compute’ resources to better steer this emerging ‘Superinteloigence’ AI over the next four years.

Professor Bowman finally explains how all this makes this stuff really hard to build product road maps, despite spending hundreds of millions on them;

“So basically when a lab decides to invest tens or hundreds of millions of dollars in building one of these neural networks, they don’t know at that point what it’s gonna be able to do. They can reasonably guess it’s gonna be able to do more things than the previous one. But they’ve just got to wait and see. We’ve got some ability to predict some facts about these models as they get bigger, but not these really important questions about what they can do.”

“This is just very strange. It means that these companies can’t really have product roadmaps. They can’t really say, “All right, next year we’re gonna be able to do this. Then the year after we’re gonna be able to do that.”

Here’s where it all comes down to when the critical topic of ‘Interpretability’ comes up, which is the core objective asked for by every AI regulator around the world. Chuck Schumer and his team in the US Senate and others, use the word ‘Explainability’. Terms both notwithstanding, it’s just what most mainstream folks would ask of a technology built for their daily use:

“Interpretability is this goal of being able to look inside our systems and say pretty clearly with pretty high confidence what they’re doing, why they’re doing it. Just kind of how they’re set up being able to explain clearly what’s happening inside of a system. I think it’s analogous to biology for organisms or neuroscience for human minds.”

“But there are two different things people might mean when they talk about interpretability.”

“One of them is this goal of just trying to sort of figure out the right way to look at what’s happening inside of something like ChatGPT figuring out how to kind of look at all these numbers and find interesting ways of mapping out what they might mean, so that eventually we could just look at a system and say something about it.”

“The other avenue of research is something like interpretability by design. Trying to build systems where by design, every piece of the system means something that we can understand.”

“But both of these have turned out in practice to be extremely, extremely hard. And I think we’re not making critically fast progress on either of them, unfortunately.”

“Interpretability is hard for the same reason that cognitive science is hard. If we ask questions about the human brain, we very often don’t have good answers. We can’t look at how a person thinks and explain their reasoning by looking at the firings of the neurons.”

“And it’s perhaps even worse for these neural networks because we don’t even have the little bits of intuition that we’ve gotten from humans. We don’t really even know what we’re looking for.”

“Another piece of this is just that the numbers get really big here. There are hundreds of billions of connections in these neural networks. So even if you can find a way that if you stare at a piece of the network for a few hours, we would need every single person on Earth to be staring at this network to really get through all of the work of explaining it.”

His last point on the “numbers' getting really big, is the one that’s hard for even computer programmers to get their heads around. Because our hardware GPUs chips, and AI data centers are getting ever more powerful, it’s extraordinarily easy to lose sight of the billions and trillions of queries done eventually around AI usage vs traditional software applications we’re all used to for over half a century.

For those looking for more detail on how LLM AI models work with a modestly deeper dive into the math of ‘word vectors’, ‘tokens’, and model layer calculations, I’d highly recommend this general primer by Ars Technica. For an even deeper dive into what makes LLM AIs so good at what they do, there’s this piece by tech luminary Stephen Wolfram that’s also worth a read. Suffice it to say that these algorithms assisted by massively parallel GPUs are turning words used by humans into numbers that can then be processed statistically in frequencies that are measured in the billions and trillions.

For almost every query and prompt into ChatGPT or an AI assisted search on Google and other services. Words are translated into massive amount of numbers that computers can then do with them what they do best. Run calculations backwards and forwards to figure out the probability of the next most likely ‘word’ that may be the answer the user seeks.

The Math is just off the charts. And it’s all probabilistic based on massive amounts of statistics. And it all reinforces each other in learning loops forever, getting better with their statistical analysis. Without easily being ‘interpretable’ by humans. Even if all 8 billion of us were going through the code.

And of course all this in a world where AI is going to make every one of us regular folks a programmer, as pithily said by Nvidia founder and CEO Jensen Huang. Remember, he also told us years earlier that AI was going to eat Software in 2017, weeks before Google’s famous ‘Transformers’ paper, that made the T in GPT4 possible. And of course, Nvidia’s precious, and scarce chips are what make the incredible probabilistic Math discussed above even physically possible.

And no, none of this is prone to easy replication, interpretability, or even explanation. Thus the relative inscrutability of AI as it eats Software. And the World. The AI road ahead is going to be hard to see, even with the fog lights and high beams turned on. And hope this piece makes it easier to understand why even narrow AI applications like “Full Self Driving” cars by Tesla and others are so hard, and are still likely a ways away at ‘Level 4 and 5’ capability (we’re at Level 2ish in 2023).

Hope this helped put it all in better basic context. And better understand that while AI augmentation is going to be a net good for society, it’s all going to take a bit longer than expected. Like it generally does with Tech, Thanks for reading. Stay tuned.

I think that any focus on what the "numbers in teh ANN" mean is fruitless. We cannot do it much with human brains. The approach humans use is to mold and interrogate minds with various approaches to teaching and with psychiological tests.

Reinforcement learnng should reduce hallucinations by effectively enforcing the AI not to "bullshit", to use facts and sources, and just say "I don't know" when the facts and sources are absent. Just as with humans getting caught in false narratives when not being corrected, so AIs need the same constant coaching. Without that, AI generated output could be steered in bad directions, just as we have seen with "rogue chatbots".

My sense is we need automated versions of Asimov's robot psychologist, Susan Calvin. In this case, lots of highly trained AIs to kept applying feedback and correcting the AI's responses. IMO, trying to "understand what's going on in the ANN connection architecture and synapse weights is not the way to address teh problems.

Brilliant. Now I need to stop thinking “When I can’t tell what my kids are up to, it’s usually not a good thing.” :)