AI: From 'Big AI' to 'Small AI'

...See-saw shift to Local AI Compute

A couple of days ago I discussed the AI software debate between Open and Closed. As long as the regulators stay open to both (pun intended), I think market dynamics will work out the best blend of the two for users at large.

The other dimension in AI that we can’t lose sight of is what I’d call ‘Big AI’ vs ‘Small AI’. Big AI is where the industry’s attention and dollars are currently riveted: Foundation LLM AI models that are getting ever more powerful, running on ever more powerful GPUs in ever bigger, compute- and power-intensive data centers.

Think AI possibly a thousand times more powerful than today’s OpenAI GPT-4, Google PaLM 2/Bard (and upcoming Gemini), and Anthropic’s Claude 2 in three years or less. That sure beats Moore’s Law by a mile and then some. As discussed earlier, over two hundred billion dollars are being invested worldwide to get ready for these ‘Big Daddy’ Foundation LLM AI models.
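For a rough sense of scale, here is the back-of-the-envelope arithmetic, assuming Moore’s Law is read as the commonly cited doubling roughly every two years (24 months), and taking the ‘thousand times in three years’ figure above at face value:

$$
\underbrace{2^{36/24}}_{\text{Moore's Law over 36 months}} = 2^{1.5} \approx 2.8\times
\qquad \text{vs.} \qquad
1000\times \approx 2^{10} \;\Rightarrow\; \frac{36\ \text{months}}{\log_2 1000} \approx 3.6\ \text{months per doubling}
$$

In other words, a thousandfold gain in three years implies roughly ten doublings, about one every three to four months, versus about one and a half doublings on the Moore’s Law track.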

Think Small Language Models, or SLMs. They could eventually matter even more than their bigger brethren.

Ok, so where does ‘Small AI’ fit? These are far humbler, more prosaic, but also ever more powerful LLM AI models, increasingly run ‘locally’ on the billions of smartphones and other computers already in the hands of over 4 billion users. This is also known as ‘the Edge’, as I’ve referred to it in earlier posts. They’re starting small today in photo and other apps on your phone, and will spread to almost every app and service on billions of local devices, tapping into near-infinite local and cloud data sources.

Think of almost everything in Box 6 of the AI Tech Wave chart below running on local apps on your devices. Many of these run today on ‘Big AI’ Foundation LLM AI infrastructure in the cloud, but a lot of them will soon also run in ‘Small AI’ versions on local devices, for the reasons cited below, including computing and cost efficiencies.

Apple is in pole position here with over two billion iPhones, iPads, Apple Watches, AirPods, and Macs, and, next year, its Vision Pro headset platform, all running on Apple Silicon with lots of local GPU power. Meta is ramping up here too with its AI-enhanced Quest 3 and smart glasses this year, running on its open-sourced Llama 2 LLM AIs. Microsoft is actively working on SLMs called Phi, going from version 1 to version 2 (Phi-2), in an effort separate from its AI partner OpenAI.

OpenAI will also be present here soon, with its multimodal ChatGPT on our smartphones. Both Google and Microsoft are ramping up AI capabilities for our everyday productivity software on local computers and devices. Be they closed or open, these ‘multimodal’ models are showing up fast and furious in applications you and I already use every day.

They’re far humbler, and we barely notice they’re LLM and Generative AI at work. Apple especially has been leading the charge here, as I’ve discussed, highlighting its ‘machine learning’-driven, ‘computational photography’-optimized apps, along with its Messages, Notes, and Apple Pay apps. AI in all but name.

Running these locally has three specific advantages.

Use Local Data: Meaning, leverage the data that already sits on our devices every day. Increasingly this means our emails, documents, browsing history, phone calls, messages, and interactions with every imaginable type of app on our devices. This ‘Extractive Data’ is distinctly different from the data that populates the ‘Big AI’ LLM AI models discussed above. It’s personal user data, often what I’ve called our ‘Data Exhaust’. It supplements the enormous amount of cloud data ‘Big AI’ crawls every day on the internet. I’ve argued that local data over time represents an almost inexhaustible amount of data (Box 4 in the AI Tech Wave chart) to feed the LLM AI models to come.

It’s things emanating from our daily activities, used for something specific and nothing else. This local data can be run through ‘training’ and ‘reinforcement learning loops’ to optimize LLM AI ‘inference’ locally on the device. It’s highly personal to the user and based on their data alone, so as to provide highly specific LLM AI results: entirely new sets of highly personalized perspectives and reasoning options for users, on the data they generate locally every day. The ever more powerful LOCAL GPUs and neural chips in Apple Silicon, along with equivalents like Google’s Tensor chips and the ‘neural processing units’ Intel is building for Microsoft Windows PCs, now provide the parallel computing horsepower to run these LLM AI models.

Keep the ‘personal’ Private: This is the other main advantage of ‘Small AI’ run locally. It’s private. The new applications from Apple and others are increasingly making it clear that whenever possible, the ‘inference’ loops especially are being run locally. They never go out to the Cloud. Often the companies themselves never have access to that data, so they can’t be compelled to hand it over to authorities on demand. This is increasingly important if both consumer and business AI applications are to provide reliable and safe utility to us all.

Computing and latency efficiencies: A fancy way of saying that if your data and queries don’t have to go back and forth to a server, users see minimal delays for their results, and there are compute cost efficiencies as well. This is increasingly important going forward given the exponential nature of the computing loads to come. Apple highlighted Siri being able to process queries locally on its latest devices and software as a case in point. These types of applications are going to be far more mainstream beyond watches and wearables, and will definitely also come to our smartphones and computers, with minimal latency. Running locally can also provide compute cost and power efficiencies vs sending everything off for brute-force ‘Big AI’ computing in very expensive cloud data centers.

These three advantages of ‘Small AI’ are just the beginning. And every major big tech company is already running hard and fast to deliver AI locally. As mentioned earlier, Amazon, Apple, Google and others are racing to add LLM AI capabilities to their voice assistant software and hardware devices. That means more user data, from hundreds of millions of user queries, coming soon from local devices.

We saw it last week with Microsoft’s CEO Satya Nadella talking about Copilot already running on Windows 11, leveraging partner OpenAI’s GPT-4 and ChatGPT, as well as Microsoft’s in-house Bing Search for updated real-time results. Apple will likely follow with similar ‘machine learning’ moves on its desktop, iOS and other operating systems, all running on GPU-powered Apple Silicon.

We saw it again with Google’s updated Pixel 8 smartphones and watches today, where the bulk of the presentation focused on AI optimized apps and services. Google in particular highlighted ‘Assistant with Bard’ adding new generative AI capabilities to provide more personalized help locally. Another highlight was ‘Best Take’, an AI enhanced way for the Pixel 8 camera to take a burst of photos and pick out the best shots of everyone in the group photo. It’s impressive stuff when it rolls out.

Reviewers are already noticing these small but increasingly ‘delightful’ local LLM AI features. Case in point is this review of macOS Sonoma, the latest free Mac upgrade, by The Verge’s Monica Chin:

“Your Mac can fill out forms for you. I’m sorry, but this should’ve been the headline feature of macOS Sonoma. This should’ve been the first thing shown onstage at WWDC. This should’ve been the only thing shown onstage at WWDC. You’re telling me that Apple’s neural network can fill out my insurance claim forms? My NDAs? You’re telling me the days of manually filling out these thousand-page medical intake packets are over? I’m giddy just thinking about it.”

This is humble generative AI working locally. No muss, no fuss. Just there to go to work, doing the kind of stuff software has never been able to do before.

Collecting these local usage queries driven off local data, and adding them daily to the large pool of queries and data, is like collecting raindrops to build mighty lakes and reservoirs. The drops add up. And in this case, these highly personal data-driven queries also make the results of what we’re calling ‘Small AI’ more reliable, accurate and safe for users, especially since users are likely to pay more attention to curating these results given their relevance to their daily activities in apps and services.

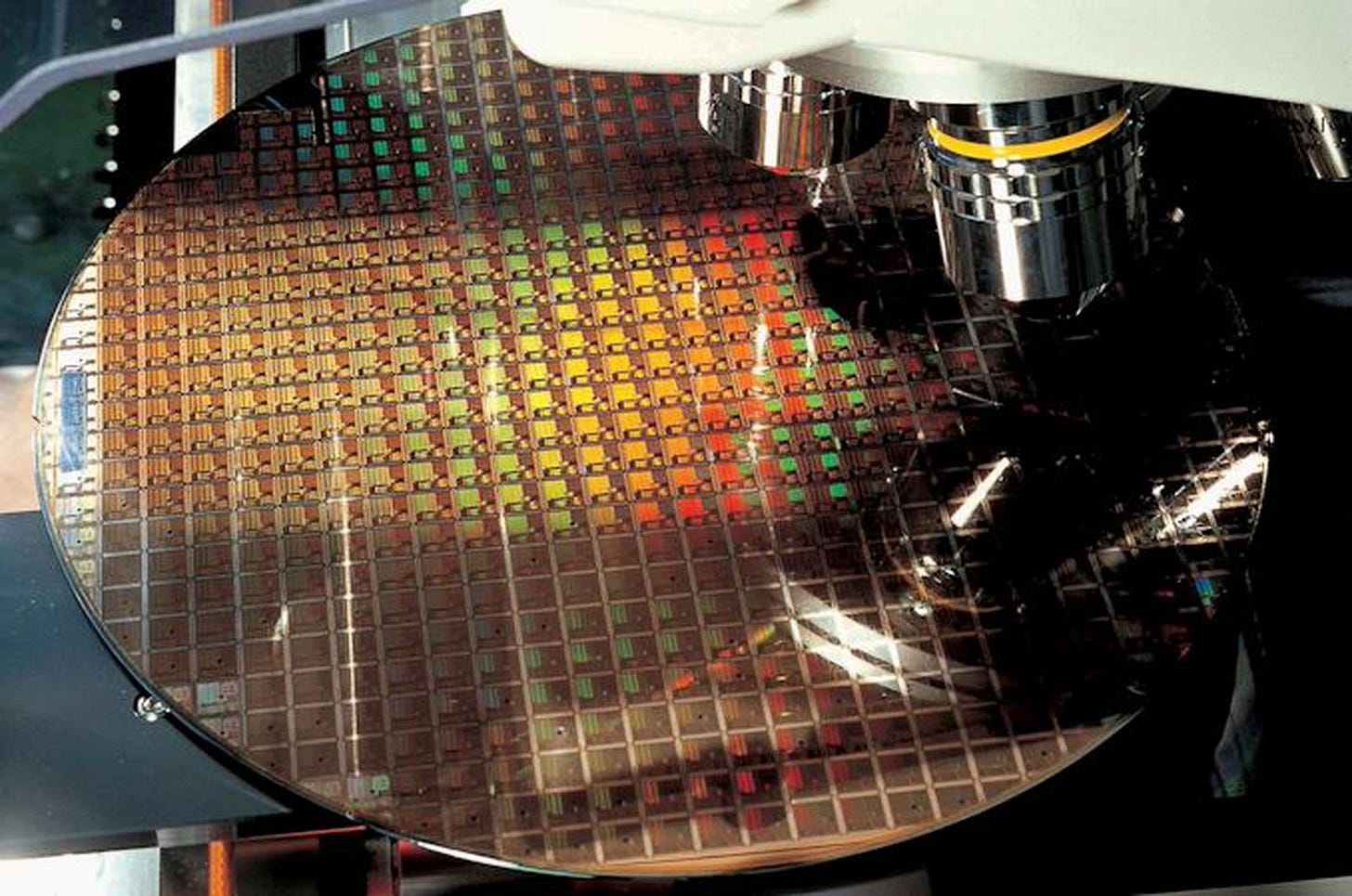

On the infrastructure side, we will soon be focused on a chip race in local GPUs and neural processors, just as vigorously as the private and public markets are focused on cloud GPUs from Nvidia and other chip vendors. Competitors like AMD, Intel and others are already focused on ‘inference’-driven local GPU chips, as are foundries like Taiwan Semiconductor (TSMC), which is the majority source of both cloud and next-generation device chips.

For example, industry specialists note that Apple bought out all of TSMC’s capacity on next-gen 3nm (nanometer) chips for its iPhone 15 Pro smartphones this year. And competitors are scrambling to get up to speed on that technology, especially in China, which continues to face US restrictions.

Google today highlighted its next-generation Tensor G3 processor in the latest versions of its Pixel 8 smartphones:

“This past year we’ve seen incredible AI breakthroughs and innovations — but a lot of those are built on the kind of compute power only available in a data center. To bring the transformative power of AI to your everyday life, we need to make sure you can access it from the device you use every day. That's why we're so excited that the latest Pixel phone features our latest custom silicon chip: Tensor G3.”

“Our third-generation Google Tensor G3 chip continues to push the boundaries of on-device machine learning, bringing the latest in Google AI research directly to our newest phones: Pixel 8 and Pixel 8 Pro.”

All the AI software in the world goes nowhere without efficient, parallel processing chip infrastructure in both the cloud and devices. Again, as explained before, every AI calculation is hundreds to thousands of times more calculation-intensive than traditional software computing. And chip vendors are anticipating, and counting on, the next leg of the AI infrastructure gold rush, at least in units, being in local AI GPU, memory, networking, and related chips, vs the current rush for cloud GPU chips and related infrastructure.

It’s early days in the AI Tech Wave, as discussed before, and AI software is blending rapidly with traditional software. A big trend ahead is the race for ‘Small AI’ infrastructure, apps and services, all layered on top of the ongoing race for ‘Big AI’ infrastructure, apps and services.

Many see-saws and roller-coasters in the financial and secular tech waves ahead. It’s a multi-layered race, on an accelerating course, both Big and Small. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)