AI: Nvidia 'Rubin' adds to AI GPU cadence. RTZ #377

...'accelerated computing' hits annual rhythm on AI GPU infrastructure built on Hopper & Blackwell

Nvidia’s founder/CEO Jensen Huang made it clear that Nvidia’s aggressive ‘accelerated computing’ roadmap for 2024 and beyond is not slowing down, just a couple of months after its GTC Developer conference.

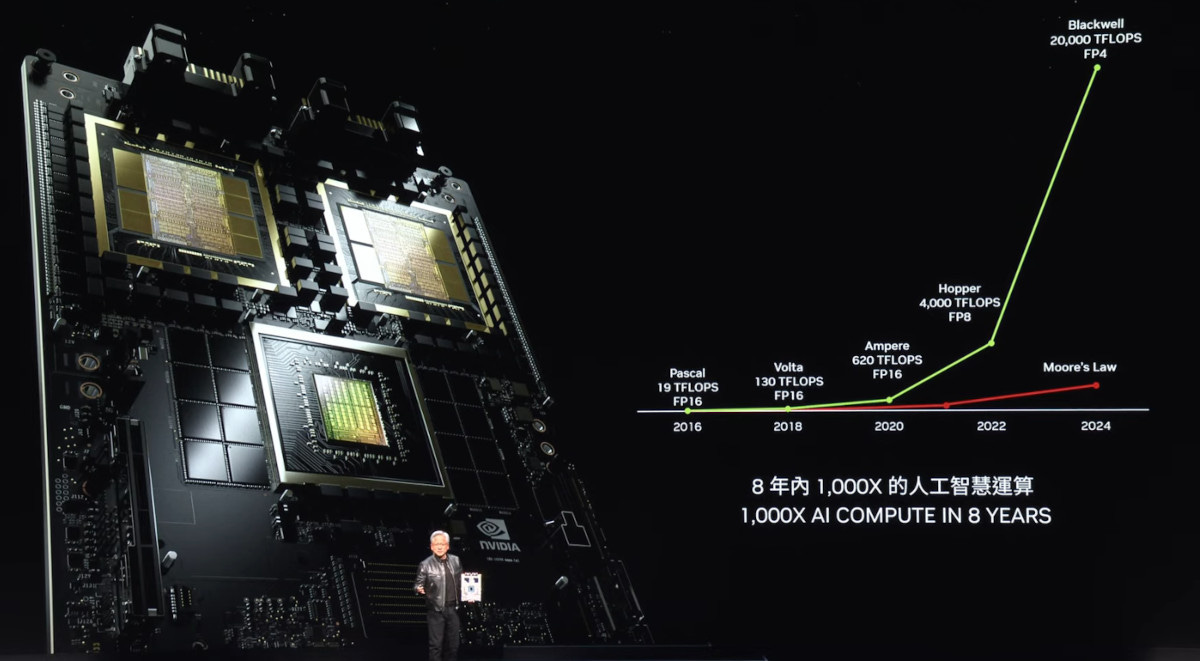

In a two-hour keynote address at Taiwan’s Computex PC show, he outlined an AI GPU-driven infrastructure roadmap that showcased an annual cadence of upgrades for the company’s flagship AI GPU chips and systems. Its industry-leading Hopper and Blackwell lines of AI GPU chips, already in white-hot global demand, are now being joined by ‘Rubin’ in 2026.

A 15-minute summary of the keynote by CNET is available here.

‘Accelerate Everything’, he underlined:

“Emphasizing cost reduction and sustainability, Huang detailed new semiconductors, software and systems to power data centers, factories, consumer devices, robots and more, driving a new industrial revolution.”

“‘Generative AI is reshaping industries and opening new opportunities for innovation and growth,’ NVIDIA founder and CEO Jensen Huang said in an address ahead of this week’s COMPUTEX technology conference in Taipei.”

“Today, we’re at the cusp of a major shift in computing,” Huang told the audience, clad in his trademark black leather jacket. “The intersection of AI and accelerated computing is set to redefine the future.”

“Huang spoke ahead of one of the world’s premier technology conferences to an audience of more than 6,500 industry leaders, press, entrepreneurs, gamers, creators and AI enthusiasts gathered at the glass-domed National Taiwan University Sports Center set in the verdant heart of Taipei.”

Moving beyond this year’s Hopper and the upcoming Blackwell line of chips, he outlined plans for Rubin in 2026, connecting the dots on an exponential curve of improvements in AI training and inference at scale.

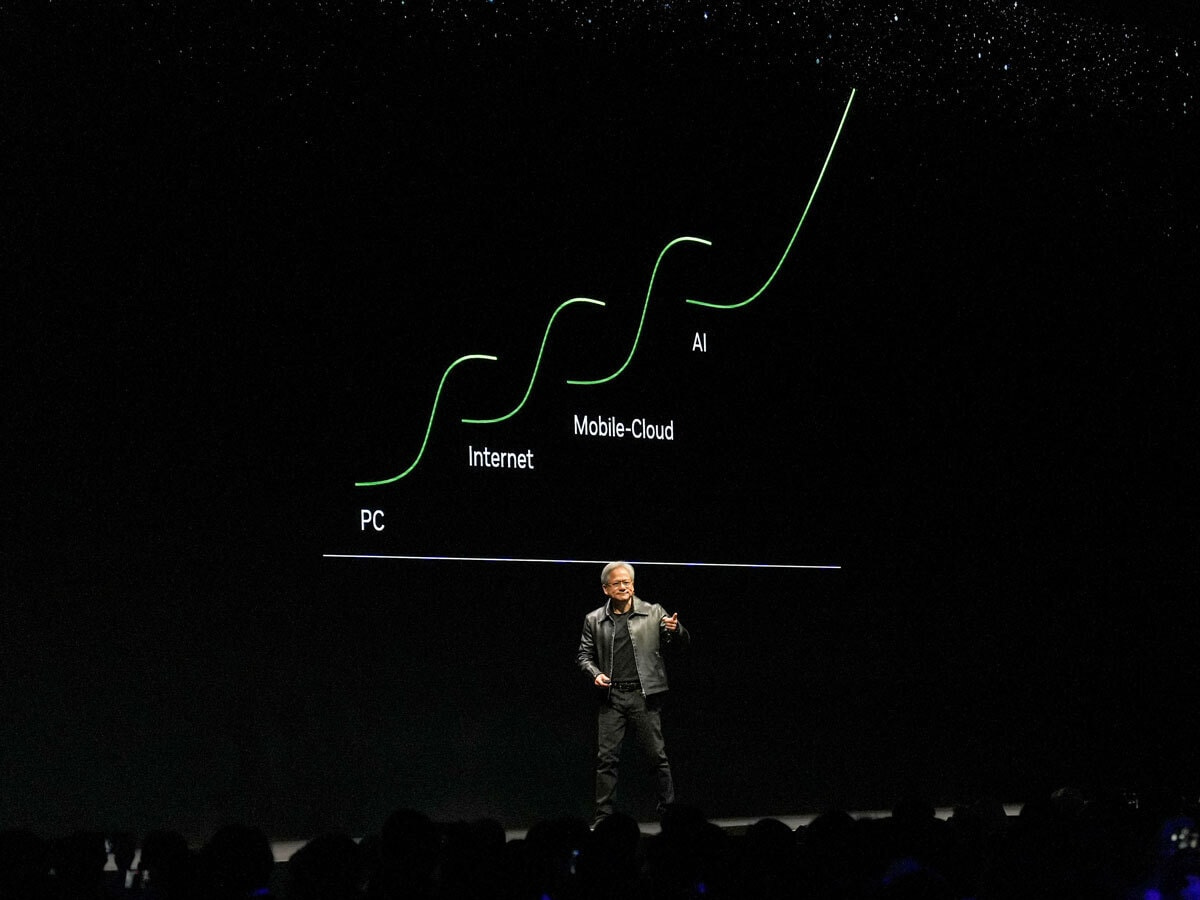

As I’ve outlined before, this AI Tech Wave is being accelerated by software innovations from OpenAI, built on the AI Scaling hardware imperatives of Nvidia: Boxes 1 through 3 below, fueled of course by the never-ending ‘Extractive’ Data in Box 4:

The Computex keynote emphasized the increased cadence of AI hardware/software innovation by Nvidia. As Bloomberg outlined in “Nvidia Unveils Next-Generation Rubin AI Platform for 2026”:

“CEO Jensen Huang reveals plans for annual upgrade cycle. Company details plans for Blackwell Ultra and subsequent chips called Rubin.”

“Nvidia Corp. Chief Executive Officer Jensen Huang said the company plans to upgrade its AI accelerators every year, announcing a Blackwell Ultra chip for 2025 and a next-generation platform in development called Rubin for 2026.”

“The company — now best known for its artificial intelligence data center systems — also introduced new tools and software models on the eve of the Computex trade show in Taiwan. Nvidia sees the rise of generative AI as a new industrial revolution and expects to play a major role as the technology shifts to personal computers, the CEO said in a keynote address at National Taiwan University.”

“‘We are seeing computation inflation,’ Huang said on Sunday. As the amount of data that needs to be processed grows exponentially, traditional computing methods cannot keep up and it’s only through Nvidia’s style of accelerated computing that we can cut back the costs, Huang said. He touted 98% cost savings and 97% less energy required with Nvidia’s technology, saying that constituted ‘CEO math, which is not accurate, but it is correct.’”
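To make the ‘CEO math’ a bit more concrete: a percentage saving converts into an ‘N times cheaper’ multiplier as 1 / (1 - p/100). The short Python sketch below simply applies that formula to the 98% and 97% figures Huang quoted; the function name is my own, and this is illustrative arithmetic only, saying nothing about how Nvidia arrived at those numbers.

```python
def reduction_factor(percent_saved: float) -> float:
    """Convert a percentage reduction (e.g. 98 for '98% savings') into an
    'N times lower' multiplier: what remains is 1 - p/100, so the factor is
    1 / remaining. Hypothetical helper, for illustration only."""
    if not 0 <= percent_saved < 100:
        raise ValueError("percent_saved must be in [0, 100)")
    remaining = 1 - percent_saved / 100
    return 1 / remaining


# Figures as quoted from the keynote; this is arithmetic, not a benchmark.
cost_factor = reduction_factor(98)    # "98% cost savings"
energy_factor = reduction_factor(97)  # "97% less energy"

print(f"98% cost savings -> ~{cost_factor:.0f}x lower cost")
print(f"97% less energy  -> ~{energy_factor:.0f}x less energy")
```

That works out to roughly 50x on cost and 33x on energy. At these levels each extra percentage point matters a lot: 99% savings would already be a 100x multiplier.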

The announcements of course augur well for chipmaking companies up and down the supply chain, like TSMC on the ‘fab’ front, Korea’s SK Hynix on the High-Bandwidth Memory (HBM) front, and others.

“Shares of Taiwan Semiconductor Manufacturing Co. and other suppliers rose after the announcement. TSMC’s stock climbed as much as 3.9%, while Wistron Corp. gained 4%.”

SK Hynix is largely sold out of HBM through 2025.

“Huang said the upcoming Rubin AI platform will use HBM4, the next iteration of the essential high-bandwidth memory that’s grown into a bottleneck for AI accelerator production, with leader SK Hynix Inc. largely sold out through 2025. He otherwise did not offer detailed specifications for the upcoming products, which will follow Blackwell.”

Analysts got the message:

“‘I think teasing out Rubin and Rubin Ultra was extremely clever and is indicative of its commitment to a year-over-year refresh cycle,’ said Dan Newman, CEO and chief analyst at Futurum Group. ‘What I feel he hammered home most clearly is the cadence of innovation, and the company’s relentless pursuit of maximizing the limit of technology including software, process, packaging and partnerships to protect and expand its moat and market position.’”

All of this has of course been well noticed in the public markets. With Nvidia’s stock more than doubling this year alone, the company continues to garner the lion’s share of the tens of billions of dollars being spent to build AI data centers and related infrastructure around the world.

I’m particularly focused on Nvidia’s Inference Microservices (NIM) initiative, outlined at GTC and accelerated at Computex. But there is a lot to digest here in the technical weeds, with key buzzwords like ‘digital twins’, DGX, Omniverse, Isaac, InfiniBand, Spectrum-X and much more, particularly on what it all means for Nvidia’s DGX strategy with AI cloud data centers, which are soaking up tens of billions in AI infrastructure investment. I’ll have more on all this in future pieces.

More broadly, on Nvidia as a public stock, the performance has also been on its own cadence. As investment manager Josh Brown noted pithily a few days ago:

“Over the three days since reporting earnings on Wednesday, Nvidia added over half a trillion dollars in market cap as active managers chased it and the retail investor got excited about a 10-for-1 stock split happening in June. It appears to be on its way to a $3 trillion market cap. It’s one of the fastest growing companies in the world right now (by revenue and earnings) and it’s also one of most profitable businesses of all time (50% margins - not normal for a component supplier). So of course the stock is up a lot.”

The cumulative math is imposing and intimidating to say the least:

“Nvidia is worth more than the combined value of Amazon, Walmart and Netflix.”

“Nvidia is worth more than JPMorgan, Berkshire Hathaway and Meta stacked on top of each other.”

“Nvidia is worth the same as McDonalds, Pepsi, Salesforce, AMD, Bank of America, Johnson & Johnson, Costco and Procter & Gamble COMBINED.”

I’ve often said in these pages that in Tech waves and cycles over the decades, it’s always important to separate the financial cycles from the secular ones.

The company’s cadence on secular AI technologies continues at a market- and industry-leading pace. The financial cadence will accordingly be both dramatic and volatile.

At times like this, and they’re relatively rare, it’s important to keep an eye on the core technology improvements. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)