AI: Nvidia Focused on Inference

...No grass growing on Inference vs Training Chip Race

Nvidia of course continues to be the toast of the town at this stage of the AI Tech Wave. Especially from a media perspective. And Nvidia is not resting on its laurels. Or letting the grass grow at its feet. The latest Wired magazine profile on Nvidia founder/CEO Jensen Huang titled “Nvidia Hardware is Eating the World” highlights this energy (some links below mine):

“Nvidia CEO is so invested in where AI is headed that, after nearly 90 minutes of spirited conversation, I came away convinced the future will be a neural net nirvana. I could see it all: a robot renaissance, medical godsends, self-driving cars, chatbots that remember. The buildings on the company’s Santa Clara campus weren’t helping. Wherever my eyes landed I saw triangles within triangles, the shape that helped make Nvidia its first fortunes.

No wonder I got sucked into a fractal vortex. I had been Jensen-pilled.”

“Huang is the man of the hour. The year. Maybe even the decade. Tech companies literally can’t get enough of Nvidia’s supercomputing GPUs. This is not the Nvidia of old, the supplier of Gen X video game graphics cards that made images come to life by efficiently rendering zillions of triangles. This is the Nvidia whose hardware has ushered in a world where we talk to computers, they talk back to us, and eventually, depending on which technologist you talk to, they overtake us.”

The whole piece is worth reading. For those who would like a TL;DR summary, Ben’s Bites obliges:

“Nvidia hardware is eating the world. Wired interviewed Jensen Huang, Nvidia’s CEO and here are some bites that stood out to me.

Nvidia is building a new type of data centre called AI factory. Every company—biotech, self-driving, manufacturing, etc will need an AI factory.

Jensen is looking forward to foundational robotics and state space models. According to him, foundational robotics could have a breakthrough next year.

The crunch for Nvidia GPUs is here to stay. It won’t be able to catch up on supply this year. Probably not next year too.

A new generation of GPUs called Blackwell is coming out, and the performance of Blackwell is off the charts.

Nvidia’s business is now roughly 70% inference and 30% training, meaning AI is getting into users’ hands.

Nvidia's also announced new AI chips in a laptop-friendly package. The new RTX 500 and 1000 Ada Generation laptop GPUs are perfect if you want to run crazy generative AI models.”

The reason I’m focusing on these pieces is the point in the summary above about Nvidia’s business being roughly 70% inference and 30% training. This of course is the competitive dynamic that the public markets and investors are focused on, in terms of a possible wedge by competitors into Nvidia’s dominance on the training side, where its chips power most of the Foundation LLM AI models by OpenAI, Microsoft, Meta, Apple and others. An exception of course is Google with its TPU chips, but training is the high dollar item in LLM AI currently.

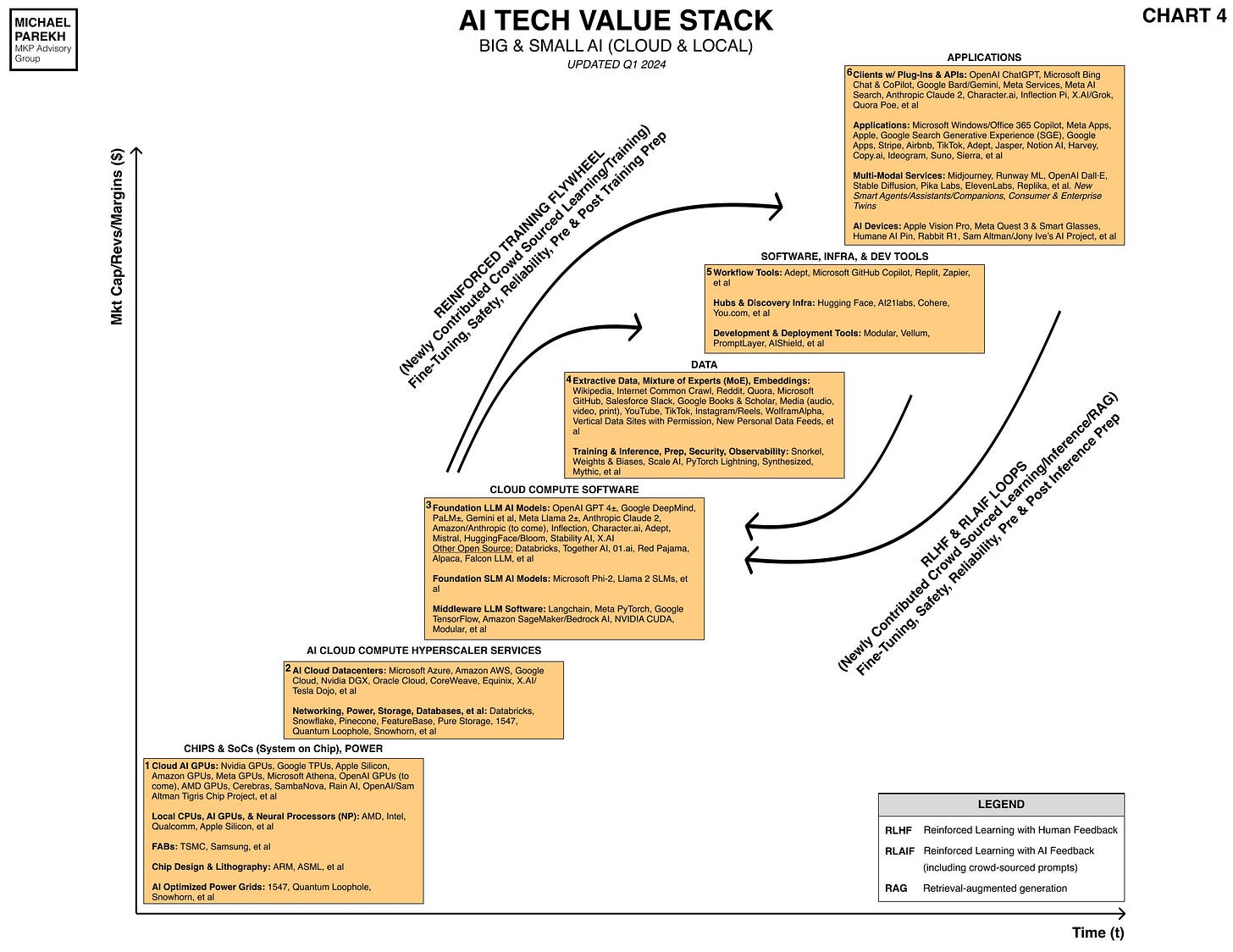

Inference of course refers to the ‘Reinforcement Learning Loops’ I’ve discussed at length before in my AI Tech Wave chart below.

These loops are driven by end user AI queries both direct and via APIs, which make the underlying Foundation models ‘smarter’ in providing more relevant replies that are less ‘hallucinatory’.

This is the area where other semiconductor companies like Intel, AMD and others see an opportunity to break into the AI chip game, and Nvidia is not ignoring that reality. Thus the visible focus on its inference vs training mix above. There will be a lot more on this from me in terms of competitive dynamics of ‘Big and Small AI’, but for now, note that Nvidia is not letting the Inference grass grow at its feet.

The Inference chips race is on. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)