AI: Markets 'Priced for Perfection'

...will need both 'Big & Small AI' together to drive secular AI tech at scale

Public markets driven by the big tech ‘Magnificent 7’, along with private AI markets and their unicorns, may increasingly be priced for perfection. The financial markets have been buoyed to date by enthusiasm over the ‘AI Tech Wave’ in the fifteen-plus months since OpenAI’s ‘ChatGPT moment’ in November 2022.

As I’ve articulated for some time now, we’re likely going to need both ‘Big AI’ AND what I’ve been calling ‘Small AI’ to provide the next secular wave of innovation and user traction this year and next. Let me explain.

First, the signs of markets priced for perfection are abundant, especially in the public markets. As the WSJ notes in ‘Big Tech Stocks Find Little Room for Error after Monster Run’:

“Investors have a simple request for tech titans this earnings season: Nothing less than perfection.”

“The "Magnificent Seven" group of tech companies has been the stock market’s biggest engine for growth and profits over the past year. But after five of the companies turned in strong quarterly results last week, investors are being picky about which ones they reward.”

“Magnificent Seven shares have surged over the past year, propelled by bets that they would be the biggest beneficiaries of a coming boom in artificial intelligence technology. They have also helped send the overall stock market to repeated records: The S&P 500 is up 3.6% to start 2024.”

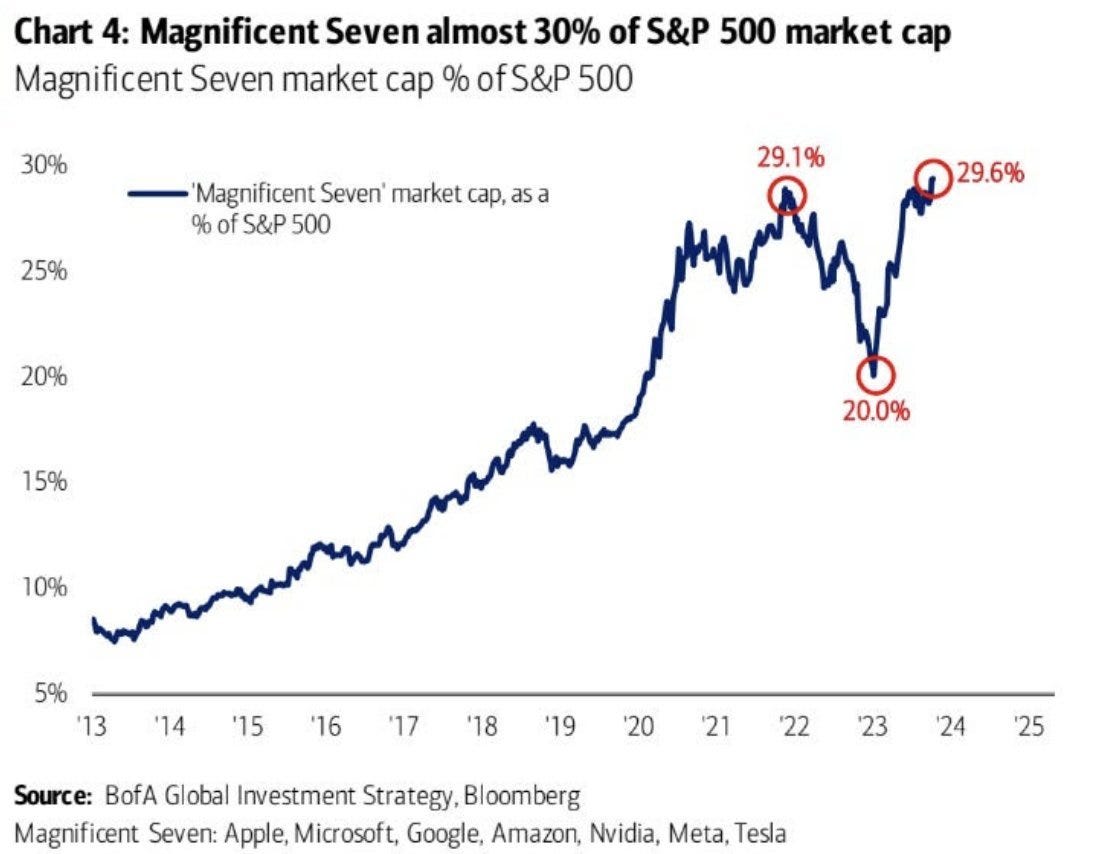

As I’ve observed before, the big tech stocks already account for nearly 30% of the S&P 500, a historically high share.

This trend of ‘priced for perfection’ is of course also evident on the private market side for AI companies large and small. As The Information notes today in “Some OpenAI Investors Sit Out Share Sale, Reflecting AI Divide”:

“After a yearlong frenzy to invest in AI startups, some VC firms are largely avoiding the buzzy sector, chilled by the high valuations of OpenAI and worried about the threat of Google and Amazon.”

This is not to say the AI tailwinds have stopped blowing, only that they may be moderating a bit.

Part of the issue here, beyond general valuations in the public and private markets, is that investors are looking for which current and new AI products and services will gain traction at scale going forward. And some of the signs point to innovations in ‘Small AI’ converging with the intense activity around ‘Big AI’. As Axios notes today in “The Push to make Big AI Small”:

“An effort to develop smaller, cheaper AI models could help put the power of machine learning in the hands of more people, products and companies.”

“Why it matters: Large language models get most of the AI attention, but even those that are open source aren't practical for many AI researchers who want to iterate on them to create their own models for new tools and products.”

“Some LLMs use more than 100 billion parameters to generate an output for a prompt and require significant and expensive computing power to train and run.”

“The intrigue: For the AI neural networks that fueled the latest AI wave, bigger has generally meant better — larger models trained by using more data seem to perform better.”

“But "[i]t's often the case that you can create a model that is a lot smaller that can do one thing really well," says Graham Neubig, a computer science professor at Carnegie Mellon University. "It doesn't need to do everything."”

“Using [an] LLM for some tasks is like "using a supercomputer to play Frogger," writes Matt Casey at Snorkel.”

“How it works: Researchers are trying to shrink models to have fewer parameters but perform well on specialized tasks.”

“One approach is "knowledge distillation," which involves using a larger "teacher" model to train a smaller "student" model. Rather than learn from the dataset used to train the teacher, the student mimics the teacher.”

“In one experiment, Neubig and his collaborators created a model 700 times smaller than a GPT model and found it outperformed it on three natural language processing tasks, he says.”

“Microsoft researchers recently reported being able to distill a GPT model down to a smaller one with just over 1 billion parameters. It can perform some tasks on par with larger models, and the researchers are continuing to hone them.”
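The Axios description of knowledge distillation above can be illustrated with a toy sketch. Everything in it is a hypothetical stand-in: `teacher_logits` plays the role of a large, fixed model, the student is a 9-parameter linear classifier, and the temperature-softened KL objective is the common textbook formulation of distillation, not anything specific to Neubig's or Microsoft's work. As in the quote, the student never sees ground-truth labels; it is trained only to mimic the teacher's output distribution on unlabeled inputs.

```python
import math
import random
from typing import List

Vector = List[float]


def softmax(logits: Vector, temperature: float = 1.0) -> Vector:
    """Temperature-scaled softmax; a higher temperature gives softer probabilities."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Vector, q: Vector) -> float:
    """KL(p || q): how far the student's distribution q is from the teacher's p."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def teacher_logits(x: Vector) -> Vector:
    # Hypothetical stand-in for a large trained "teacher": a fixed nonlinear
    # scorer over 3 classes. A real teacher would be a big neural network.
    return [2.0 * x[0] - x[1], math.sin(3.0 * x[0]) + x[1], 1.5 * x[0] * x[1]]


def student_logits(weights: List[Vector], bias: Vector, x: Vector) -> Vector:
    # Tiny "student": a linear classifier, 3x2 weights + 3 biases (9 parameters).
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def mean_kl(weights: List[Vector], bias: Vector, inputs: List[Vector],
            temperature: float = 2.0) -> float:
    """Average KL between teacher and student soft outputs over the inputs."""
    total = 0.0
    for x in inputs:
        p = softmax(teacher_logits(x), temperature)
        q = softmax(student_logits(weights, bias, x), temperature)
        total += kl_divergence(p, q)
    return total / len(inputs)


def distill(inputs: List[Vector], temperature: float = 2.0,
            lr: float = 0.5, epochs: int = 200):
    """Full-batch gradient descent pushing the student toward the teacher's soft targets."""
    weights = [[0.0, 0.0] for _ in range(3)]
    bias = [0.0, 0.0, 0.0]
    # Soft targets come from the teacher once; no dataset labels are used.
    targets = [softmax(teacher_logits(x), temperature) for x in inputs]
    n = len(inputs)
    for _ in range(epochs):
        grad_w = [[0.0, 0.0] for _ in range(3)]
        grad_b = [0.0, 0.0, 0.0]
        for x, p in zip(inputs, targets):
            q = softmax(student_logits(weights, bias, x), temperature)
            for k in range(3):
                # d(cross-entropy with soft targets)/d(student logit k)
                g = (q[k] - p[k]) / temperature
                grad_b[k] += g
                for j in range(2):
                    grad_w[k][j] += g * x[j]
        for k in range(3):
            bias[k] -= lr * grad_b[k] / n
            for j in range(2):
                weights[k][j] -= lr * grad_w[k][j] / n
    return weights, bias


random.seed(0)
unlabeled = [[random.uniform(-1.0, 1.0), random.uniform(-1.0, 1.0)] for _ in range(200)]

zero_weights, zero_bias = [[0.0, 0.0] for _ in range(3)], [0.0, 0.0, 0.0]
before = mean_kl(zero_weights, zero_bias, unlabeled)
weights, bias = distill(unlabeled)
after = mean_kl(weights, bias, unlabeled)
print(f"mean KL to teacher: before={before:.4f}, after={after:.4f}")
```

Because the linear student is far simpler than the nonlinear teacher, it cannot match it exactly: the mean KL divergence should fall from its starting value but stay above zero, a small-scale version of the trade-off a compact model makes.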

Microsoft, from CEO Satya Nadella on down, has been proactively highlighting the opportunity for ‘Small AI’ models, in particular through its ongoing work on the small language model (SLM) Phi-2. As The Decoder just noted in “Microsoft's mini LLM Phi-2 is now open source and allegedly better than Google Gemini Nano”:

“Phi-2 is Microsoft's smallest language model. New benchmarks from the company show it beating Google's Gemini Nano.”

“Microsoft has released more details on Phi-2, including detailed benchmarks comparing the 2.7 billion parameter model with Llama-2, Mistral 7B and Google's Gemini Nano.”
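To put the parameter counts quoted above in perspective, a back-of-the-envelope calculation of the memory needed just to hold a model's weights helps. This sketch assumes 2 bytes per parameter for 16-bit weights and 0.5 bytes for 4-bit quantized weights, and it ignores activations, caches and training state, so real requirements are higher. The model labels are illustrative; "7B-class" is rounded.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory (decimal GB) needed to store a model's weights alone."""
    return num_params * bytes_per_param / 1e9


models = {
    "Phi-2 (2.7B)": 2.7e9,
    "7B-class model (e.g., Mistral 7B)": 7e9,
    "100B-parameter LLM": 100e9,
}

for name, params in models.items():
    fp16 = weight_memory_gb(params, 2.0)  # assumed 16-bit weights
    int4 = weight_memory_gb(params, 0.5)  # assumed 4-bit quantized weights
    print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

On these assumptions, Phi-2's weights take roughly 5.4 GB at 16-bit precision, within reach of a single consumer GPU or a recent laptop, while a 100-billion-parameter model needs about 200 GB, which means spreading it across several data-center GPUs.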

Yesterday I discussed the importance of Google’s Gemini AI in all three of its sizes: Nano, Pro and Ultra.

We’ll likely hear more on their strategies for all tomorrow.

So Microsoft, Google and Apple are fundamentally focused on ‘Big and Small AI’ concurrently, as are other companies large and small, including Meta.

In the meantime, the AI industry is innovating actively, at both the academic research and commercial levels, on ever-newer ways to make AI exponentially more capable and reliable, whether the models are big or small.

The latest innovation on this front may be the research effort around ‘Mergekit’, a toolkit for a technique known as model merging. Rather than training a new model from scratch, model merging fuses the weights of multiple LLMs into a single model, potentially yielding more capable models at a fraction of the heavy training and GPU costs.

It’s just one of the many innovations the industry is seeing at a breakneck pace, measured in weeks and months rather than years and decades.
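Model merging, as described above, can be sketched in its simplest form: a weighted average of corresponding parameters from models that share one architecture (typically variants fine-tuned from the same base). This is a minimal illustration assuming parameters are stored as flat lists of floats keyed by name; `linear_merge` and `StateDict` are hypothetical names, and this is not Mergekit's code or API. Mergekit also offers more elaborate methods, such as SLERP and TIES, that this sketch does not attempt.

```python
from typing import Dict, List

# Hypothetical representation: parameter name -> flattened list of weights.
StateDict = Dict[str, List[float]]


def linear_merge(models: List[StateDict], weights: List[float]) -> StateDict:
    """Merge models by a weighted average of each parameter (a "linear" merge)."""
    if not models or len(models) != len(weights):
        raise ValueError("need at least one model and exactly one weight per model")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    coeffs = [w / total for w in weights]  # normalize so coefficients sum to 1

    names = set(models[0])
    for model in models[1:]:
        if set(model) != names:
            raise ValueError("all models must have the same parameter names")

    merged: StateDict = {}
    for name in models[0]:
        size = len(models[0][name])
        if any(len(model[name]) != size for model in models):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        # Each merged weight is the coefficient-weighted sum across models.
        merged[name] = [
            sum(c * model[name][i] for c, model in zip(coeffs, models))
            for i in range(size)
        ]
    return merged


# Usage: two toy "fine-tuned" variants of the same tiny architecture.
model_a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
model_b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}

print(linear_merge([model_a, model_b], [0.5, 0.5]))
# → {'layer.weight': [2.0, 3.0], 'layer.bias': [0.5]}
```

No gradient step is involved, which is where the cost savings come from. Simple averaging like this tends to work best when the source models are closely related, one reason more sophisticated merge methods exist.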

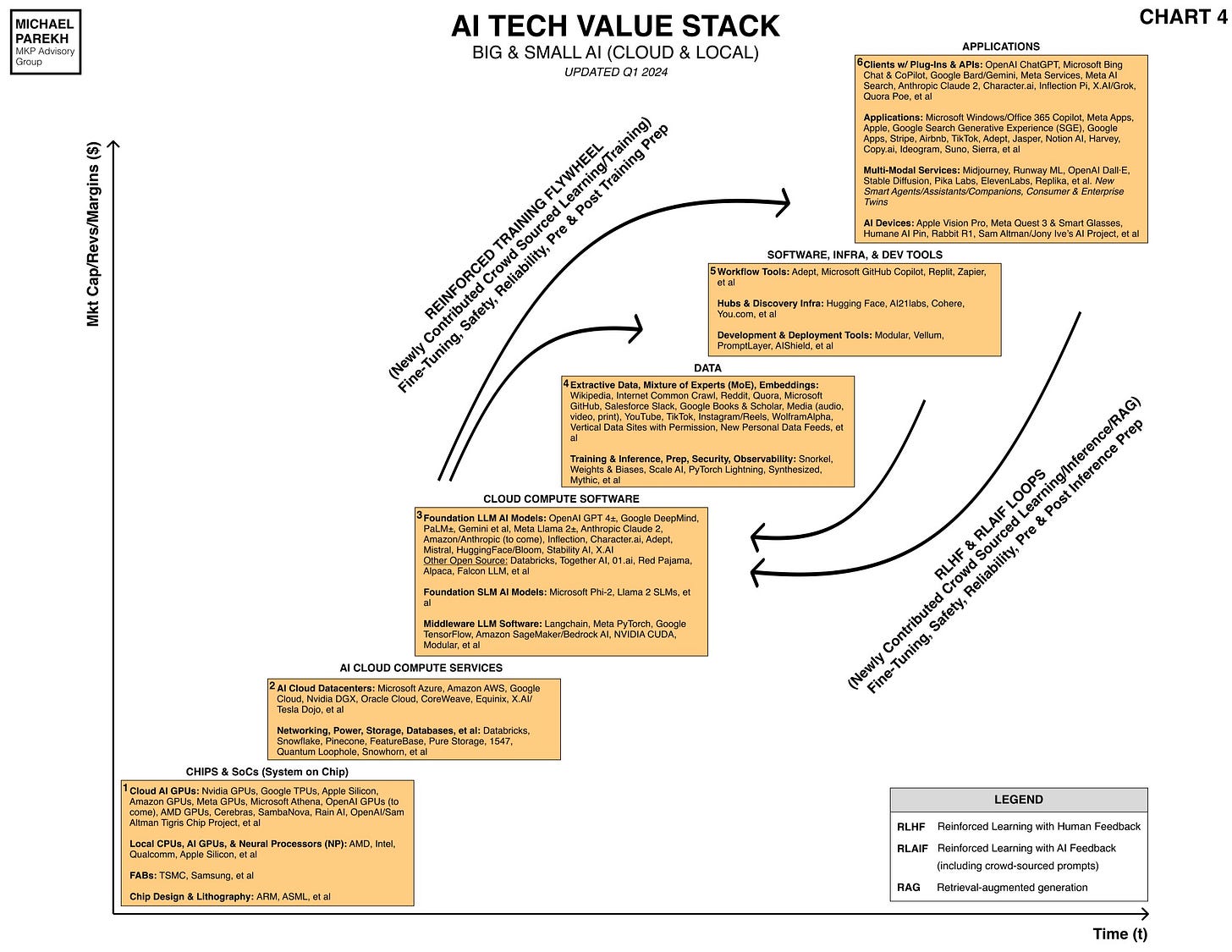

And that secular AI technology innovation across the AI Tech Wave stack above is likely what continues to bolster the case for ongoing financial market enthusiasm for AI innovations, both add-on and native.

There’s a lot more to develop in these early days, and it’ll all likely take longer than we’d like. But the general secular tech trends continue to be our friend for now, with both Big and Small AI. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)