AI: Nvidia's GTC 2024 & AI GPU Roadmap. RTZ #300

...also, the 300th daily post here on AI: Reset to Zero

Today’s topic is Nvidia and its annual GPU Technology Conference (GTC), and how the company continues to reign in AI GPUs and related infrastructure.

But first, I wanted to take a moment to mark the 300th daily post here on ‘AI: Reset to Zero’. It’s been a long road in these early days of the AI Tech Wave, complete with frenetic twists and turns and a never-ending race to the beginning of the AI beginning.

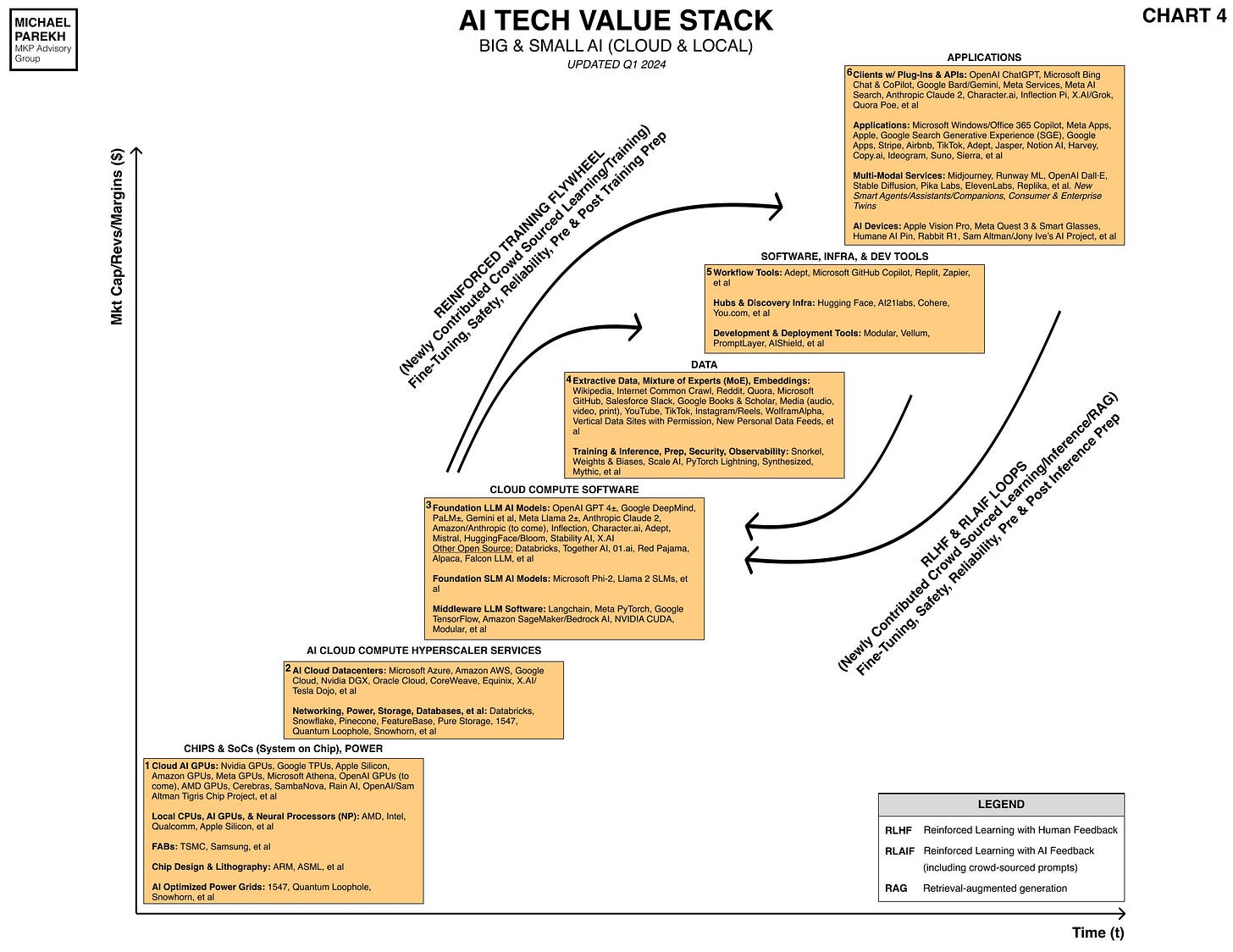

And of course there have been dramas and stories likely to inspire many books, TV shows and movies down the road. But most importantly, as I explained in the introductory pages here, this is an attempt to make sense of what’s to come with AI based on what’s come before, with a laser-focused eye on the amazing secular technologies driving every one of the boxes of the AI Tech Stack this time around (see chart below).

I thank you for coming along on this long journey. I’ll continue to do my best with daily takes on all things AI, with a long-term eye on both the financial and secular cycles in this AI Tech Wave. The writing here will continue to lean towards optimism in the long run: that AI, like most technologies before it, will find a way to do good for society at large. This is despite the inevitable bad that will likely be done by human, corporate, and State actors, intentionally or not, and despite the unique set of fears around AI this time relative to previous technology waves. Thanks again for being a part of this daily exercise.

Now to today’s topic. It’s an important update to Nvidia’s AI product and services roadmap, from its annual GPU Technology Conference (GTC) for over 11,000 customers and partners in San Jose, CA.

Nvidia is led by its uniquely capable founder/CEO Jensen Huang, who remains one of the few CEOs of a multi-trillion dollar market cap company with over fifty direct reports. How he does that is itself a unique window into Nvidia’s three-decade story, which humbly started at a Denny’s not too far from today’s GTC conference and its 11,000-plus excited attendees.

A key feature of the event, of course, is Jensen Huang’s keynote today. There are lots of items of note here, and it is generally worth a watch in its entirety. There’s also a 16-minute summary video of the keynote by CNET that may be helpful. As the WSJ introduced it in “Nvidia Unveils Its Latest Chips at ‘AI Woodstock’”:

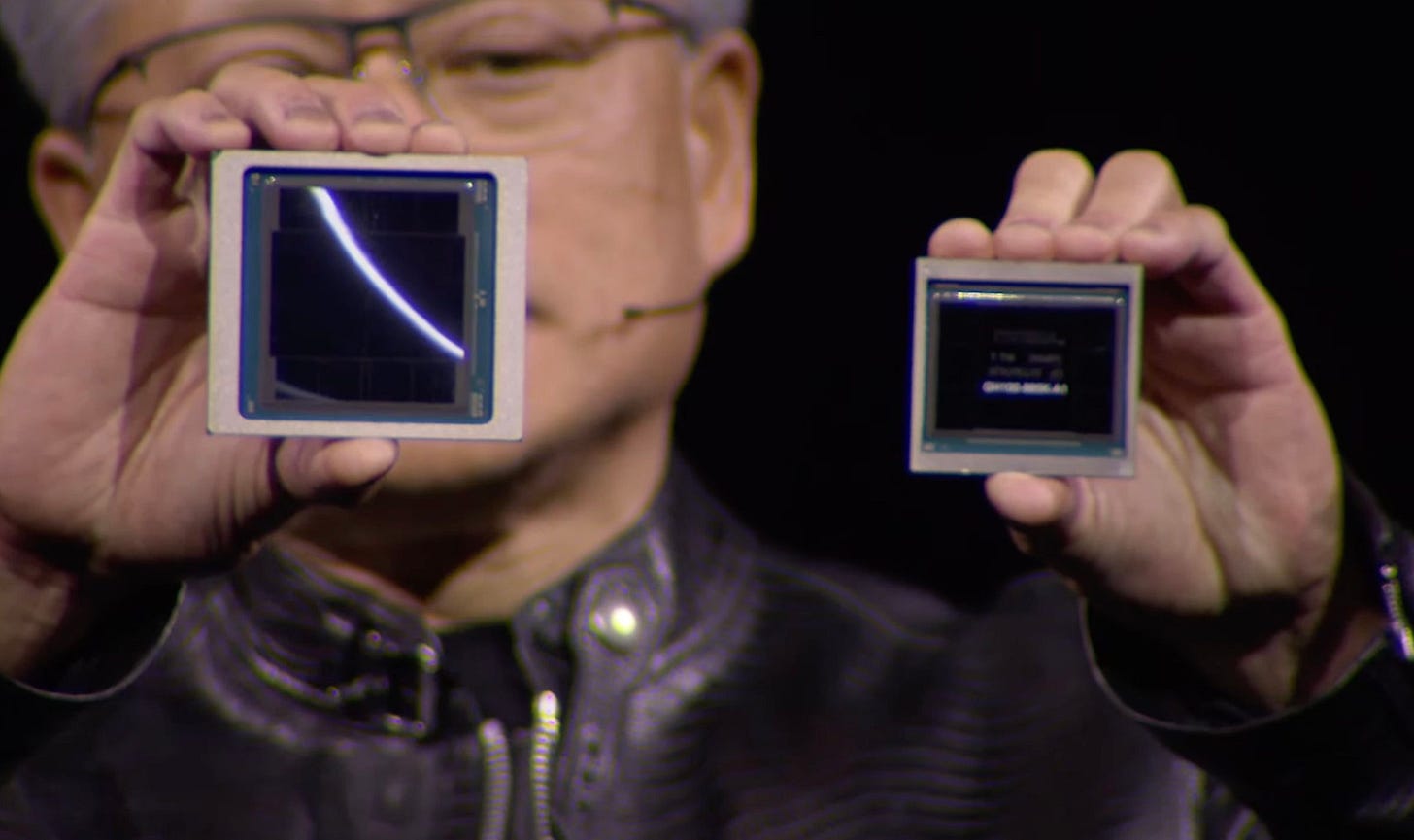

“In his address Monday, Huang gave more detail about Nvidia’s next generation of AI chips, the successors to the wildly successful H100, which has been in short supply since AI demand took off starting in late 2022.

The new chips, code-named Blackwell, are much faster and larger than their predecessors, Huang said. They will be available later this year, the company said in a statement. UBS analysts estimate Nvidia’s new chips might cost as much as $50,000, about double what analysts have estimated the earlier generation cost.”

“His vision of the future of AI will also be closely watched, as will any predictions about growth of Nvidia’s market—like his recent forecast that there will be another $1 trillion of spending on data centers in the next few years.”

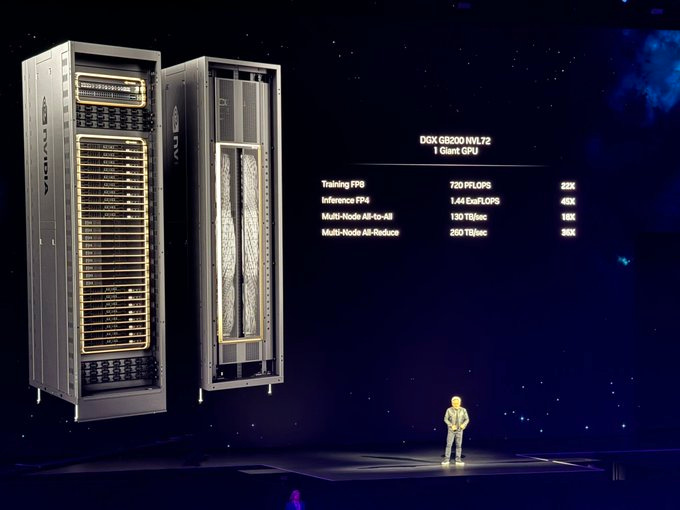

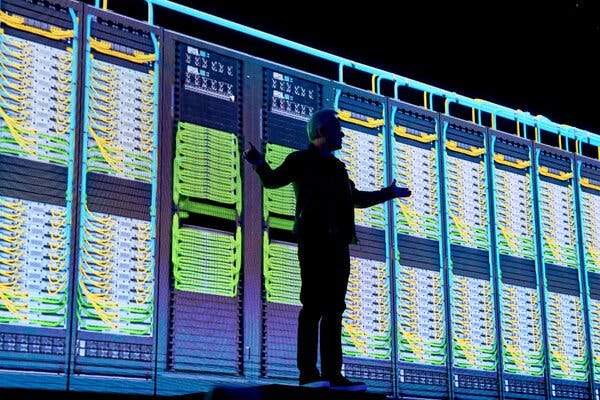

“In a highly technical presentation, Huang gave examples of the different ways the new chips can be used by customers developing and using AI systems. He also introduced new network switches designed for AI uses and supercomputer systems that incorporate the new chips.”

“Huang said the new chips could train the latest large AI models using 2,000 Blackwell GPUs using just 4 megawatts of power over about 90 days. Using the older model chips, that process would take 8,000 GPUs using 15 megawatts over the same amount of time, he said.”

“Huang also touched on his vision for some more far-flung technologies, including drug discovery, genomics and humanoid robots. Nine different humanlike robots flanked the CEO on stage near the end of his speech.”
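For a rough sense of the training comparison Huang cited above (2,000 Blackwell GPUs at 4 megawatts versus 8,000 older GPUs at 15 megawatts, both over about 90 days), here is a back-of-the-envelope sketch in Python. It assumes constant power draw for the full 90 days, which is my simplification and not something Nvidia stated, and the function and variable names are purely illustrative.

```python
# Back-of-the-envelope comparison of the two training runs quoted by the WSJ.
# Assumption (mine, not Nvidia's): power draw is constant for the whole run.

HOURS_PER_DAY = 24


def training_energy_mwh(power_mw: float, days: float) -> float:
    """Total energy in megawatt-hours for a run at constant power."""
    return power_mw * days * HOURS_PER_DAY


def kw_per_gpu(power_mw: float, gpus: int) -> float:
    """Average power per GPU in kilowatts, including whatever overhead
    is folded into the quoted megawatt figure."""
    return power_mw * 1000 / gpus


# Figures as quoted in the keynote coverage.
blackwell = {"gpus": 2000, "power_mw": 4.0, "days": 90}
older = {"gpus": 8000, "power_mw": 15.0, "days": 90}

blackwell_mwh = training_energy_mwh(blackwell["power_mw"], blackwell["days"])
older_mwh = training_energy_mwh(older["power_mw"], older["days"])

energy_ratio = older_mwh / blackwell_mwh
gpu_ratio = older["gpus"] / blackwell["gpus"]

if __name__ == "__main__":
    print(f"Blackwell run: {blackwell_mwh:,.0f} MWh")
    print(f"Older run:     {older_mwh:,.0f} MWh")
    print(f"Energy ratio:  {energy_ratio:.2f}x, GPU count ratio: {gpu_ratio:.0f}x")
    print(f"kW per GPU:    {kw_per_gpu(4.0, 2000):.3f} vs {kw_per_gpu(15.0, 8000):.3f}")
```

Under that assumption the quoted figures work out to roughly 8,640 MWh versus 32,400 MWh, or about 3.75 times less energy with a quarter of the GPUs. The implied power per GPU is similar in both cases (about 2 kW versus 1.9 kW), so on these numbers the savings would come mostly from needing far fewer chips rather than from each chip drawing less power.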

Other highlights of his keynote include the biggest hits from his prior appearances and presentations:

“In his recent appearances, Huang has outlined a vision of the future where AI drives the discovery of drugs, quantum computing and robotics, reshaping industries. He has touted Nvidia’s central role in what he terms a new industrial revolution that will require trillions of dollars of investment in data centers tuned for AI.

“The future is going to be about AI factories, and Nvidia gear will be powering AI factories,” he said in an appearance at Columbia University’s business school in October.”

I’ve written on Robotics and AI in the past, and will have more soon on what Jensen is discussing as ‘Foundational Robotics’. Also released yesterday was more detail on GR00T, Nvidia’s Robotics software frameworks and infrastructure. Also notable is the Nvidia NIM AI microservices offering combined with NeMo infrastructure.

Then there are the AI-driven ‘Digital Twins’ Jensen touched on throughout this keynote. They were particularly notable alongside Nvidia’s planned ‘AI Foundry’ offerings around what they’re calling ‘AI Factories’, which are customized versions of Nvidia AI data centers brimming with Nvidia AI infrastructure. Potential customers would be companies large and small, across a cross-section of industries. I’ll have more on NIM microservices and the above AI Foundry/Factory strategies in coming posts.

These could all be meaningful contributors to Nvidia working its way up the AI Tech Value Stack all the way to Box 6 from Box 1 above (see chart), competing with its best customers like OpenAI, Microsoft, Google, Amazon and others. Indeed, it’s notable that the current version of the NIM microservices leverages Meta’s open-source Llama-2 70 billion parameter model and up. That shows the room for growth around open source AI infrastructure at scale. As Jensen keeps saying, Nvidia is not ‘just’ a GPU company. He’s productizing ‘Artificial Intelligence’ as a service (AIaaS, in other words):

“In the 1920s, water went into a generator, and DC power came out. Now electrons go into a generator, and intelligence comes out.”

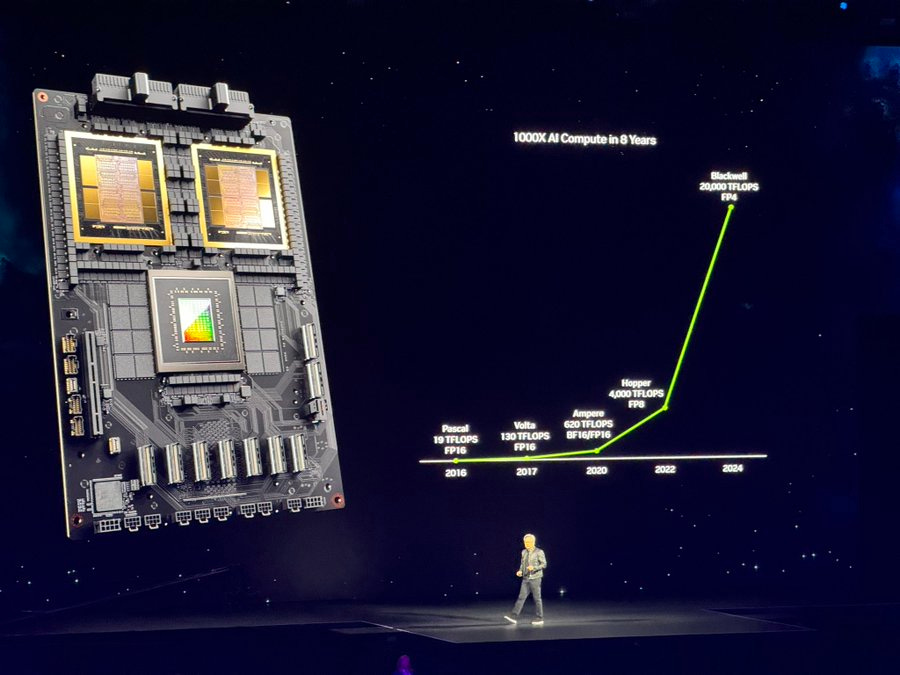

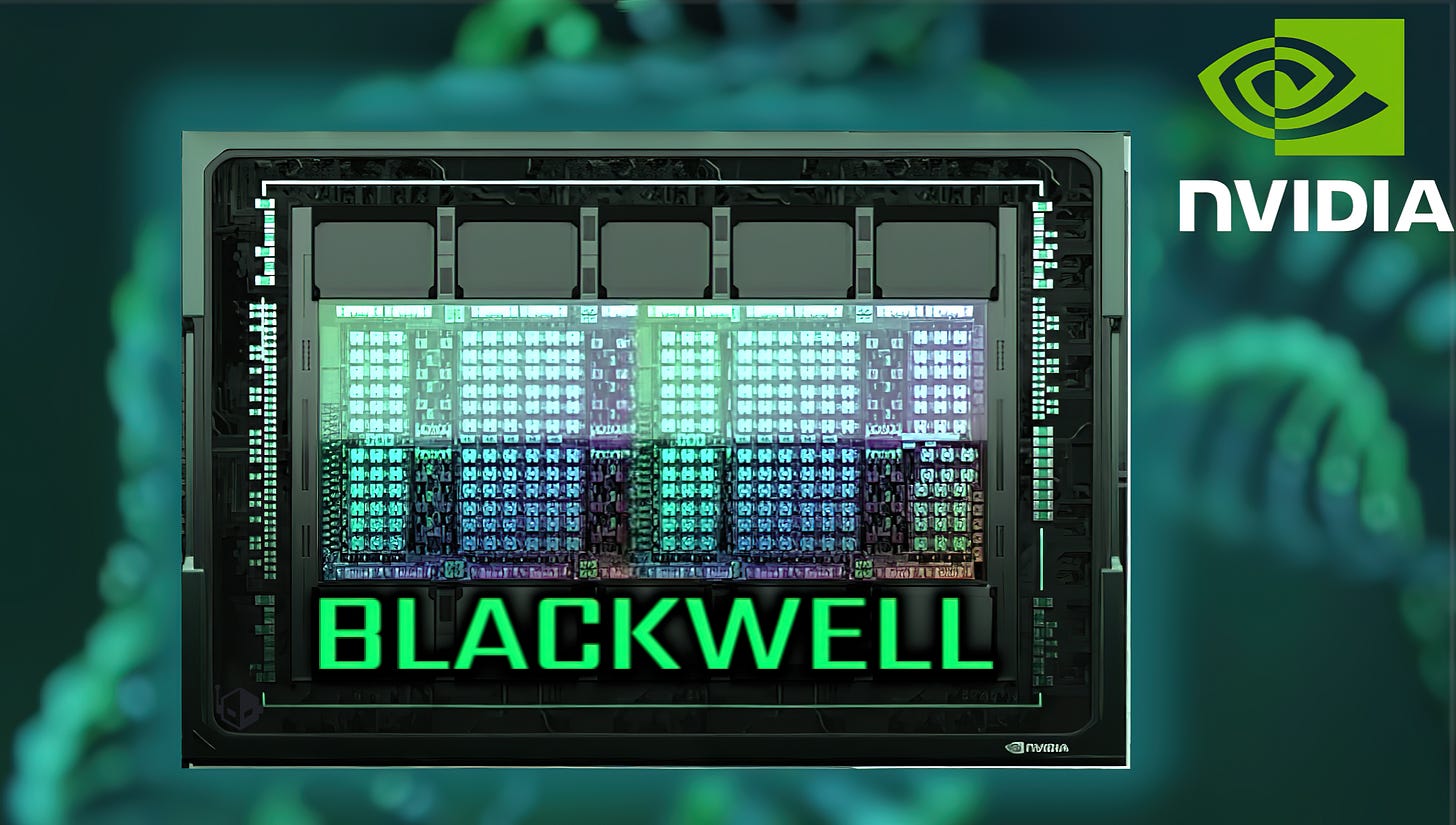

The ‘Blackwell’ B100 and B200 AI GPUs transition from the highly successful ‘Hopper’ H100 chips, as The Verge outlines here:

“Nvidia’s must-have H100 AI chip made it a multitrillion-dollar company, one that may be worth more than Alphabet and Amazon, and competitors have been fighting to catch up. But perhaps Nvidia is about to extend its lead — with the new Blackwell B200 GPU and GB200 “superchip.”

“Nvidia says the new B200 GPU offers up to 20 petaflops of FP4 horsepower from its 208 billion transistors. Also, it says, a GB200 that combines two of those GPUs with a single Grace CPU can offer 30 times the performance for LLM inference workloads while also potentially being substantially more efficient. It “reduces cost and energy consumption by up to 25x” over an H100, says Nvidia.”

At tens of thousands of dollars per chip, and clustered in racks with Nvidia connectivity chips and gear, these chips can cut both the time and the cost of training and inference for LLM AI models. Outlays for major customers buying thousands of these chips and the surrounding infrastructure will still be in the hundreds of millions of dollars and up.

In terms of the Nvidia chip road map itself, Semianalysis has a detailed take on the coming AI GPU lineup from Nvidia, expanding from today’s top of the line H100s to its ‘Blackwell’ B100, B200, GB200 and beyond:

“Nvidia is on top of the world. They have supreme pricing power right now, despite hyperscaler silicon ramping. Everyone simply has to take what Nvidia is feeding them with a silver spoon. The number one example of this is with the H100, which has a gross margin exceeding 85%. The advantage in performance and TCO continues to hold true because the B100 curb stomps the AMD MI300X, Gaudi 3, and internal hyperscaler chips (besides the Google TPU).”

Of note, of course, is that Nvidia’s top customers, from Microsoft to Google to Meta to Amazon and beyond, are all readying their own AI chips. These investments are measured in billions of dollars and take multiple years to deliver results at scale. As Semianalysis notes (links below mine):

“On the custom front all of Nvidia’s major customers are designing their own chips. Only Google have been successful to date, but Amazon continues to ramp Inferentia and Trainium, even though the current generation is not great, Meta is betting big on MTIA long term, and Microsoft is starting their silicon journey as well. As the hyperscalers dramatically increase their capital expenditure to defend their moats in the Gen AI world, the more hyperscaler dollars are ending up as Nvidia’s gross profits. There is an extreme sense of urgency to find alternatives.”

Despite this upcoming ferocious competition with its best customers, Nvidia remains in a strong position on both LLM AI Training and Inference due to its canny long-term moves around AI GPUs, its CUDA software infrastructure, timely key AI Compute and Networking acquisitions, and other moves.

The NY Times has a piece worth reading on these moves vs its customers/competitors:

“Over more than 10 years, Nvidia has built a nearly impregnable lead in producing chips that can perform complex A.I. tasks like image, facial and speech recognition, as well as generating text for chatbots like ChatGPT. The onetime industry upstart achieved that dominance by recognizing the A.I. trend early, tailoring its chips to those tasks and then developing key pieces of software that aid in A.I. development.”

“Jensen Huang, Nvidia’s co-founder and chief executive, has since kept raising the bar. To maintain its leading position, his company has also offered customers access to specialized computers, computing services and other tools of their emerging trade. That has turned Nvidia, for all intents and purposes, into a one-stop shop for A.I. development.”

“While Google, Amazon, Meta, IBM and others have also produced A.I. chips, Nvidia today accounts for more than 70 percent of A.I. chip sales and holds an even bigger position in training generative A.I. models.”

Indeed, it’s a roadmap on which Nvidia, for now, continues to reign in AI infrastructure.

Also of note at the Nvidia GTC Conference this week is Jensen Huang hosting a panel with the original authors of the key 2017 Google AI paper “Attention Is All You Need”, which gave the world ‘Transformers’ and kicked off this Generative AI gold rush, now seven years ago. Also of interest, of course, is where they all are now.

It’s going to be a very busy hardware and data center expansion period for all the participants in Boxes 1 through 3 in the AI Tech Wave Stack below:

There is lots of hardware and software innovation here across the ecosystem, and a lot for potential customers large and small to absorb, digest, test and implement as they figure out their own AI products and services further up the AI value stack in Boxes 4 through 6.

Nvidia for now still reigns. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)

Congratulations on your 300th daily post!

I watched the 2 hour keynote. The question is - is the stock overpriced or not? I understand the many applications that can use GPUs to accelerate work, and clearly some of it is extremely impressive. But the perception is that AI is going to drive hardware sales. The "accelerationists" are touting a future full of useful AI, even AGI, as the backend server farms scale. But what if that does not happen? We've seen this overoptimism before - Silicon Graphics, Sun Micro, and now could it be NVIDIA?

Thoughts? This is your field of expertise.