Meta remains the biggest of the ‘Magnificent 7’ to invest billions in state-of-the-art, GPU-driven AI infrastructure while also pushing hard on its open-source roots with its Llama 3 Foundation LLM, not to mention its open-source PyTorch and other AI software infrastructure. Led by founder/CEO Mark Zuckerberg, Meta outlined its strategy in “Building Meta’s GenAI Infrastructure”:

“Marking a major investment in Meta’s AI future, we are announcing two 24k GPU clusters. We are sharing details on the hardware, network, storage, design, performance, and software that help us extract high throughput and reliability for various AI workloads. We use this cluster design for Llama 3 training.”

“We are strongly committed to open compute and open source. We built these clusters on top of Grand Teton, OpenRack, and PyTorch and continue to push open innovation across the industry.”

“This announcement is one step in our ambitious infrastructure roadmap. By the end of 2024, we’re aiming to continue to grow our infrastructure build-out that will include 350,000 NVIDIA H100 GPUs as part of a portfolio that will feature compute power equivalent to nearly 600,000 H100s.”

“To lead in developing AI means leading investments in hardware infrastructure. Hardware infrastructure plays an important role in AI’s future. Today, we’re sharing details on two versions of our 24,576-GPU data center scale cluster at Meta. These clusters support our current and next generation AI models, including Llama 3, the successor to Llama 2, our publicly released LLM, as well as AI research and development across GenAI and other areas.”

Those ‘H100s’ of course refer to Nvidia’s top-of-the-line AI GPUs, which are in white-hot demand worldwide at over $30,000 per chip. At that price, the math above suggests well over $10 billion for the chips alone, and tens of billions in total AI infrastructure investment by Meta once networking, storage, power, and data centers are added. That puts Meta at the front of the line, next to Microsoft, OpenAI, Google, and soon Apple, for Nvidia’s top-of-the-line AI chips well into next year and beyond.
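A rough back-of-envelope sketch of that chip math, assuming a flat $30,000 per H100 (actual contract pricing is not public) and counting GPUs only, with no networking, storage, power, or buildings. It also assumes, for illustration, that the “nearly 600,000 H100s” equivalent portfolio is priced as if it were all H100s. The function name `gpu_spend_usd` is hypothetical.

```python
# Back-of-envelope GPU spend estimate (illustrative only).
# Assumption: a flat ~$30,000 per H100, the price cited above.
H100_UNIT_PRICE_USD = 30_000


def gpu_spend_usd(gpu_count: int, unit_price: int = H100_UNIT_PRICE_USD) -> int:
    """Hypothetical helper: chip-only cost of gpu_count GPUs at a flat unit price."""
    return gpu_count * unit_price


# 350,000 H100s targeted by the end of 2024
h100_only = gpu_spend_usd(350_000)
# "nearly 600,000" H100-equivalents, rounded up and priced as H100s (assumption)
h100_equivalents = gpu_spend_usd(600_000)

print(f"350,000 H100s:        ${h100_only / 1e9:.1f}B")
print(f"~600,000 equivalents: ${h100_equivalents / 1e9:.1f}B")
```

Under these assumptions the chip bill alone lands somewhere between roughly $10 and $18 billion. The data center build-out around those chips is what pushes the total toward tens of billions.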

As Ben’s Bites explains further, one of the key benefits of this spending is that it makes Meta a prime place to work for the world’s top AI researchers:

“Zuck keeps on giving. First the Llama models, and now sharing details about their hardware work too. To be fair, often, a big part of releasing this in public is to attract insane talent. The promise is simple, Meta has the resources you need to do awesome research.”

AI chips and talent are the two top inputs for the major Foundation LLM AI companies that are driving tens of billions in public and private investments this early in the AI Tech Wave. Some estimates have the industry spending over $100 billion on AI talent alone worldwide.

Meta, despite not having a separate AI data center business like its peers Microsoft, Google, Amazon, Nvidia, and others, is investing at almost the same clip. It is building out AI chip, power, and data center infrastructure to serve its billions of users worldwide with AI apps and services, and to drive its industry-leading monetization efforts.

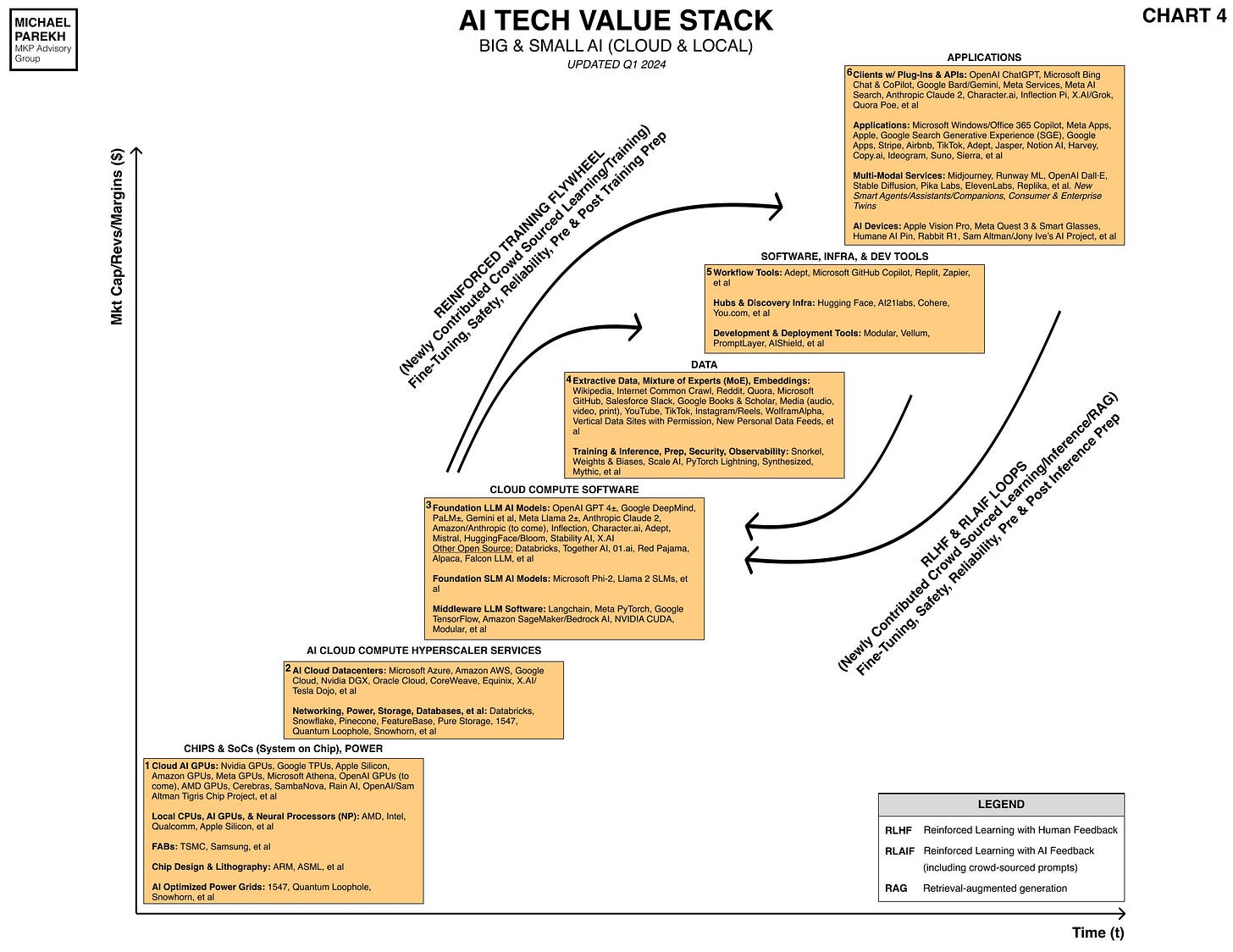

For Meta, most of the ‘smart agents’ AI rubber meets the road in Box 6 of the chart above, the box with potentially the biggest monetary rewards once AI finds mainstream traction over time.

Consider all this an early down payment for the investments and possible returns to come in the AI Tech Wave. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)