AI: Powering Up AI

...the other key input besides GPUs

I’ve often said in previous posts that without AI GPU chips and related infrastructure, AI represents two non-adjacent letters in the alphabet. Despite the amazing innovation behind the probabilistic computation of Foundation LLM AI models, they need chips from Nvidia and others to perform the massive numbers of computations, measured in ‘Giga-Flops’, that produce the ‘emergent’ AI capabilities.

But there is a less heralded input that both the hardware and software need to do their AI thing. And that of course is power. The amounts needed at scale are amazing, and so are the expected growth rates.

The WSJ, in a recent piece titled “AI is Ravenous for Energy. Can it be Satisfied?”, puts it this way:

“Some experts project that global electricity consumption for AI systems could soon require adding the equivalent of a small country’s worth of power generation to our planet. That demand comes as the world is trying to electrify as much as possible and decarbonize how that power is generated in the face of climate change.”

“Since 2010, power consumption for data centers has remained nearly flat, as a proportion of global electricity production, at about 1% of that figure, according to the International Energy Agency. But the rapid adoption of AI could represent a sea change in how much electricity is required to run the internet—specifically, the data centers that comprise the cloud, and make possible all the digital services we rely on.”

Companies like Microsoft, Amazon, Google and others are as focused on securing enormous sources of power for their coming AI-driven data centers as they are on securing chips from Nvidia and others, and on making their own chips as an additional supply.

Indeed, the WSJ notes in a separate piece that Microsoft is focused on nuclear to power AI operations. Consider that while nuclear is possibly one of the best sources of power at scale, it is also one of the most difficult to build out because of regulatory requirements and societal fears:

“Microsoft is betting nuclear power can help sate its massive electricity needs as it ventures further into artificial intelligence and supercomputing.”

“The technology industry’s thirst for power is enormous. A single new data center can use as much electricity as hundreds of thousands of homes. Artificial intelligence requires even more computing power.”

“Nuclear power is carbon-free and, unlike renewables, provides round-the-clock electricity. But it faces significant hurdles to getting built, including the daunting and expensive U.S. nuclear regulatory process for project developers.”

“In a twist, Microsoft is experimenting with generative artificial intelligence to see if AI could help streamline the approval process, according to Microsoft executives.”

And Microsoft is not just looking at traditional fission driven nuclear power:

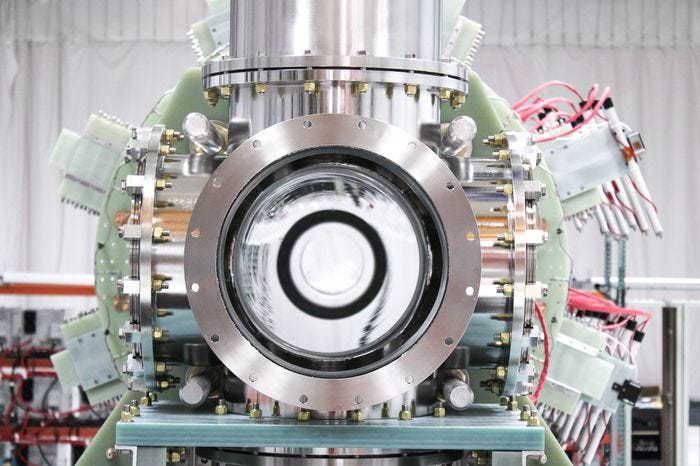

“The company is also betting on fusion power plants, inking a contract with a fusion startup that promises to deliver power within about five years—a bold move given that no one in the world has yet produced electricity from fusion.”

That company, a fusion nuclear startup called Helion, backed partly by OpenAI founder/CEO Sam Altman, is on the bleeding edge of fusion nuclear technology, hoping to go from research to commercial production at scale in record time.

In a post on TSMC in Taiwan a few days ago, I quoted the CEO of Taiwan Semiconductor Dr. C.C. Wei saying:

“We have to give the industry enough capacity to meet AI demand for chips, that deliver the needed performance, but efficiently controls power”.

“Reducing power consumption is becoming more important as AI power demand could easily triple in two years.”
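To put that ‘triple in two years’ figure in annual terms, here is a quick back-of-the-envelope sketch in Python. It assumes smooth compound growth, which is my simplification and not something Dr. Wei stated, and the function name is purely illustrative.

```python
def annualized_growth(multiple: float, years: float) -> float:
    """Constant yearly growth rate that compounds to `multiple` over `years`."""
    return multiple ** (1.0 / years) - 1.0

# Assumption: demand triples over exactly two years at a steady rate.
rate = annualized_growth(3.0, 2.0)
print(f"Implied growth: about {rate:.0%} per year")
```

Under that assumption, tripling in two years works out to roughly 73% growth a year.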

Overall power demand is already being pushed up by other drivers, including electric vehicles. LLM AI, powered by chips from Nvidia and others, drives the numbers up even more. Again, as the WSJ notes:

“Constellation Energy, which has already agreed to sell Microsoft nuclear power for its data centers, projects that AI’s demand for power in the U.S. could be five to six times the total amount needed in the future to charge America’s electric vehicles.”

“Alex de Vries, a researcher at the School of Business and Economics at the Vrije Universiteit Amsterdam, projected in October that, based on current and future sales of microchips built by Nvidia specifically for AI, global power usage for AI systems could ratchet up to 15 gigawatts of continuous demand. Nvidia currently has more than 90% of the market share for AI-specific chips in data centers, making its output a proxy for power use by the industry as a whole.”

“That’s about the power consumption of the Netherlands, and would require the entire output of about 15 average-size nuclear power plants.”

“According to de Vries’ estimates, the amount of electricity required to power the world’s data centers could jump by 50% by 2027, thanks to AI alone.”
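For a sense of scale, the 15 gigawatt figure quoted above can be turned into annual energy with some simple arithmetic. The sketch below is only a rough illustration: it assumes the load really is continuous for all 8,760 hours of a non-leap year, and the function and variable names are mine, not from the WSJ or de Vries.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years

def continuous_gw_to_twh_per_year(gigawatts: float) -> float:
    """Convert a continuous load in GW to annual energy in TWh."""
    return gigawatts * HOURS_PER_YEAR / 1000.0  # GWh -> TWh

# Figures from the quote: 15 GW of continuous demand, about 15 nuclear plants.
ai_demand_gw = 15.0
plant_count = 15

annual_twh = continuous_gw_to_twh_per_year(ai_demand_gw)
per_plant_gw = ai_demand_gw / plant_count

print(f"Annual energy: {annual_twh:.1f} TWh")
print(f"Implied output per plant: {per_plant_gw:.1f} GW")
```

That comes to about 131 terawatt-hours a year, with the quote's comparison implying roughly one gigawatt per ‘average-size’ plant.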

We are in what I’ve called the ‘mainframe’ stage of AI computing, where the LLM AI models are getting exponentially larger and need ever greater amounts of AI GPU data center infrastructure. And while we’re also going to see ‘Small AI’ models running on billions of local devices, the cumulative investment impact of both just means an up-and-to-the-right demand curve for AI-driven power needs for the next few years. Again, as the WSJ notes:

“The main reason AI continues to require more power, even when more efficiency is possible, is that right now, the incentive for everyone in the industry is to continue building bigger, more powerful models.”

“As long as the competition between makers of AI continues to spur companies to use these ever more capable, ever more power-hungry models, there’s no end in sight to how much more electricity the global AI industry will demand. The only question, then, is at what rate its consumption of power will increase.”

And we’re just in the earliest of days for the AI Tech Wave, as I’ve often noted. Power demands for AI are here to stay. And we’re going to need every source imaginable, old and new. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)