AI: Posturing for AI Chips & Talent

...largest customers & suppliers positioning for key AI inputs

The Bigger Picture, Sunday January 21, 2024

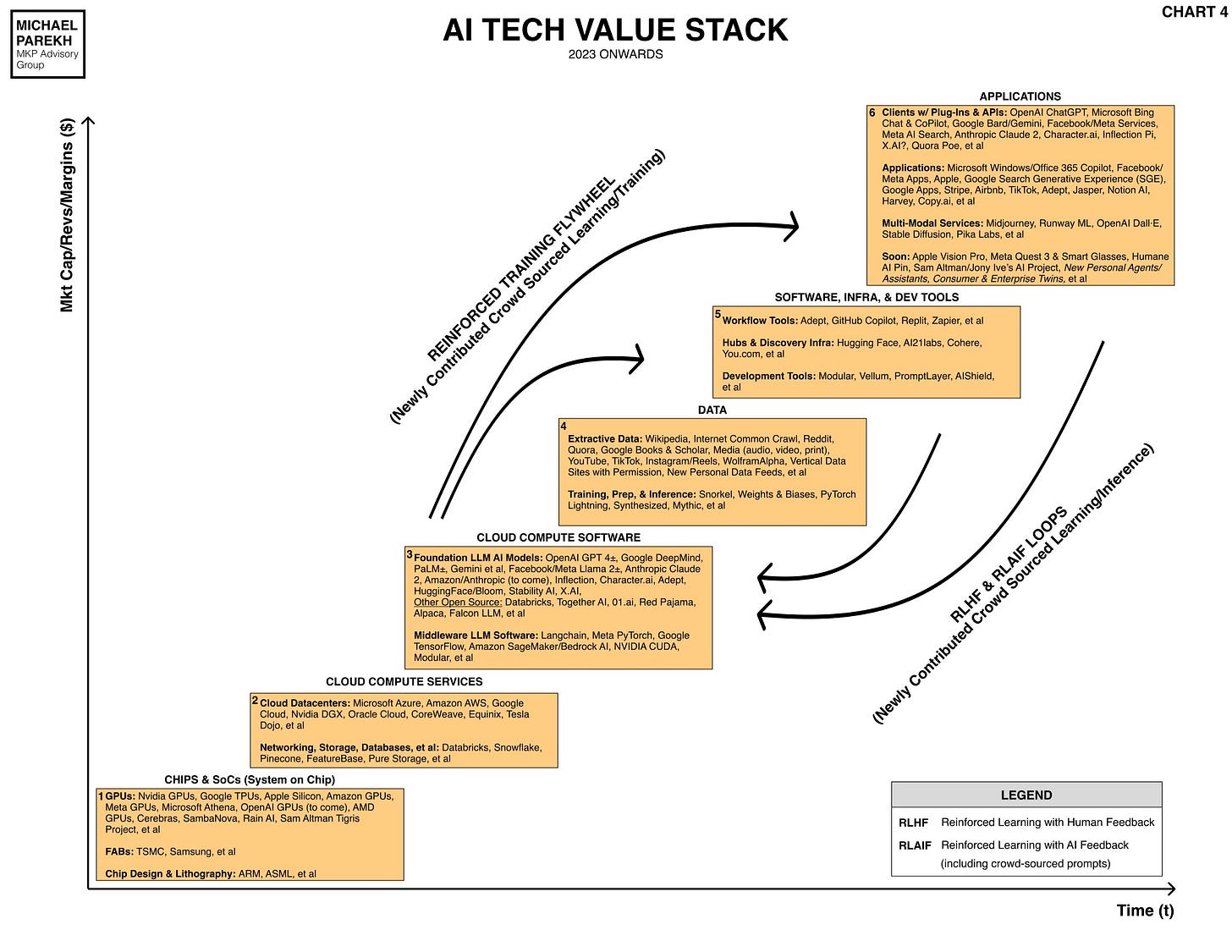

Going into 2024, the largest customers and suppliers of the most sought-after inputs in the current AI Tech Wave infrastructure gold rush are posturing and positioning for the best spots in the race. Those inputs are, of course, the AI GPU chips needed to build out AI data center infrastructure, the power to run them at the lowest cost per training and inference computation, and the AI talent to build and run both the AI infrastructure and the products and services further up the AI Tech Stack below.

That is the Bigger Picture we need to keep in mind when we see statements by these companies and their CEOs, all through this year and likely next. Let me explain.

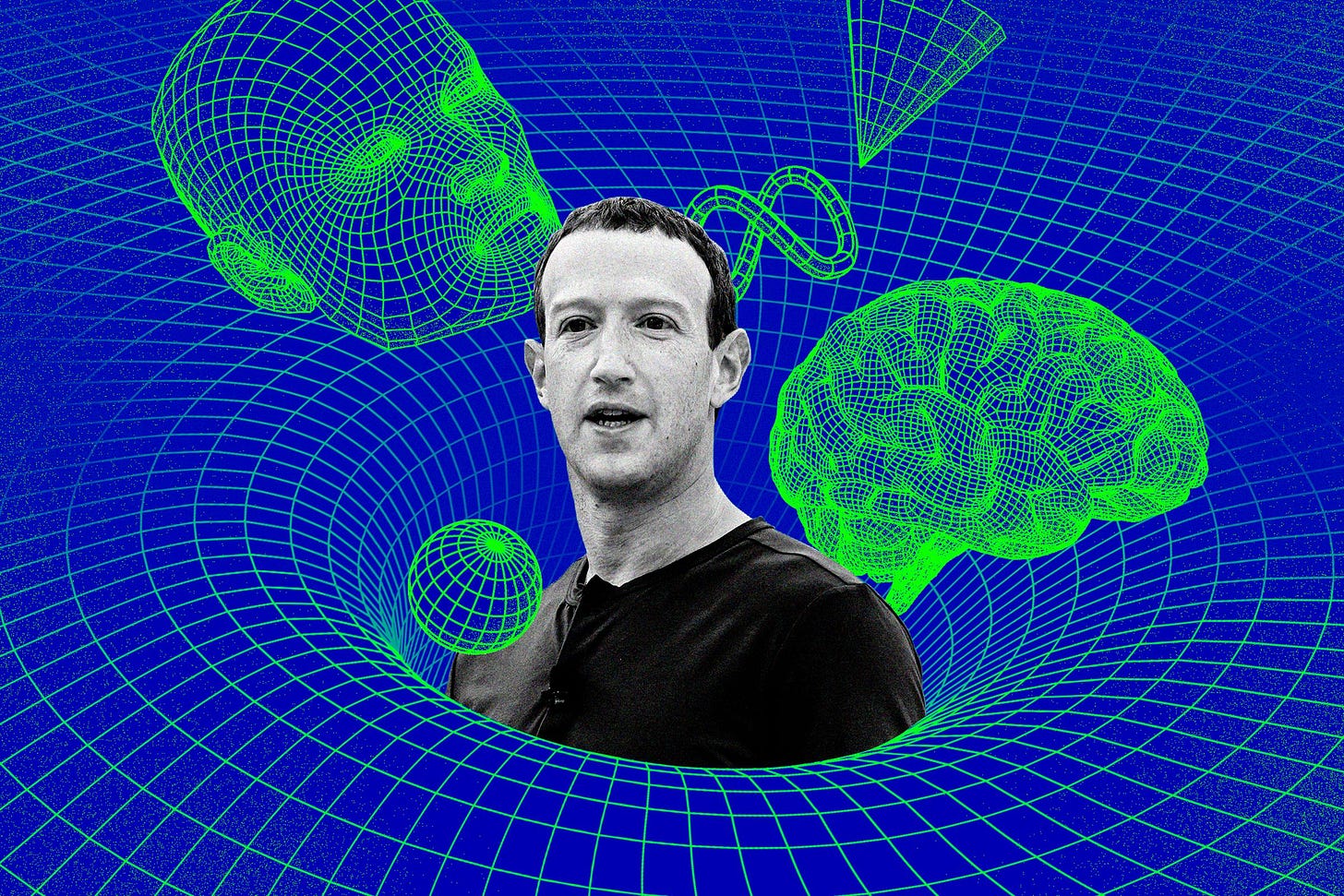

This week saw Mark Zuckerberg, founder/CEO of Meta/Facebook, highlight his company’s continuing commitment to open source Foundation LLM AI models with its upcoming Llama 3, and its future plans to propel the industry to AGI (aka artificial general intelligence).

In particular, he highlighted how his company was the lead customer for the scarce AI GPU chips from Nvidia, which remains in the catbird seat for the industry’s supply at least into next year and beyond. And he underlined his company’s commitment to open source AI software infrastructure. But there were other key messages he was imparting as well. The Verge provides the broader context:

“The battle for AI talent has never been more fierce, with every company in the space vying for an extremely small pool of researchers and engineers. Those with the needed expertise can command eye-popping compensation packages to the tune of over $1 million a year. CEOs like Zuckerberg are routinely pulled in to try to win over a key recruit or keep a researcher from defecting to a competitor.”

“After talent, the scarcest resource in the AI field is the computing power needed to train and run large models. On this topic, Zuckerberg is ready to flex. He tells me that, by the end of this year, Meta will own more than 340,000 of Nvidia’s H100 GPUs — the industry’s chip of choice for building generative AI.”

“We have built up the capacity to do this at a scale that may be larger than any other individual company”

“External research has pegged Meta’s H100 shipments for 2023 at 150,000, a number that is tied only with Microsoft’s shipments and at least three times larger than everyone else’s. When its Nvidia A100s and other AI chips are accounted for, Meta will have a stockpile of almost 600,000 GPUs by the end of 2024, according to Zuckerberg.”

Meta consumes almost a third of Nvidia’s current GPU output, and there are other big customers like Microsoft/OpenAI, Google, Amazon, Apple and many others in line this year and next.

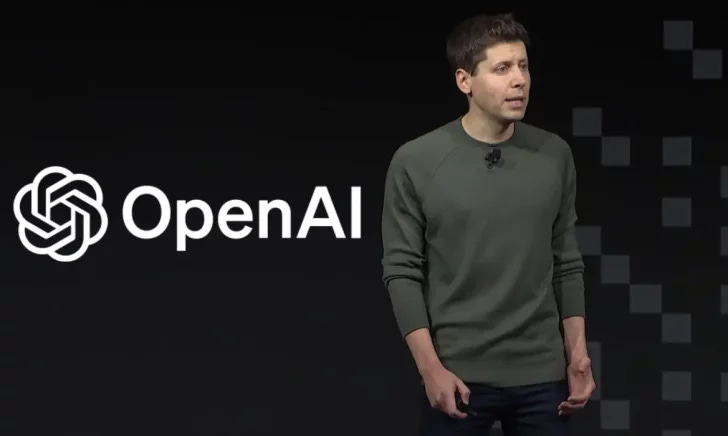

In fact, the need for additional AI GPU chip capacity is so acute for the next few years that OpenAI’s founder/CEO Sam Altman has made it an additional top priority this year.

As Bloomberg highlights in a separate piece:

“Altman Seeks to Raise Billions for Network of AI Chip Factories”

“Push reflects concern about potential semiconductor shortage”

“Project would involve working with major chipmaking companies”

“OpenAI Chief Executive Officer Sam Altman, who has been working to raise billions of dollars from global investors for a chip venture, aims to use the funds to set up a network of factories to manufacture semiconductors, according to several people with knowledge of the plans.”

“Altman has had conversations with several large potential investors in the hopes of raising the vast sums needed for chip fabrication plants, or fabs, as they’re known colloquially, said the people, who requested anonymity because the conversations are private.”

“Firms that have held discussions with Altman include Abu Dhabi-based G42, people told Bloomberg last month, and SoftBank Group Corp., some of the people said. The project would involve working with top chip manufacturers, and the network of fabs would be global in scope, some of the people said.”

Of course, the company that actually has the foundries (aka ‘fabs’) to make the chips today is Taiwan Semiconductor (TSMC), situated at the base of the AI Tech Stack in Box 1 above.

And TSMC is also posturing for a larger share of the funds being offered by the Biden White House to build state-of-the-art facilities in the US. It’s part, of course, of the continuing US-China ‘threading the needle’ tussles over AI technology and geopolitics. As the WSJ highlights:

“One of Biden’s Favorite Chip Projects Is Facing New Delays”

“Taiwan’s TSMC pushes back timeline for second plant at $40 billion Arizona site”

“Taiwanese chip maker TSMC said it expected to delay production at the second of two semiconductor plants it is building in Arizona, the latest setback for a $40 billion project at the core of Washington’s effort to rebuild U.S. chip manufacturing.”

“TSMC—the world’s leading contract manufacturer whose chips power Apple iPhones and Nvidia’s artificial-intelligence chips—also cast uncertainty on an earlier statement that the plant would produce an advanced type of chip.”

“The statements by TSMC Chairman Mark Liu at a news conference Thursday offered further evidence of challenges faced by the Arizona project, including a shortage of skilled workers and difficult negotiations over how much money the U.S. government will provide.”

“The delay, which some analysts say could be a negotiating tactic to secure more U.S. funding, also highlights the challenge the U.S. faces in attracting top Asian chip manufacturers to American shores. The Biden administration is preparing to roll out billions of dollars in grants in the coming weeks under the Chips Act. The $53-billion program aims to spur the construction of new factories across the country to cut reliance on imported chips and better compete with China, which is fast developing its own advanced semiconductor technologies.”

So companies up and down the AI Tech Stack in the chart above are posturing for better positions in the marathon race for AI chips and talent, even this early in the race.

Customers and suppliers are both cooperating and competing out in the open, in plain sight. This is an important part of the Bigger Picture in the AI investment boom to keep in mind, at least for the foreseeable future. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)