AI: OpenAI GPT-5 and Nvidia H200

...big leaps to the next generation

It was only this August, in ‘AI: More powerful GPUs & Models’, that I noted:

“One thing we can count on for some decades now, is technology getting better and cheaper over time. The AI Tech wave, even in its current early days, is following the template of the PC and Internet waves in that regard.”

“This time though, it needs to be fed with ever more Data from our world, in forms that we can barely imagine. To ingest, learn via reinforcement loops, while keep getting better in serving our needs with safety and reliability. A lot of moving parts.”

“We saw news on the AI tech front this week in terms of coming AI hardware and software upgrades. The two core drivers of LLM AI technologies, the GPU chips and the Foundation LLM AI models, keep getting better, more powerful, and cheaper at scale.”

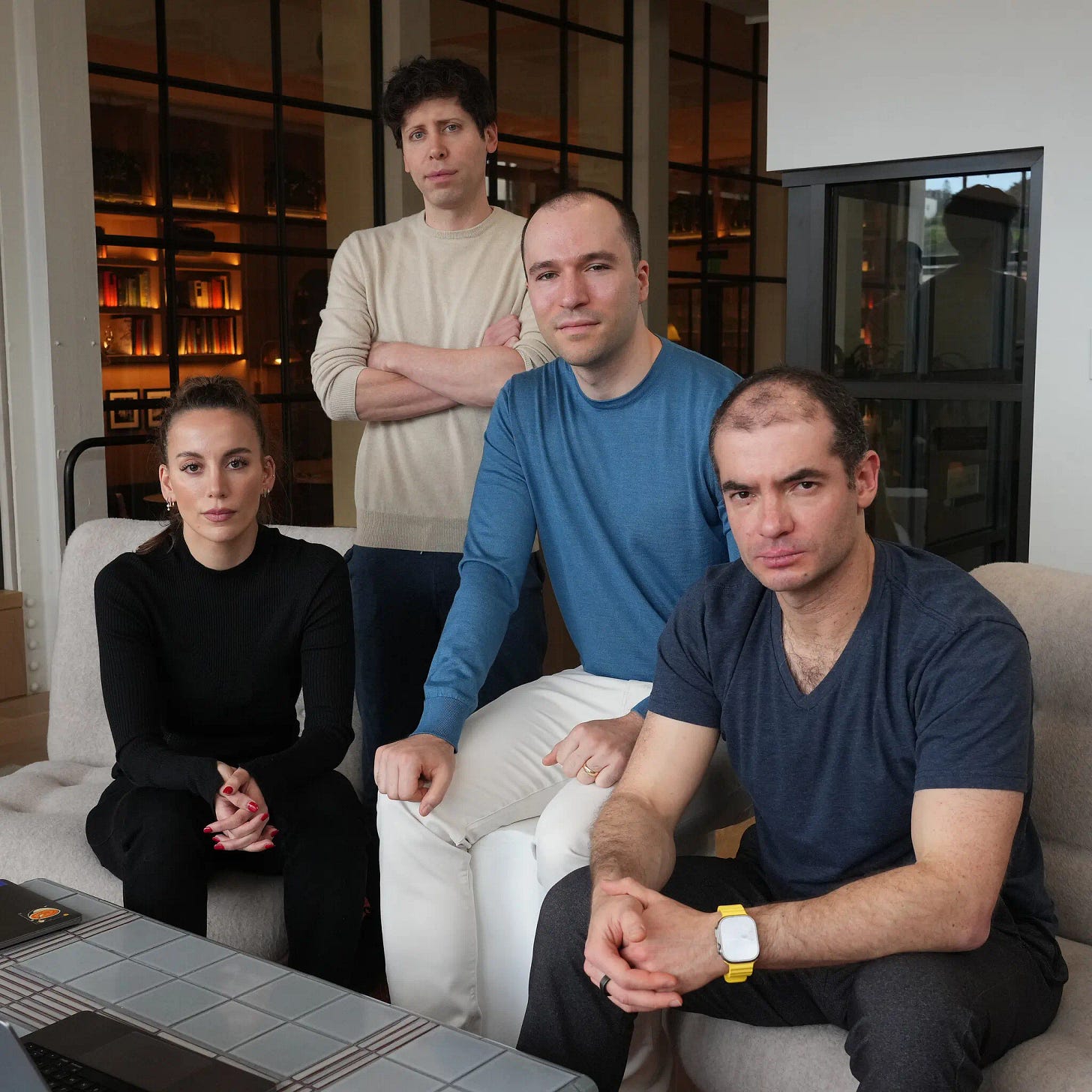

The occasion was some next-generation hardware and software in the world of Foundation LLM AI. Now, just three months later and only days after OpenAI’s industry-shifting first Developer Conference, we have more news of next-generation AI software and hardware. This time, founder/CEO Sam Altman directly confirmed that OpenAI plans to introduce GPT-5 eventually, with a little help from partner Microsoft:

“The company is also working on GPT-5, the next generation of its AI model, Altman said, although he did not commit to a timeline for its release. It will require more data to train on, which Altman said would come from a combination of publicly available data sets on the internet, as well as proprietary data from companies.”

“OpenAI recently put out a call for large-scale data sets from organisations that ‘are not already easily accessible online to the public today’, particularly for long-form writing or conversations in any format. While GPT-5 is likely to be more sophisticated than its predecessors, Altman said it was technically hard to predict exactly what new capabilities and skills the model might have. ‘Until we go train that model, it’s like a fun guessing game for us,’ he said. ‘We’re trying to get better at it, because I think it’s important from a safety perspective to predict the capabilities. But I can’t tell you here’s exactly what it’s going to do that GPT-4 didn’t.’”

He underlined that further investment lies ahead, given the scale involved with next-gen LLM AI models and OpenAI’s ambitions to race towards ‘Super-Intelligence’, or AGI (Artificial General Intelligence):

“OpenAI plans to secure further financial backing from its biggest investor Microsoft as the ChatGPT maker’s chief executive Sam Altman pushes ahead with his vision to create artificial general intelligence — computer software as intelligent as humans.”

“In an interview with the Financial Times, Altman said his company’s partnership with Microsoft’s chief executive Satya Nadella was ‘working really well’ and that he expected ‘to raise a lot more over time’ from the tech giant among other investors, to keep up with the punishing costs of building more sophisticated AI models. Microsoft earlier this year invested $10bn in OpenAI as part of a ‘multiyear’ agreement that valued the San Francisco-based company at $29bn, according to people familiar with the talks.”

“Asked if Microsoft would keep investing further, Altman said: ‘I’d hope so.’ He added: ‘There’s a long way to go, and a lot of compute to build out between here and AGI . . . training expenses are just huge.’”

The valuation of OpenAI is of course much higher now, given the pending sale of secondary stock by insiders at a reported valuation of more than $80 billion, roughly triple the $29 billion figure from earlier this year. That pending sale also gives OpenAI an opportunity to attract scarce AI talent from rivals like Google and others, with packages potentially worth $10 million or more in stock. Talent is the other critical resource needed to scale Foundation LLM AI, besides next-generation software and hardware.

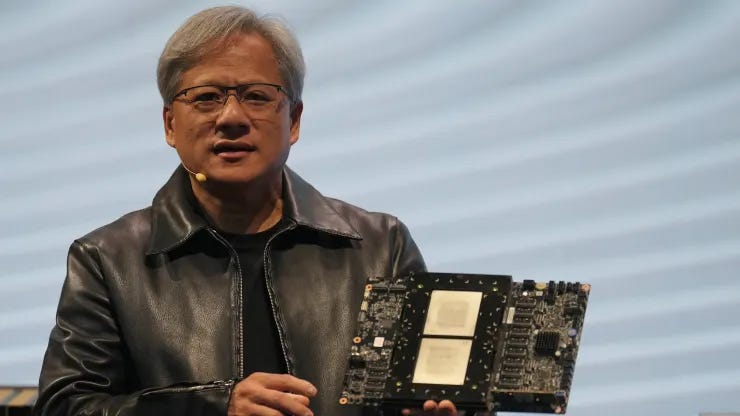

Speaking of hardware, Nvidia, led by founder/CEO Jensen Huang, is rolling out its next-generation GPUs as well:

“Nvidia on Monday unveiled the H200, a graphics processing unit designed for training and deploying the kinds of artificial intelligence models that are powering the generative AI boom.”

“The new GPU is an upgrade from the H100, the chip OpenAI used to train its most advanced large language model, GPT-4. Big companies, startups and government agencies are all vying for a limited supply of the chips.”

“H100 chips cost between $25,000 and $40,000, according to an estimate from Raymond James, and thousands of them working together are needed to create the biggest models in a process called ‘training.’”
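To put those per-chip prices in perspective, here is a quick back-of-the-envelope sketch in Python. The $25,000 to $40,000 range is the Raymond James estimate quoted above; the cluster sizes are hypothetical round numbers chosen purely for illustration, not reported figures for GPT-4 or any other model, and the tally covers the chips alone.

```python
# Back-of-the-envelope cluster cost using the Raymond James per-chip
# estimate quoted above ($25,000 to $40,000 per H100).
# The cluster sizes below are hypothetical illustrations, not figures
# reported for any specific model or company.

H100_PRICE_LOW = 25_000   # USD per chip (low estimate)
H100_PRICE_HIGH = 40_000  # USD per chip (high estimate)


def cluster_cost_range(num_chips: int) -> tuple[int, int]:
    """Return (low, high) chip-only cost in USD for a cluster of num_chips GPUs.

    Ignores networking, power, cooling, facilities, and staff, which add
    substantially to real-world costs.
    """
    return num_chips * H100_PRICE_LOW, num_chips * H100_PRICE_HIGH


for chips in (1_000, 10_000, 25_000):
    low, high = cluster_cost_range(chips)
    print(f"{chips:>6,} chips: ${low / 1e6:,.0f}M to ${high / 1e6:,.0f}M")
```

Even at the low end, a hypothetical 10,000-chip cluster comes to $250 million in GPUs alone, before networking, power and data centers, which helps explain why Altman calls training expenses “just huge.”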

“Excitement over Nvidia’s AI GPUs has supercharged the company’s stock, which is up more than 230% so far in 2023. Nvidia expects around $16 billion of revenue for its fiscal third quarter, up 170% from a year ago.”
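The two numbers in that quote also let us back out roughly what Nvidia brought in during the same quarter a year earlier. A 170% increase means the new figure is 2.7 times the old one, so dividing works it backwards. A minimal sketch, treating the ~$16 billion expectation as exact:

```python
# Back out the implied year-ago quarterly revenue from the two numbers
# quoted above: ~$16B expected this quarter, "up 170% from a year ago".
# A 170% increase means the new value is (1 + 1.70) = 2.7x the old one.

def implied_prior_value(current: float, pct_growth: float) -> float:
    """Return the earlier value implied by `current` and a percentage growth rate."""
    return current / (1 + pct_growth / 100)


prior = implied_prior_value(16.0, 170)  # USD billions
print(f"Implied year-ago quarterly revenue: ~${prior:.1f}B")  # prints ~$5.9B
```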

“The key improvement with the H200 is that it includes 141GB of next-generation ‘HBM3’ memory that will help the chip perform ‘inference,’ or using a large model after it’s trained to generate text, images or predictions.”

“Nvidia said the H200 will generate output nearly twice as fast as the H100. That’s based on a test using Meta’s Llama 2 LLM.”

“The H200, which is expected to ship in the second quarter of 2024, will compete with AMD’s MI300X GPU. AMD’s chip, similar to the H200, has additional memory over its predecessors, which helps fit big models on the hardware to run inference.”
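Why does extra memory matter so much for inference? A rough rule of thumb is that a model’s weights need (number of parameters) × (bytes per parameter) of memory. The sketch below applies that to the Llama 2 sizes (7B, 13B and 70B parameters), assuming 16-bit weights at 2 bytes per parameter and, as an assumption not stated in the article, the 80GB H100 configuration; the 141GB H200 figure is from the quote above. It counts weights only and ignores the KV cache and activations that real inference also needs.

```python
# Rough check: do a model's weights alone fit in one GPU's memory?
# Assumptions: 16-bit weights (2 bytes per parameter), decimal gigabytes,
# and 80 GB for the H100 variant compared here (not stated in the article).
# KV cache and activations are ignored, so this is an optimistic lower bound.

GPU_MEMORY_GB = {"H100": 80, "H200": 141}  # H200 figure from the quote above
BYTES_PER_PARAM = 2  # FP16 / BF16


def weights_gb(params_billions: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Memory for the weights alone, in GB: billions of params x bytes per param."""
    return params_billions * bytes_per_param


def weights_fit(params_billions: float, gpu: str) -> bool:
    """True if the weights alone fit within the named GPU's memory."""
    return weights_gb(params_billions) <= GPU_MEMORY_GB[gpu]


for size in (7, 13, 70):  # Llama 2 sizes, in billions of parameters
    fits = {gpu: weights_fit(size, gpu) for gpu in GPU_MEMORY_GB}
    print(f"Llama 2 {size}B: ~{weights_gb(size):.0f} GB of weights, fits on one GPU: {fits}")
```

On these assumptions, the 70B model’s ~140 GB of weights would squeeze onto a single H200 but not a single H100, though with almost no room left over for the KV cache; in practice, large models are still often split across several GPUs or quantized to fewer bytes per parameter.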

All this underlines that the AI industry continues to invest tens of billions of dollars and more in next-generation AI software and hardware. Also expected soon is Google’s multimodal Gemini model, which should compete with OpenAI’s GPT-4, and potentially more. The other point to underline is that these are turbo-charged, Moore’s Law-style improvements, to be delivered over the next 2-3 years.

And given that LLM AI works off of unending pools of data, there is much about how it does what it does that even the top AI developers don’t understand, as AI software fuses with traditional software. All of that points to potentially unexpected levels of AI capabilities, as Sam Altman highlighted above.

Both unnerving and exciting for the times ahead. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)