AI: OpenAI, from GPT to GPTs

...from one to many: 'mainframe AI' to 'personal AI' computing

OpenAI CEO Sam Altman did his version of Oprah today, giving everyone a GPT. He added an ‘s’ to GPT, the company’s foundation product. They’re GPTs now. And everyone gets a GPT.

One year after his company’s ‘ChatGPT moment’, at the company’s first Developer Conference, he changed the world of Foundation LLM AI from a monolithic AI in the cloud to one that gives everyone a shot at their own GPT. And his partner Satya Nadella, the CEO of Microsoft, came on stage at the keynote to promise that his company would do everything to make sure OpenAI had all the ‘Compute’ GPU infrastructure it needed so that everyone could drive around in their own GPTs. A good direction for Sam Altman and his company.

From one to many in one fell swoop. As The Verge explained:

“With the release of ChatGPT one year ago, OpenAI introduced the world to the idea of an AI chatbot that can seemingly do anything. Now, the company is releasing a platform for making custom versions of ChatGPT for specific use cases — no coding required.”

“In the coming weeks, these AI agents, which OpenAI is calling GPTs, will be accessible through the GPT Store. Details about how the store will look and work are scarce for now, though OpenAI is promising to eventually pay creators an unspecified amount based on how much their GPTs are used. GPTs will be available to paying ChatGPT Plus subscribers and OpenAI enterprise customers, who can make internal-only GPTs for their employees.”

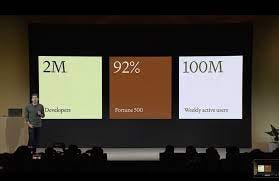

“Custom GPTs were announced Monday at DevDay, OpenAI’s first-ever developer conference in San Francisco, where the company also announced a turbocharged, cheaper GPT-4, lower prices for developers using its models in their apps, and the news that ChatGPT has reached a staggering 100 million weekly users.”

All measured in months going forward, instead of the five-plus decades the tech industry has taken to date. From mainframes to PCs to the internet to smartphones, and now likely a pathway to tens of billions of AIs for eight billion humans. Everyone gets an AI.

As Ars Technica aptly puts it, “OpenAI introduces custom AI Assistants called “GPTs” that play different roles”:

“Users can build and share custom-defined roles—from math mentor to sticker designer.”

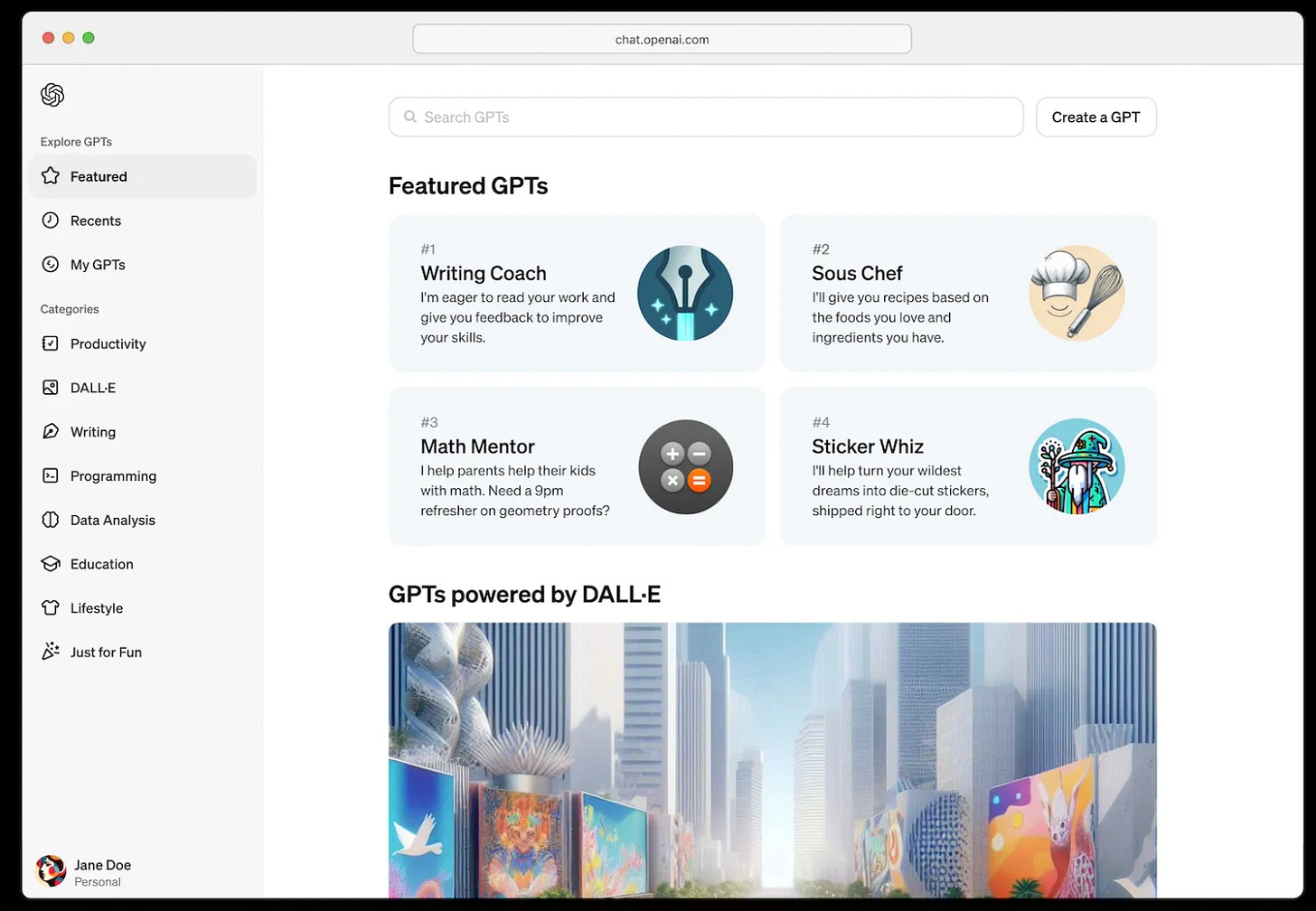

“For example, in a screenshot of the GPTs interface provided by OpenAI, the upcoming GPT Store shows custom AI assistants called "Writing Coach," "Sous Chef," "Math Mentor," and "Sticker Whiz" available for selection. The screenshot describes the GPTs as assistants designed to help with writing feedback, recipes, homework help, and turning your ideas into die-cut stickers.”

So GPTs are custom versions of ChatGPT that combine instructions, extended knowledge/data from customers (kept private), and actions tailored to specific purposes, whether the customers are consumers or businesses. Examples demoed on stage by customers included a Code.org GPT to help teachers explain coding concepts, a Canva GPT to generate designs from natural language prompts, and a Zapier GPT to automate workflows across apps. GPTs customized on the fly make ChatGPT more customizable, controllable and capable. Users can publish GPTs publicly or keep them private.

The other key announcement was that OpenAI will launch a GPT Store and share revenue with creators of the most useful GPTs. Visions of the Apple App Store and its tens of billions in revenues in win-win configurations shimmer in the distance. As TechCrunch explains it:

“An App Store for AI”

“Perhaps the most impactful announcement of all today was OpenAI’s GPT Store, which will be the platform on which these GPTs will be distributed and, eventually, monetized.

“Later this month, we’re launching the GPT Store, featuring creations by verified builders. Once in the store, GPTs become searchable and may climb the leaderboards. We will also spotlight the most useful and delightful GPTs we come across in categories like productivity, education, and “just for fun”. In the coming months, you’ll also be able to earn money based on how many people are using your GPT.”

“Sound familiar? The App Store model has proven unbelievably lucrative for Apple, so it should come as no surprise that OpenAI is attempting to replicate it here. Not only will GPTs be hosted and developed on OpenAI platforms, but they will also be promoted and evaluated.”

“We’re going to pay people who make the most used and most useful GPTs with a portion of our revenue,” and they’re “excited to share more information soon,” Altman said.”

There were many other items of note at the OpenAI developer day besides the GPTs and GPT Store. To summarize, the main announcements fell into six key areas: the GPTs and GPT Store discussed above, the Assistants API, the new multimodal GPT-4 Turbo model (succeeding GPT-4), lowered pricing on GPT services, copyright indemnification for customers, and a re-emphasized partnership with Microsoft.

The new Assistants API makes it easier to build customized AI assistants. It handles conversation state and offers built-in retrieval, a code interpreter, and improved function calling. All good things that let the AI remember customer needs and requirements on an ongoing basis. This greatly simplifies creating advanced assistants along the lines of Shopify Sidekick and Snap's My AI, both featured as examples at the event. Key features include persistent threads, invoking multiple functions together, guaranteed JSON outputs, and visibility into the assistant's steps. All features at a Developer Day conference to make Developers’ Days sing.
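To make ‘persistent threads’ and ‘invoking multiple functions together’ a bit more concrete, here is a toy sketch in plain Python. It does not use OpenAI's library or mirror the real Assistants API; the names `Thread`, `ToyAssistant`, and the two lambda tools are all hypothetical, and a simple keyword match stands in for the model's decision about which functions to call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Message:
    role: str      # "user" or "assistant"
    content: str


@dataclass
class Thread:
    """Hypothetical stand-in for a persistent conversation thread."""
    messages: List[Message] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append(Message(role, content))


@dataclass
class ToyAssistant:
    """Toy assistant: fixed instructions plus a registry of callable tools."""
    instructions: str
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def run(self, thread: Thread, user_text: str) -> str:
        thread.add("user", user_text)
        # A real model would decide which functions to call. Here we simply
        # call every registered tool whose name appears in the user's text.
        called = [name for name in self.tools if name in user_text.lower()]
        results = [f"{name}: {self.tools[name](user_text)}" for name in called]
        reply = "; ".join(results) if results else "no tool needed"
        thread.add("assistant", reply)
        return reply


# Hypothetical tools that return canned answers.
assistant = ToyAssistant(
    instructions="Help shoppers with orders.",
    tools={
        "weather": lambda text: "sunny",
        "order": lambda text: "shipped",
    },
)

thread = Thread()
first_reply = assistant.run(thread, "Check my order and the weather")
second_reply = assistant.run(thread, "Thanks!")
```

The point of the sketch is only that the thread, not the developer's app, carries the conversation history from one turn to the next, and that a single turn can trigger more than one function.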

GPT-4 Turbo is OpenAI's latest model, launched today as an upgrade to GPT-4. It increases context length to 128,000 tokens (making much longer customer questions possible), improves control and reproducibility, updates knowledge through April 2023 (from September 2021 earlier), adds modalities like image/audio, enables customization, and doubles tokens-per-minute rate limits. Oh, and OpenAI also cuts pricing significantly, by 2.75x compared to GPT-4.
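A quick back-of-the-envelope check shows where a blended 2.75x figure can come from. The per-token prices below are not in this piece. They are assumptions based on the list prices as I recall them from the announcement (GPT-4 at 3 cents input and 6 cents output per 1,000 tokens, GPT-4 Turbo at 1 cent and 3 cents), and the 9:1 input-to-output mix is purely illustrative.

```python
# Assumed list prices in US cents per 1,000 tokens (not stated in the article).
GPT4_INPUT_CENTS, GPT4_OUTPUT_CENTS = 3, 6
TURBO_INPUT_CENTS, TURBO_OUTPUT_CENTS = 1, 3


def blended_savings(input_tokens: int, output_tokens: int) -> float:
    """Return how many times cheaper GPT-4 Turbo is for a given token mix."""
    # Both costs share the same units, so the per-1,000 scaling cancels
    # out of the ratio and can be skipped.
    old_cost = input_tokens * GPT4_INPUT_CENTS + output_tokens * GPT4_OUTPUT_CENTS
    new_cost = input_tokens * TURBO_INPUT_CENTS + output_tokens * TURBO_OUTPUT_CENTS
    return old_cost / new_cost


# Illustrative workload: nine input tokens for every output token.
typical_mix = blended_savings(9_000, 1_000)
input_only = blended_savings(1_000, 0)
output_only = blended_savings(0, 1_000)
```

Under these assumed prices, an input-only workload comes out 3x cheaper, an output-only workload 2x cheaper, and the 9:1 mix lands on 2.75x, so the headline number depends on how prompt-heavy a customer's usage is.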

Then, as mentioned before, Microsoft CEO Satya Nadella joined to announce an expanded partnership. Microsoft aims to provide the best infrastructure for training and inference so OpenAI can build the best models. Microsoft itself wants to leverage OpenAI APIs as a developer to build products like GitHub Copilot and of course Copilot for Office 365 and Windows itself. Copilot is the next Windows for Microsoft if things work out right. The OpenAI/Microsoft partnership is grounded in enabling broad access to AI while of course ensuring safety and reliability.

ChatGPT now runs on GPT-4 Turbo with the latest capabilities. Its knowledge cutoff will also continue to be updated. OpenAI believes gradual deployment of more capable AI agents is important for managing societal impacts. GPTs and the Assistants API represent initial steps toward the future of AI agents. And the AIs will likely get an AI. All of the above are OpenAI’s version of AI ‘Smart Agents’, compared to Meta’s celebrity-infused versions and Elon’s ‘snarky and saucy’ ‘Grok’ for xAI.

From a model of mainframe computing to a PC on every desk and a smartphone in every hand. From one monolithic ‘Wizard of Oz’ to answer all questions to potentially millions of ‘mini-Oz’ apps to answer any ongoing questions of tens of millions of users. From mainframe service center computing to Lotus 1-2-3 in less than a year, instead of the decades the tech industry actually took for the last few waves. From mainframe to personal computing to computing personalized.

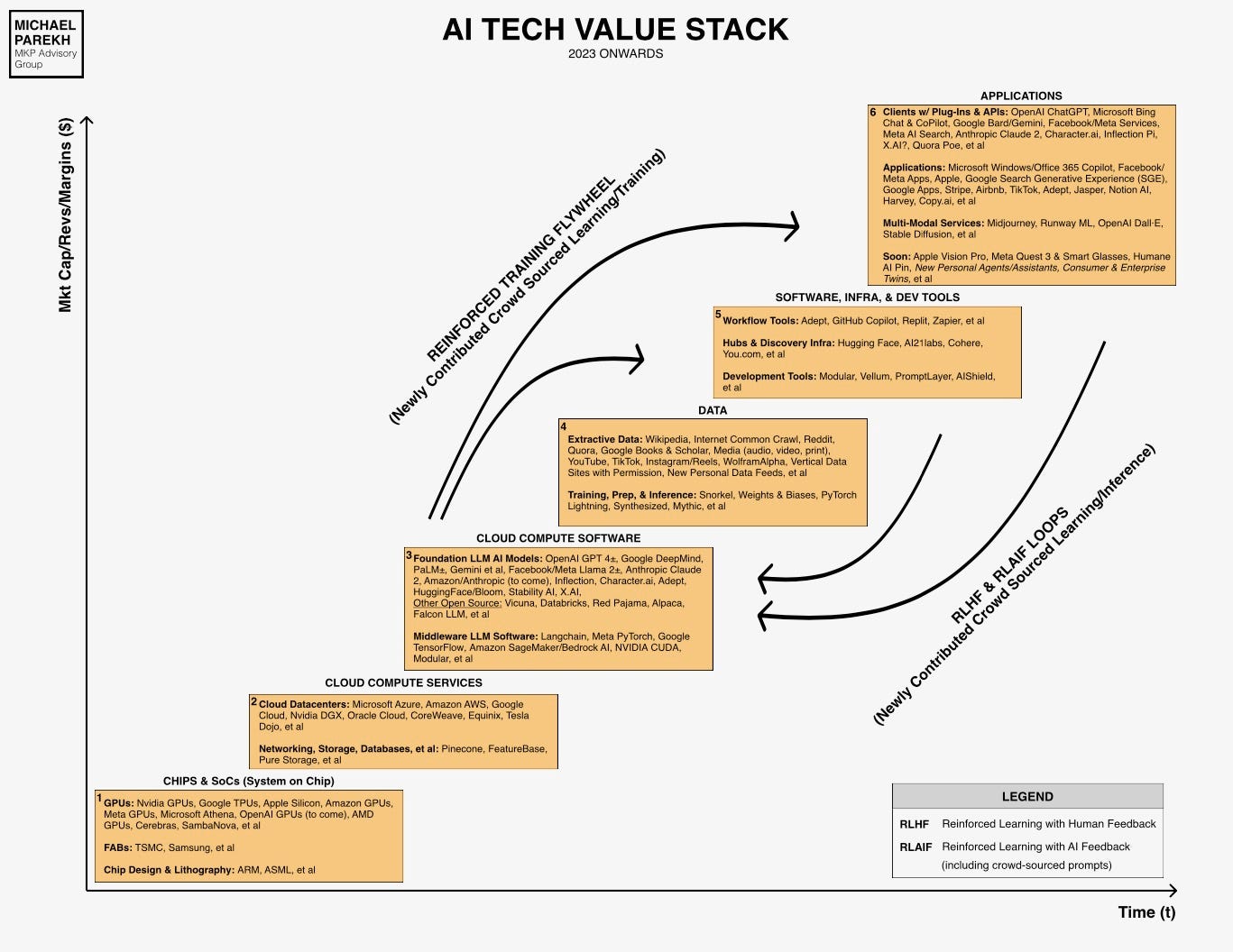

Because it’s not just OpenAI. The initiatives announced today, if successful, will likely be emulated by every Foundation LLM AI company already frenetically investing billions in spooling up multimodal models in the blue sky of AI possibilities.

Other than that it was a relatively prosaic Developer Day by a big tech company.

In hindsight we’ll likely see this day as accelerating the AI Tech Wave, and as an attempt at least to leverage the key lessons of the past waves: operating system and application platforms (PCs: Windows/Office), aggregation platforms (Internet/Social Media: centralized portals), and controlled, organized ‘app stores’ (smartphones: iPhone/Android App stores). All potentially worth billions over time.

In conclusion, OpenAI made significant progress in democratizing access to advanced AI through customizable GPTs, simplified assistants via the API, and an upgraded foundation model, all leveraged by its Microsoft partnership. A serious focus on ‘responsible development’ remains central as AI capabilities advance. OpenAI is taking Foundation LLM AI from the era of ‘Compute’ data center ‘mainframes’ to true personalized computing. From GPT to GPTs. Everyone gets a GPT.

And the rest of the industry will likely follow with their AIs. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)