With Microsoft, Google, and Meta all having ties to BIG Foundation LLM AI models, the question has been whether Amazon and Apple needed one too. Remember that Amazon AWS hosts lots of traditional and AI computing services of all types, and sells access to them to businesses and users worldwide, large or small. So for the most part, they don’t need to ‘roll their own’, especially since it takes billions to build, train and scale these models, both in terms of the hardware and the software ‘compute’.

That changed today, as Amazon AWS put a good number of chips on a particular LLM AI company, Anthropic, the largest private purveyor of same after OpenAI, which is of course hitched up with Microsoft. As Stephanie Palazzolo of The Information explains:

“Amazon’s ‘Switzerland’ Days Are Over:”

“Looks like Amazon’s finally made its AI bet.”

“On Monday, model developer Anthropic announced that it had raised as much as $4 billion from Amazon. As part of the deal, Amazon Web Services (AWS) will become Anthropic’s primary cloud provider, and Anthropic will train and deploy its future models on AWS training and inference specialized chips, Trainium and Inferentia.”

“What that means isn’t totally clear, however, since Google in February invested $400 million in Anthropic and announced it would be Anthropic’s ‘preferred’ cloud provider.”

Remember that Google already has a number of Foundation LLM AI models and products in house, with PaLM 2, Bard, and the upcoming Gemini. And it likely wants Anthropic to also support its own TPU (Tensor Processing Unit) AI chips, which compete directly with Nvidia’s market-share-hogging (70% plus globally) A100 and H100 GPU chips, which are on global allocation for at least the next couple of years. I wrote about how Nvidia is fast trying to take advantage of its supply position to ramp up its DGX AI GPU Cloud Compute via all the ‘Cloud Service Providers’ (CSPs), like Amazon AWS, Microsoft Azure, Google Cloud, Oracle Cloud, CoreWeave, and many others. (Listed in descending order of size, by the way.)

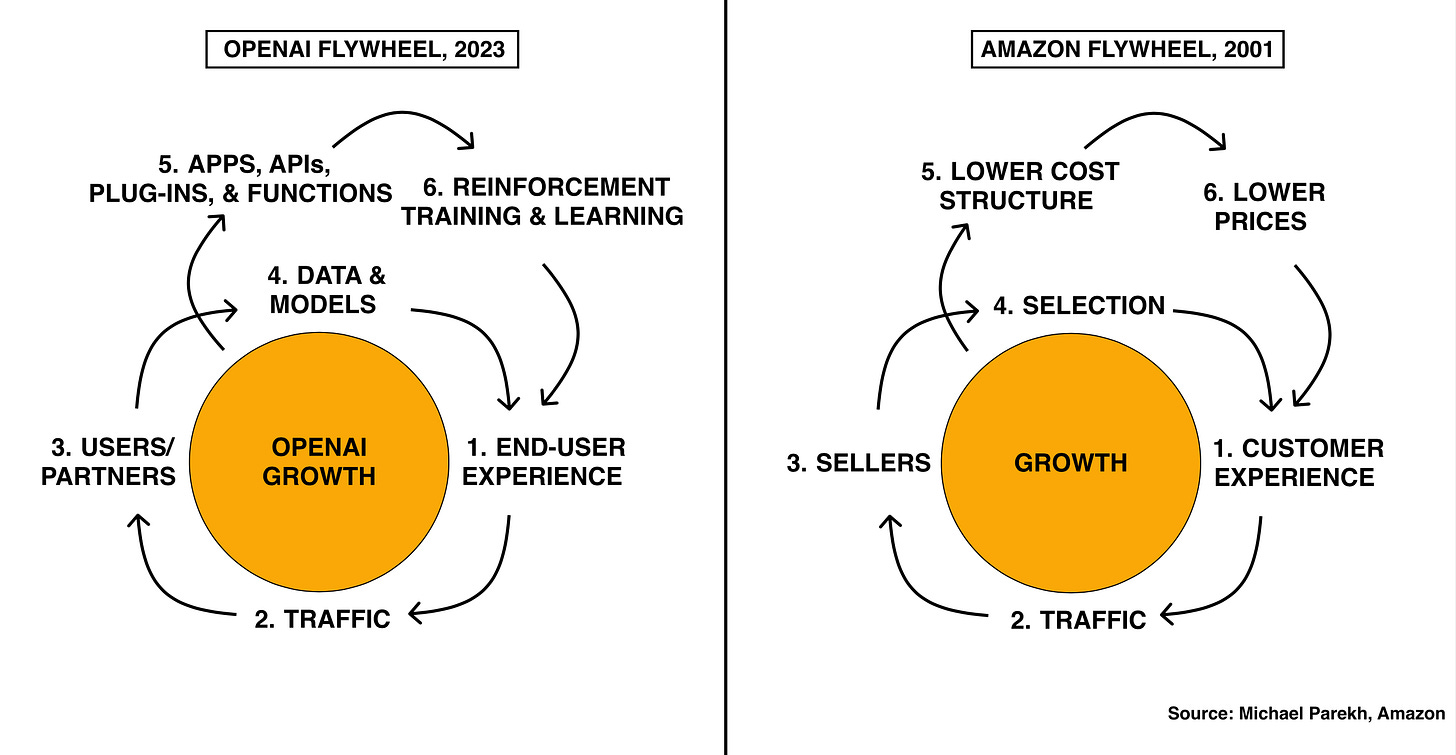

So Amazon making this bet on Anthropic’s LLM AI makes sense not in terms of ‘having their own LLM AI’, but in terms of backing a large provider that could potentially sway developers to support Amazon AWS AI hardware and software over Nvidia’s advantage with its free ‘CUDA’ and other AI software frameworks, which make it possible to use those LLM AI models in their applications. Swaying developer habits at scale is a big effort ahead for all the big tech companies. It’s a whole new ‘Flywheel’ for Amazon in the world of LLM AI models, which get so much better with reinforcement loops at scale. It puts them right up against what OpenAI/Microsoft and others are trying to do with their own AI Flywheels.

This is all a bit in the weeds for most folks. But the key thing to understand is that it’s an AI Infrastructure chess game in these early days of the AI Tech Wave, with the companies in the first three boxes of the chart trying to make sure the companies in boxes 5 and especially 6 are their customers and partners down the road, building their AI applications and services with the hardware and software provided vertically by the companies discussed thus far.

That potentially leaves Apple as the one big trillion-plus market cap tech company without a Foundation LLM AI of its own. But as I’ve written previously, they’ve been working on it in-house using Google AI tech, and they have an inherently different scale of AI opportunities in Box 6 above with their ‘Everything App iPhone’: their bottoms-up AI machine learning apps that focus on private and personal data, running ‘inference’ learning loops on over two billion local devices (iPhones, iPads, Macs, Watches, and soon Vision Pro headsets). Boy, do they have AI flywheels to build at scale ahead. Much more on that to come in future posts.

But don’t be surprised if Apple does put some chips into an outside Foundation LLM AI company, private or public, to accelerate AI training on a large Foundation LLM AI model, ESPECIALLY using their game-changing Apple Silicon. That big chip head start, especially with privacy-focused GPU cores on devices at the edge, is something Apple has executed well on, building on chip architecture licensed from Arm Holdings, which recently went public.

It’s a long series of games within games, races within races ahead. And the moves are coming fast and furious. We’re barely at the beginning of the beginning. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)