AI: Amazon AWS, AI underdog

...relatively under-appreciated

Pick up any mainstream business publication of late, and if they’re writing about AI, the spotlight is invariably on the usual suspects with the highest buzz and drama:

OpenAI/Microsoft, the ‘ChatGPT’ guys; Google, whom they ‘threaten’; and Meta, driven by Zuck’s laser focus on Open Source AI, AI-driven Threads, and new ‘Smart AI Apps’ to come. Next up are Elon Musk’s ‘X-Twitter’ and, just launched from behind, xAI.

I’ve of course covered a lot of this in previous posts. And all of these companies have already been big beneficiaries of investor enthusiasm in both the private and public markets.

The one company that gets relatively less media focus in the AI context is Amazon AWS. This piece in The Information highlights its relative ‘underdog’ positioning:

“Amazon Web Services, the king of renting cloud servers, is facing an unusually large amount of pressure. Its growth and enviable profit margins have been dropping, Microsoft and Google have moved faster—or opened their wallets—to capture more business from artificial intelligence developers (TBD on whether it will amount to much), and Nvidia is propping up more cloud-provider startups than we can keep track of.

It’s no wonder AWS CEO Adam Selipsky last week came out swinging in an interview in response to widespread perceptions his company is behind in the generative AI race.”

More on that interview in a minute. But it’s also worth underlining Nvidia’s core role as the underlying enabler of LLM AI. They of course make all of the above possible. They’re the market’s newest trillion dollar ‘Picks and Shovels’ favorite, whose GPU chips are the critical components that drive over 70% of the LLM AI infrastructure out there, and likely will do so well into 2025. Without Nvidia’s AI GPU infrastructure, ‘A and I’ are just two letters in the alphabet.

As I outlined in my second half 2023 preview, the next few quarters are going to see billions in capital expenditure (aka ‘capex’) by a host of Big Tech companies and Unicorn ‘AI Native’ startups, led by Meta, Microsoft, Google and Amazon earmarking billions to take the scarce GPUs and build Cloud ‘Compute’ data centers. All in anticipation of billions in potential demand for AI apps and services in this AI Tech wave, over the next three years and beyond.

We got updates on this ‘Capex’ spend in the just-released quarterly results of the Big Tech players, as The Information highlights:

“Microsoft’s capital expenditures—primarily purchases of hardware and software—in the second quarter exceeded $10.7 billion, up 23% from the same quarter a year earlier and the highest quarterly capex in the company’s history. Chief Financial Officer Amy Hood told investors last week that the number will climb even higher in each of the next four quarters.”

“Microsoft’s spiking capex illustrates one cost of the AI boom, as tech giants and startups alike spend heavily to meet surging demand. Google Chief Financial Officer Ruth Porat told investors last week that the company’s capex will rise in the second half of 2023 and into 2024, with the main expense being servers, including for AI. At Oracle, which is smaller than the other cloud providers but has attracted several AI startups as customers, capex nearly doubled in the fiscal year ended May 31 after doubling in the prior fiscal year.”

As the piece goes on to explain, we saw a similar Capex binge ahead of anticipated customer revenues in the earlier Cloud Tech wave, more than a decade ago:

“But some observers say the current capex build-out mirrors the first wave of cloud computing more than a decade ago, when Amazon, Google and Microsoft invested heavily in building server capacity amid rising cloud demand.”

“Those investments paid off, despite some early concerns. “It’s a capex circus right now, and this one has a lot of the same worries and tremors as the first cloud build-out,” said Sheila Gulati, a managing director at Seattle venture capital firm Tola Capital who spent more than a decade as a Microsoft cloud marketing executive. “The question is whether to worry about that crazy capex spend. Personally, I don’t, because the demand is just incredibly large. When you see infinite demand, you lean in and go get supply.””

We’ll get color from Amazon on its AWS capex, as they report their results after market close today. It’s important to note that AWS is the biggest of the three big Cloud Data center companies, ahead of Microsoft and Google.

It’s easy to forget that AWS is twice as large as Microsoft Azure, which was big at $34 billion in 2022. Comparable numbers for 2023 are likely >$40 billion for Microsoft vs >$85 billion for AWS. And remember that a good chunk of that Microsoft Azure revenue is the dollars they ‘invested’ in their $13 plus billion partnership with OpenAI. It’s OpenAI ‘boomeranging’ that money right back to build out the AI Compute infrastructure for its GPT4 and beyond Foundation LLM AI models.

Google Cloud was a distant third at <$27 billion in 2022. Oracle is further back from Google, and dozens of others bring up the long tail of data center providers.
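To put those revenue figures side by side, here is a rough back-of-the-envelope sketch in Python. It treats the ‘>’ and ‘<’ bounds quoted above as point values, which is an assumption made purely for illustration, and the variable names are my own.

```python
# Revenue figures in billions of USD, as quoted in the text above.
# Assumption: ">" and "<" bounds are treated as exact values for illustration.
azure_2022 = 34.0         # Microsoft Azure, 2022
google_cloud_2022 = 27.0  # Google Cloud, 2022 (quoted as "<$27 billion")
azure_2023_est = 40.0     # Microsoft, 2023 estimate (quoted as ">$40 billion")
aws_2023_est = 85.0       # AWS, 2023 estimate (quoted as ">$85 billion")

# How many times larger is AWS than Azure on the 2023 estimates?
aws_vs_azure_2023 = aws_2023_est / azure_2023_est

# How far ahead of Google Cloud was Azure in 2022?
azure_vs_google_2022 = azure_2022 / google_cloud_2022

print(f"AWS vs Azure (2023 est.): {aws_vs_azure_2023:.2f}x")
print(f"Azure vs Google Cloud (2022): {azure_vs_google_2022:.2f}x")
```

On these rounded numbers, AWS comes out a bit more than twice the size of Azure, consistent with the ‘twice as large’ framing above.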

As I said at the beginning, a lot of media attention on the AI front is directed away from Amazon, given all the headline-grabbing activity outlined above. And yet the Amazon AWS folks are really doing a lot of things right on the AI front.

Start with AWS being the largest cloud datacenter provider of LLM AI models, closed or open, large or small, along with the supporting software infrastructure and database options. They’re going to be an important distribution hub for Meta’s market-leading open source Llama 2 LLM AI models, along with a host of other AI software infrastructure. Here is some context on that from AWS CEO Adam Selipsky a few weeks ago in the FT:

“Amazon is deeply invested in AI and machine learning and has been for a long time. If you back way up, Amazon’s been doing AI for 25 years: the personalisation on the Amazon website circa 1998 was AI. It was just that nobody called it AI at that time. But we are taking a little bit of a different approach to other companies.”

“We are squarely focused in AWS on what AWS customers need: on business and organizational applications. I think a couple of other companies have been building — or talking about — consumer-facing chat interfaces, which is fine. It’s super interesting and will absolutely have a use. But it’s not what a significant enterprise needs. We’re really focused on building what AWS customers need.”

The whole interview is worth reading. I particularly agree where he underlines that we’re just in the opening yards of a marathon when it comes to AI. And Amazon has been doing AI since before AI was cool. They’ve pioneered and created the largest API-driven cloud business, one that meters and collects billions from businesses large and small using cloud infrastructure to run their businesses.

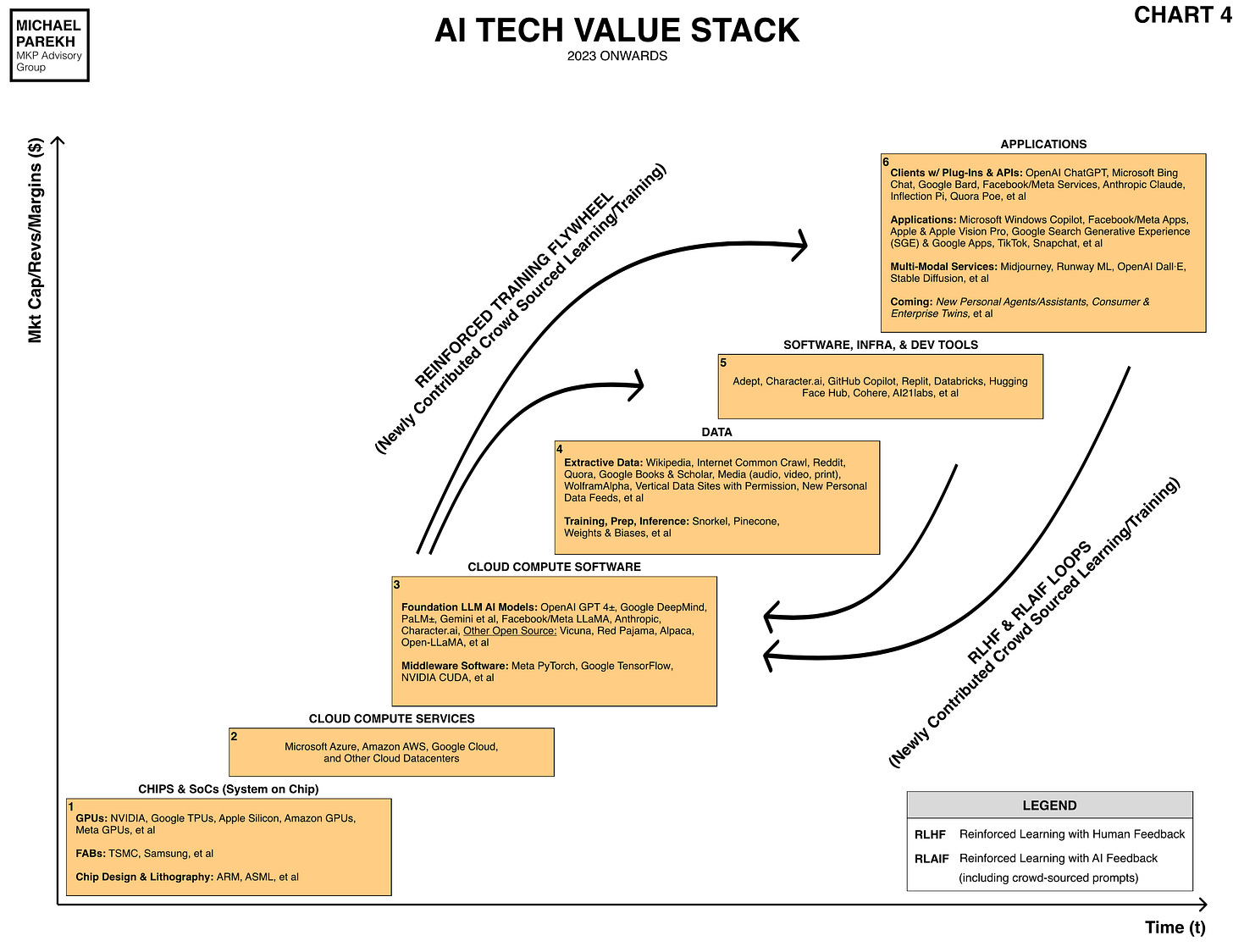

AI is shaping up to be another business driven heavily by API metering, with far greater ‘Reinforcement Learning’ user loops, as AI software ‘eats’ traditional software. I’ve outlined these dynamics in this piece here. And Amazon has this metered API model available at scale at the beginning stages of this AI wave.
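For readers who want a feel for what a ‘metered API’ business means mechanically, here is a minimal sketch in Python: usage is recorded per customer, then multiplied by a unit price to produce an invoice. The `UsageRecord` type, the customer names, and the price of 2 cents per unit are all hypothetical, chosen only for illustration. This is not actual AWS pricing or any real AWS API.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical price: 2 cents per metered unit (e.g. one API call).
# Integer cents are used to avoid floating-point rounding in the totals.
PRICE_CENTS_PER_UNIT = 2


@dataclass(frozen=True)
class UsageRecord:
    """One metered event: which customer used how many units."""
    customer: str
    units: int


def bill_in_cents(records, price_cents_per_unit=PRICE_CENTS_PER_UNIT):
    """Sum each customer's metered usage and convert it to a charge in cents."""
    totals = defaultdict(int)
    for record in records:
        totals[record.customer] += record.units * price_cents_per_unit
    return dict(totals)


# Hypothetical usage log: a small startup and a large enterprise.
usage = [
    UsageRecord("startup", 1_000),
    UsageRecord("enterprise", 250_000),
    UsageRecord("startup", 500),
]

invoices = bill_in_cents(usage)
for customer, cents in sorted(invoices.items()):
    print(f"{customer}: ${cents / 100:,.2f}")
```

The point of the sketch is that the same metering logic serves customers large and small; revenue simply scales with usage, which is why the model carries over so naturally from cloud to AI.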

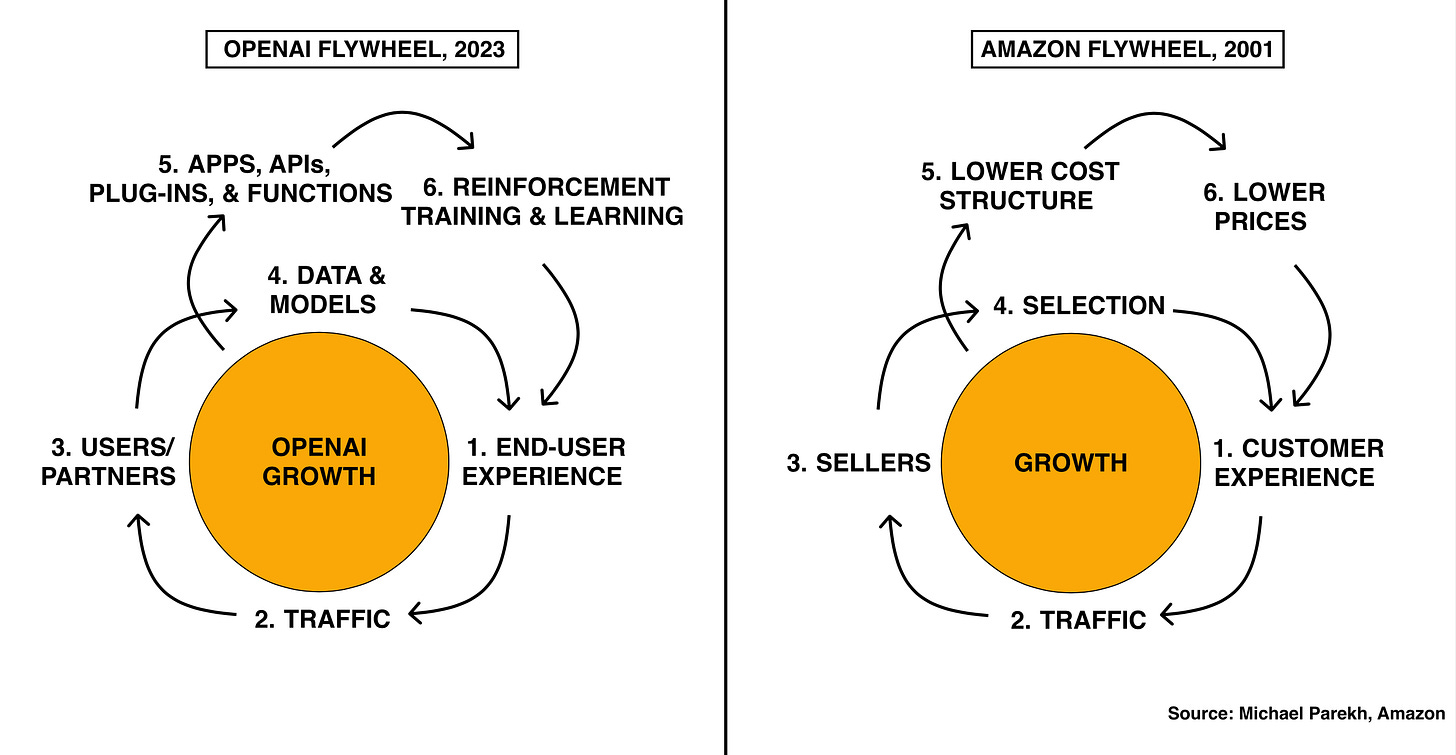

Also worth mentioning is that Amazon was the first big tech company to show how to master the Tech Flywheel that’s now being implemented at scale by OpenAI, and many ‘AI Native’ companies to follow, as I’ve discussed at length. They’ve been executing a modified version of the larger Amazon Flywheel at Amazon AWS, for over a decade now.

In doing so, they created the leading Cloud services business of its type in the world. Just look at the OpenAI Flywheel (barely getting started in 2023) below, and imagine it at scale for Amazon AWS, AI version, for customers large and small worldwide. I’ll have a lot more to say here in posts to come.

So overall, I think Amazon AWS is an under-appreciated beneficiary of the AI ‘Picks and Shovels’ race, beyond Nvidia in GPUs. They’re likely one of the best positioned globally as businesses start to figure out how to implement LLM AI technologies in the years ahead, be they open or closed, large or small, centralized or at the Edge, in their own networks and devices, for the customer’s own applications, with the needed levels of data control, privacy, and security.

So let’s keep a close eye on Amazon as well in the AI race, along with the other usual suspects. And the potential market at the moment is big enough for all. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here).
