OpenAI officially opened its doors to Enterprise business with the introduction of ‘ChatGPT Enterprise’. Its invitation to the trillion-dollar-plus global enterprise market is to:

“Get enterprise-grade security & privacy and the most powerful version of ChatGPT”.

This development is notable alongside OpenAI passing a $1 billion annual revenue pace, as enterprises large and small boost AI spending worldwide. From The Information today:

“OpenAI is currently on pace to generate more than $1 billion in revenue over the next 12 months from the sale of artificial intelligence software and the computing capacity that powers it. That’s far ahead of revenue projections the company previously shared with its shareholders, according to a person with direct knowledge of the situation.”

“The billion-dollar revenue figure implies that the Microsoft-backed company, which was valued on paper at $27 billion when investors bought stock from existing shareholders earlier this year, is generating more than $80 million in revenue per month. OpenAI generated just $28 million in revenue last year before it started charging for its groundbreaking chatbot, ChatGPT.”

“The rapid growth in revenue suggests app developers and companies—including secretive ones like Jane Street, a Wall Street firm—are increasingly finding ways to use OpenAI’s conversational text technology to make money or save on costs. Microsoft, Google and countless other businesses trying to make money from the same technology are closely watching OpenAI’s growth.”

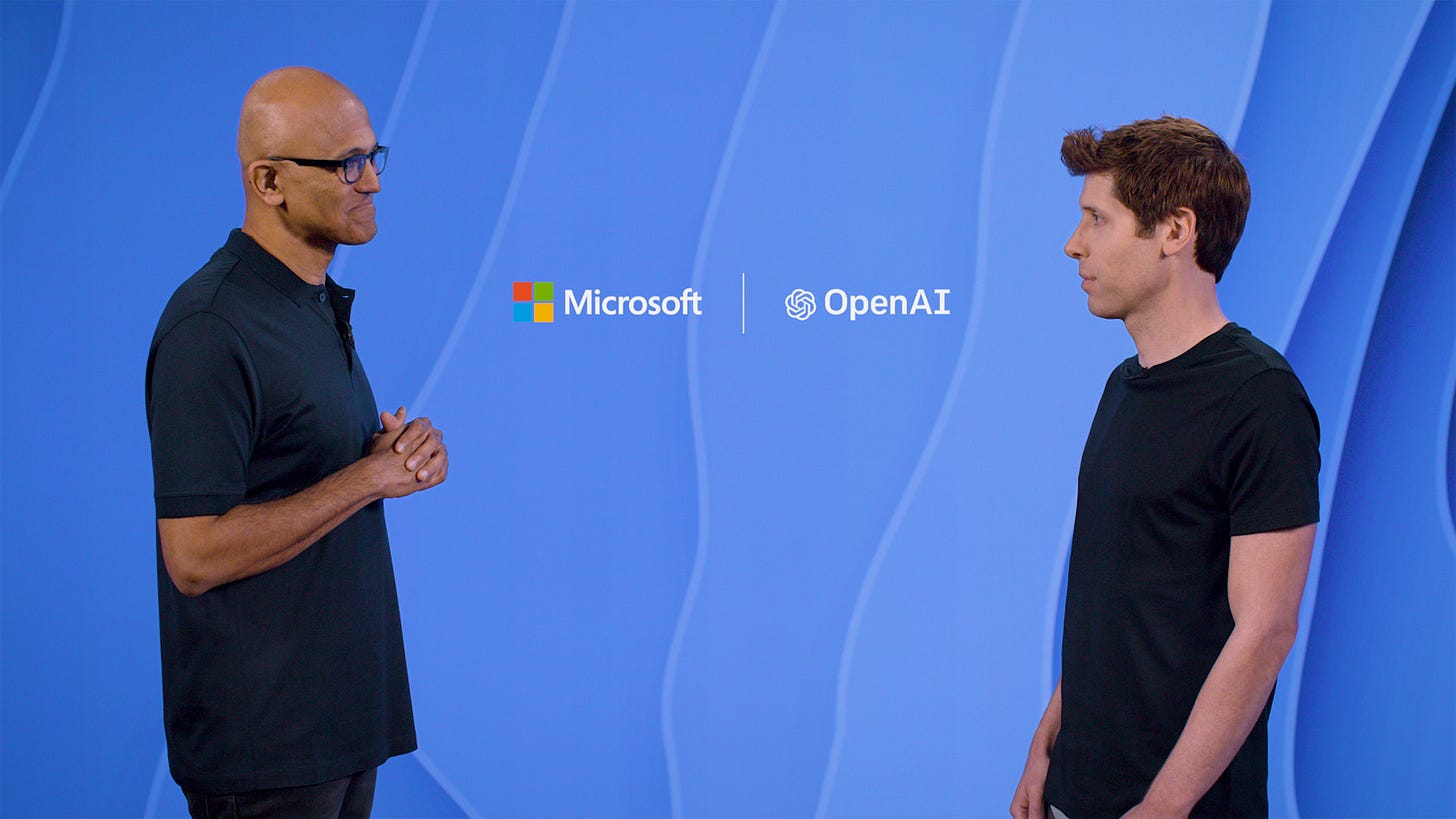

Speaking of ‘sibling’ rivalry, the optics of the historic $13 billion-plus partnership between OpenAI and Microsoft, expanded less than a year ago, are of course those of two brothers chasing the same prospective bride.

As the Wall Street Journal put it directly: “OpenAI Launches Business Version of ChatGPT That Competes With Microsoft”:

“ChatGPT Enterprise, unveiled on Monday, is tailored to help employees learn new concepts or skills such as coding and to analyze internal corporate data, OpenAI’s chief operating officer, Brad Lightcap, said in an interview. The product is built on OpenAI’s most advanced language model, GPT-4, and the company says it will run up to twice as quickly as the paid version of ChatGPT.”

“ChatGPT Enterprise also is designed to address corporate customers’ concerns about protecting proprietary data. OpenAI won’t use data from customers of the product for training or to improve its own services, Lightcap said.”

And then the elephant in the room:

“The launch comes about six weeks after Microsoft announced Bing Chat Enterprise, which is also built with OpenAI’s technology. Microsoft says that its tool can summarize text or generate answers similarly to ChatGPT, and touts that it will keep data from corporate users private. Microsoft is offering Bing Chat Enterprise as a feature for some customers of Microsoft 365, its popular workplace software that includes Word and Excel, and is part of an effort to deploy ChatGPT-based products for its vast population of corporate customers.”

“Microsoft has invested billions of dollars in OpenAI and taken a 49% stake in the startup to get early access to its generative-AI technology. Leaders of both companies have praised the partnership, but there has also been occasional conflict and confusion between them.”

It’s important to note that OpenAI, with its few hundred employees in total, and Microsoft, with tens of thousands in enterprise sales alone, are more likely to work together on the mega enterprise customer opportunity than to compete directly. And despite areas where sales teams may come to loggerheads, the multi-decade, close top-down relationship between the two CEOs, OpenAI’s Sam Altman and Microsoft’s Satya Nadella, will likely smooth over issues as needed.

And the global market, both enterprise and consumer, is for now more than big enough for both partners, as well as for big and small entrants like Google, Anthropic, and many others from around the world. This AI Tech Wave is in its earliest days, as I’ve outlined before.

The more notable elements here are the improvements needed to make the underlying products and services themselves enterprise-grade. There is lots of wood to chop here for everyone at this earliest of stages, not least on safety and security.

Notably, Google Cloud countered with its own enterprise AI assistant products this week as well, going up against Microsoft Azure and Amazon AWS, the number two and number one cloud providers, respectively:

“Google is selling broad access to its most powerful artificial-intelligence technology for the first time as it races to make up ground in the lucrative cloud-software market.”

“Thomas Kurian, chief executive officer of Google Cloud, outlined the offerings to thousands of customers at Google’s annual cloud conference in San Francisco on Tuesday, while making widely available a number of tools that can help draft emails or summarize lengthy documents stored in the cloud.”

“The new products will intensify competition with Microsoft, the second-largest cloud provider, and OpenAI, the ChatGPT creator it has backed with billions of dollars. Both companies already sell access to the latest AI technology behind the popular ChatGPT bot, but Google’s launch on Tuesday puts it ahead of Microsoft in making AI-powered office software easily available for all customers.”

Not to mention Amazon AWS, the number one cloud provider to businesses worldwide. They’re all vying for the global AI-driven enterprise market.

A wild card on the data center side, besides Amazon AWS, Microsoft Azure, and Google Cloud, is the GPU chip kingmaker Nvidia itself, which just reported results that surprised even the bulls on Wall Street. Its stock is up over 230% in 2023, the best performer in the S&P 500, ahead of second-best Meta, up almost 150%.

With its 70%-plus share of the AI GPU market, along with its supporting networking and CUDA AI infrastructure software, Nvidia is increasingly building data center revenue streams with partners like CoreWeave, Microsoft, Amazon, and, just this week, Google as well. It’s a hybrid AI data center model being built across vendors at a time of global GPU shortages.

The only other company with direct flexibility in AI chip processing capacity, through Taiwan’s TSMC and others, is Google itself, with its TPU family of AI data center hardware. That is also a trend I am watching closely, as competitors scramble globally to supply GPU chips at scale. As I’ve said before, without GPUs, this generation of LLM ‘AI’ just amounts to two non-consecutive letters in the alphabet.

Separately, Google for now seems to be matching Microsoft’s anticipated pricing for Microsoft 365 Copilot:

“The company also announced Tuesday the general availability of a suite of AI-powered tools for corporate Gmail accounts and other workplace software products. The tools will cost an extra $30 a month per person, matching the price Microsoft will charge for a competing offering in its flagship 365 suite, which is still in testing with select businesses.”

The market is still working out how best to price AI add-ons, an area I’ve discussed at length. It’s important to note that despite the initial sticker shock at the opening prices, falling compute prices and a relatively competitive global dynamic will likely let the market find the best prices at scale.

We’ve discussed the opportunities for AI around Google Search/ChatGPT, the open source markets, and the consumer side at length, with coming innovations at scale around ‘Smart Agents’, ‘Digital Twins’, Video, and more. The Enterprise market is, of course, also part of the global opportunity. It’s good to see the main players putting their cards on the table and working through their partnerships. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)