AI: Google Gemini Almost Ready

...should hold its own against OpenAI/Microsoft and the rest

Google smartly unveiled Gemini today, its long-heralded, next-generation family of multimodal Foundation LLM AI models, even though the more advanced versions have been delayed into early next year. It's much-needed positioning for Google against OpenAI's and Microsoft's upcoming products and services based on GPT-4 Turbo, which both companies walked through at their respective developer conferences recently. First, some highlights of Google's Gemini rollout via the New York Times, with CEO Sundar Pichai and Google AI/DeepMind head Demis Hassabis leading the charge:

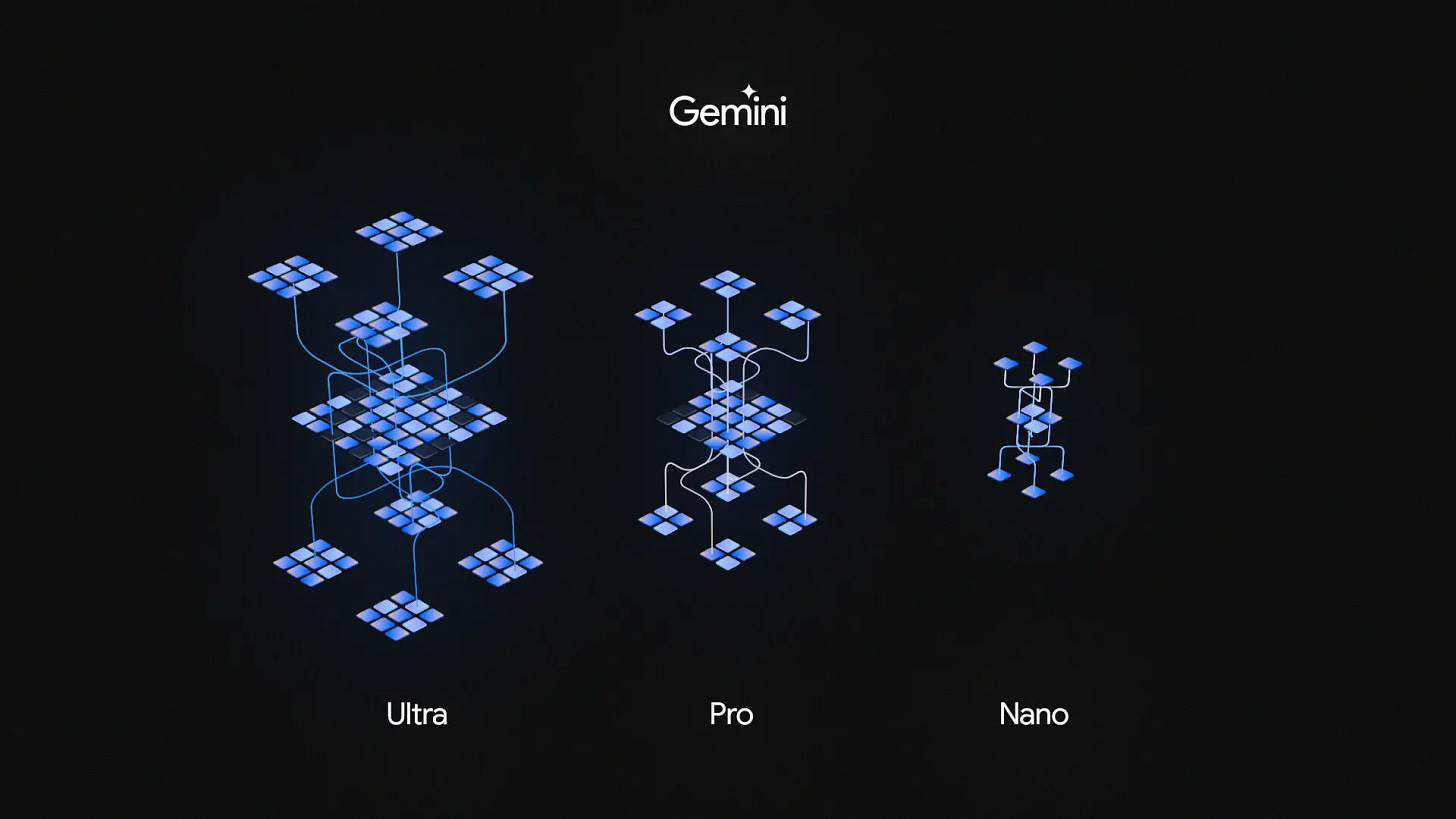

“Google has built three versions of Gemini with three different sets of skills. The largest, Ultra, is designed to tackle complex tasks and will debut next year. Pro, the mid-tier offering, will be rolled out to numerous Google services, starting Wednesday, with the Bard chatbot. Nano, the smallest version, will power some features on the Pixel 8 Pro smartphone, such as summarizing audio recordings and offering suggested text responses in WhatsApp starting Wednesday.”

“Gemini is what scientists call a large language model, or L.L.M., a complex mathematical system that can learn skills by analyzing vast amounts of data, including digital books, Wikipedia articles and online bulletin boards. By identifying patterns in all that text, an L.L.M. learns to generate text on its own. That means it can write term papers, generate computer code and even carry on a conversation.”

“With Gemini, Google has also trained the technology on digital images and sounds. It is what researchers call a “multimodal” system, meaning it can analyze and respond to both images and sounds. If you give it a math problem that includes lines, shapes and other images, for example, it can answer in much the way a high school student would.”

“That portion of the technology, however, will not be available to consumers until sometime next year. Google also acknowledged that like similar systems, Gemini is prone to mistakes. It can get facts wrong or even “hallucinate” — make stuff up.”

I continue to think Google will more than hold its own against OpenAI/Microsoft, Meta, Anthropic, and many others in this early stage of multimodal Foundation LLM AI services rolling out into next year. That is not least because of its formidable AI resources and capabilities, and a wide range of Search-driven products and services already used daily by billions of users. Also, as I outlined just a few days ago, both Google and OpenAI/Microsoft can win the AI race in their own ways.

Axios wraps up the highlights of today’s Gemini announcement with a clean summary and a rollout schedule that stretches into 2024:

“Google is announcing details around Gemini, the next version of its large language model that will power Bard and other products.”

“Why it matters: Google is eager to show it can keep up with rivals, especially OpenAI and Microsoft.”

“Driving the news: Gemini will come in three flavors: an Ultra version for the most demanding tasks, a Pro version it says is suited to a wide range of tasks and a Nano version that can run directly on mobile devices.”

“It's designed to be multimodal, recognizing video, images, text and voice at the same time. However, for now Gemini will only return results in either text or code.”

“Google is rolling out Gemini in stages. Starting today, Bard will use a fine-tuned version of Gemini Pro.”

“Gemini Nano will power the Google Pixel 8 Pro's new generative AI features, starting with a "summarize" feature in the voice recording app and a "smart reply" option in Gboard.”

“Beginning December 13, developers and enterprise customers will get access to Gemini Pro through either the Gemini API in Google AI Studio or Google Cloud Vertex AI.”

“Google also announced it is using a new, higher performance version of its Tensor chips — the Cloud TPU v5p — to train and serve its AI products.”

“Yes, but: Gemini Ultra is not being made available while the company completes additional safety and testing work.”

I’m particularly glad Google is focused on Gemini for local devices with Nano. As I’ve written before, ‘Small AI’ running on the billions of devices where users already are will be a key part of AI products and services that do not yet exist at scale. That holds despite the plethora of things that over 100 million users do every month with OpenAI’s ChatGPT/GPT-4.

It is very early days here, and there is a great deal of opportunity for all the companies with next-generation Foundation/Frontier LLM AI models, from OpenAI/Microsoft to Google, Anthropic (now partnered with both Amazon AND Google), and others. As Google CEO Sundar Pichai highlighted in response to a question from MIT Technology Review today:

“QUESTION: I imagine there was an enormous amount of pressure to get Gemini out the door. I’m curious what you learned by seeing what had happened with GPT-4’s release. What did you learn? What approaches changed in that time frame?”

“SUNDAR PICHAI: One thing, at least to me: it feels very far from a zero-sum game, right? Think about how profound the shift to AI is, and how early we are. There’s a world of opportunity ahead.”

“But to your specific question, it’s a rich field in which we are all progressing. There is a scientific component to it, there’s an academic component to it; being published a lot, seeing how models like GPT-4 work in the real world. We have learned from that. Safety is an important area. So in part with Gemini, there are safety techniques we have learned and improved on based on how models are working out in the real world.”

It’s important to underline that, as Sundar Pichai highlights for Google and its Bard-augmented services, it’s early days. The AI investments and innovation underway at companies large and small have barely figured out the basics of the technology, despite the capabilities and benchmarks on display today.

This includes both the TPU hardware and the Bard/Gemini software innovations that Google and others are working on this year. Importantly, these efforts fuse ongoing, concurrent AI work by the scientific, academic, and commercial communities globally. That makes the AI Tech Wave a bit different from prior tech waves around the PC, the Internet, and others. In those waves, most of the innovation centered on commercial efforts to bring products and services to market at scale, because the underlying technologies had mostly been baked and cooked in the scientific and academic worlds beforehand.

In that context, we are in the early innings of a very long series of races and games, as I’ve laid out in earlier pieces. Today’s Google Gemini announcements are but the next important move by a key player, Google, in an effort that has barely gotten underway and will unfold over the coming months. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)