AI: Microsoft, CoPilot for the Win

...re-branding Microsoft as the 'Age of CoPilots'

As described a few days ago, OpenAI founder/CEO Sam Altman took the audience’s breath away at his company’s first Developer conference last week, announcing the company’s move from GPT to ‘GPTs for everyone’.

The CEO of their partner Microsoft, Satya Nadella, took a page from that keynote in his own keynote at his company’s developer conference, Ignite, and announced the AI ‘Age of CoPilots’. He then went on to explain how everyone gets their own CoPilot, just as Sam made a big deal about everyone getting their own ‘GPTs’. Thus a faint sense of deja vu, as the Verge explains in “Microsoft Copilot Studio lets anyone build custom AI copilots”:

“Microsoft follows OpenAI in allowing custom AI chatbots that can be fully customized by businesses.”

“Last week OpenAI announced its new GPT platform to let anyone create their own version of ChatGPT, and now Microsoft is following with Copilot Studio: a new no-code solution that lets businesses create a custom copilot or integrate a custom ChatGPT AI chatbot.”

“Microsoft Copilot Studio is designed primarily to extend Microsoft 365 Copilot, the paid service that Microsoft launched earlier this month. Businesses can now customize the Copilot in Microsoft 365 to include datasets, automation flows, and even custom copilots that aren’t part of the Microsoft Graph that powers Microsoft 365 Copilot.”

Microsoft’s branding continues to move away from Windows, with the company separately announcing plans to make Windows accessible as an app via other platforms like Apple iPhone iOS, Google Android and more. It’s now CoPilot front and center. And the commitment to ‘CoPilot’ continued at the conference:

“Microsoft’s Copilot Studio announcement is part of a broader AI push for Copilot, including new features for businesses, a Bing Chat rebranding, and even custom CPU and AI chips to help power these experiences.”

Let’s get used to the new Microsoft CoPilot logo:

So Microsoft rebrands Bing Chat to CoPilot, going more head to head with OpenAI’s ChatGPT:

“‘Bing Chat and Bing Chat Enterprise will now simply become Copilot,’ explains Colette Stallbaumer, general manager of Microsoft 365. The official name change comes just a couple of months after Microsoft picked Copilot as its branding for its chatbot inside Windows 11. At the time it wasn’t clear that the Bing Chat branding would fully disappear, but it is today.”

To make CoPilots and GPTs a reality at scale, Microsoft CEO Satya Nadella went on to highlight the continued massive investments required in GPU infrastructure from their partnerships with Nvidia, AMD and others, while also building their own competitive chips, the Maia 100 AI chip and the Cobalt 100 CPU:

“The Azure Maia 100 and Cobalt 100 chips are the first two custom silicon chips designed by Microsoft for its cloud infrastructure.”

“Microsoft has built its own custom AI chip that can be used to train large language models and potentially avoid a costly reliance on Nvidia. Microsoft has also built its own Arm-based CPU for cloud workloads. Both custom silicon chips are designed to power its Azure data centers and ready the company and its enterprise customers for a future full of AI.”

“Microsoft’s Azure Maia AI chip and Arm-powered Azure Cobalt CPU are arriving in 2024, on the back of a surge in demand this year for Nvidia’s H100 GPUs that are widely used to train and operate generative image tools and large language models. There’s such high demand for these GPUs that some have even fetched more than $40,000 on eBay.”

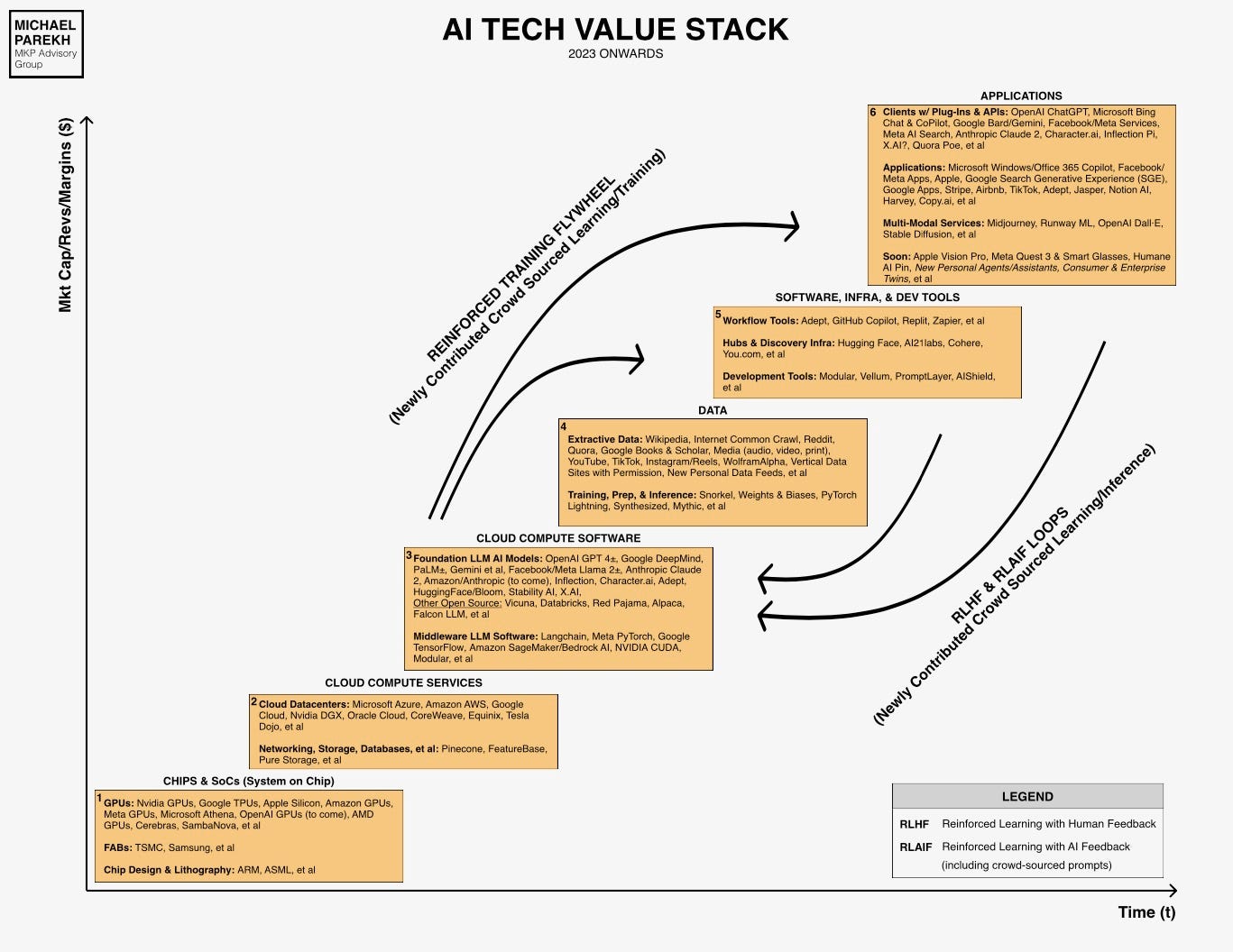

All of this coming GPU infrastructure also lays the foundation for AI services via Microsoft’s Azure cloud datacenter services for business customers like Meta, WPP, and regular companies looking to implement their own AIs on a range of Foundation LLM AI models beyond OpenAI’s GPT-4 Turbo. This was highlighted by Nvidia founder and CEO Jensen Huang joining Satya Nadella on stage at Ignite to discuss the infrastructure work the two companies are doing together to enable a range of LLM AI models and tools for customers beyond OpenAI.

It all illustrates the acceleration in Foundation LLM AI infrastructure with companies allocating billions in this AI Tech Wave.

It’s why Nvidia is rolling out its own DGX AI cloud strategy as I’ve described, and why both Google and Amazon AWS have invested in and partnered with foundation LLM AI company Anthropic. All of this comes barely a year after OpenAI’s ChatGPT moment last November, in partnership with Microsoft.

The companies above are both partners and competitors, in the short and longer term. The keynote video is worth watching in its entirety to understand the scale and scope of what’s coming from just two of the biggest players, CoPilot and all. As both CEOs re-emphasized several times on stage, it’s all just getting started. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)