The Bigger Picture. Sunday, April 7, 2024

The early years of every tech wave are characterized by companies large and small skirting the ‘Gray Zones’. We saw it in the 1980s PC wave with Bill Gates and Microsoft in its early years, in the 1990s Internet wave with AOL’s ‘round-trip’ deals in its fast-growth days, and in the smartphone wave of the 2010s with Uber founder Travis Kalanick’s gray-area transgressions. There are of course many other examples, all the way to the current AI Tech Wave. And given the current multi-hundred billion dollar AI Gold Rush, we’re seeing it again in this wave. This is the Bigger Picture I’d like to focus on this Sunday. Let me explain.

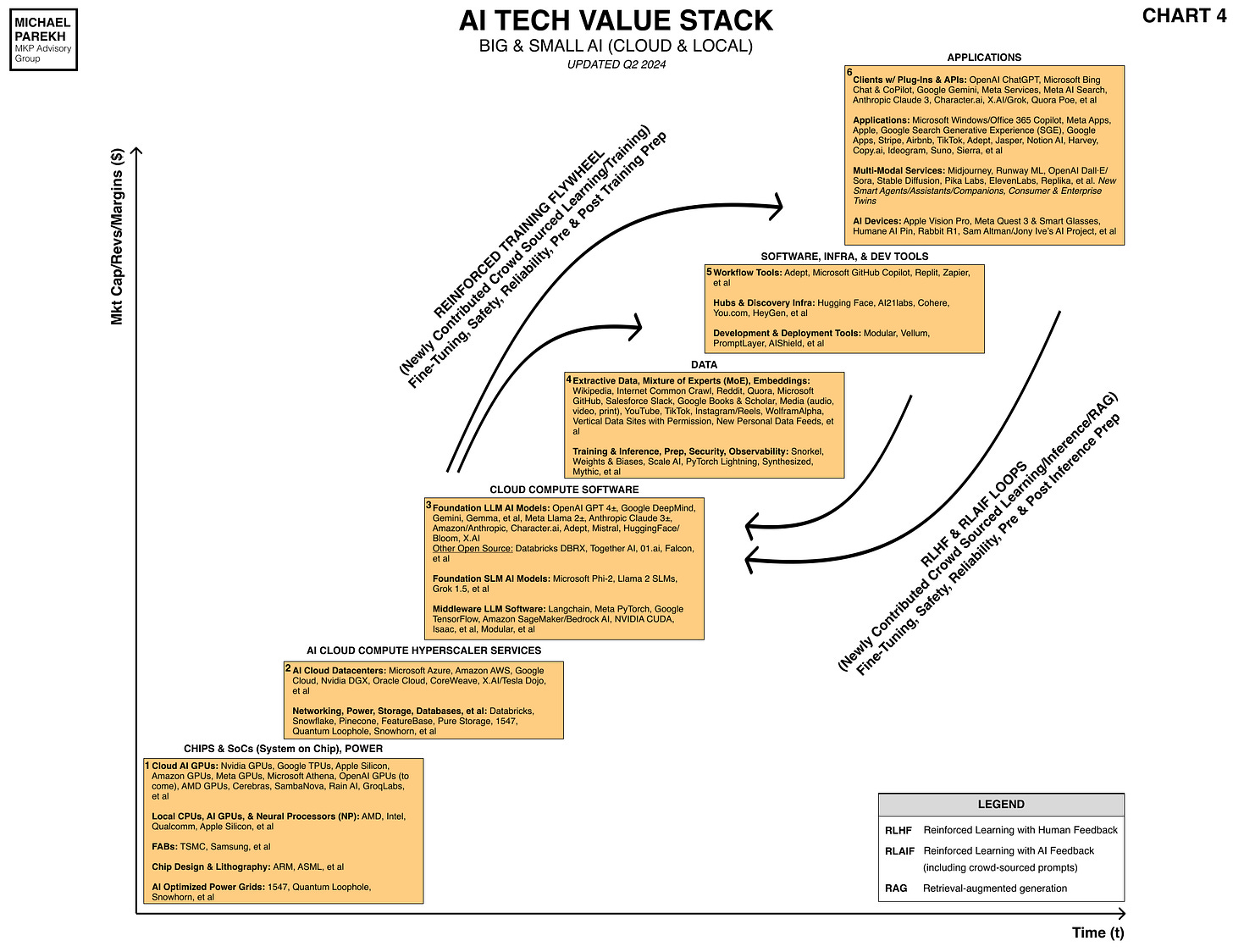

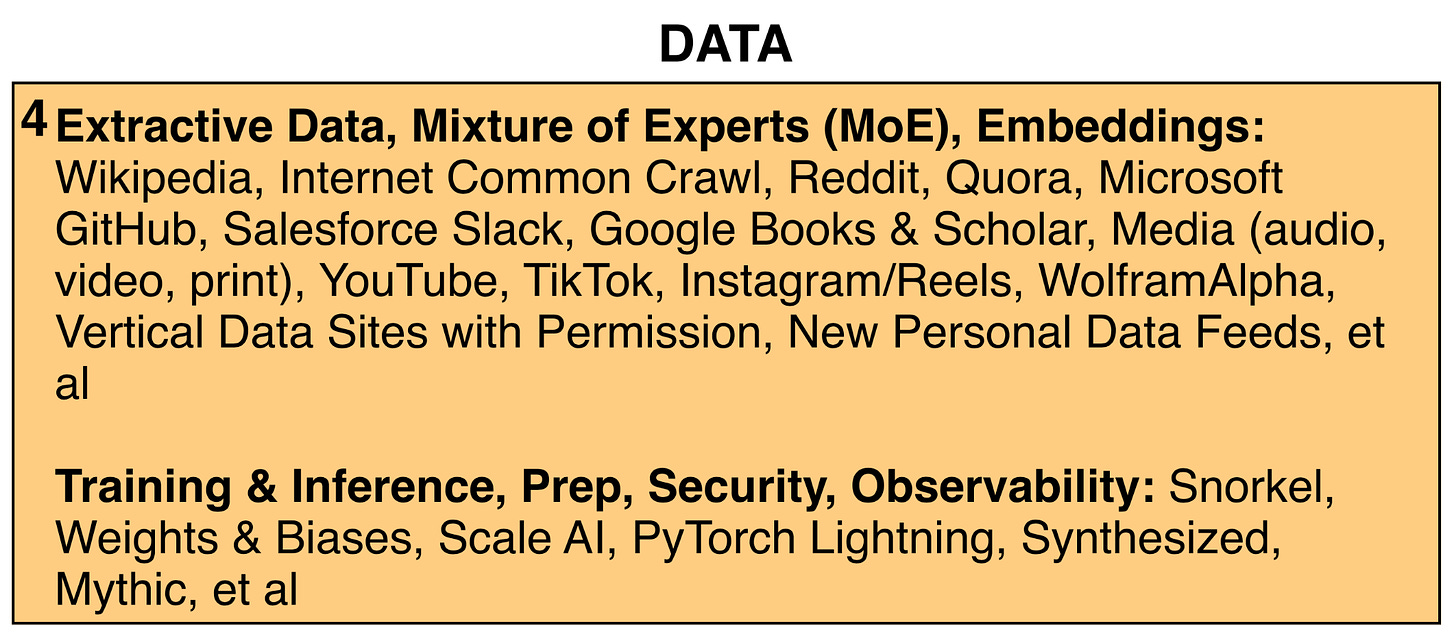

The arena in the AI Tech Wave this time is the mad dash for more sources of massive Data troves, especially as LLM AI models and AI GPU architectures scale at rates of 20x or more per year over the next few years. The AI Data Quest is a topic I explored earlier this week, citing it as a key input for AI infrastructure and growth over the next few years, along with AI GPU chips, AI-ready super-scaled data centers, and the accompanying ramps of Power infrastructure. Companies big and small are earnestly focused on ramping up at scale this year and beyond. These are Boxes #1 through #4 in the updated AI Tech Wave chart.

The Gray area is vividly outlined by the New York Times’ “How Tech Giants Cut Corners to Harvest Data for A.I.”:

“In late 2021, OpenAI faced a supply problem.”

“The artificial intelligence lab had exhausted every reservoir of reputable English-language text on the internet as it developed its latest A.I. system. It needed more data to train the next version of its technology — lots more.”

“So OpenAI researchers created a speech recognition tool called Whisper. It could transcribe the audio from YouTube videos, yielding new conversational text that would make an A.I. system smarter.”

“Some OpenAI employees discussed how such a move might go against YouTube’s rules, three people with knowledge of the conversations said. YouTube, which is owned by Google, prohibits use of its videos for applications that are “independent” of the video platform.”

“Ultimately, an OpenAI team transcribed more than one million hours of YouTube videos, the people said. The team included Greg Brockman, OpenAI’s president, who personally helped collect the videos, two of the people said. The texts were then fed into a system called GPT-4, which was widely considered one of the world’s most powerful A.I. models and was the basis of the latest version of the ChatGPT chatbot.”

The piece then goes on to explain that Google, Meta, and many other tech companies faced their own ‘tough decisions’ on skirting Gray areas in the quest for new sources of Data at scale. That includes both actual, real-time Data and the Synthetic kind, which offers promising new sources at scale. The whole piece is worth reading.

The AI Data Quest is an area I’ve covered at length before, and I continue to believe that “we’ve barely scratched the tip of the potential AI Data iceberg thus far”. It’s Box 4 in the chart above.

The near term challenges of Scaling AI Data remain of course, as I also covered a few days ago in “Humans needed ‘en masse’ to Scale AI”. As the NY Times piece above goes on to observe:

“Like OpenAI, Google transcribed YouTube videos to harvest text for its A.I. models, five people with knowledge of the company’s practices said. That potentially violated the copyrights to the videos, which belong to their creators.”

“Last year, Google also broadened its terms of service. One motivation for the change, according to members of the company’s privacy team and an internal message viewed by The Times, was to allow Google to be able to tap publicly available Google Docs, restaurant reviews on Google Maps and other online material for more of its A.I. products.”

The roots of the AI data race go back to early 2020:

“In January 2020, Jared Kaplan, a theoretical physicist at Johns Hopkins University, published a groundbreaking paper on A.I. that stoked the appetite for online data.”

His conclusion was unequivocal: The more data there was to train a large language model — the technology that drives online chatbots — the better it would perform. Just as a student learns more by reading more books, large language models can better pinpoint patterns in text and be more accurate with more information.

“Everyone was very surprised that these trends — these scaling laws as we call them — were basically as precise as what you see in astronomy or physics,” said Dr. Kaplan, who published the paper with nine OpenAI researchers. (He now works at the A.I. start-up Anthropic.)”

“‘Scale is all you need’ soon became a rallying cry for A.I.”

That echoes, of course, the “Attention Is All You Need” AI paper by Google researchers in 2017, which kicked off the LLM/Generative AI boom in the first place.

And of course, as always, time is of the essence:

“Their situation is urgent. Tech companies could run through the high-quality data on the internet as soon as 2026, according to Epoch, a research institute. The companies are using the data faster than it is being produced.”

“Tech companies are so hungry for new data that some are developing “synthetic” information. This is not organic data created by humans, but text, images and code that A.I. models produce — in other words, the systems learn from what they themselves generate.”

I discussed the pros and cons of ‘Synthetic Data’ last fall, an area seeing rapid technical innovation.

All this of course contributes to the ‘Copyright vs Fair Use’ debates currently being negotiated and litigated by the AI industry at large, in the heat of building LLM AI businesses ‘On the Shoulders of Giants, Go-Getter “Creators”, & Grunts’, OTSOG 2.0 as it were.

But the current pressures for more Data are fueling a ‘Do it now and ask for forgiveness later’ environment, with many players seeking cover in ‘everyone else is doing it too’:

“Meta’s executives said OpenAI seemed to have used copyrighted material without permission. It would take Meta too long to negotiate licenses with publishers, artists, musicians and the news industry, they said, according to the recordings.”

“The only thing that’s holding us back from being as good as ChatGPT is literally just data volume,” Nick Grudin, a vice president of global partnership and content, said in one meeting.”

“OpenAI appeared to be taking copyrighted material and Meta could follow this “market precedent,” he added.”

“Meta’s executives agreed to lean on a 2015 court decision involving the Authors Guild versus Google, according to the recordings. In that case, Google was permitted to scan, digitize and catalog books in an online database after arguing that it had reproduced only snippets of the works online and had transformed the originals, which made it fair use.”

“Using data to train A.I. systems, Meta’s lawyers said in their meetings, should similarly be fair use.”

All in the heat of the AI moment.

It’s not surprising that the AI industry is navigating into the ‘Gray areas’. We’ve seen it before, and we’re seeing it again in the heat of the competitive moment. Maybe it’ll all be forgiven later. Or at least re-negotiated.

And that’s this Sunday’s ‘Bigger Picture’. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)