AI: The Power Bill for AI

...in the end may not be as Big as currently imagined

The Bigger Picture, Sunday, February 19, 2024

One of the enduring assumptions about exponential growth in AI usage globally over the coming decade or more is that we’re likely to need exponential amounts of power for AI data centers and for local “Small AI” processing on devices. This view has been particularly common amongst the so-called ‘AI Scalists’ like OpenAI and its founder/CEO Sam Altman, who has been driving the presumption that we’ll likely need immense amounts of power for training and inference of ever-growing and ever more capable LLM AI models.

This is to the point that Mr. Altman is an active investor in, and proponent of, alternative sources of energy at scale, including both fission and fusion nuclear energy companies. But the latest data from multiple sources suggest that the power needs for AI may ultimately be far more manageable than presumed. This is the ‘Bigger Picture’ I’d like to review this Sunday. Let me explain.

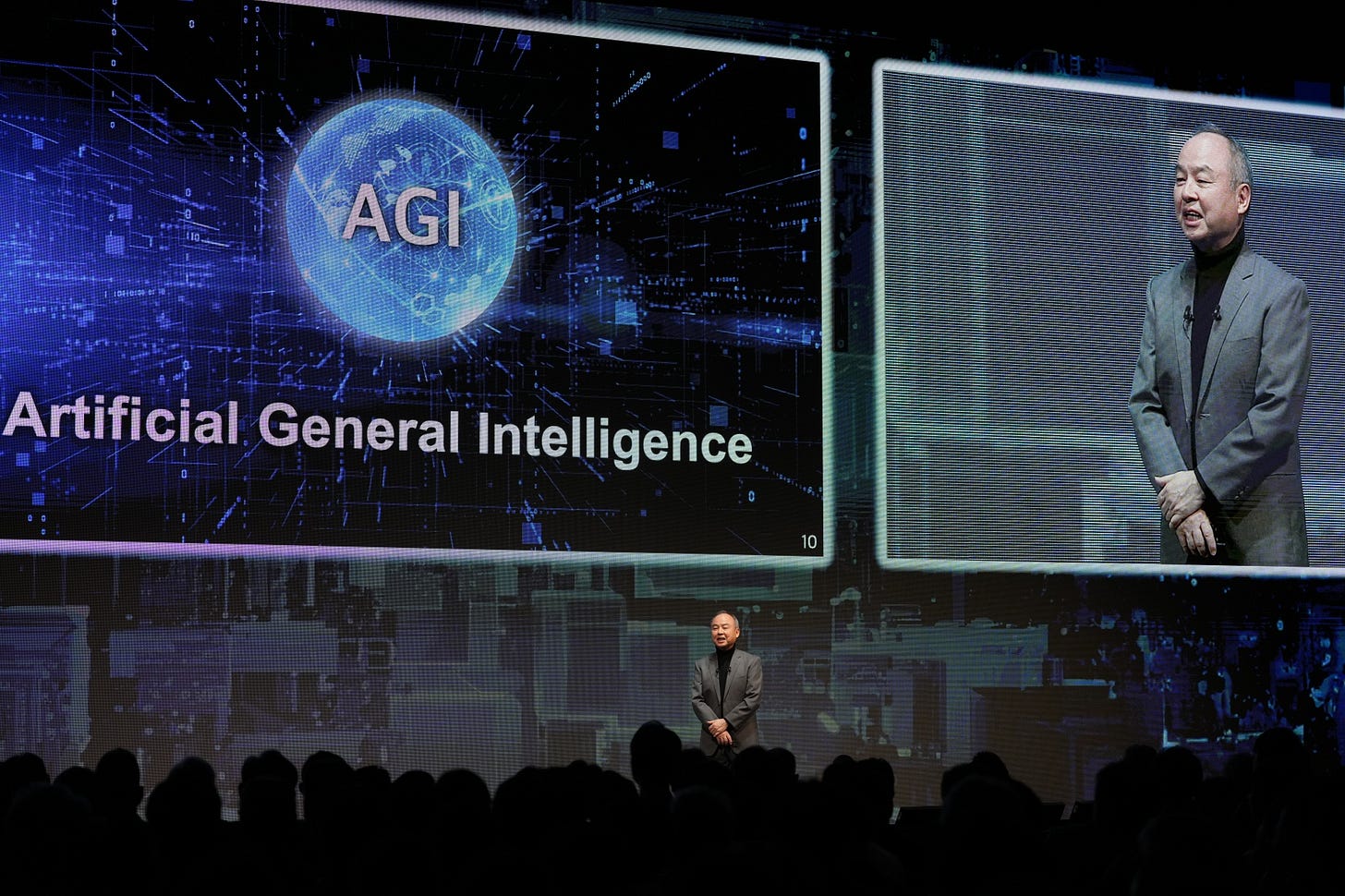

To provide a bit more context, I’ve written recently about Mr. Altman’s efforts to raise up to $7 trillion for chip and power infrastructure globally. Those ambitions have reached the point that Softbank’s Masayoshi Son is contemplating a $100 billion financing for said ‘AGI’ AI infrastructure investments.

In a piece titled “How much electricity does AI consume?”, the Verge offers this additional context:

“It’s not easy to calculate the watts and joules that go into a single Balenciaga pope. But we’re not completely in the dark about the true energy cost of AI.”

“It’s common knowledge that machine learning consumes a lot of energy. All those AI models powering email summaries, regicidal chatbots, and videos of Homer Simpson singing nu-metal are racking up a hefty server bill measured in megawatts per hour. But no one, it seems — not even the companies behind the tech — can say exactly what the cost is.”

“Estimates do exist, but experts say those figures are partial and contingent, offering only a glimpse of AI’s total energy usage. This is because machine learning models are incredibly variable, able to be configured in ways that dramatically alter their power consumption. Moreover, the organizations best placed to produce a bill — companies like Meta, Microsoft, and OpenAI — simply aren’t sharing the relevant information. (Judy Priest, CTO for cloud operations and innovations at Microsoft said in an e-mail that the company is currently “investing in developing methodologies to quantify the energy use and carbon impact of AI while working on ways to make large systems more efficient, in both training and application.” OpenAI and Meta did not respond to requests for comment.)”

The whole piece is worth reading for its range of detail around the ‘training’ of ever larger LLM AI models like OpenAI’s GPT 3, 3.5 Turbo, 4 and beyond, not to mention comparably scaled models from Google with Gemini, Anthropic’s Claude models, and over a dozen other foundation LLM AI models. These run in robust AI data centers around the world, fitted with hundreds of thousands, and eventually millions, of Nvidia’s currently scarce AI chips. Those chips, like the H100 and the upcoming B100, cost tens of thousands of dollars each.

Nvidia’s founder/CEO Jensen Huang alone has estimated a likely outlay of almost a trillion dollars just for AI data centers in the coming decade. This is for what he calls ‘accelerated computing’, a complex but logical evolution of AI computing hardware and software that continues to scale Moore’s Law in alternative ways: that is, a doubling or better in computing power at ever-decreasing costs.

But the power to run these centers is likely to end up in the low single-digit percentages of overall electricity consumption, even at scale, for both training and inference, including the ever-important ‘reinforcement learning’ training loops that make AI so remarkable and useful.

Again, from the Verge piece above:

“A recent report by the International Energy Agency offered similar estimates, suggesting that electricity usage by data centers will increase significantly in the near future thanks to the demands of AI and cryptocurrency. The agency says current data center energy usage stands at around 460 terawatt hours in 2022 and could increase to between 620 and 1,050 TWh in 2026 — equivalent to the energy demands of Sweden or Germany, respectively.”

“But de Vries says putting these figures in context is important. He notes that between 2010 and 2018, data center energy usage has been fairly stable, accounting for around 1 to 2 percent of global consumption. (And when we say “data centers” here we mean everything that makes up “the internet”: from the internal servers of corporations to all the apps you can’t use offline on your smartphone.) Demand certainly went up over this period, says de Vries, but the hardware got more efficient, thus offsetting the increase.”
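To put the IEA range quoted above in growth terms, here is a rough back-of-the-envelope sketch of my own (it is not from the Verge or the IEA). It takes the 460 TWh figure for 2022 and the 620 to 1,050 TWh range for 2026 from the quote, and assumes a simple four-year compounding period; the function and variable names are purely illustrative.

```python
# Back-of-the-envelope arithmetic on the IEA figures quoted above.
# Assumptions (mine, not the IEA's): a four-year span from 2022 to 2026
# and smooth compound growth between the two endpoints.

BASE_TWH_2022 = 460.0    # data center energy use in 2022, per the quote
LOW_TWH_2026 = 620.0     # low end of the 2026 range, per the quote
HIGH_TWH_2026 = 1050.0   # high end of the 2026 range, per the quote
YEARS = 2026 - 2022


def total_growth(start: float, end: float) -> float:
    """Fractional increase from start to end (0.5 means +50%)."""
    return end / start - 1.0


def annual_growth(start: float, end: float, years: int) -> float:
    """Implied compound annual growth rate over the given number of years."""
    return (end / start) ** (1.0 / years) - 1.0


for label, target in (("low", LOW_TWH_2026), ("high", HIGH_TWH_2026)):
    total = total_growth(BASE_TWH_2022, target)
    yearly = annual_growth(BASE_TWH_2022, target, YEARS)
    print(f"{label} case: {total:.1%} total, about {yearly:.1%} per year")
```

Under these assumptions, the low case works out to roughly a third more energy than in 2022, and the high case to more than double. That is a wide range, which is part of why the context in the quote above matters.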

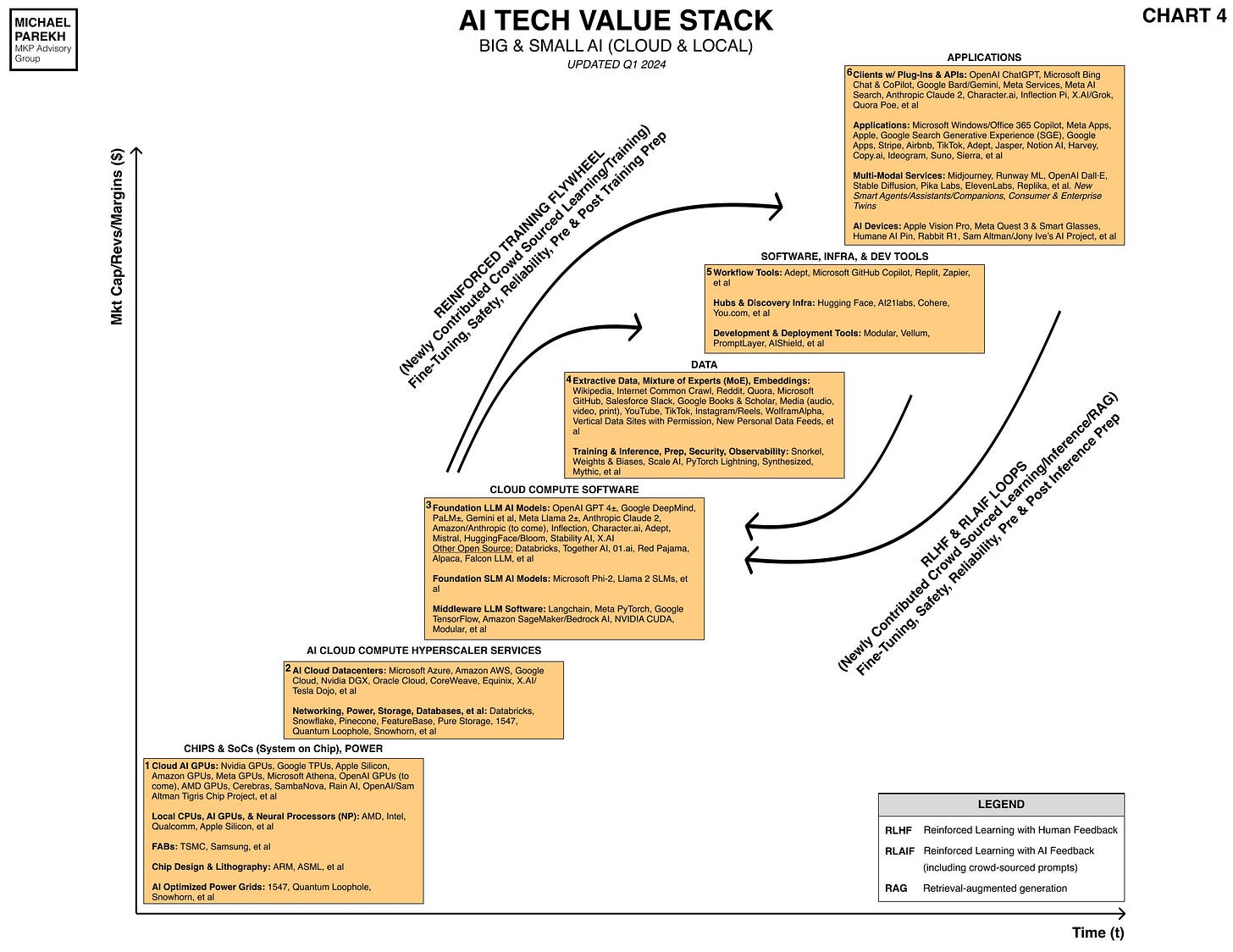

The tl;dr here is that power is an important component of the AI Tech Wave, one that yours truly has been tracking for a very long time. It’s built into the AI Tech Stack I talk about regularly, where it is represented in the first two boxes, 1 & 2.

I will be tracking this closely, both from the perspective of the AI Scalists at OpenAI and elsewhere, and from the perspective of the counter-innovations in far more efficient AI processing, particularly the open source AI technologies championed by Meta, Hugging Face, Mistral, and others.

As usual, the answer will likely come out somewhere in the middle, and potentially prove more manageable than currently feared. That is the ‘Bigger Picture’ in AI power requirements we should keep in mind for now. We’ll find out more soon enough. Stay tuned.

(NOTE: The discussions here are for informational purposes only, and are not meant as investment advice at any time. Thanks for joining us here.)