AI: Intel and its AI chip re-think

...whole tech industry ramping up AI chip redos

The Bigger Picture, Sunday, December 24, 2023

“’Twas the night before Christmas, when all through the AI data center house, all the GPUs were whirring, as swift as a cyber-mouse.”

Ok, not as smooth as the original, but you get the point. We end this year of flexing LLM AI models with ever more AI infrastructure from the GPU chips up, and white-hot global demand for all of it, driven by hundreds of billions in investment capital.

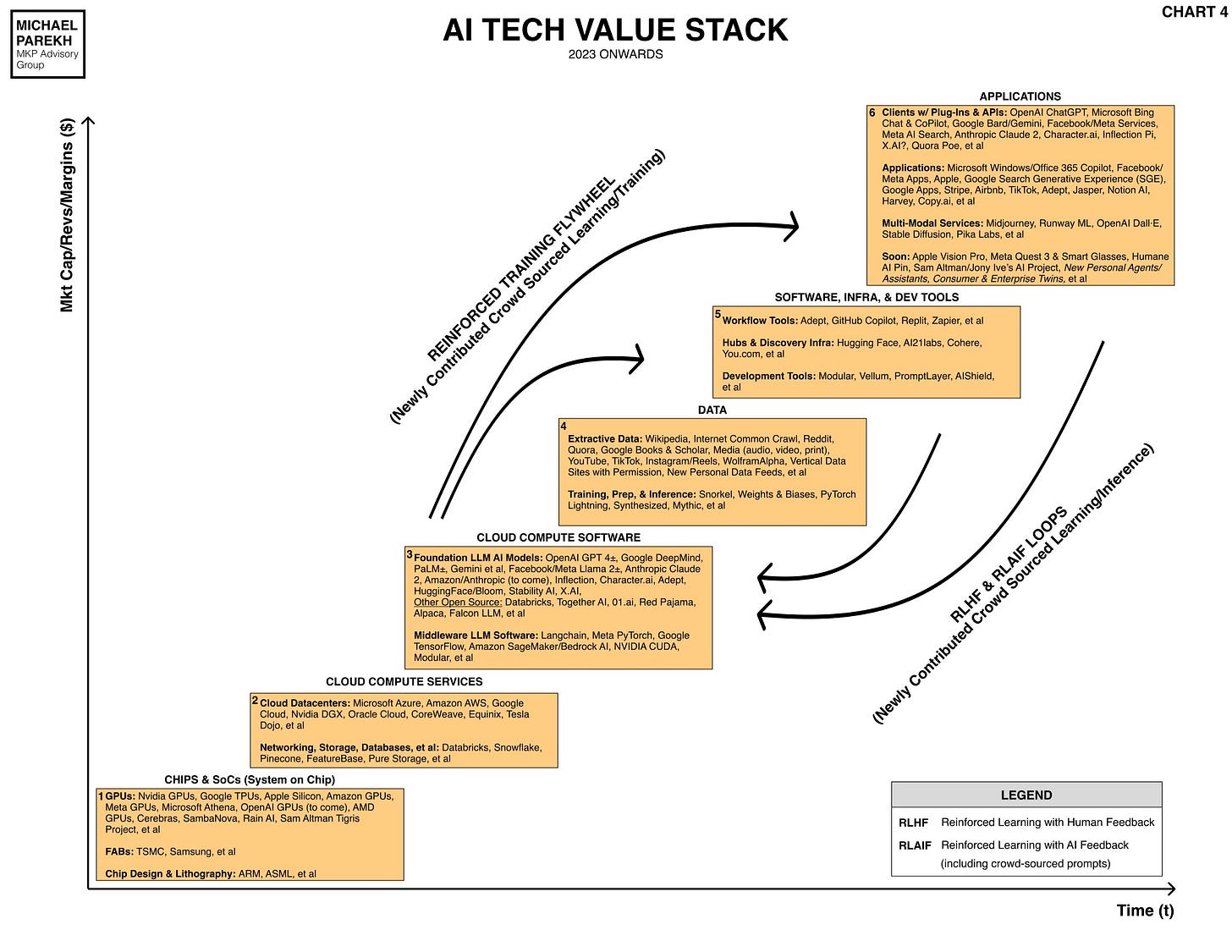

As discussed at length in these pages, that demand runs from TSMC on up through the AI Tech Stack driving the AI Tech Wave.

Nvidia for now rules the AI GPU and data center roost, driven by gutsy early innovations and investments by founder/CEO Jensen Huang. It means of course that going into 2024, his core competitors like Intel, AMD, Qualcomm and most other chip companies worldwide are ramping up to compete in AI training and inference chips. They are making multi-year, multi-billion dollar bets that resemble the telecom industry capex ramp-up that accompanied the commercialization of the internet in the 1990s.

The Bigger Picture this Sunday is that other chip companies are going beyond their multi-decade long ‘comfort zones’ to compete.

Intel is a key example this month of that big change, continuing to build on their chip re-think projects I’ve discussed in previous posts. The most recent Intel announcements are a major evolution of their long-standing approach to computer chips. Let me explain.

Intel had its product roadmap conference just a few days ago, appropriately called ‘AI Everywhere’ in New York. They announced their roadmap of AI products ‘to enable customers’ AI solutions everywhere, across the data center, network, edge and PC’.

As ‘All About Circuits’ described it in “Intel Announces the Company’s Largest Architectural Change in 40 Years”:

“Intel's new Core Ultra mobile processors and 5th Gen Xeon processors build in AI acceleration with specialized cores, marking the biggest architectural change since the 80286.”

That was one of the core chips that drove the beginning of the PC Era and tech wave in the 1980s of course, over forty years ago.

‘All About Circuits’ continues:

“At Intel's 2023 "AI Everywhere" event, Intel introduced a radical update to its processing architecture, represented in its mobile Core Ultra processors and desktop Core Ultra processors to be released in 2024.”

“The architectures combine conventional high-capability CPU cores with special-purpose cores for low-power tasks, graphics acceleration, and AI acceleration. The newest 5th Gen Xeon CPUs, also announced at the event, focus on server performance and add in co-processor cores for cloud AI acceleration.”

“According to Intel’s company vision, the future of AI processing is in both the cloud and the edge. The company predicts that by 2028, 80% of all PCs will be “AI PCs”, equipped with AI co-processors.”

And then of course, there are the AI neural processing chips, pioneered by everyone from Apple to Google in their device plans to power AI ‘at the edge’. It is part of the concurrent ramp of ‘Small AI’ alongside the ‘Big AI’ seen this year, something I’ve also discussed at length:

“Intel Moves to Neural Processing Units”

“Intel's AI co-processors, called neural processing units (NPUs), are its newest major innovation. When combined with the other special-purpose CPU cores, Intel believes that the new processors will increase overall performance while reducing electrical power draw and lowering overall total cost of ownership (TCO).”

As I discussed just a few days ago, reducing power consumption, a key input for both ‘Big AI’ in AI cloud data centers and ‘Small AI’ in billions of local devices, is a critical must-do for every AI company large and small.

It’s one of the things OpenAI founder and CEO Sam Altman is focused on, potentially raising billions in new capital at a $100 billion plus valuation, for entirely new chip architectures that are far more efficient in Compute and power consumption.

“OpenAI has also held discussions to raise funding for a new chip venture with Abu Dhabi-based G42, according to the report.”

“It is unclear if the chip venture and wider company funding were related, the report said, adding that OpenAI has discussed raising between $8 billion and $10 billion from G42.”

“OpenAI is set to complete a separate tender offer led by Thrive Capital in early January, which would allow employees to sell shares at a valuation of $86 billion, according to the report.”

“Microsoft (MSFT.O) has committed to invest over $10 billion in OpenAI, which kicked off the generative artificial intelligence craze in November 2022 by releasing ChatGPT.”

The race is on for more efficient AI chips. And every major company, both traditional chip companies like Intel and the new cluster of AI data center companies like Amazon, Microsoft, Google, and others, is in a frenetic innovation and investment ramp. They’re all in a race to re-think and re-build one of the hardest, most complex, and most capital-intensive infrastructure components of computing, one that has made the modern world possible over the last fifty-plus years.

They’re partners, customers and of course long-term competitors in the ecosystem. And they’re all pushing the boundaries of Moore’s Law and other scale efficiencies that have been such an exponential tailwind to the tech industry for decades.

The Bigger Picture this Christmas Eve Sunday is that we are in a multi-year secular race to build this global computing infrastructure, from the chip on up. It will for now transcend the financial cycles of investor enthusiasm, both private and public.

And it’s all just beginning. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)