AI: Soon in all Shapes and Sizes

...multimodal AI to envelop us all

Last November, in “AI: Trying Different form factors”, I highlighted that as LLM AI models go multimodal in 2024, we would soon see them show up in all types of form factors, big and small, in this AI Tech Wave:

“In last month’s ‘AI: Feeling our way to AI UI/UX’, I made the point:

“In the ten months since OpenAI’s ‘ChatGPT moment’, and after 200 billion plus in AI Infrastructure investments, the world is still trying to figure out how AI will be used at scale in all its many possible incarnations. And so many ways for users to interact and experiment, ‘UI/UX’ in silicon valley speak.

These include of course the ChatGPT style ‘ChatBot’, with variations coming in celebrity ‘Smart Agent’ flavors by Meta and others.”

“This comes up as Humane AI, a company founded several years ago by a whole bunch of ex-Apple designers and engineers, and backed by OpenAI’s Sam Altman and other tech/VC luminaries, launched its AI driven lapel pin last week to the world. As the NY Times explains in an illuminating origin story/profile piece.”

This trend of course is concurrent with Large Language Models (LLMs) being joined by a potentially larger number of ‘Small Language Models’, or SLMs, a trend I’ve highlighted as ‘Small AI’, complementing the ‘Big AI’ models that services like OpenAI’s ChatGPT, and Google’s Bard and upcoming Gemini, run on.

I bring all this up because we’re already seeing a spate of announcements about AI coming in a lot more shapes and sizes, especially with the CES 2024 show this week in Las Vegas being driven by AI hardware and software. Volkswagen, one of the largest auto companies in the world, announced it would soon incorporate ChatGPT into many of its cars.

And other new products and services are also rolling out, with AI focused on sight and sound all around us. As Fast Company notes in their piece on upcoming AI devices:

“If the meme-ified response and recent layoffs are any indication, our first piece of AI hardware—Humane’s $240 million Ai Pin from ex-Apple duo Imran Chaudhri and Bethany Bongiorno—appears to be dead on arrival. But that hasn’t stopped AI enthusiasts across X from buzzing about another entrant in the category.”

“I’m actually not referring to the highly anticipated “iPhone of AI” being developed by OpenAI and Lovefrom with $1 billion in funding from Softbank.

And I’m also not talking about the $200 Rabbit R1, a sort of AI camera-meets-walkie-talkie that can control apps on your phone, designed by Teenage Engineering and announced just this week.”

“I’m referring to a relatively humble pendant, a wearable AI microphone called Tab, developed by 21-year-old Harvard dropout Avi Schiffmann. At age 17, Schiffmann built a massively successful COVID-19 tracking website that welcomed tens of millions of users daily.”

Of course all this is in addition to Apple rolling out its next major wearable computing platform, the Vision Pro, this month, introducing its form of AI driven ‘Spatial Computing’ at a price that in a historical context is ‘reasonable’ even as it starts at $3499.

All of these devices will test the limits of the underlying emerging technologies, their economic price points down Moore’s Law curves, and of course what society is willing to bear in terms of public behavioral and privacy norms.

All the big tech ‘Magnificent 7’ companies and others are racing down this path.

Devices like ‘Smart’ sunglasses by Meta in partnership with Ray-Ban, and by Amazon in partnership with Carrera, have recently been updated, with multimodal AI features to come.

These rollouts are already bringing back memories of privacy-intruding scenarios: unauthorized photos and recordings taken by people using these gadgets of the people who are not. As the New York Times recounts in “How Meta’s New Face Camera Heralds a New Age of Surveillance”:

“Meta’s $300 smart glasses look cool but lack a killer app, and they offer a glimpse into a future with even less privacy and more distraction.”

“The issue of widespread surveillance isn’t particularly new. The ubiquity of smartphones, doorbell cameras and dashcams makes it likely that you are being recorded anywhere you go. But Chris Gilliard, an independent privacy scholar who has studied the effects of surveillance technologies, said cameras hidden inside smart glasses would most likely enable bad actors — like the people shooting sneaky photos of others at the gym — to do more harm.”

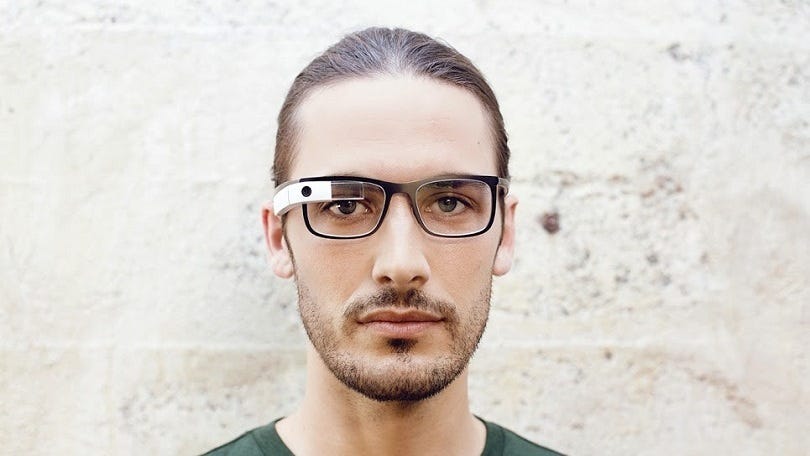

The tech industry has been down this road before, before AI was white-hot and ‘cool’. Remember the ‘Google Glassholes’ pushback to the early adopters of Google’s AR/VR glasses back in 2012.

All of those interactions will likely seem tame compared to what’s coming next, as multimodal AI gets baked into many devices old and new. Google, Apple, Amazon and Meta, amongst the big tech companies, are already racing to introduce multimodal AI features into Google Nest devices, Apple Siri, Amazon Echo/Alexa devices, and of course Meta’s ‘Meta AI’ via Ray-Ban ‘Smart Glasses’. And every other tech company large and small will have their versions out this year as well.

Most of these experiments will likely not see adoption at scale (i.e., fail). But the learnings will be considerable, both in terms of how we use (or don’t use) AI beyond ChatGPT, and when we are willing to change behavioral norms in society to accommodate them. And of course regulators will have to determine how they go along, if at all. Just in the US, it’s still illegal in many states to record parties without their explicit consent.

Those sorts of societal and legislative changes can be far slower than the technologies racing ahead around AI. Early adopters will of course race ahead with them. Mainstream adoption at scale, especially globally, is another thing to watch.

But the Cambrian explosion of AI devices, services and experiences is coming soon to somewhere near all of us. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)