AI: Opacity to Transparency

...Stanford's Foundation LLM AI 'Transparency Index'

A few days ago, in my piece “AI: Figuring out AI Explainability”, I stressed that Foundation LLM AI products and their upcoming multimodal versions are notable for their ‘Opacity’, in other words their lack of ‘Transparency’. That includes OpenAI’s GPT-4, Google’s current PaLM 2, Bard, and upcoming Gemini, Meta’s ‘smart agents’ driven Meta AI built on its open source Llama 2, and models from Anthropic, Inflection and others. Specifically, I said:

“ChatGPT and its ilk are a unique early technology product in that they’re open-ended and opaque in their results.”

“We’re far from a place where we can clearly interpret, explain, and ultimately rely on the results from these AI technologies.”

As I highlighted in other pieces, the immense quantitative complexity of these models, and of the AI GPU hardware that runs them, has even their top scientists at a loss to explain how their products do their ‘magic’ in a transparent way. It’s early days in the AI Tech Wave, and a lot of industry efforts are underway to improve on these metrics, along with, of course, the safety and reliability of these models.

So as one might expect, efforts are also underway to measure and compare Foundation LLM AI models against one another. Stanford University’s CRFM (Center for Research on Foundation Models) unveiled its “Foundation Model Transparency Index”, a ‘comprehensive assessment of the transparency of foundation model developers’. The authors lay out the context, design and execution of the Index:

“Context. Foundation models like GPT-4 and Llama 2 are used by millions of people. While the societal impact of these models is rising, transparency is on the decline. If this trend continues, foundation models could become just as opaque as social media platforms and other previous technologies, replicating their failure modes.”

“Design. We introduce the Foundation Model Transparency Index to assess the transparency of foundation model developers. We design the Index around 100 transparency indicators, which codify transparency for foundation models, the resources required to build them, and their use in the AI supply chain.”

“Execution. For the 2023 Index, we score 10 leading developers against our 100 indicators. This provides a snapshot of transparency across the AI ecosystem. All developers have significant room for improvement that we will aim to track in the future versions of the Index.”

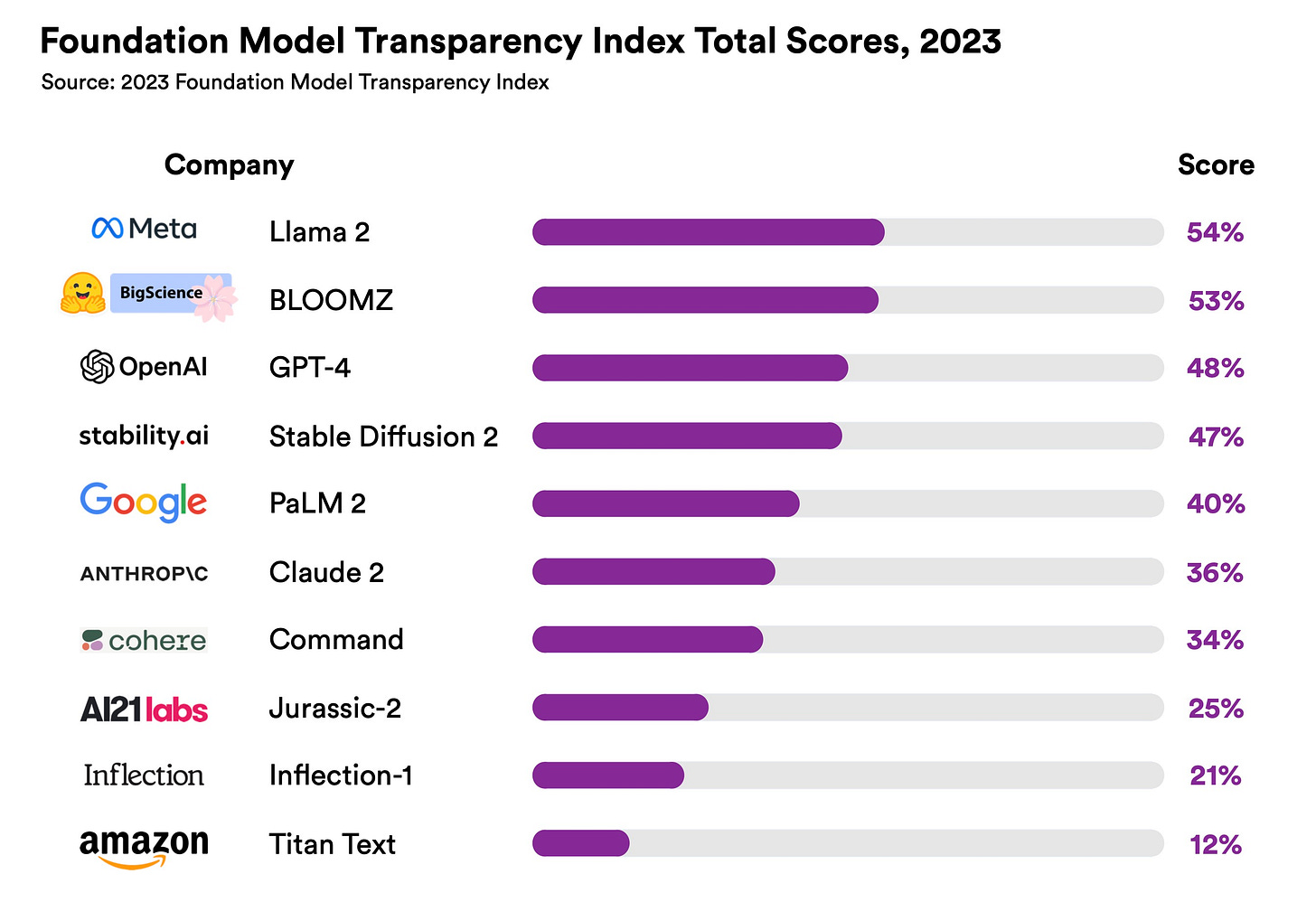

As one might expect at this early stage, the results leave a lot of room for improvement, as this interview of the authors by VentureBeat indicates. Here is the headline chart:

The top company for 2023 on Foundation Model Transparency was Meta, with its open source Llama 2, at a score of 54%. OpenAI’s ‘Closed’ GPT-4 came in at no. 3 with 48%, and Google at no. 5 with 40%. Not surprisingly, open source Foundation LLM AI models fared better than their closed peers. CRFM laid out their key findings in the following summary:

“Key Findings”

“The top-scoring model scores only 54 out of 100. No major foundation model developer is close to providing adequate transparency, revealing a fundamental lack of transparency in the AI industry.”

“The mean score is just 37%. Yet, 82 of the indicators are satisfied by at least one developer, meaning that developers can significantly improve transparency by adopting best practices from their competitors.”

“Open foundation model developers lead the way. Two of the three open foundation model developers get the two highest scores. Both allow their model weights to be downloaded. Stability AI, the third open foundation model developer, is a close fourth, behind OpenAI.”
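To make the arithmetic behind these findings concrete, here is a minimal sketch of how such numbers could be tallied. It assumes each indicator is scored as a simple yes/no point per developer. The developer names and values are hypothetical toy data, not figures from the Index, and the helper names `percent_score` and `summarize` are my own.

```python
from statistics import mean

# Hypothetical toy data: 1 = indicator satisfied, 0 = not satisfied.
# Names and values are illustrative only, not taken from the Index,
# which uses 100 indicators rather than the 5 shown here.
SCORES = {
    "dev_a": [1, 1, 0, 1, 0],
    "dev_b": [1, 0, 0, 0, 0],
    "dev_c": [0, 1, 0, 1, 1],
}


def percent_score(indicators):
    """Share of indicators satisfied, as a percentage."""
    return 100 * sum(indicators) / len(indicators)


def summarize(scores):
    """Return per-developer scores, the mean score, and indicator coverage."""
    per_dev = {name: percent_score(values) for name, values in scores.items()}
    # An indicator counts as covered if at least one developer satisfies it.
    covered = sum(1 for column in zip(*scores.values()) if any(column))
    return per_dev, mean(per_dev.values()), covered


per_dev, mean_score, covered = summarize(SCORES)
print(per_dev, round(mean_score, 1), covered)
```

The `covered` count is the toy equivalent of the “82 of the indicators” finding: it shows how much higher a developer could score just by matching practices that at least one peer already follows.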

The authors of the study emphasized the long way to go for the industry on Transparency, and the long-term need for improvements:

“Companies in the foundation model space are becoming less transparent, says Rishi Bommasani, Society Lead at the Center for Research on Foundation Models (CRFM), within Stanford HAI. For example, OpenAI, which has the word “open” right in its name, has clearly stated that it will not be transparent about most aspects of its flagship model, GPT-4.”

“Less transparency makes it harder for other businesses to know if they can safely build applications that rely on commercial foundation models; for academics to rely on commercial foundation models for research; for policymakers to design meaningful policies to rein in this powerful technology; and for consumers to understand model limitations or seek redress for harms caused.”

“To assess transparency, Bommasani and CRFM Director Percy Liang brought together a multidisciplinary team from Stanford, MIT, and Princeton to design a scoring system called the Foundation Model Transparency Index. The FMTI evaluates 100 different aspects of transparency, from how a company builds a foundation model, how it works, and how it is used downstream.”

The authors conclude:

“‘We shouldn’t think of Meta as the goalpost with everyone trying to get to where Meta is,’ Bommasani says. ‘We should think of everyone trying to get to 80, 90, or possibly 100.’”

“And there’s reason to believe that is possible: Of the 100 indicators, at least one company got a point for 82 of them.”

“Maybe more important are the indicators where almost every company did poorly. For example, no company provides information about how many users depend on their model or statistics on the geographies or market sectors that use their model. Most companies also do not disclose the extent to which copyrighted material is used as training data. Nor do the companies disclose their labor practices, which can be highly problematic.”

“In our view, companies should begin sharing these types of critical information about their technologies with the public.”

This is definitely a nudge to the industry, especially given the interest regulators far and wide are showing in metrics for AI transparency, explainability and interpretability.

There’s a lot more granular detail in their study, and it’s worth a deeper read. It all points to the complexity of these technologies at this early stage. The industry has a long way to go to improve Transparency, as well as the other metrics we’ve been discussing.

Additionally, the industry has a lot of wood to chop to make these ‘Transparency’ metrics accessible to mainstream consumers and businesses. They are not yet in the kind of easy to understand format we find on our food with its nutritional labels, cars with their comparative features and specs, or computers and smartphones with their hardware and software metrics and descriptors. They are not yet ready for Consumer Reports style detailed reviews.

Separately, there are initiatives underway by companies like Adobe, Microsoft and others to adopt a new symbol/icon above, called ‘an icon of transparency’, to provide transparency in AI-generated work:

“Adobe developed the symbol with other companies as one of the many initiatives of the Coalition for Content Provenance and Authenticity (C2PA), a group founded in 2019 that looks to create technical standards to certify the source and provenance of content. (It uses the initials “CR,” which confusingly stands for content CRedentials, to avoid being confused with the icon for Creative Commons.) Other members of the C2PA include Arm, Intel, Microsoft, and Truepic. C2PA owns the trademark for the symbol.”

It’s a consumer-facing effort to improve content transparency.

Coming back to AI models, given the competition underway among dozens of companies focused on ‘Big AI and Small AI’, we’re likely to see more ways to rate, rank and compare Foundation AI models than we’ve been able to with, say, social media products and services over the last decade and more.

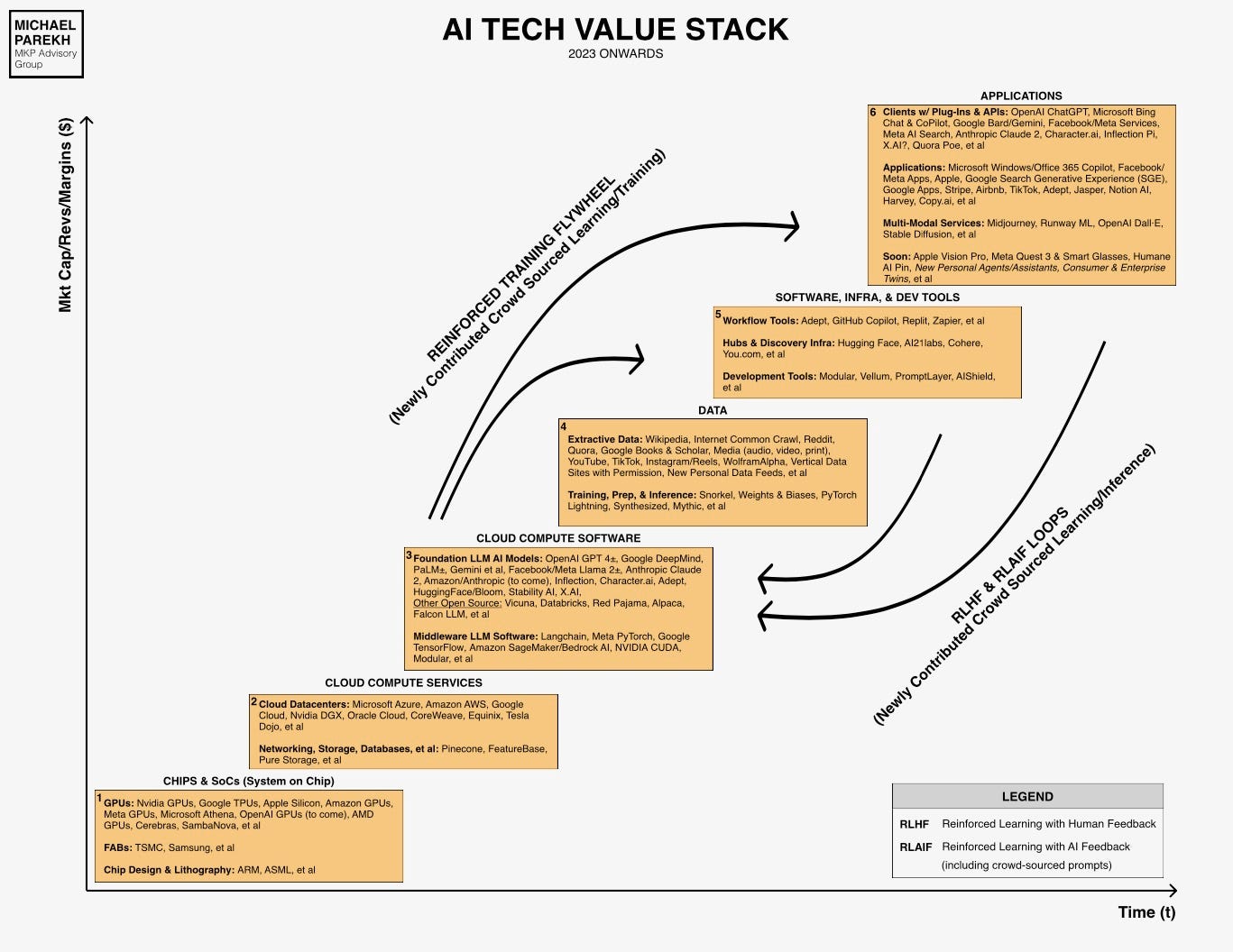

In the meantime, these early steps are a welcome move in those directions. Before too long, we’re going to have a lot of AI applications, products and services (Box 6 in the AI Tech Wave chart above) to try out, and we’ll come up with our own ways to rate and rank them.

And of course figure out how best to use them, what is transparent, and what opacity needs to be dialed down. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)