AI: Nvidia's Jensen Huang in Peak Paranoid form. RTZ #392

...tech history rhymes from Intel to Cisco to now Nvidia

(UPDATE: Former Cisco CEO John Chambers explains why Nvidia today is in a different place than Cisco was in the 1990s Internet Wave. Also see this piece on Nvidia’s performance as a public company since its IPO in 1999, and how it all happened in waves of ups and downs. Both are worthwhile reads amplifying points in this piece.)

The devil in every success story is in the details of the ups AND downs, often repeated multiple times. Another famous semiconductor CEO, Intel’s Andy Grove, made “Only the Paranoid Survive” a personal and corporate mantra. Nvidia’s Jensen Huang has lived through the peaks and valleys of his company for over three decades, and he continues to execute around that ethos even at this peak moment in a three-plus-decade ‘overnight success’ story.

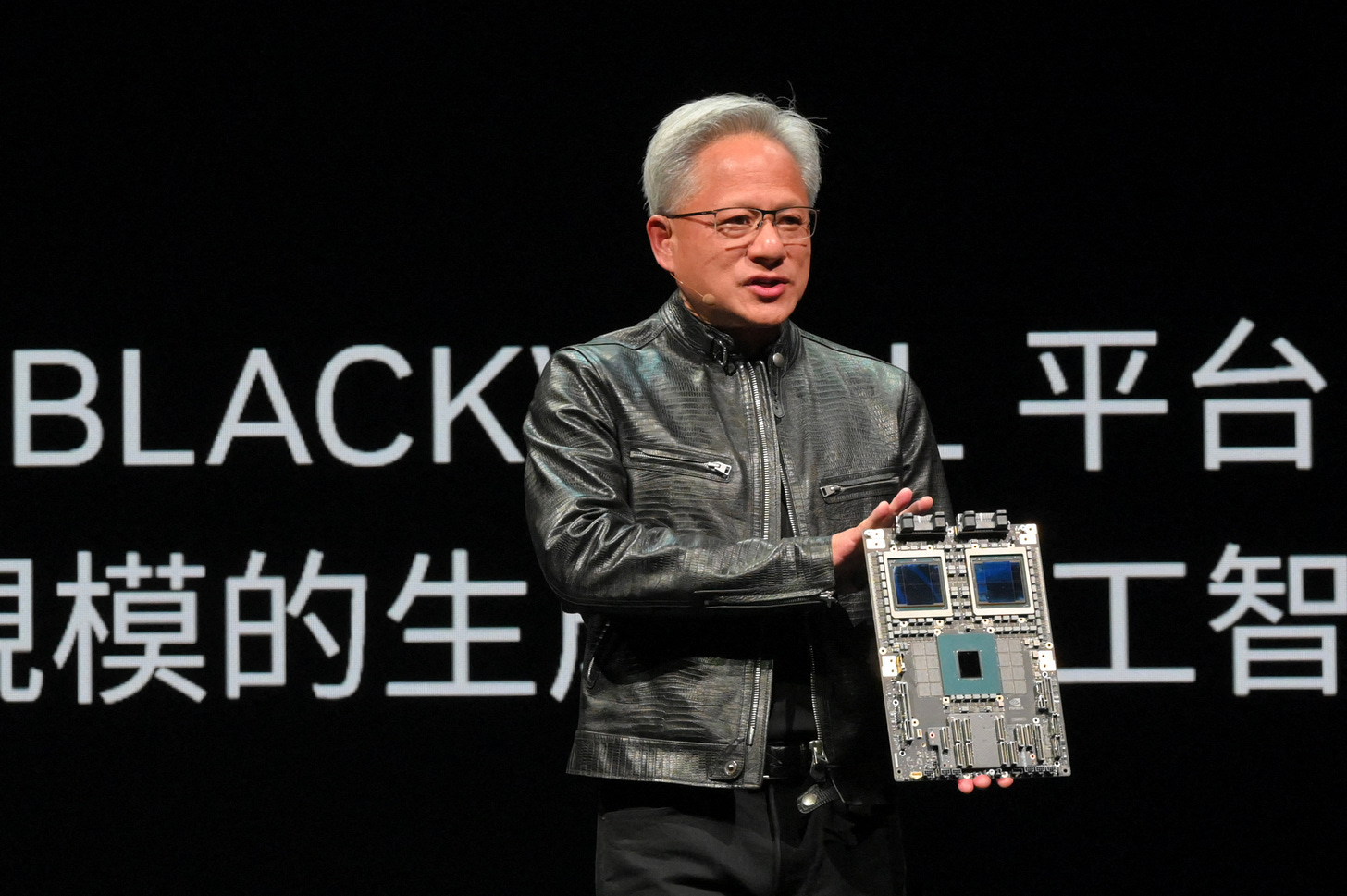

In both personal and professional terms, the time to worry the most is when one is seemingly on top of the world. In these heady ‘picks and shovels’ days of the early ‘AI Tech Wave’, that crown of worry sits on the head of Nvidia Founder/CEO Jensen Huang at this point in 2024. As the WSJ notes tonight, June 18, 2024, in “Nvidia Tops Microsoft to Become Largest US Company”:

“Nvidia’s shares rose Tuesday to make it the U.S.’s most valuable listed company, underlining the high demand for the company’s artificial intelligence chips.”

“The shares finished the day up 3.5% at a record-high $135.58, with the company reaching a valuation of $3.34 trillion, ahead of Microsoft and Apple—and marking the first time a company other than those latter two sat atop the list since early 2019.”

“Nvidia’s chips have been the workhorses of the AI boom, essential tools in the creation of sophisticated AI systems that have captured the public’s imagination with their ability to produce cogent text, images and audio with minimal prompting.”

“The scramble among tech giants like Microsoft, Meta and Amazon.com to lead the way in AI’s development and capture its hoped-for benefits has led to a chip-buying spree that lifted Nvidia’s revenue to unprecedented heights. In its latest quarter, the company brought in $26 billion, more than triple the same period a year before.”

“Nvidia’s stock, the best performer in the S&P 500 in 2023, has more than tripled in value in the past year. The company’s market value hit $3 trillion earlier this month, less than four months after it reached the $2 trillion mark.”

“Its rise has powered the stock market higher even as many other stocks in the benchmark S&P 500 have underperformed.”

“Nvidia split its shares 10-for-1 earlier this month, a move aimed at lowering the price of each individual share and making it more accessible to investors.”

For Nvidia Founder/CEO Jensen Huang, these are likely to be the “Best of Times, and Worst of Times”, even as Nvidia is a leading AI infrastructure provider in the critical Boxes 1 through 5 of the AI Tech Stack below.

As The Information notes in a lengthy but must-read piece, “Nvidia’s Jensen Huang is on Top of the World. So Why is he Worried?”:

“Nvidia’s CEO, mindful of the downfall of onetime hardware giants like Cisco, is aggressively pushing his company into software and cloud services, putting it in competition with its biggest customers.”

“Huang has become a business rock star and the chief cheerleader of an AI boom that has propelled his microchip firm’s once-in-a-generation growth and profits, lifting its value to the same $3 trillion level enjoyed by both Microsoft and Apple. But behind the glamour and well-deserved victory laps, Huang and his colleagues have also focused on countering the next threat to the business—the likelihood that demand for Nvidia’s chips will eventually slow down.”

“To guard against that possibility, Nvidia has begun selling more software to AI developers and a year ago even set up its own server rental business, DGX Cloud. That move put it directly into competition with its biggest customers—cloud providers such as Microsoft and AWS. Bizarrely enough, DGX Cloud operates on clusters of Nvidia-powered servers that it leases from those cloud providers. Nvidia then rents the servers to its own customers at a higher cost, promising them better computing performance.”

I highlighted this Nvidia strategy last August in “Nvidia the new Nike”, explaining how Nvidia was making sure that its DGX data centers were carried by all of its best AI Cloud company customers.

That strategy is working in spades, even though every top customer, from Amazon AWS to Microsoft Azure, is negotiating aggressively with Nvidia for every minute advantage, as expected. As The Information explains in its piece:

“The move has created tensions within the industry. AWS, the biggest cloud provider, initially resisted letting Nvidia carve out its own rival business within AWS data centers. But after all of AWS’ smaller rivals agreed to Nvidia’s terms, AWS relented, saying it would offer DGX cloud with a newer Nvidia AI chip that other cloud providers didn't have yet. AWS also may have been concerned about upsetting a critical supplier at a time when its chips were hard to come by. AWS spokesperson Patrick Neighorn said that suggestion was speculative and incorrect.”

“Last fall, Nvidia even considered leasing its own data centers for DGX Cloud, according to a person who was involved in those discussions. Such a move would have cut out the cloud providers entirely. Nvidia also recently hired a senior Meta Platforms executive, Alexis Black Bjorlin, to run the cloud business. It isn’t clear whether Nvidia plans to move ahead with its own data centers for DGX Cloud.”

Nvidia also has a strong moat in its exquisitely executed strategy of freely available AI software libraries and infrastructure frameworks like CUDA, which are favored by countless Developers:

“Nvidia got its start 31 years ago selling GPUs for PC gaming systems. Huang laid the foundation for Nvidia’s recent ascent in 2006 by launching Compute Unified Device Architecture, a programming language that taps into computing power provided by graphics chips. CUDA saved developers time by automating the process of building applications that harnessed the chips. In recent years CUDA has become a major factor in Nvidia’s sales: Millions of programmers don’t want to bother learning how to program with rival chips.”

Besides building its next-generation ‘Rubin’ AI GPU family, the company is extending this advantage with new AI software infrastructure, the latest being its just-announced Nemotron-4 family of open LLMs that developers can use to generate Synthetic Data for training LLM AIs.

As I’ve outlined before, helping customers convert raw Data lakes and flows into usable fuel for their LLM AI models is a critical gating item for AI success.

“Only the Paranoid Survive” is a phrase that has carried on from its author, Intel CEO Andy Grove in the PC Tech Wave, to Jensen Huang of Nvidia today. Huang has had that ethos built into his DNA over three decades of building Nvidia, arrived at independently of Andy Grove, and has taken it to far greater heights, by market value, than Intel and Cisco combined.

So far, he’s executing very well on an increasingly complex playing field, and accelerating computing while doing it. Stay tuned.

(NOTE: The discussions here are for information purposes only, and are not meant as investment advice at any time. Thanks for joining us here.)