Yesterday I highlighted the New York Times’ copyright lawsuit against OpenAI and Microsoft. It’s part of an ongoing series of lawsuits over AI data copyright issues that have been on a ‘slow burn’ in 2023.

The legal tussles over AI copyrights are accelerating going into 2024, with this lawsuit but one vivid example in an otherwise quiet holiday period. As The Information puts it succinctly in “New York Times Co.’s OpenAI-Microsoft Suit is a negotiating tactic”, that is what this current step represents for now:

“Before you start dreaming of a high-profile trial with Sam Altman, Satya Nadella and A.G. Sulzberger taking the stand, you should view this for what it is: a negotiating tactic. It would be far too risky for The Times to go to trial over how the fair use doctrine—which allows limited use of copyrighted material—applies to artificial intelligence models. A court determining that OpenAI was operating legally would cut off The Times from getting a cut of the licensing revenue it seeks.”

“The Times said in the lawsuit it approached OpenAI and Microsoft about a licensing deal in April, but the talks have ‘not produced a resolution.’”

All the parties have by now seen that tech waves, from the PC to the Internet and others, create opportunities as well as challenges. In our collective recent memory, it started in 1999 with the now infamous litigation over Napster enabling easy sharing of music tracks ‘ripped’ off CDs, followed by the litigation over Google/YouTube’s innovations in video link sharing. And then, of course, there is how the music industry thoroughly inserted itself into Spotify’s music streaming business model, with a relative ‘win-win’ set of accommodations by all parties.

Although the legal and technical contours of this series of disagreements and tussles are markedly different, it is more than likely that we will see all parties come up with ‘win-win’ settlements and agreements in the coming months of the new year.

This is but a timely move by the New York Times to get OpenAI’s attention, especially after OpenAI’s recent deal with European media giant Axel Springer to license its content. A good explanation of all this can be found in this piece by Mike Masnick of Techdirt titled “The New York Times' lawsuit against OpenAI and Microsoft relies on a false belief that copyright can limit the right to read and process data” (a piece well worth reading in whole):

“Let me let you in on a little secret: if you think that generative AI can do serious journalism better than a massive organization with a huge number of reporters, then, um, you deserve to go out of business. For all the puffery about the amazing work of the NY Times, this seems to suggest that it can easily be replaced by an auto-complete machine.”

“In the end, though, the crux of this lawsuit is the same as all the others. It’s a false belief that reading something (whether by human or machine) somehow implicates copyright. This is false. If the courts (or the legislature) decide otherwise, it would upset pretty much all of the history of copyright and create some significant real world problems.”

As I outlined yesterday in the post on AI building its foundations ‘On the Shoulders of Giants’ or OTSOG, this latest legal entanglement is another step towards all parties figuring out solutions at the end of their ‘negotiations’. Foundation LLM and Generative AI’s use of a never-ending sea of digital data is but a continuation of building on earlier works of others over millennia.

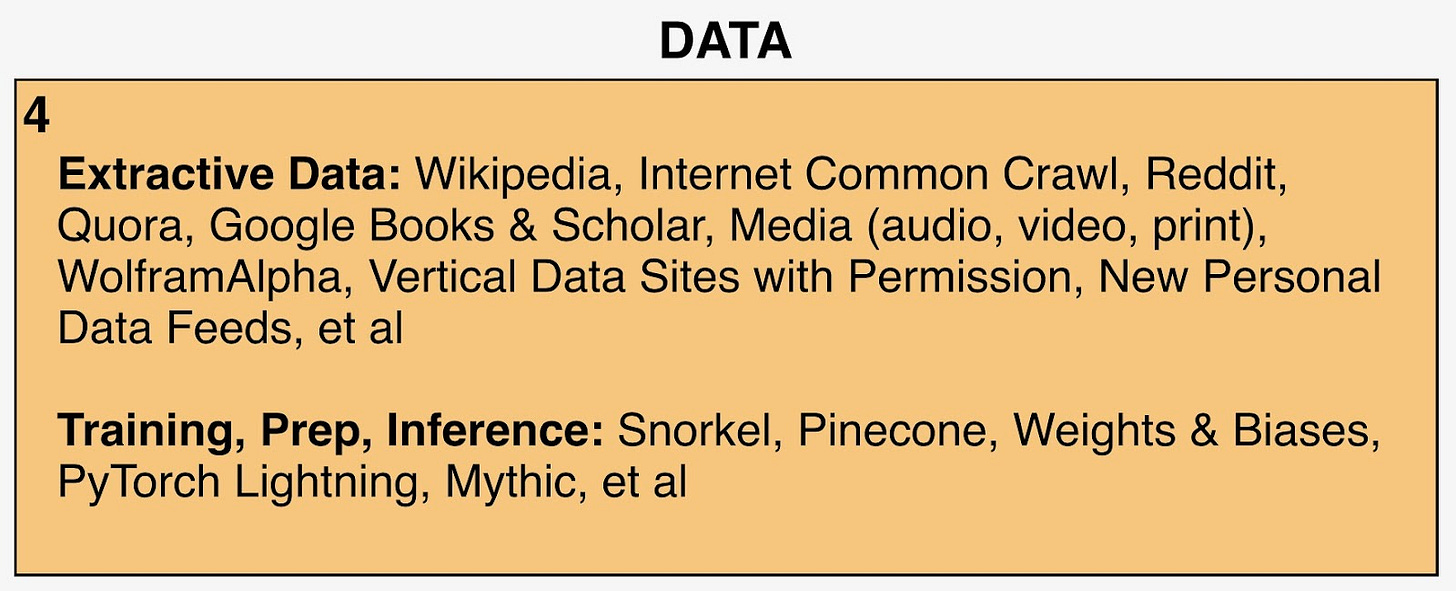

In this case, massive software models running an unimaginable number of parallel computations on AI GPU infrastructure are continuing the historical tradition of ‘OTSOG’, this time based on the work of ‘Giants’ like the New York Times and other publishers, but also what I’ve termed ‘Go-Getter Creators’ of the multi-hundred billion dollar online ‘Creator Economy’, and of course all of us digital ‘Grunts’ producing and publishing content into the ‘data’ rivers online.

But the heart of the legal tussle, and the issue to resolve, is the concept of ‘Fair Use’ by online platforms.

This is an issue that has impacted other technology waves, like Search involving Google and others, and is now being extended into the AI Tech Wave today.

AI companies are focusing on ‘transformative use’ building on the ‘Fair Use’ legal concepts:

“AI companies generally argue that their models do not infringe on copyright laws because they transform the original work, therefore qualifying as fair use — at least under U.S. laws.”

“‘Fair use’ is a doctrine in the U.S. that allows for limited use of copyrighted data without the need to acquire permission from the copyright holder.”

“Some of the key factors considered when determining whether the use of copyrighted material classifies as fair use include the purpose of the use — particularly, whether it’s being used for commercial gain — and whether it threatens the livelihood of the original creator by competing with their works.”

The New York Times legal case is one of the most thorough sets of arguments put together on the other side of this argument. It makes for interesting reading, especially with the dozens of well-articulated examples of how LLM AI technologies do more than they say they do when it comes to copyright infringement. There are already many deep discussions of the pros and cons of their case that are interesting to peruse.

But in the final analysis, as I’ve argued before, the AI toothpaste is out of the tube. It’s a technology that is now far outside the labs of OpenAI, Microsoft, Google and indeed all of the ‘Magnificent 7’ big tech companies. It is being vigorously developed worldwide by companies large and small, with hundreds of billions of dollars seeking opportunities to innovate at scale.

Content providers are important stakeholders in this ecosystem, but they’ve also seen how technologies can create immense new opportunities along with the near-term challenges.

It’s likely that we will see resolution of these matters despite the legal dramas before us. It’s still early days in the AI Tech Wave. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)

Generative AI using copyrighted images, like Disney's Mickey Mouse, would violate "Fair use", especially now as these AI companies are using the sources for commercial purposes. However, the use of text is more problematic, as the copyright covers chunks of text and the whole document. But as Masnick has pointed out, viewing source material, such as Mickey Mouse or a NYTimes article, does not violate copyright. If it did, all those links in HTML would invite copyright violations once clicked.

However, Masnick may be going too far. If an LLM produces copy that uses text verbatim, and that text is output for uses such as publishing, that seems to me (uneducated as I am in copyright law) a potential copyright violation. After all, plagiarism is an offence and must be corrected in published works. [You can quote verbatim if the source is credited].

The greater problem is copyright maximalism, especially by the big media companies that have bought their legislators to keep expanding the length of copyrights well beyond their intended periods.