AI: Can the 'First-Movers' Falter? RTZ #378

...eyes on OpenAI and Nvidia as they set the pace for AI innovation at scale

As we sit here in the sixth month of 2024, a year and a half after OpenAI’s ‘ChatGPT moment’ that kicked off the current AI Tech Wave global boom, it’s clear that Nvidia and OpenAI are the two key companies to watch at the head of the race. As I said on X/Twitter earlier today,

“Nvidia and OpenAI are setting the pace AND benchmarks, for AI scaling in hardware & software 2024 thru 2027. Think Blackwell/Rubin/DGX/NIMs from $NVDA & GPT4/5/6, Sora, Omni, AI Search, & Agents from OpenAI.”

I’ve regularly talked about how Nvidia is setting the pace in AI hardware and software infrastructure, up and down the AI tech stack chart below.

The most recent instance, of course, came after founder/CEO Jensen Huang’s Computex keynote in Taiwan earlier this week, where he outlined the Nvidia AI GPU chip family cadence of Hopper to Blackwell to Rubin over the next three-plus years (including the ‘Ultra’ version cycles for Blackwell and Rubin to come).

And I’ve talked about the half a dozen-plus AI products and services to expect from OpenAI on the LLM AI front, not the least of them being its GPT-5 model, due later this year at the earliest.

These two companies’ product roadmaps are important, as they set the stage for everyone else vis-à-vis the exponential AI Scaling to be expected over at least the next three years.

That holds despite the ongoing debates and discussions on the right amount of focus on AI Safety ahead of AGI (artificial general intelligence), whenever it emerges over the next few years.

The media, of course, will continue to debate if and when these two companies may be surpassed by others. The latest is this piece from The Information, specifically asking “How Long Will OpenAI’s First-Mover Advantage Last?”:

“As companies like OpenAI, Anthropic, Google and Meta Platforms race to leapfrog each other with marginally faster, cheaper or more accurate large language models, a number of developers have told me that these LLMs are beginning to reach parity when it comes to performance.”

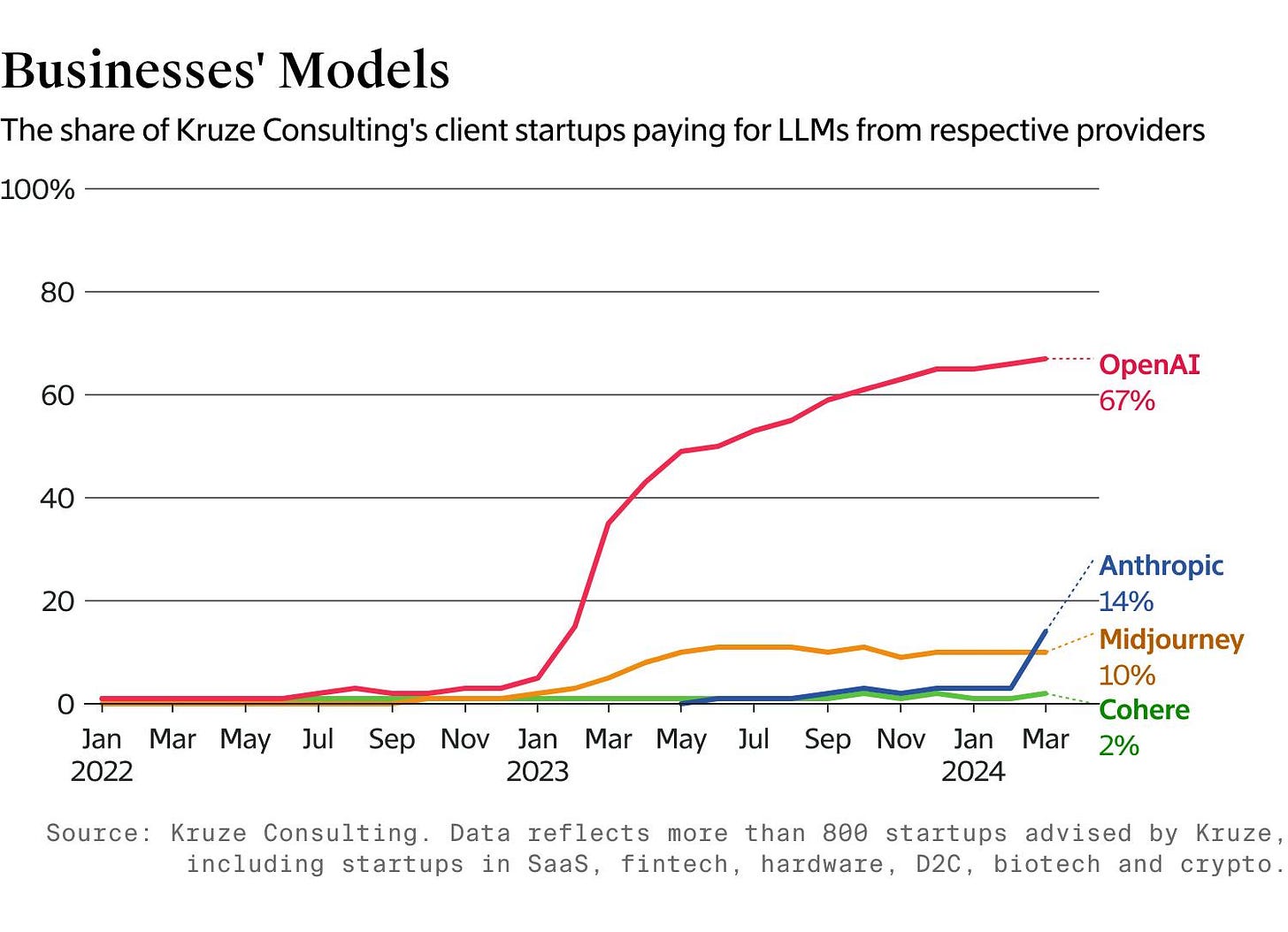

“And now, new exclusive data from Kruze Consulting, a finance and HR consulting firm, backs up those anecdotes. Over the last year, the proportion of the firm’s 800-plus venture-backed startup customers using multiple AI models has grown significantly, expanding from 1% last summer to 15% in April.”

“OpenAI remains by far and away the market leader when it comes to LLM usage, and I’ll be the first to admit that 15% is still a relatively modest number. But, this trend could signal that developers aren’t as married these days to any specific model provider as they once were, a belief that industry practitioners have shared with me as well.”

They go on to highlight what I discussed a few weeks ago: that the other LLM AI companies, both open and closed, are now catching up with OpenAI’s GPT-4 models. The Information notes:

“‘The most recent open-source models today are on par with GPT-4, and until the release of GPT-4o, OpenAI, Anthropic and Google’s models were very close in terms of performance,’ said Ion Stoica, a professor of computer science at the University of California, Berkeley and the co-founder of AI startups Anyscale and Databricks. ‘It does raise the question of whether these models will get commoditized.’”

As I summarized in a response to the WSJ’s recent piece arguing that “The AI Revolution is already losing steam”, it is too early to see AI technologies get commoditized on either the hardware or the software front. The markets are too nascent, and years away from saturation. Specifically, I noted the following points in response to the key concerns and questions:

“The long WSJ piece goes into the following broad points below:”

“The pace of improvement in AIs is slowing”.

“AI could become a commodity”.

“Today’s AIs remain ruinously expensive to run”.

“Narrow use cases, slow adoption”.

Here are my high-level responses to the above key points raised:

“The pace of AI improvements is NOT slowing, but ACCELERATING EXPONENTIALLY, due to ‘AI Scaling Laws’ that for now beat Moore’s Law. In place at least for three years or more. OpenAI, Google, Nvidia and others are increasing AI innovation.”

“Tech only becomes a commodity after market saturation. We’ve barely seen any mainstream adoption by businesses and billions of end users. We’re at least half a decade or more away from that point.”

“Yes, it is VERY expensive to build and deploy ‘Big AI’ today, but will quickly be offset by ‘Small AI’ on local devices in the next 2-3 years.”

“Narrow use cases right now, but real AI applications and services not yet fully developed and deployed. Just starting. Mainstream adoption at scale still ahead.”

“The AI Tech Wave, even with the historic build-out underway of AI Tech infrastructure in the hundreds of billions today, is barely at the beginning of its ‘accelerated computing’ buildout and mainstream use.”

I cite all of these points to keep in mind, at least for this year, while we assess the leading and new players in this AI Tech Wave and evaluate whether the top companies are in danger of losing their ‘first-mover’ advantages.

It can happen, but these are low-probability events as long as the leading companies keep executing at their current pace and setting the benchmarks. Let’s wait and see. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)