AI: When Nvidia goes up the Tech Stack to AI Data Centers & Services RTZ #573

...moving fast as its best customers build their own AI GPU chips

Nvidia and its top AI customers. They’re bound to meet in the middle some day, traveling fast in both directions.

Specifically, between Boxes 1 and 2 of my AI Tech Stack chart at the end of this post.

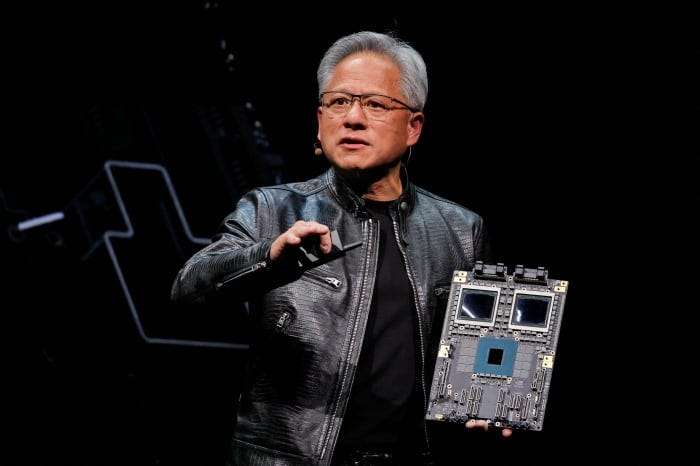

From being top business customers and partners to top direct competitors. Nvidia for now is in the AI catbird seat, providing the bulk of the AI GPUs that drive today’s AI Tech Wave to its top customers: Microsoft/OpenAI, Google, Amazon, Meta, Tesla. Even Apple occasionally buys chips from Jensen. They buy Nvidia Hopper, now Blackwell, and soon Rubin chips in the largest quantities possible.

While they all build their own competing chips as fast as possible, with partners like Broadcom and others, even though it’ll cost billions and take years to make them at scale.

As The Information lays it out in “Nvidia Says It Could Build a Cloud Business Rivaling AWS. Is That Possible?”:

“In the past two years, Nvidia has quietly gone into competition with its biggest customers: cloud providers such as Amazon Web Services and Microsoft. Nvidia now rents out servers powered by its artificial intelligence chips to businesses directly, and also provides software to help them develop AI applications.”

“Nvidia says it is already among the biggest sellers of AI cloud services. And in a little-noticed disclosure, it told investors that in the long run it could generate $150 billion in revenue from software and cloud services—more than either Nvidia or AWS currently generates annually.”

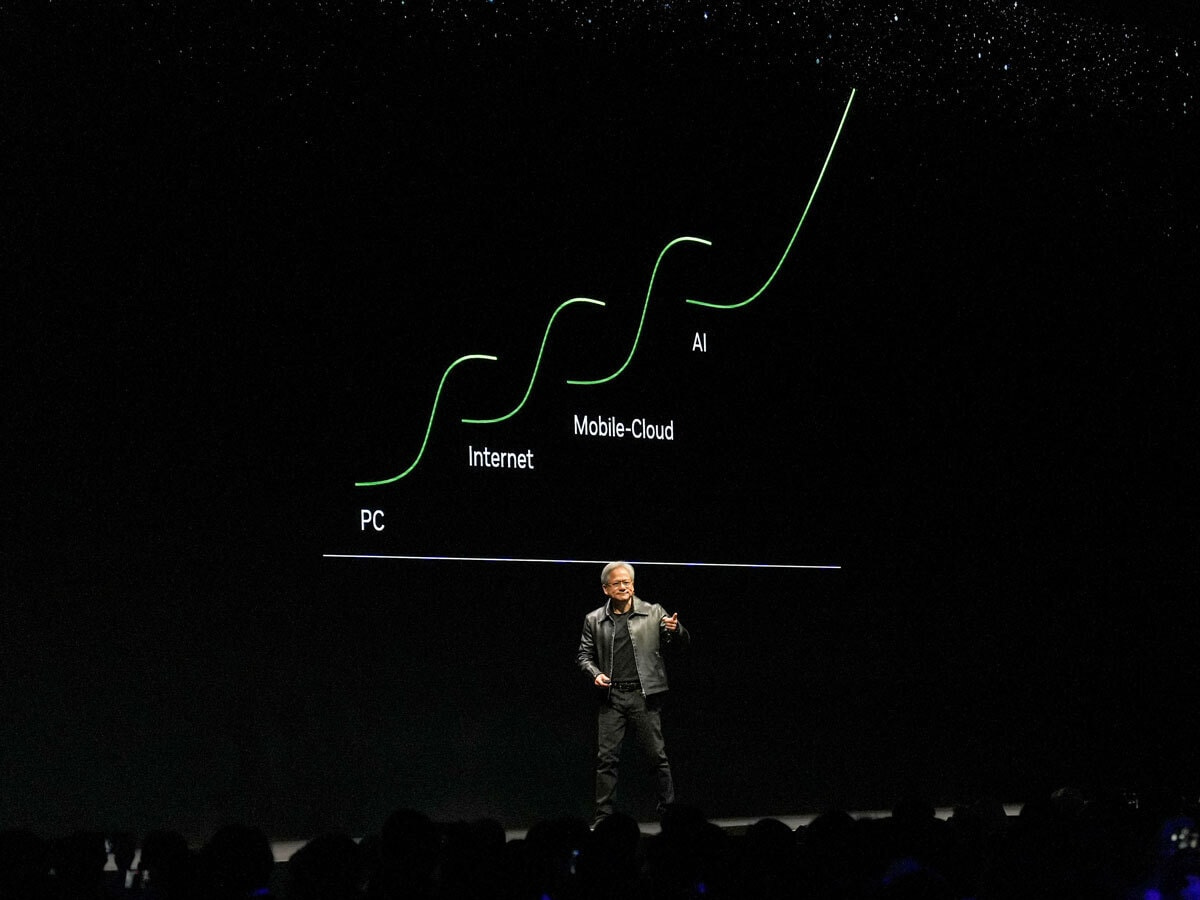

It’s a point not lost on Nvidia founder/CEO Jensen Huang, who’s tracked and executed to these accelerating computing curves for over three decades.

“If you actually step back and you look at how much is spent on data center infrastructure versus enterprise software, the lion’s share of ongoing expenditure in enterprise is on the software side,” said Justin Boitano, Nvidia’s vice president of enterprise AI. “I think we realize that we have a really important role to play there.”

“The Nvidia cloud software business faces numerous challenges and pales in comparison with Nvidia’s chip division, which was recently on pace to generate $120 billion annually. But last month, Nvidia Chief Financial Officer Colette Kress said the firm’s software, service and support business would surpass $2 billion in annualized revenue by the end of 2024. (Company spokespeople declined to say whether those figures included its cloud server rental business.)”

That’s big enough, and getting bigger.

“That means Nvidia already has one of the top AI cloud businesses. Around June, Microsoft hit $1 billion in annualized revenue from selling OpenAI technology to cloud customers. Google Cloud has set a goal to generate $1 billion in revenue from selling AI services in North America this year. And CoreWeave, which runs a cloud service that primarily rents out Nvidia-powered chips, has projected it would generate $2 billion in revenue this year.”

“Nvidia’s move into cloud software has privately irked the cloud providers. Not only is Nvidia competing with them, but its offerings could change the way businesses access the specialized hardware and software for developing AI apps, such as chatbots and agents that automate research, analysis and other tasks.”

I’ve discussed Nvidia backing into the AI cloud business via its DGX product line for AI data centers for some time now.

“Nvidia CEO Jensen Huang hasn’t been afraid to publicly imply he could muscle into cloud providers’ territory by ramping up his company’s own cloud and AI software businesses, known as DGX Cloud and Nvidia AI Enterprise, respectively. He wants to make Nvidia a one-stop shop for businesses looking to develop and run AI applications, from customer service chatbots to tools that might help researchers discover new drugs.”

“This is going to likely be a very significant business over time,” Huang said in a quarterly earnings conference call in February, adding that he envisioned “every enterprise in the world” would buy Nvidia’s software, not just its vaunted chips.

And Jensen knows his customers are aggressively building non-Nvidia options, as they bear hug him on stage at their developer conferences.

“Huang’s ambition in the cloud is also understandable: Amazon and other big Nvidia chip customers such as Microsoft and Google are simultaneously developing quasi competitors to Nvidia’s dominant AI chips. So while those cloud providers have been buying tens of billions of dollars of Nvidia chips to rent them out to companies developing AI, they’re also trying to lure those companies to use the alternative chips, which are less expensive than Nvidia’s.”

“In some cases, Nvidia’s would-be rivals are developing their AI chips with the help of chip designer Broadcom, whose shares have soared in recent days after CEO Hock Tan said the market for Nvidia alternatives was going to boom.”

Nvidia DGX is, of course, a Trojan horse into its best customers’ castles, a point not lost on them.

“But there’s a twist: Nvidia operates DGX Cloud in the data centers of the rival cloud providers—and its expansion promises to bring those rivals extra business, at least for now. Nvidia has said it will increase its own purchases of cloud services from major providers such as AWS to more than $1 billion per year, in part to support DGX Cloud. Nvidia has considered setting up its own data centers and cutting out the cloud providers. It isn’t clear if Huang intends to move in that direction.”

There’s a version of the cartoon above, where Nvidia closes up shop for an hour and runs down ahead of its customers, to pan and dig for gold itself.

An Nvidia direct AI data center business won’t happen overnight.

“Nvidia hasn’t yet created a big organization to expand its AI cloud and software business. For instance, there isn’t one single leader who oversees Nvidia AI Enterprise, according to a person with direct knowledge of the situation. Last year, Nvidia hired Alexis Black Bjorlin from Meta Platforms to run DGX Cloud, but a search on LinkedIn shows only around 200 employees are working on the cloud or enterprise software businesses. Nvidia employs around 30,000 people.”

And Nvidia of course has an advantage over its customers, just as Microsoft had an advantage when it built Microsoft Office applications on top of its Windows operating system and tools, competing with its best customers decades ago.

“I think Nvidia has a slight edge here because they’re so focused.…They know their next line of chips, they know how you need to optimize their GPUs,” he said.

It’s not personal, just business. A question of who gets the higher margins the further they go up the AI Tech Stack chart above. From Box 1 to 2 above. Nvidia already makes some powerful open-source LLM AI models of various sizes in Box 3 below. And with NIMs, NeMo, Omniverse, CUDA, and other hardware/software services in the cloud, it is methodically moving towards Boxes 4, 5 and of course 6, the holy grail of AI applications and services.

Some day soon potentially. Not 2025 soon, but possibly 2026 or 2027 soon. Maybe with the help of some ‘Neoclouds’ along the way.

Nvidia’s version of Wintel, coming from the ‘tel’ side for the ‘win’. Potentially by one company.

It’ll all take some time for both parties to meet in the middle, but the game is afoot on both sides. And Nvidia holds more of the cards relative to its customers than is generally understood. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)