AI: Data Redux, Redefined, Recreated for AI ahead. RTZ #570

...Ilya on 'peak internet data' for AI scaling

The Bigger Picture, December 15, 2024

OpenAI co-founder and now founder of Safe Superintelligence (aka SSI), Ilya Sutskever is back, this time with thoughts on AI Scaling, particularly on the Data we have thus far on the Internet and how we move forward. Ilya and AI data are subjects I’ve covered a fair bit in these pages over the last 569 days. His talk in Vancouver this week (24 minutes with Q&A) is worth a full listen, and it is timely given recent rumblings on AI Scaling slowing down, which I’ve addressed in various parts to date. Ilya’s newly laid out thoughts on these topics are the subject of our Bigger Picture this Sunday.

That matters especially because, without new ways to scale synthetic content, synthetic data, and real new data, there is no viable way to scale AI this AI Tech Wave. It’s the all-important and unique Box 4 in the AI Tech Stack chart below. Regulatory and content issues are all in the stew.

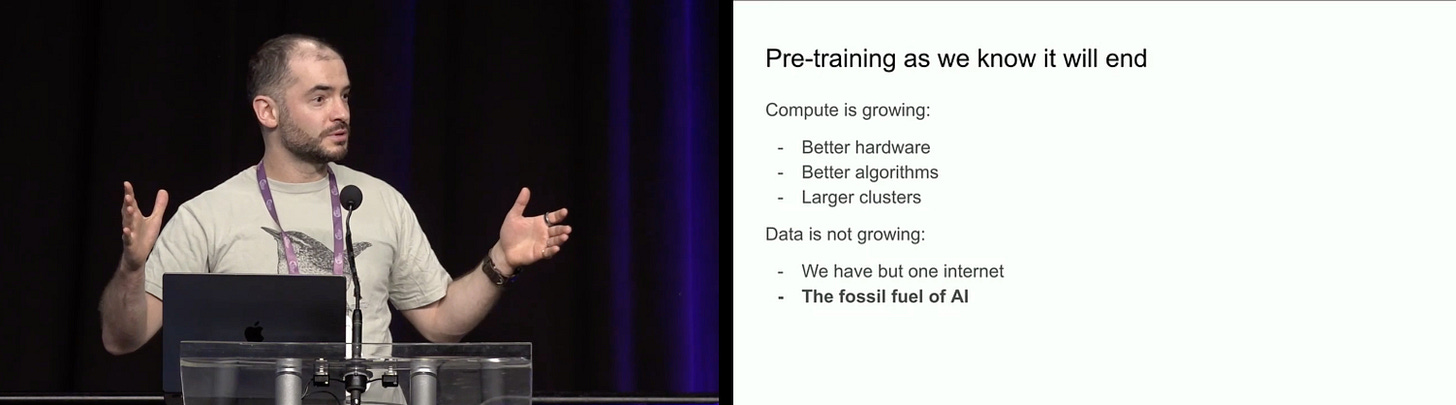

The headlines from Ilya’s talk are attention-grabbing indeed. AI Disruption’s “Ilya Sutskever's Bombshell at NeurIPS: Pre-training is Over, Data Squeezing is Finished” is a case in point:

“Ilya Sutskever’s shocking statement at NeurIPS 2024: the end of pre-training and data exhaustion. The AI industry must adapt to new models beyond large-scale pre-training.”

"Reasoning is unpredictable, so we must start with incredible, unpredictable AI systems."

“Ilya finally made his appearance, and he began with astonishing words.”

“This Friday, Ilya Sutskever, the former Chief Scientist of OpenAI, stated at the global AI summit, "The data we can obtain has reached its peak, and there will be no more."”

“Ilya Sutskever, co-founder and former Chief Scientist of OpenAI, left the company in May this year to start his own AI lab, Safe Superintelligence, making headlines.”

“Since leaving OpenAI, he has stayed out of the media, but this Friday, he made a rare public appearance at the NeurIPS 2024 conference in Vancouver.”

“"We are undoubtedly at the end of pre-training as we know it," Sutskever said on stage.”

OpenAI has of course laid out its roadmap to AGI, which goes through AI Reasoning and Agents as the next two levels beyond ‘chatbots’, which were Level 1 of this AI wave thus far. And Sam Altman has laid out his thoughts on our current ‘Intelligence Era’, and how ‘Deep Learning Worked’. And former OpenAI research VP and now Anthropic co-founder and CEO Dario Amodei has his thoughts on the subject.

OpenAI co-founder Ilya has his own take on AI reasoning and agents. Reuters explains in “AI with reasoning power will be less predictable, Ilya Sutskever says”:

“Accepting a "Test Of Time" award for his 2014 paper with Google's Oriol Vinyals and Quoc Le, Sutskever said a major change was on AI's horizon.”

“An idea that his team had explored a decade ago, that scaling up data to "pre-train" AI systems would send them to new heights, was starting to reach its limits, he said. More data and computing power had resulted in ChatGPT that OpenAI launched in 2022, to the world's acclaim.”

“"But pre-training as we know it will unquestionably end," Sutskever declared before thousands of attendees at the NeurIPS conference in Vancouver. "While compute is growing," he said, "the data is not growing, because we have but one internet."”

“Sutskever offered some ways to push the frontier despite this conundrum. He said technology itself could generate new data, or AI models could evaluate multiple answers before settling on the best response for a user, to improve accuracy. Other scientists have set sights on real-world data.”

“But his talk culminated in a prediction for a future of superintelligent machines that he said "obviously" await, a point with which some disagree. Sutskever this year co-founded Safe Superintelligence Inc in the aftermath of his role in Sam Altman's short-lived ouster from OpenAI, which he said within days he regretted.”

“Long-in-the-works AI agents, he said, will come to fruition in that future age, have deeper understanding and be self-aware. He said AI will reason through problems like humans can.”
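For readers who like to see ideas in code, the approach Reuters describes above, where a model evaluates multiple answers before settling on the best one, is commonly called best-of-N sampling. Here is a minimal sketch in Python. The `toy_generate` and `toy_score` functions are hypothetical stand-ins I made up for illustration; a real system would call a language model and a learned or rule-based verifier, and nothing here reflects how any particular lab implements it.

```python
import random
from typing import Callable

def best_of_n(prompt: str,
              generate: Callable[[str, random.Random], str],
              score: Callable[[str, str], float],
              n: int = 8,
              seed: int = 0) -> str:
    """Sample n candidate answers and return the highest-scoring one."""
    rng = random.Random(seed)
    candidates = [generate(prompt, rng) for _ in range(n)]
    return max(candidates, key=lambda answer: score(prompt, answer))

# Hypothetical toy "model": guesses an integer between 0 and 20.
def toy_generate(prompt: str, rng: random.Random) -> str:
    return str(rng.randint(0, 20))

# Hypothetical toy "verifier": prefers answers closer to 12 (that is, 7 + 5).
def toy_score(prompt: str, answer: str) -> float:
    return -abs(int(answer) - 12)

if __name__ == "__main__":
    print(best_of_n("What is 7 + 5?", toy_generate, toy_score, n=8))
```

The design point is that extra compute is spent at answer time (more samples, more checking) rather than on more training data, which is one way around the ‘one internet’ constraint.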

The issue is growing unpredictability with growing reasoning:

“There's a catch.”

“"The more it reasons, the more unpredictable it becomes," he said.”

“Reasoning through millions of options could make any outcome non-obvious. By way of example, AlphaGo, a system built by Alphabet's DeepMind, surprised experts of the highly complex board game with its inscrutable 37th move, on a path to defeating Lee Sedol in a match in 2016.”

“Sutskever said similarly, "the chess AIs, the really good ones, are unpredictable to the best human chess players."”

This is not just about AI hallucinations, for which LLM AI results are already famous. It is about the less ‘predictable’ ways in which the artificial neural nets of our AI machines start to ‘reason’ and deploy ‘agents’ in the real world.

Not ‘scary’ per se, but different. Especially when deployed at scale over the coming years.

That’s what he asks us to ponder and think through going forward. He also points to how the AI being built this AI Tech Wave ‘is about to change’, as The Verge recounts him saying:

“We’ve achieved peak data and there’ll be no more. We have to deal with the data that we have. There’s only one internet.”

He’s referring of course to Box 4 in the chart above.

“Next-generation models, he predicted, are going to “be agentic in real ways.” Agents have become a real buzzword in the AI field. While Sutskever didn’t define them during his talk, they are commonly understood to be an autonomous AI system that performs tasks, makes decisions, and interacts with software on its own.”

“Along with being “agentic,” he said future systems will also be able to reason. Unlike today’s AI, which mostly pattern-matches based on what a model has seen before, future AI systems will be able to work things out step-by-step in a way that is more comparable to thinking.”

There was optimism and confidence in the following:

“The more a system reasons, “the more unpredictable it becomes,” according to Sutskever. He compared the unpredictability of “truly reasoning systems” to how advanced AIs that play chess “are unpredictable to the best human chess players.””

“They will understand things from limited data,” he said. “They will not get confused.”

Particularly notable were his comparisons to biological systems:

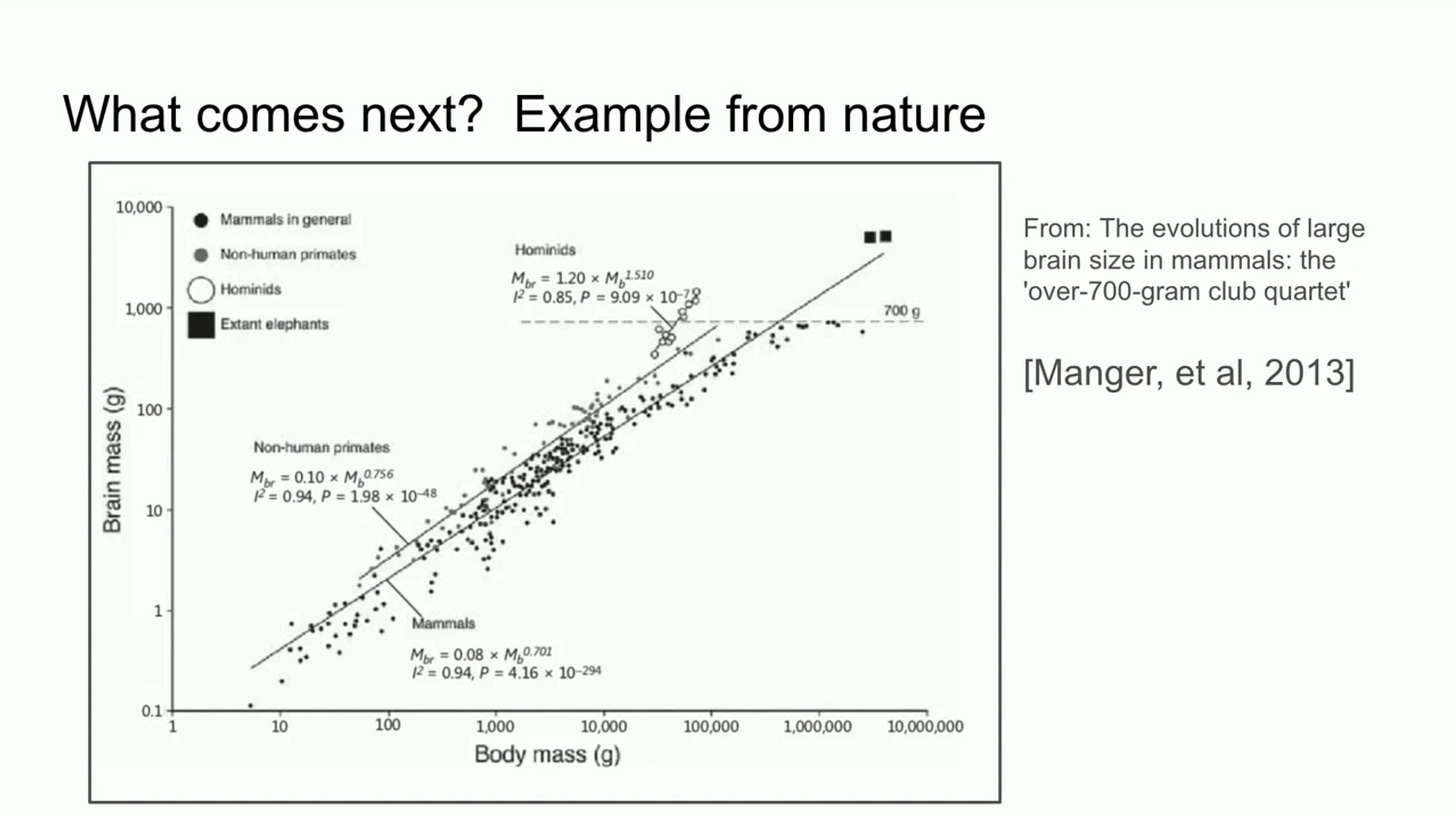

“On stage, he drew a comparison between the scaling of AI systems and evolutionary biology, citing research that shows the relationship between brain and body mass across species. He noted that while most mammals follow one scaling pattern, hominids (human ancestors) show a distinctly different slope in their brain-to-body mass ratio on logarithmic scales.”

“He suggested that, just as evolution found a new scaling pattern for hominid brains, AI might similarly discover new approaches to scaling beyond how pre-training works today.”

The truly interesting line is the small, third one under ‘Hominids’ below, with an up and to the right slope at a steeper angle than the rest. That’s what he thinks could be replicated with AI systems at true Scale.
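To make the ‘different slope on logarithmic scales’ point concrete: a straight line on a log-log chart corresponds to a power law, brain mass = c × (body mass)^k, and the slope of the line is the exponent k. A steeper line means brain mass grows faster for each increase in body mass. The short Python sketch below recovers that slope from data. The coefficient and the exponents 0.6 and 0.9 are made-up placeholders for two hypothetical groups, not values from Ilya’s slide or the underlying research.

```python
import math

def loglog_slope(body_masses, brain_masses):
    """Least-squares slope of log10(brain) against log10(body).

    For data that follows brain = c * body**k, this slope equals k.
    """
    xs = [math.log10(m) for m in body_masses]
    ys = [math.log10(m) for m in brain_masses]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var

def synthetic_group(coefficient, exponent, body_masses):
    """Generate illustrative brain masses from an assumed power law."""
    return [coefficient * m ** exponent for m in body_masses]

# Illustrative body masses spanning three orders of magnitude (arbitrary units).
body = [1, 10, 100, 1000]

# Two hypothetical groups with different assumed exponents.
group_a = synthetic_group(0.01, 0.6, body)  # shallower line
group_b = synthetic_group(0.01, 0.9, body)  # steeper line

if __name__ == "__main__":
    print("group A slope:", round(loglog_slope(body, group_a), 3))
    print("group B slope:", round(loglog_slope(body, group_b), 3))
```

The analogy in the talk is that a new scaling approach for AI would show up the same way: not as a point further along the existing line, but as a line with a different slope.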

Again, the whole talk is worth a listen, particularly for his conviction levels.

They’re reasonable points to focus on this Sunday and beyond. That’s the Bigger Picture to get our attention for now. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)