AI: Amazon AWS 'supersizes' AI Data Centers with its own AI GPU chips. RTZ #559

...Apple, Anthropic, Microsoft/OpenAI, Nvidia and others accelerated in the competitive and cooperative mix

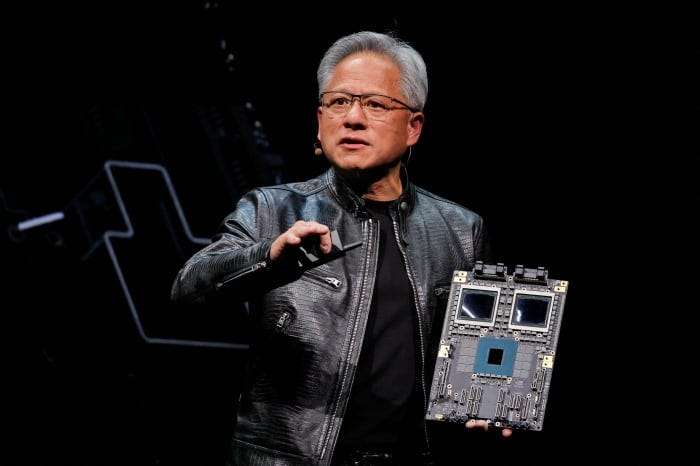

A few days ago I discussed the accelerating trend in this AI Tech Wave to ‘Supersize’ AI Data Centers with hundreds of thousands of AI GPUs and related infrastructure. The primary beneficiary of this ‘supersizing’ by companies like Elon Musk’s xAI, Mark Zuckerberg’s Meta, Microsoft/OpenAI and others is, of course, Nvidia.

Now Amazon is accelerating work on its own AI chips, as I have also discussed, to join the ‘Supersizing’ trend. This time it is doing so via its expanded partnership with #2 LLM AI company Anthropic.

The WSJ explains in “Amazon Announces Supercomputer, New Server Powered by Homegrown AI Chips”:

“The company’s megacluster of chips for artificial-intelligence startup Anthropic will be among the world’s largest, it said, and its new giant server will lower the cost of AI as it seeks to build an alternative to Nvidia”.

“Amazon’s cloud computing arm Amazon Web Services Tuesday announced plans for an “Ultracluster,” a massive AI supercomputer made up of hundreds of thousands of its homegrown Trainium chips, as well as a new server, the latest efforts by its AI chip design lab based in Austin, Texas.”

“The chip cluster will be used by the AI startup Anthropic, in which the retail and cloud-computing giant recently invested an additional $4 billion. The cluster, called Project Rainier, will be located in the U.S. When ready in 2025, it will be one of the largest in the world for training AI models, according to Dave Brown, Amazon Web Services’ vice president of compute and networking services.”

“Amazon Web Services also announced a new server called Ultraserver, made up of 64 of its own interconnected chips, at its annual re:Invent conference in Las Vegas Tuesday.”

“Additionally, AWS on Tuesday unveiled Apple as one of its newest chip customers.”

This is a plot twist, as CNBC elaborates:

“Apple is currently using Amazon Web Services’ custom artificial intelligence chips for services like search and will evaluate if the company’s latest AI chip can be used to pretrain its models like Apple Intelligence.”

“Apple revealed its usage of Amazon’s proprietary chips at the annual AWS Reinvent conference on Tuesday. Benoit Dupin, Apple’s senior director of machine learning and AI, took the stage to discuss how Apple uses the cloud service. It’s a rare example of the company officially allowing a supplier to tout them as a customer.”

“We have a strong relationship, and the infrastructure is both reliable and able to serve our customers worldwide,” Apple’s Dupin said.

“Apple’s appearance at Amazon’s conference and its embrace of the company’s chips is a strong endorsement of the cloud service as it vies with Microsoft Azure and Google Cloud for AI spending. Apple uses those cloud services, too.”

“Benoit said Apple had used AWS for more than a decade for services including Siri, Apple Maps and Apple Music. Apple has used Amazon’s Inferentia and Graviton chips to serve search services, for example, and Benoit said”

As I’ve described before, Apple has been executing on a strategy to use its own Apple Silicon chips in the cloud. This announcement with Amazon AWS augments that cloud strategy, while Apple is also using OpenAI as the first of presumably several LLM AI partners for external AI queries by its billions of Apple ecosystem users.

These announcements again emphasize the ‘Frenemies’ nature of the current AI industry environment, where the biggest companies are working together and aggressively competing at the same time.

For Amazon, it was a big day of announcements:

“Combined, Tuesday’s announcements underscore AWS’s commitment to Trainium, the in-house-designed silicon the company is positioning as a viable alternative to the graphics processing units, or GPUs, sold by chip giant Nvidia.”

“The market for AI semiconductors was an estimated $117.5 billion in 2024, and will reach an expected $193.3 billion by the end of 2027, according to research firm International Data Corp. Nvidia commands about 95% of the market for AI chips, according to IDC’s December research.”
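To put those IDC figures in perspective, here is a minimal sketch of the annual growth rate they imply. It assumes three compounding years between the 2024 estimate and the end-of-2027 forecast, which is my reading of the quote and not something IDC states; the function name `implied_cagr` is just an illustrative helper.

```python
def implied_cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


# IDC figures quoted above, in billions of US dollars.
market_2024 = 117.5
market_2027 = 193.3

# Assumption: three annual compounding periods (2024 -> 2027).
growth = implied_cagr(market_2024, market_2027, years=3)
print(f"Implied annual growth: {growth:.1%}")
```

Under that assumption the market would be growing at roughly 18% a year, a useful yardstick when weighing how much room there is for alternatives like Trainium.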

Even the head of Amazon AWS acknowledges Nvidia’s leadership in the AI GPU market, despite Amazon’s own chip efforts:

“Today, there’s really only one choice on the GPU side, and it’s just Nvidia,” said Matt Garman, chief executive of Amazon Web Services. “We think that customers would appreciate having multiple choices.”

“A key part of Amazon’s AI strategy is to update its custom silicon so that it can not only bring down the costs of AI for its business customers, but also give the company more control over its supply chain. That could also make AWS less reliant on Nvidia, one of its closest partners, whose GPUs the company makes available for customers to rent on its cloud platform.”

Amazon also announced its own Nova LLM AI Frontier models in various sizes.

And as The Information also explains, these moves keep new possibilities open, potentially alongside the Microsoft/OpenAI partnership:

“But if Garman wants to ensure that OpenAI, whose growth has surged, doesn’t take an even bigger percentage of enterprise IT budgets that might otherwise flow to AWS, he might want to find a way to make it easier for companies to use OpenAI technology together with AWS’ core storage and computing services.”

“As OpenAI and Microsoft get some separation from one another—at least when it comes to their computing arrangements—AWS could look to strike a deal in which it resells the startup’s models, similar to how it resells Anthropic’s. Now that would be needle-moving.”

I point to these developments as illustrating the ‘Frenemies’ state of play for the AI Tech Wave for at least the next two to three years.

Of course, regulatory uncertainties also swirl around core industry partnerships and alliances of convenience.

Amazon’s moves today vividly illustrate this ‘coopetition’ environment, even as the industry invests billions in ever larger AI data centers and expanded AI products for its global customers. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)

🤔It’s going to get interesting between these Frenemies.