AI: Attention/Transformer Authors Go Fishing

...to a whole new watering hole

(UPDATE: Sakana AI, the company discussed in the post below, secured an additional $100 million in funding at a billion-dollar valuation, as reported June 14, 2024.)

It isn’t every day that one of the eight authors of the seminal AI paper that launched the current global LLM AI tech wave does something that draws our Attention. Yes, pun intended.

The eight authors co-wrote the now-pivotal 2017 Google AI paper, “Attention Is All You Need”, that kicked off the ‘T’ as in ‘Transformers’: the ‘T’ in OpenAI’s ChatGPT, and in the underlying GPT-4 foundation LLM AI models of today and those to come.

As Stephanie Palazzolo at The Information notes:

“Talk about breaking from tradition. A key architect of the boom in large-language models has started an artificial intelligence research lab that’s taking a very different approach from OpenAI and Google.”

“Llion Jones, co-author of the iconic Google research paper that paved the way for chatbots like ChatGPT, and David Ha, former head of research at embattled AI software developer Stability AI, said they are developing a new kind of AI model that will require a lot less maintenance and monitoring work from ML engineers.”

“Surprisingly, the lab, Sakana AI, isn’t relying on Jones’s breakthrough research on transformers, a type of machine-learning model that OpenAI has used to great effect. Instead of a transformers-based model, the two are creating a new kind of foundation model based on what they call “nature-inspired intelligence.” (This connection to nature is one of the reasons why they decided to name their lab after the Japanese word for “fish.”)”

Their new endeavor will be based in Tokyo rather than typical hot spots like Silicon Valley. And it will focus on more basic research than the big tech companies like Google, Microsoft, Meta, Amazon, Apple, Elon/X/xAI, and of course OpenAI. Those companies, along with a slew of private unicorns, are pouring billions of dollars of capital into Foundation LLM AI GPU compute and into reinforcement-learning-driven, iterative innovation on what’s come before. As The Information piece continues:

“The recent focus of AI researchers, at Google and other companies, to commercialize existing technology means that there’s less room for more bleeding edge research, Jones said. But research for the sake of research is a principle the two cofounders aim to uphold at Sakana, and is one of the reasons why they decided to base the company in Tokyo, thousands of miles away from Silicon Valley’s AI hype.”

“The cofounders didn’t disclose much on fundraising plans, though they said that they’re “working out some of the details on the funding side.”” (Read: open for business.)

And they’re definitely fishing in new waters, without specific product plans:

“The duo said they don’t know yet what product they might try to develop.”

“But nature-inspired intelligence has been a running theme throughout Ha’s career. In addition to his work on permutation-invariant neural networks, he’s also experimented with world models, which gather data from their surroundings to build a mental model of their environments, similar to humans.”

“‘Nature-inspired intelligence’ might sound like something out of a ’60s stoner movie, but the concept has a long history in AI research. The idea is to create software that is able to learn and search for solutions rather than one that must be engineered step-by-step, Jones said.”

As Jones and his partner Ha went on to explain to Reuters:

“After the famous paper came out, advances in generative AI foundation models have centered around making the “transformer”-based models larger and larger. Instead of doing that, Sakana AI will focus on creating new architectures for foundation models, Jones told Reuters.

“Rather than building one huge model that sucks all this data, our approach could be using a large number of smaller models, each with their own unique advantage and smaller data set, and having these models communicate and work with each other to solve a problem,” said Ha, though he clarified this was just an idea.”

So it’s early days, and there’s a lot of basic work (read: ‘Research’) to be done. As I’ve discussed before, even with the billions already invested, there is still so much about LLM AI that we barely understand, and so much early innovation still ahead. But the Sakana AI story is notable given the pedigree of its researchers, now entrepreneurs.

Most of the eight authors of the core 2017 Google Attention/Transformer AI paper have gone on to found well-funded AI startups in the current environment. Character.ai, Cohere, Adept, Essential AI, and others are just some of the familiar names, and I’ve written about some of them already. The FT chronicles how the team came together, how the paper came about, and where its authors went next:

“Like all scientific advances, the transformer was built on decades of work that came before it, from the labs of Google itself, as well as its subsidiary DeepMind, the Facebook owner Meta and university researchers in Canada and the US, among others.

“But over the course of 2017, the pieces clicked together through the serendipitous assembly of a group of scientists spread across Google’s research divisions. The final team included Vaswani, Shazeer, Uszkoreit, Polosukhin and Jones, as well as Aidan Gomez, an intern then studying at the University of Toronto, and Niki Parmar, a recent masters graduate on Uszkoreit’s team, from Pune in western India. The eighth author was Lukasz Kaiser, who was also a part-time academic at France’s National Centre for Scientific Research.”

The glue that held the team together was language: how words come together in human vernacular, and, most importantly, how newly emerging GPU hardware could run massive numbers of probabilistic computations on ever-larger amounts of data to predict what comes next in a response:

“The binding forces between the group were their fascination with language, and their motivation for using AI to better understand it. As Shazeer, the veteran engineer, says: “Text is really our most concentrated form of abstract thought. I always felt that if you wanted to build something really intelligent, you should do it on text.””

Their collective work saw the academic light of day in 2017:

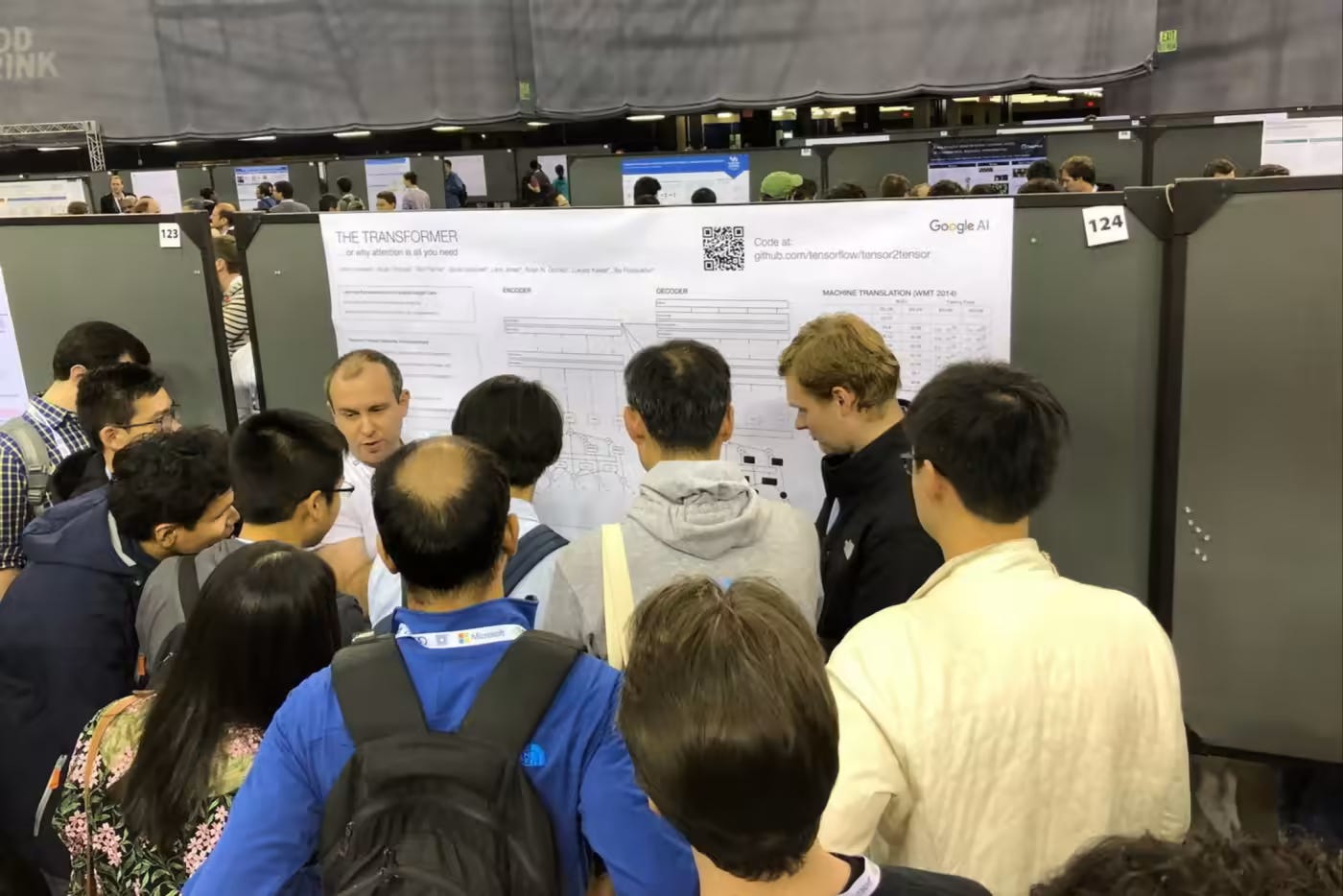

“A peer-reviewed version of the paper was published in December 2017, just in time for NeurIPS, one of the most prestigious machine learning conferences held in southern California that year. Many of the transformers authors remember being mobbed by researchers at the event when displaying a poster of their work. Soon, scientists from organisations outside Google began to use transformers in applications from translation to AI-generated answers, image labeling and recognition. At present, it has been cited more than 82,000 times in research papers.”

That was July last year. The rest, as they say, is history.

By now, the authors have gone on to greener pastures, as Reuters notes:

“All the authors on the “Attention Is All You Need” paper have now left Google. The authors' new ventures have attracted millions in funding from venture investors, including Noam Shazeer, who is running AI chatbot startup Character.AI, and Aidan Gomez, who founded large language model startup Cohere.”

Jones and Ha are the latest to go fishing in fresh waters. Their determination to venture out of the not-so-old Foundation LLM AI box stands out in this conversation with CNBC:

“I would be surprised if language models were not part of the future,” said Ha, who left Google last year to be head of research at startup Stability AI. He said he doesn’t want Sakana to just be another company with an LLM.”

New directions indeed. Happy Fishing!

It’s always heartening to see founders with fresh takes on old problems. We’re seeing a fair bit of that with all of the famous AI ‘Attention’ paper authors.

Some of these, and likely other AI startup stories, may over time become as iconic as Bill Gates/Paul Allen, Steve Jobs/Steve Wozniak, Sergey Brin/Larry Page and others were in tech waves past: bridges to the tech wave of today and to the ones that follow in the years ahead. These are early days, and it takes a while for the fish to bite. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here).