AI: Nvidia's vertical AI Data Center Strategy. RTZ #468

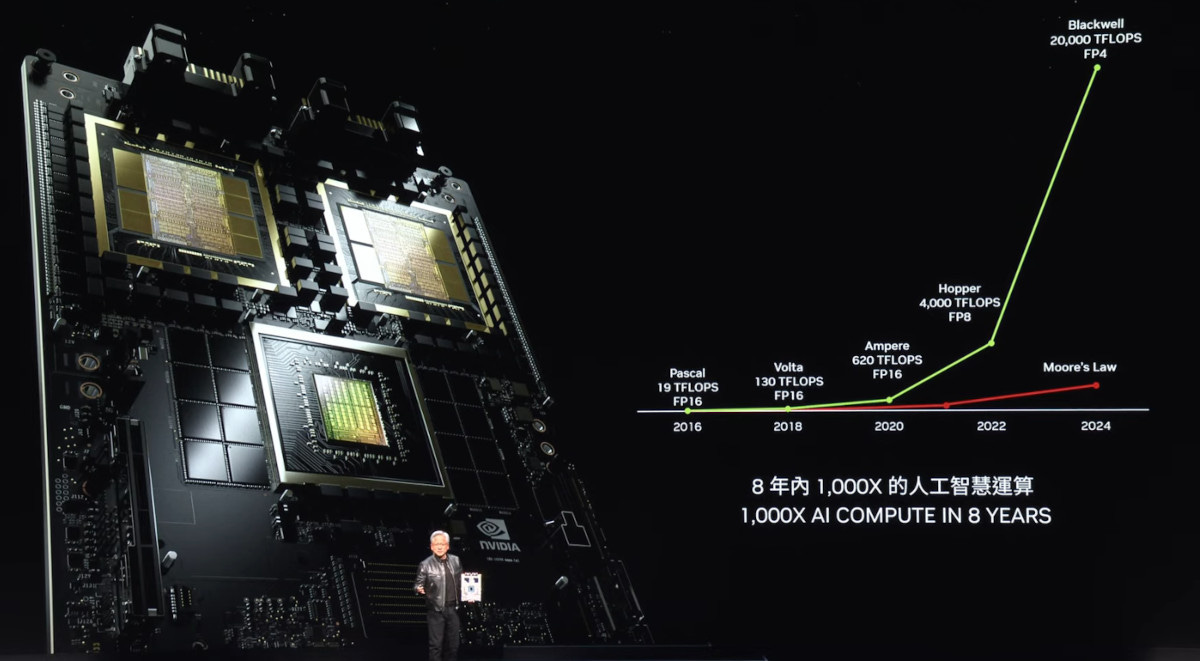

...a market leading strategy of fusing AI GPU chips with AI data centers, and a formidable software moat

As the saying goes, it’s generally important to ‘see the forest for the trees’.

In my post a few days ago, “Nvidia’s formidable Software Moat…not just a ‘chip company’”, in this AI Tech Wave, I noted:

“Nvidia sells entire systems to fill AI Data centers, far bigger than even IBM could dream about in its heyday. And increasingly Nvidia ‘rents’ its systems both directly and indirectly via its AI Cloud Data center partners like Amazon AWS, Microsoft Azure, Google Cloud, Oracle Cloud, CoreWeave and many, many others. Via its AI Data center brand names like DGX (see above), and soon NIMs (Nvidia Inference Microservices), NeMo, and other products. With options of air-cooled or liquid-cooled. That’s extra.”

“And with the interconnecting open source Software, they have a moat to beat most moats. And it’s far from being ‘just a chip’ company. They’re building vertical AI data centers that they can provide all the parts for, then populate their Cloud data center company customers’ facilities around the world. Their DGX data centers are an increasingly preferred alternative in these customers' data centers.”

“It’s an AI Operating System company. A ‘Wintel’ of the AI age. Upside down with hardware empowering the software. All in one company instead of two in the PC wave, Microsoft and Intel.”

“Only this time, the software is open source, and increasingly the invisible key to the profit gusher that has already become visible. And likely becoming more sustainable as time goes on.”

“All while this AI Tech Wave is barely getting started.”

The key point above, beyond its formidable software moat, is that Nvidia is also building a formidable AI data center strategy under the DGX brand, and doing so in partnership with its top cloud data center customers like Microsoft, Google, Amazon, Meta and others.

This point is underlined in the WSJ’s latest piece “Nvidia Takes an Added Role Amid AI Craze: Data-Center Designer”:

“Beyond its chips, the company is playing a growing role in shaping the server farms where AI is produced and deployed”.

“Nvidia dominates the chips at the center of the artificial-intelligence boom. It wants to conquer almost everything else that makes those chips tick, too.”

“Chief Executive Jensen Huang is increasingly broadening his company’s focus—and seeking to widen its advantage over competitors—by offering software, data-center design services and networking technology in addition to its powerful silicon brains.”

“He is trying to build Nvidia into more than a supplier of a valuable hardware component: a one-stop shop for all the key elements in the data centers where tools like OpenAI’s ChatGPT are created and deployed, or what he calls ‘AI factories.’”

I’ve discussed this ‘AI Factory’ strategy as part of Nvidia’s ‘Accelerated Computing’ framework in the Healthcare vertical.

And of course this is echoed by competitors like AMD, which is racing to build its own AI data center strategy with its $5 billion purchase of ZT Systems just a few days ago. Nvidia, of course, has been executing on that strategy for years now, starting with its 2019 acquisition of networking company Mellanox for $7 billion.

As the WSJ summarizes it:

“Nvidia is building on the effectiveness of its 17-year-old proprietary software, called CUDA, which enables programmers to use its chips. More recently, Huang has been pushing resources into a superfast networking protocol called InfiniBand, after acquiring the technology’s main equipment maker, Mellanox Technologies, five years ago for nearly $7 billion. Analysts estimate that InfiniBand is used in most AI-training deployments.”

“Nvidia is also building a business that supplies AI-optimized Ethernet, a form of networking widely used in traditional data centers. The Ethernet business is expected to generate billions of dollars in revenue within a year, Chief Financial Officer Colette Kress said Wednesday.”

“More broadly, Nvidia sells products including central processors and networking chips for a range of other data-center equipment that is fine-tuned to work seamlessly together. And it offers software and hardware setups catered to the needs of specific industries such as healthcare and robotics.”

“He has verticalized the company,” Raul Martynek, CEO of data-center operator DataBank said of Huang’s strategy. “They have a vision around what AI should be, what software and hardware components are needed to make it so users can actually deploy it.”

Nvidia’s AI data center strategy is targeting a market that founder/CEO Jensen Huang sees as a trillion dollar opportunity over this decade. One needs to see the AI data center ‘forest’ for the AI GPU chips that are the towering ‘trees’. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)