AI: Nvidia's formidable Software Moat. RTZ #445

...not just a 'chip' company in this AI Tech Wave

The Bigger Picture, Sunday August 11, 2024

At a recent AI investment conference, I was asked why Nvidia isn’t just a ‘chip’ company. Yes, an ‘AI’ chip company, but a ‘chip’ company nevertheless.

Why should it be a company worth almost three trillion dollars in market value, with software-like margins, when it has no discernible and defensible ‘moat’?

I explained that Nvidia is a company with a formidable ‘moat’, complete with flying dragons.

I added that, having tracked tech companies for over three decades across at least half a dozen technology waves, I consider Nvidia in this AI Tech Wave to be one of the most formidable ‘Software’ companies with impressive ‘moats’ that I’ve seen to date. It is increasingly far from being just a ‘chip’ company, and its software is at least one of its most intractable ‘moats’. That is ‘The Bigger Picture’ I’d like to explain this Sunday.

First, let me put the ‘just a chip company’ part in context. In most prior tech waves, hardware companies, whether chips, storage, networking, or computers, sat in the lower parts of the technology stack. You can see this in the tech stack charts below. Here is the PC Tech Stack from the 1980s:

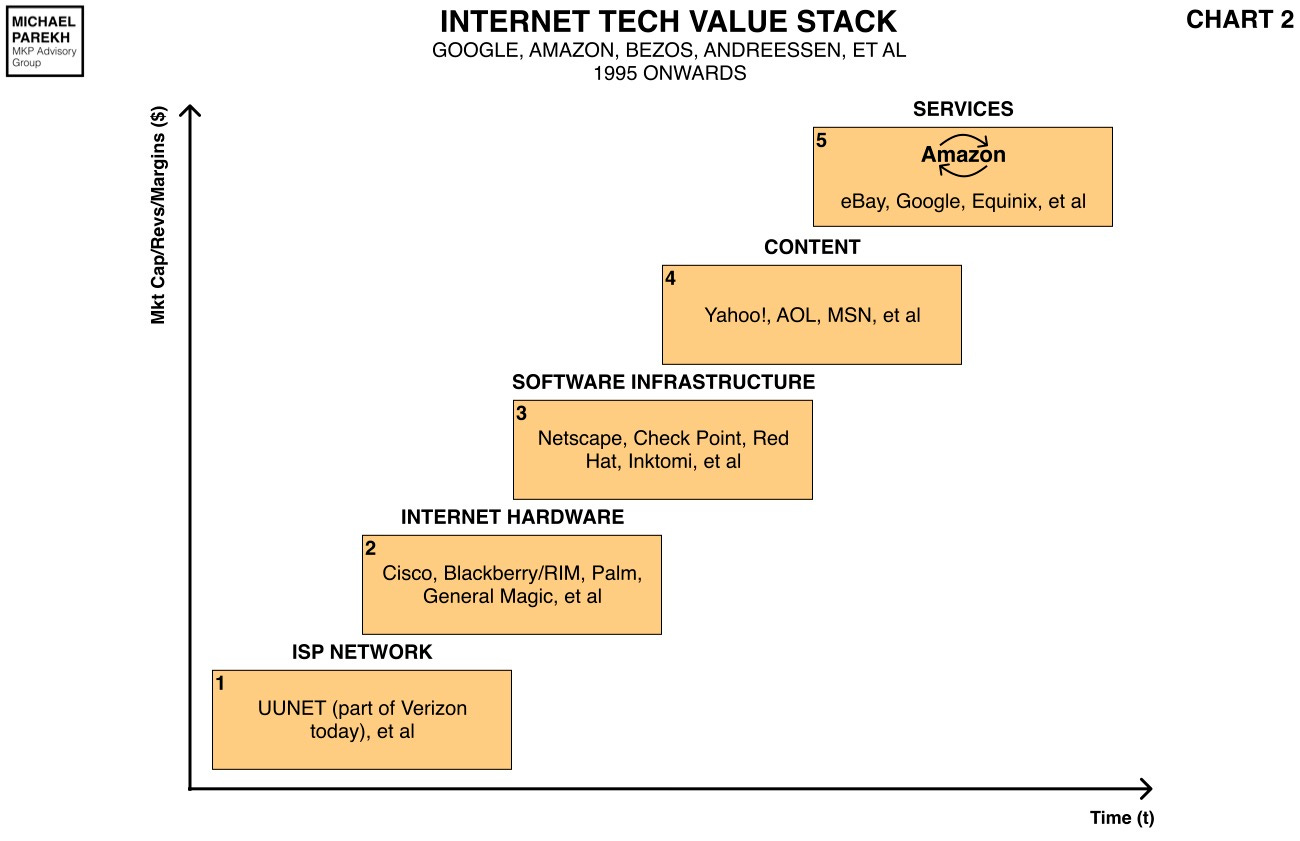

And the Internet Tech Value Stack from the 1990s:

These lower layers are the most prone to commoditization and lower margins as the tech wave gathers steam and grows rapidly (the ‘Y’ axis on the left), especially at the saturation point of the tech wave.

But in this AI Tech Wave, Nvidia, despite also starting in the lower Box 1 of the tech stack chart below, has managed to garner margins north of 80%, akin to those of the most profitable software companies, which typically sit in Box 6, up and to the right.

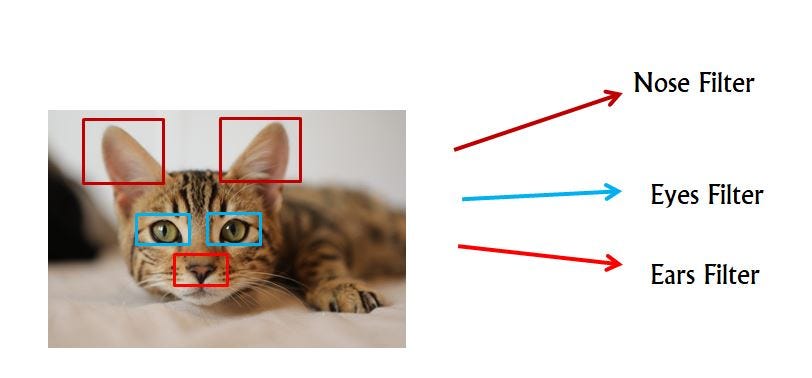

The secret to Nvidia’s ‘upside down’ outperformance is that its founder/CEO Jensen Huang architected and executed a brilliant AI Accelerated Computing Strategy, based as much on Software as on Hardware. Jensen jumped on the implications of ‘Convolutional Neural Networks’ like AlexNet in 2012, when AI could finally find a ‘cat’ in millions of images via GPUs.

He then went ‘all-in’ with Nvidia, placing increasing ‘bets’ over the next dozen years.

All of it goes into its industry-leading AI GPU chips, AI data center clusters, and related networking and other AI hardware and software infrastructure. And most of that software is ‘open source’, making it one of the company’s critical ‘moats’.

The WSJ, in a piece by Christopher Mims, explains the current state of play for Nvidia in “Why Every Big Tech Company Has Failed to Dethrone Nvidia as King of AI”. It calls Nvidia’s software ‘moat’ a ‘walled garden’, but a rose by any other name is still a rose:

“Nvidia, like Apple, shows that if you want to become a giant, you’ve got to be as good at software as you are at hardware.”

“Nvidia is famous for building AI chips, but its most important construction is a business bulwark that keeps customers in and competitors out. This barrier is made as much of software as it is of silicon.”

“Over the past two decades, Nvidia has created what is known in tech as a “walled garden,” not unlike the one created by Apple. While Apple’s ecosystem of software and services is aimed at consumers, Nvidia’s focus has long been the developers who build artificial-intelligence systems and other software with its chips.”

“Nvidia’s walled garden explains why, despite competition from other chip makers and even tech giants like Google and Amazon, Nvidia is highly unlikely to lose meaningful AI market share in the next few years.”

“It also explains why, in the longer term, the battle over territory Nvidia now dominates is likely to focus on the company’s coding prowess, not just its circuitry design—and why its rivals are racing to develop software that can circumvent Nvidia’s protective wall.”

And then it goes into Nvidia’s software ‘moat’:

“The key to understanding Nvidia’s walled garden is a software platform called CUDA. When it launched in 2007, this platform was a solution to a problem no one had yet: how to run non-graphics software, such as encryption algorithms and cryptocurrency mining, using Nvidia’s specialized chips, which were designed for labor-intensive applications like 3-D graphics and videogames.”

“CUDA enabled all kinds of other computing on those chips, known as graphics-processing units, or GPUs. Among the applications CUDA let Nvidia’s chips run was AI software, whose booming growth in recent years has made Nvidia one of the most valuable companies in the world.”

The WSJ piece then goes into the software ‘moat’ BEYOND CUDA, namely the hundreds of open-source software ‘kernels’ across dozens of vertical applications, from healthcare to manufacturing to robotics and more:

“Also, and this is key, CUDA was just the beginning. Year after year, Nvidia responded to the needs of software developers by pumping out specialized libraries of code, allowing a huge array of tasks to be performed on its GPUs at speeds that were impossible with conventional, general-purpose processors like those made by Intel and AMD.”

It’s geeky stuff, as in the chart below, but it goes a long way toward explaining Nvidia’s software ‘moat’. Or, as I like to think of it, Nvidia is fast becoming the OPERATING SYSTEM (aka OS) of the AI industry. It provides the ‘gunky stuff’ between the hardware and the software from a wide array of third parties across so many industries. It is the MS-DOS of the AI age, in its early days:

“The importance of Nvidia’s software platforms explains why for years Nvidia has had more software engineers than hardware engineers on its staff. Nvidia Chief Executive Jensen Huang recently called his company’s emphasis on the combination of hardware and software “full-stack computing,” which means that Nvidia makes everything from the chips to the software for building AI.”

“Every time a rival announces AI chips meant to compete with Nvidia’s, it is up against systems that Nvidia’s customers have been using for more than 15 years to write mountains of code. That software can be difficult to shift to a competitor’s system.”

And here are the dimensions of that software ‘moat’, the truly impressive numbers behind its AI ‘accelerated computing’ OS:

“At its June shareholders meeting, Nvidia announced that CUDA now includes more than 300 code libraries and 600 AI models, and supports 3,700 GPU-accelerated applications used by more than five million developers at roughly 40,000 companies.”

The piece then goes into the breadth and depth of competing efforts by big and small tech, hardware, and software companies that are rushing to develop mechanisms to penetrate Nvidia’s emerging AI Operating System. The piece is worth reading in its entirety for that context.

But as I’ve articulated in prior posts, Nvidia’s Jensen Huang has been playing the ‘long game’ in AI for over a decade now. He called ‘AI eating software eating the world’ before eight Google engineers published their seminal ‘Attention Is All You Need’ AI paper in 2017. That paper got the whole LLM/Generative AI ball rolling, and gave OpenAI the ‘T’ for ‘Transformer’ in its pioneering ‘GPT’ models.

That work has culminated, to date, in GPT-4 Omni, OpenAI’s current leading AI model, and is leading the way to the highly anticipated GPT-5, potentially with ‘Strawberry’ flavoring.

And from the beginning, Nvidia has been playing a concurrent game beyond ‘chips’ to empower this AI ‘Transformer’ software revolution, with OpenAI, Microsoft, Google and many others.

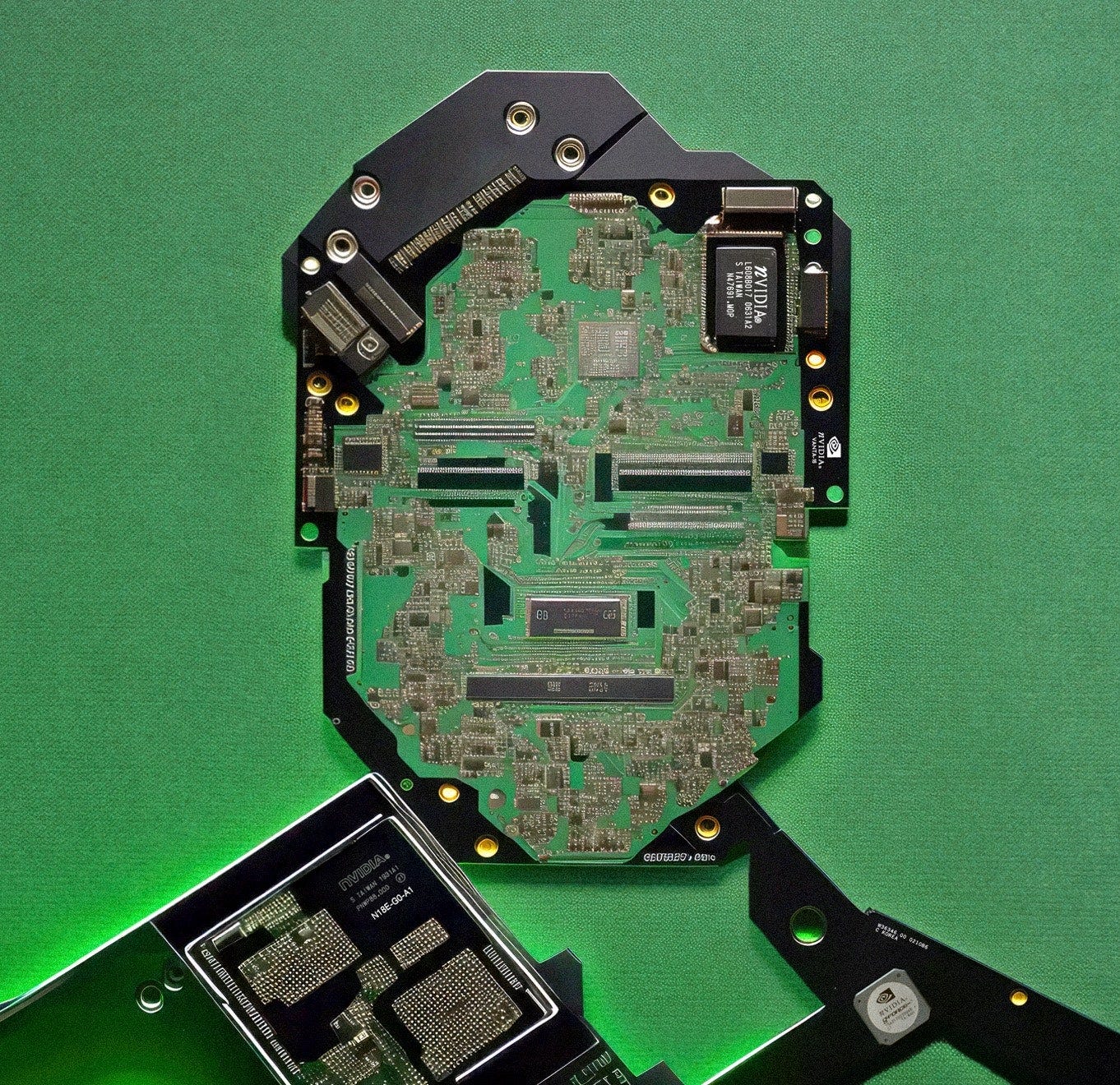

I’ve written about its AI ‘Accelerated Computing’ roadmap, which accelerates computing beyond Moore’s Law. I have also discussed its detailed product outlines, its ‘AI Inference game plan’, and the ‘AI Factories’ to come that truly take AI data centers to the next level in AI Compute. So yes, Nvidia is a true AI hardware AND software juggernaut, despite the recent delay to its next-gen Blackwell chips.

And coming back to the opening question, ‘Why isn’t Nvidia just a chip company?’, I’d add that, other than its gaming GPU chips, Nvidia today doesn’t sell its AI GPUs by the piece. Customers buy them as AI data center ‘superclusters’, in batches of tens of thousands, hundreds of thousands, and soon millions of AI GPUs. These are completely connected by bespoke and increasingly industry-standard ‘cabling’ that by itself is worth billions in additional revenues.

Nvidia sells entire systems to fill AI data centers, far bigger than anything even IBM could dream about in its heyday. And increasingly, Nvidia ‘rents’ its systems, both directly and indirectly, via its AI cloud data center partners like Amazon AWS, Microsoft Azure, Google Cloud, Oracle Cloud, CoreWeave and many, many others. It does so under AI data center brand names like DGX (see above), and soon NIMs (Nvidia Inference Microservices), NeMo, and other products, with options of air-cooled or liquid-cooled. That’s extra.

And with the interconnecting open source software, they have a moat to beat most moats. Nvidia is far from being ‘just a chip’ company. They’re building vertical AI data centers for which they can provide all the parts, and then populating their cloud data center customers’ facilities around the world. Their DGX data centers are an increasingly preferred alternative in these customers’ data centers.

It’s an AI Operating System company. A ‘Wintel’ of the AI age, upside down, with the hardware empowering the software. All in one company, instead of the two in the PC wave, Microsoft and Intel.

Only this time, the software is open source, and increasingly the invisible key to the profit gusher that has already become visible. And likely becoming more sustainable as time goes on.

All while this AI Tech Wave is barely getting started.

I haven’t seen an operating system dynamic like this in any of the tech waves over my three-decade professional tech career.

For these reasons alone, Nvidia is also on my ‘Call your shot’ list in this AI Tech Wave, along with Google and Apple, which I’ve discussed as well. These are companies with nuanced business models, whose sum of the parts puts them in pole position in this AI Tech Wave for the long term.

That’s ‘the Bigger Picture’ this Sunday. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)
