AI: New Focus on 'Accelerated' Local AI Devices. RTZ #387

...Apple's new AI roadmap makes it 'Real' at the 'Edge'; Microsoft Windows as well, with Qualcomm 'Snapdragon X Elite' chip laptops/PCs

With Apple’s just-announced ‘Apple Intelligence’ AI strategy, the company made clear that many of its AI features will run only on Apple’s latest, top-of-the-line iPhone 15 Pro smartphones, and on Macs and iPads with Apple Silicon M1 chips or later. To get this wide array of AI features, users will have to upgrade their devices going forward.

Microsoft is concurrently benefiting from the same trend, with upcoming Windows PCs and laptops from a host of OEM vendors powered by Qualcomm’s Snapdragon X Elite chips. These are designed from the ground up to bring Apple Silicon-class performance, with GPU and neural processing unit (NPU) acceleration for AI/ML applications, to Windows machines. Microsoft expects to sell over 50 million of these units per year going forward. Qualcomm is poised to be the new ‘Intel Inside’ of these computers, only with Qualcomm instead of Intel (or AMD), for now.

That’s creating market anticipation of faster smartphone upgrade cycles, along with dismay in some quarters that older phones and computers won’t be able to do the cool new AI things.

Observers have greeted this with both disappointment and excitement: more AI features for smartphone and PC/laptop users mean devices will need to be upgraded more regularly, possibly even annually, to get the best AI performance in applications and services. This is something I’ve outlined as a key next step for Apple and others.

I’ve been talking about a ‘Small AI’ and Local/‘On-Prem’ phase in this AI Tech Wave for some time now. We started to see it early this year as hardware and software vendors began talking about ‘AI PCs’, culminating in Microsoft’s ‘Copilot+ PC’ strategy just a few weeks ago.

It’s clear that companies like Microsoft, Google, and now Apple, with meaningful operating system platforms in computers, smartphones, or both, are rapidly building LLM and Generative AI capabilities deep into their devices and operating systems, often with DOZENS of local and small language models (SLMs), both closed and open source.

And they’re reworking their semiconductor chipsets with AI GPUs, ‘Neural Processing’ units, larger amounts of high-speed memory, and other upgrades, to run AI training and inference cycles locally on the devices themselves whenever possible.

The advantages here, of course, are deeper access to the user’s most personal content and data with the highest levels of privacy, along with the lowest ‘latency’, meaning fewer long round trips to data and compute in the cloud for AI computations. Not to mention greater power efficiency locally, whether running on battery or plugged in.

But the biggest advantage, and challenge, of running AI locally, of course, is enhancing the privacy of users’ content, data, and most personal daily habits. As I’ve described recently, it’s as important to Scale Trust for mainstream users going forward as it is to ‘Scale AI’.

It’s illustrative to see how Apple plans to do all of the above by leveraging its unique ecosystem of multiple hardware platforms, operating systems, and a portfolio of applications and services, both its own and those built by third-party developers.

As the Verge explains in “Here’s how Apple’s AI model tries to keep your data private”:

“At WWDC on Monday, Apple revealed Apple Intelligence, a suite of features bringing generative AI tools like rewriting an email draft, summarizing notifications, and creating custom emoji to the iPhone, iPad, and Mac. Apple spent a significant portion of its keynote explaining how useful the tools will be — and an almost equal portion of time assuring customers how private the new AI system keeps your data.”

“That privacy is possible thanks to a twofold approach to generative AI that Apple started to explain in its keynote and offered more detail on in papers and presentations afterward. They show that Apple Intelligence is built with an on-device philosophy that can do the common AI tasks users want fast, like transcribing calls and organizing their schedules. However, Apple Intelligence can also reach out to cloud servers for more complex AI requests that include sending personal context data — and making sure that both deliver good results while keeping your data private is where Apple focused its efforts.”

“The big news is that Apple is using its own homemade AI models for Apple Intelligence. Apple notes that it doesn’t train its models with private data or user interactions, which is unique compared to other companies. Apple instead uses both licensed materials and publicly available online data that are scraped by the company’s Applebot web crawler. Publishers must opt out if they don’t want their data ingested by Apple, which sounds similar to policies from Google and OpenAI. Apple also says it omits feeding social security and credit card numbers that are floating online, and ignores ‘profanity and other low-quality content.’”

“A big selling point for Apple Intelligence is its deep integration into Apple’s operating systems and apps, as well as how the company optimizes its models for power efficiency and size to fit on iPhones. Keeping AI requests local is key to quelling many privacy concerns, but the tradeoff is using smaller and less capable models on-device.”

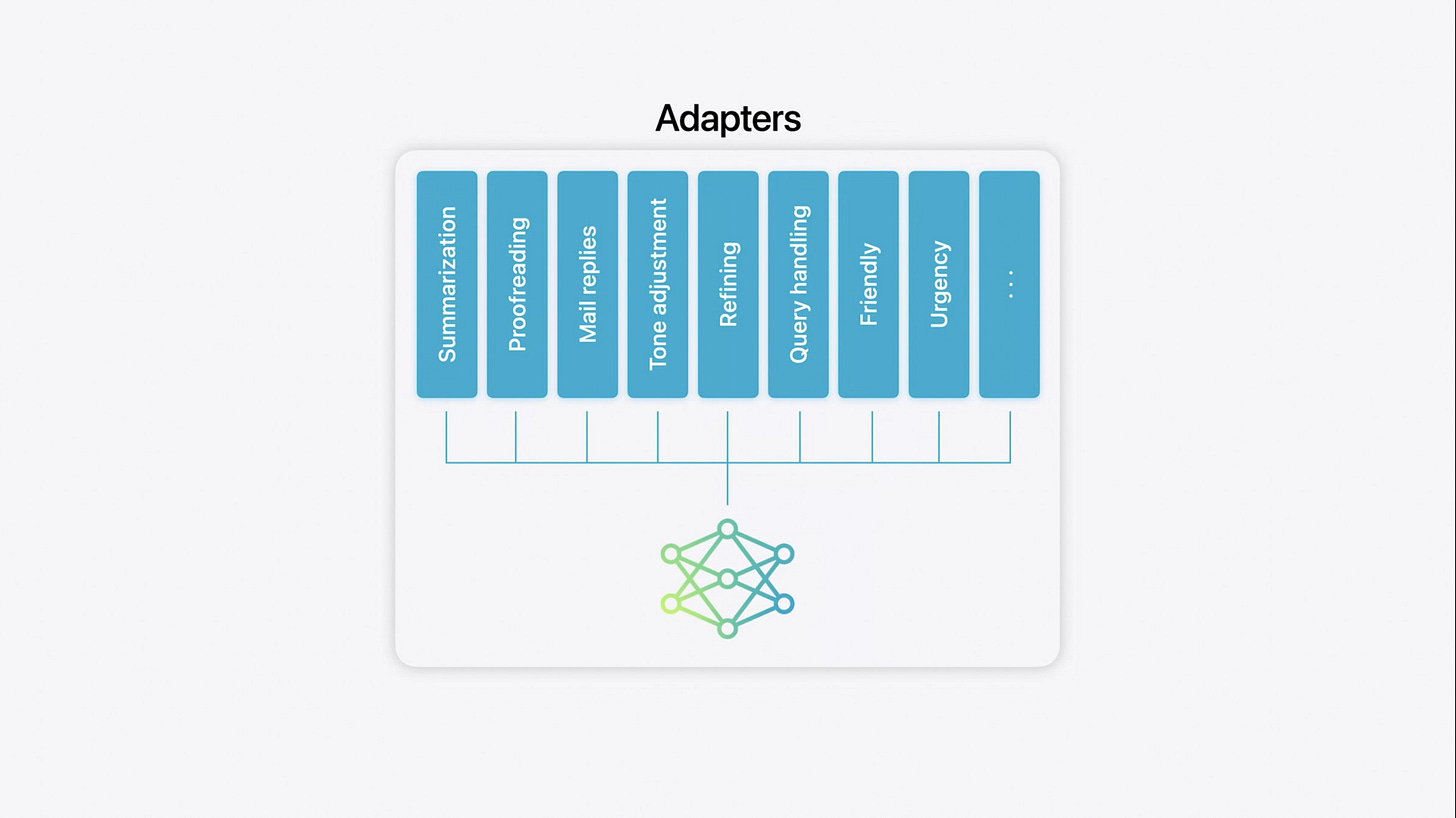

“To make those local models useful, Apple employs fine-tuning, which trains models to make them better at specific tasks like proofreading or summarizing text. The skills are put into the form of “adapters,” which can be laid onto the foundation model and swapped out for the task at hand, similar to applying power-up attributes for your character in a roleplaying game. Similarly, Apple’s diffusion model for Image Playground and Genmoji also uses adapters to get different art styles like illustration or animation (which makes people and pets look like cheap Pixar characters).”
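To make the adapter idea concrete, here is a minimal Python sketch of one frozen "foundation" layer with small, swappable, task-specific adapters. It models each adapter as a LoRA-style low-rank update (a pair of small matrices added on top of the frozen weights only while that task is active); that form is an assumption for illustration. The class `AdaptedLayer`, the task names, and every number are hypothetical, and a real model applies this across many layers, not one 2x2 matrix.

```python
# Hypothetical sketch: a frozen base layer plus swappable LoRA-style adapters.
# Names, shapes, and values are illustrative assumptions, not Apple's code.
from typing import Dict, List, Optional, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class AdaptedLayer:
    def __init__(self, base_weight: Matrix) -> None:
        self.base_weight = base_weight  # frozen: shared by every task
        self.adapters: Dict[str, Tuple[Matrix, Matrix]] = {}
        self.active: Optional[str] = None

    def register(self, task: str, a: Matrix, b: Matrix) -> None:
        # a: rank x d_in (down-projection), b: d_out x rank (up-projection)
        self.adapters[task] = (a, b)

    def activate(self, task: Optional[str]) -> None:
        # Switching tasks swaps only the small (a, b) pair, never the base weights.
        if task is not None and task not in self.adapters:
            raise KeyError(f"no adapter registered for {task!r}")
        self.active = task

    def forward(self, x: Vector) -> Vector:
        y = matvec(self.base_weight, x)
        if self.active is None:
            return y  # plain foundation-model behavior
        a, b = self.adapters[self.active]
        delta = matvec(b, matvec(a, x))  # low-rank correction b @ (a @ x)
        return [yi + di for yi, di in zip(y, delta)]


# Usage: one base layer, two hypothetical rank-1 task adapters.
layer = AdaptedLayer([[1.0, 0.0], [0.0, 1.0]])
layer.register("summarize", a=[[1.0, 1.0]], b=[[0.5], [0.0]])
layer.register("proofread", a=[[1.0, -1.0]], b=[[0.0], [2.0]])

x = [2.0, 1.0]
layer.activate("summarize")
print(layer.forward(x))  # [3.5, 1.0]
layer.activate("proofread")
print(layer.forward(x))  # [2.0, 3.0]
layer.activate(None)
print(layer.forward(x))  # [2.0, 1.0]
```

The design point is that the base weights never change: moving from summarizing to proofreading swaps only a small pair of matrices, which is how many task-specific skills can share a single model sized to fit on a phone.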

That’s all done as locally as possible, going to the Cloud only when necessary:

“If a user request needs a more capable AI model, Apple sends the request to its Private Cloud Compute (PCC) servers. PCC runs on its own OS based on “iOS foundations,” and it has its own machine learning stack that powers Apple Intelligence. According to Apple, PCC has its own secure boot and Secure Enclave to hold encryption keys that only work with the requesting device, and Trusted Execution Monitor makes sure only signed and verified code runs.”

It’s a very differentiated strategy, balancing AI imperatives across local devices and Apple’s own cloud services, then extending to broader third-party LLM services like OpenAI’s ChatGPT (powered by GPT-4o, the ‘omni’ model) and potentially other AI services. All on an ‘Opt-in’ basis, every time.
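The local-first, cloud-when-necessary flow described above, with its per-request opt-in for third-party services, can be sketched as a simple routing function. This is a hypothetical illustration, not Apple’s actual logic: the complexity score, the `ON_DEVICE_LIMIT` threshold, the `needs_external_model` flag, and the route names are all invented for the example, and real systems make these decisions in far more nuanced ways.

```python
# Hypothetical sketch of local-first routing with per-request opt-in.
# Thresholds, fields, and route names are illustrative assumptions.
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Route(Enum):
    ON_DEVICE = "on_device"
    PRIVATE_CLOUD = "private_cloud"  # stands in for Apple's Private Cloud Compute
    THIRD_PARTY = "third_party"      # stands in for an external LLM such as ChatGPT
    DECLINED = "declined"            # user said no; request is not sent out


@dataclass
class Request:
    complexity: int             # hypothetical 0-10 estimate of task difficulty
    needs_external_model: bool  # hypothetical flag: wants a broad third-party LLM


ON_DEVICE_LIMIT = 4  # hypothetical cutoff for what the local model can handle


def route(req: Request, ask_user: Callable[[Request], bool]) -> Route:
    """Keep work local when possible; escalate only when the request needs it."""
    if req.needs_external_model:
        # Third-party services are used only after an explicit opt-in,
        # and the user is asked again for every such request.
        return Route.THIRD_PARTY if ask_user(req) else Route.DECLINED
    if req.complexity <= ON_DEVICE_LIMIT:
        return Route.ON_DEVICE
    return Route.PRIVATE_CLOUD


def always_yes(req: Request) -> bool:
    return True


def always_no(req: Request) -> bool:
    return False


print(route(Request(2, False), always_no).value)  # on_device
print(route(Request(8, False), always_no).value)  # private_cloud
print(route(Request(5, True), always_yes).value)  # third_party
print(route(Request(5, True), always_no).value)   # declined
```

Note the ordering choice in this sketch: a request meant for a third-party model never silently falls back to another service; if the user declines, it simply is not sent.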

But the key here is the performance boost provided by the local AI chip hardware on the devices.

Google and Microsoft of course are pursuing a similar strategy in their own ecosystems:

“Apple’s local and cloud split approach for Apple Intelligence isn’t totally novel. Google has a Gemini Nano model that can work locally on Android devices alongside its Pro and Flash models that process on the cloud. Meanwhile, Microsoft Copilot Plus PCs can process AI requests locally while the company continues to lean on its deal with OpenAI and also build its own in-house MAI-1 model. None of Apple’s rivals, however, have so thoroughly emphasized their privacy commitments in comparison.”

I hope the above begins to explain the bottom-up reasons for a possible global device upgrade cycle driven by mainstream AI deployment from tech companies large and small.

With the annual global smartphone market alone running at almost half a trillion dollars, this implies a market and upgrade cycle at least as important as AI Data Centers and Compute, which have gotten so much investment attention to date.

We are at the beginning of the beginning of this accelerated device upgrade cycle in this AI Tech Wave, and it has barely started at the local device level. Apple is now leading the way with its ‘Apple Intelligence’ strategy. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)