AI: Going small with Google Gemma

...they're in 'Small AI' too now

Regular readers know I’ve long highlighted that ‘Small AI’ will be as important as ‘Big AI’ for the AI Tech Wave, this year and beyond. I’ve also discussed how the biggest ‘Magnificent 7’ companies have been accelerating in this direction: Microsoft with Phi-2, Meta with the open-sourced Llama 2 running on local devices, Nvidia just days ago with Chat with RTX, and others.

Now Google is also rolling out small AI models, or ‘Small Language Models’ (SLMs), with Gemma. And they’re open models, joining Meta and others on that AI vector as well. Gemma adds to Google’s core efforts around Gemini, which I’ve also discussed at length, and marks Google going bigger in ‘Small AI’ too. As Axios summarizes:

“Google today released Gemma, a range of "lightweight" open AI models designed for text generation and other language tasks, Ryan reports.”

“Why it matters: Google is betting on the substantial market of developers who don't need or can't afford to use the biggest AI models like Gemini.”

“Details: Google is releasing models in two sizes and both can run on a laptop.”

“The models come with a "Responsible Generative AI Toolkit," which Google says will help developers build their own safety filters for Gemma models, and a "debugging tool" to help developers investigate Gemma's behavior and address potential issues.”

“Gemma is optimized for Nvidia GPUs, offering the ability to fine tune models locally.”

“Access to Gemma is free via Google's Kaggle platform for data scientists in an effort to encourage transparency about how the models are used and to offer "large scale community validation" of its safety efforts.”

“The big picture: Instead of chasing an excitement factor or a consumer market, Google is seeding an enterprise market — one that may end up paying big dollars to use Google Cloud, as developers invent new consumer applications to run on Gemma.”

“What they're saying: "Things that previously would have been the remit of extremely large models are now possible with state-of-the-art smaller models and this unlocks completely new ways of developing AI applications," says Tris Warkentin, a director at Google DeepMind.”

“Yes, but: Microsoft has also invested in the market for smaller models, via its Phi range.”

“Go deeper: How competition between big and small AI will shape the tech's future”

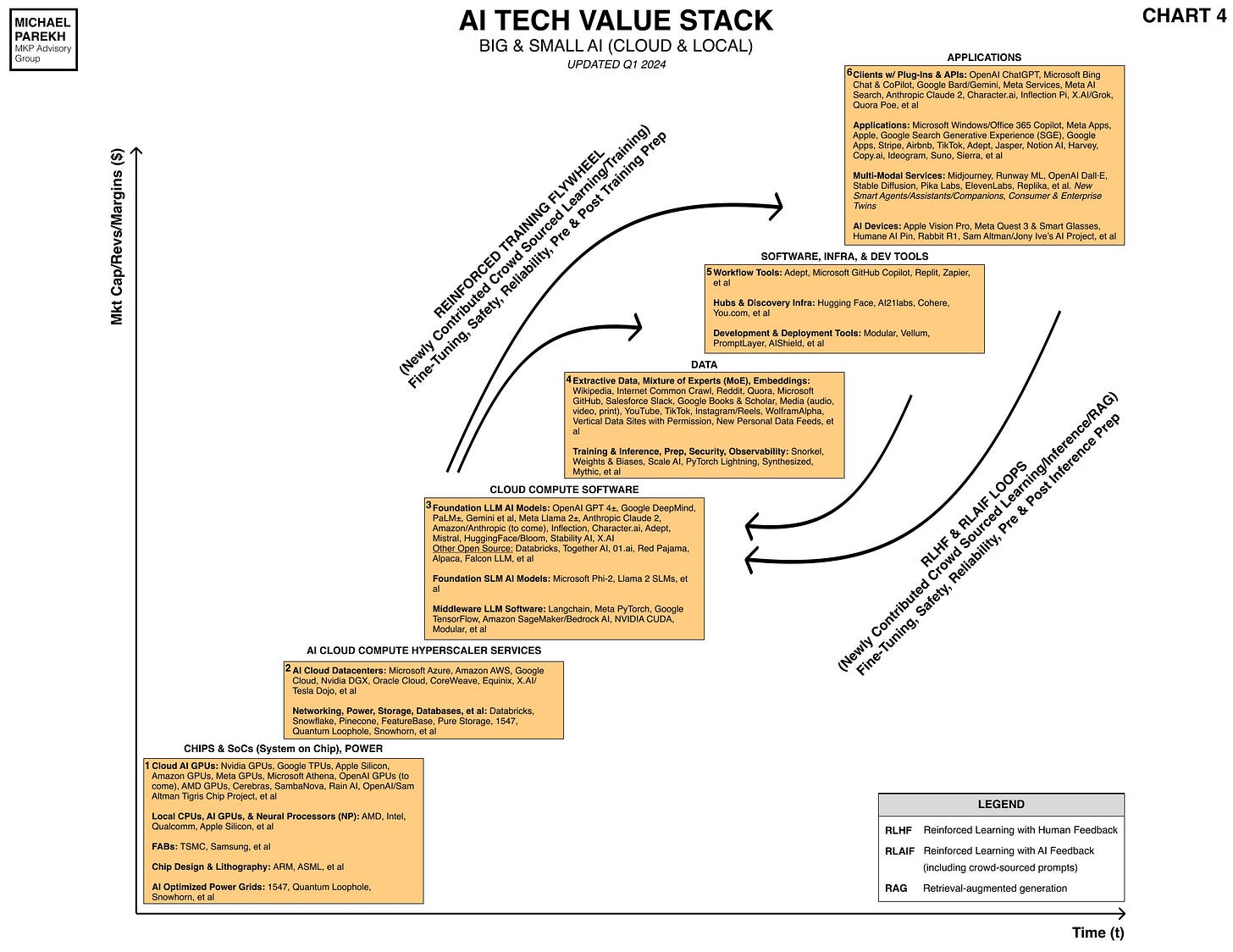

All this underlines how actively the underlying AI infrastructure is being built out around ‘Small AI’, in the foundational Boxes 1 through 4 of the AI Tech Stack above, with a range of components coming into focus from many key players. It’s only a matter of time before we see interesting AI products and services further up the stack, all the way to Box 6.

Expect more on this front from AI companies big and small all through this year. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)