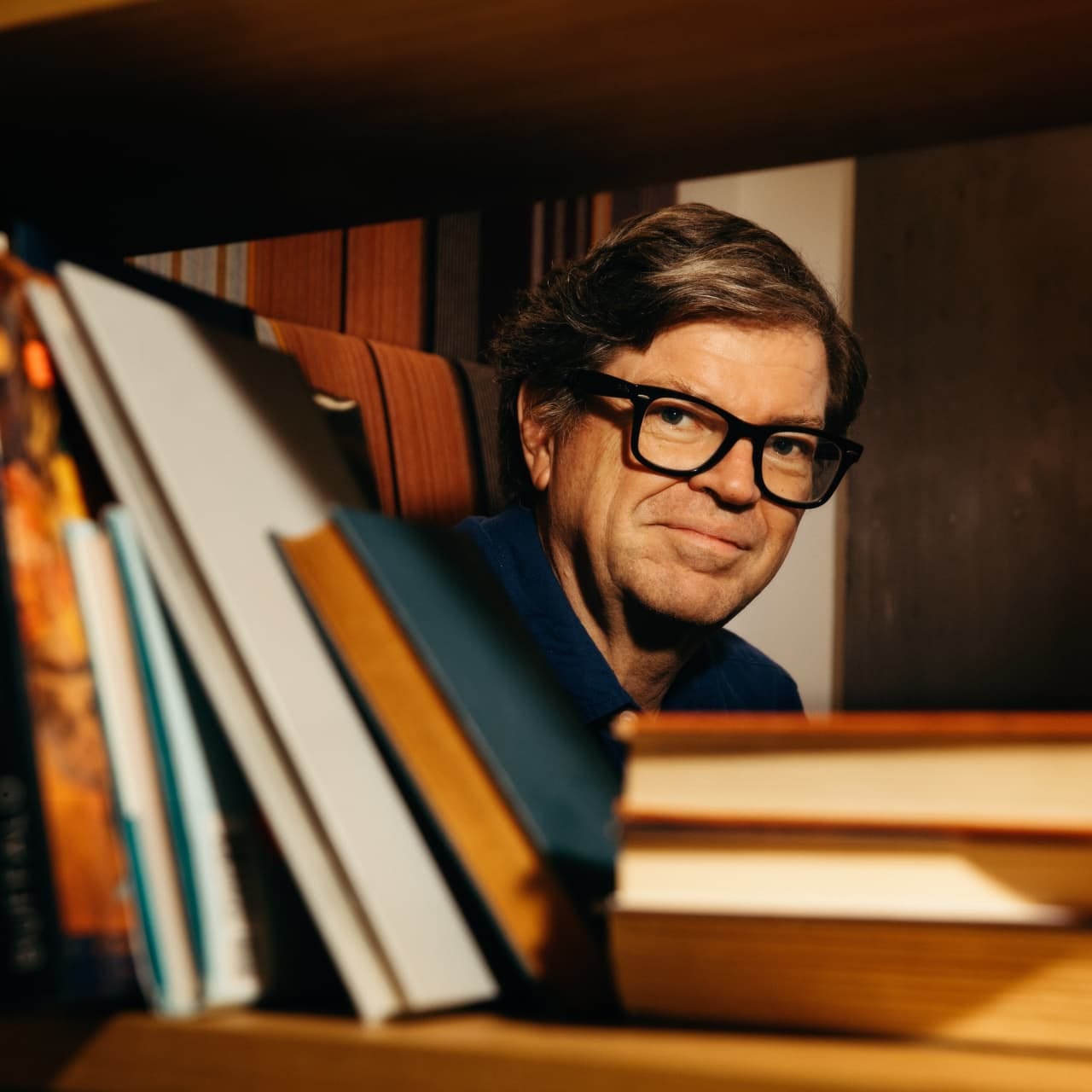

AI: Meta's Yann LeCun, a differentiated voice in the AI debates. RTZ #509

...a pragmatic and balanced 'Godfather of AI'

Is AI today poised to surpass humans, or is it just barely as smart as a cat?

This debate is raging fast and furious in top AI circles today.

The last few days have seen several pieces here on AI luminaries who've won Nobel Prizes, and on others who have penned notable essays on the road ahead to AGI, whatever that ends up being in this AI Tech Wave.

And there are far more areas of disagreement among them than one would expect of colleagues who have worked together for decades.

“From left to right: Yann LeCun; Geoffrey Hinton; Yoshua Bengio”.

We’ve discussed one of the three ‘AI Godfathers’, Geoffrey Hinton, who shared the Nobel Prize in Physics, and Sir Demis Hassabis, head of Google DeepMind and Google’s AI efforts, who just shared the Nobel Prize in Chemistry. And of course we’ve discussed the essays on the AI road ahead written by OpenAI founder/CEO Sam Altman and Anthropic founder/CEO Dario Amodei.

But no discussion of the AI luminaries who matter, especially at the intersection of AI research and AI commercialization, would be complete without Yann LeCun, chief AI scientist at Meta and long-time AI/deep learning professor at NYU.

Particularly because I’ve followed him for a long time, and many of his views on AI resonate with mine, especially on premature fears of AI. I also like how the WSJ introduces him in its profile this weekend, provocatively titled “This AI Pioneer Thinks AI Is Dumber Than a Cat”:

“Yann LeCun, an NYU professor and senior researcher at Meta Platforms, says warnings about the technology’s existential peril are ‘complete B.S.’”

“Yann LeCun helped give birth to today’s artificial-intelligence boom. But he thinks many experts are exaggerating its power and peril, and he wants people to know it.”

“While a chorus of prominent technologists tell us that we are close to having computers that surpass human intelligence—and may even supplant it—LeCun has aggressively carved out a place as the AI boom’s best-credentialed skeptic.”

“On social media, in speeches and at debates, the college professor and Meta Platforms AI guru has sparred with the boosters and Cassandras who talk up generative AI’s superhuman potential, from Elon Musk to two of LeCun’s fellow pioneers, who share with him the unofficial title of ‘godfather’ of the field. They include Geoffrey Hinton, a friend of nearly 40 years who on Tuesday was awarded a Nobel Prize in physics, and who has warned repeatedly about AI’s existential threats.”

“LeCun thinks that today’s AI models, while useful, are far from rivaling the intelligence of our pets, let alone us. When I ask whether we should be afraid that AIs will soon grow so powerful that they pose a hazard to us, he quips: ‘You’re going to have to pardon my French, but that’s complete B.S.’”

The whole piece goes into much more detail on his short- and long-term views on AI, and I’d recommend reading it in full.

The piece also does a good job of framing his relationships with the other AI luminaries mentioned above:

“In 2019, LeCun won the A.M. Turing Award, the highest prize in computer science, along with Hinton and Yoshua Bengio. The award, which led to the trio being dubbed AI godfathers, honored them for work foundational to neural networks, the multilayered systems that underlie many of today’s most powerful AI systems, from OpenAI’s chatbots to self-driving cars.”

“Today, LeCun continues to produce papers at NYU along with his Ph.D. students, while at Meta he oversees one of the best-funded AI research organizations in the world, as chief AI scientist at Meta. He meets and chats often over WhatsApp with Chief Executive Mark Zuckerberg, who is positioning Meta as the AI boom’s big disruptive force against other tech heavyweights from Apple to OpenAI.”

And given the ongoing high-level debates over the ‘existential fears’ of AI and the ‘big risks’ to AI safety, it’s refreshing to see Yann address them with candor grounded in deep knowledge of the field, especially relative to his peers:

“LeCun jousts with rivals and friends alike. He got into a nasty argument with Musk on X this spring over the nature of scientific research, after the billionaire posted in promotion of his own artificial-intelligence firm.”

“LeCun also has publicly disagreed with Hinton and Bengio over their repeated warnings that AI is a danger to humanity.”

“LeCun, in glasses, in 2019 shared the highest prize in computer science with Yoshua Bengio, far left, and Geoffrey Hinton, standing, who went on to win a Nobel Prize in physics this week”

“Bengio says he agrees with LeCun on many topics, but they diverge over whether companies can be trusted with making sure that future superhuman AIs aren’t either used maliciously by humans, or develop malicious intent of their own.”

It’s also refreshing to see his thoughts on the timing of AGI:

“He is convinced that today’s AIs aren’t, in any meaningful sense, intelligent—and that many others in the field, especially at AI startups, are ready to extrapolate its recent development in ways that he finds ridiculous.”

“If LeCun’s views are right, it spells trouble for some of today’s hottest startups, not to mention the tech giants pouring tens of billions of dollars into AI. Many of them are banking on the idea that today’s large language model-based AIs, like those from OpenAI, are on the near-term path to creating so-called “artificial general intelligence,” or AGI, that broadly exceeds human-level intelligence.”

“OpenAI’s Sam Altman last month said we could have AGI within “a few thousand days.” Elon Musk has said it could happen by 2026.”

“LeCun says such talk is likely premature. When a departing OpenAI researcher in May talked up the need to learn how to control ultra-intelligent AI, LeCun pounced. “It seems to me that before ‘urgently figuring out how to control AI systems much smarter than us’ we need to have the beginning of a hint of a design for a system smarter than a house cat,” he replied on X.”

“He likes the cat metaphor. Felines, after all, have a mental model of the physical world, persistent memory, some reasoning ability and a capacity for planning, he says. None of these qualities are present in today’s “frontier” AIs, including those made by Meta itself.”

Kudos to Meta founder/CEO Mark Zuckerberg for supporting his AI chief on all these perspectives, balancing near-term pragmatism on AI with the long-term AI opportunities, across both open and closed AI as the lines between them blur.

In particular, I like how Yann summarizes the spectrum of AI realities in the piece, especially the importance of other types of software beyond LLMs in creating ‘hybrid’ solutions, a topic I’ve discussed at length:

“The large language models, or LLMs, used for ChatGPT and other bots might someday have only a small role in systems with common sense and humanlike abilities, built using an array of other techniques and algorithms.”

“Today’s models are really just predicting the next word in a text, he says. But they’re so good at this that they fool us. And because of their enormous memory capacity, they can seem to be reasoning, when in fact they’re merely regurgitating information they’ve already been trained on.”

“We are used to the idea that people or entities that can express themselves, or manipulate language, are smart—but that’s not true,” says LeCun. “You can manipulate language and not be smart, and that’s basically what LLMs are demonstrating.”

He emphasizes the importance of not anthropomorphizing AIs prematurely, a point I agree with wholeheartedly.

Overall, Yann LeCun is an important voice in this AI Tech Wave journey. And it’s encouraging to see him in the AI wheelhouse of both Meta and the AI industry. Cats’ feelings notwithstanding. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)