AI: AI industry's roots in academic politics. RTZ #506

...important source of friction at leading AI companies

There is an old saying that “The politics of the university are so intense because the stakes are so low”. It’s relevant to discuss in these early days of the AI Tech Wave, particularly because the stakes may be getting higher.

It comes to mind today because Geoffrey Hinton, one of the winners of the Nobel Prize in Physics for AI work that I discussed yesterday, went on to criticize Sam Altman, the co-founder/CEO of OpenAI. Hinton, nicknamed the ‘Godfather of AI’ by the media, is a long-time deep learning/machine learning/AI researcher, professor, ex-Googler, ‘AI Doomer’, and now AI Ambassador at large. He had this to say after his award, as reported by The Information:

“Hinton spent his victory lap interviews talking about the existential risks posed by AI, saying that governments should force companies such as OpenAI to prioritize safety measures and not “just put safety research on the back burner,” as some ex-employees have alleged (and current employees deny).”

“Hinton also took a swipe at OpenAI’s CEO, saying, “I’m particularly proud of the fact that one of my students fired Sam Altman.”

“That student is OpenAI co-founder Ilya Sutskever, who previously studied under Hinton (and Altman) and last year led a boardroom coup against Altman, which lasted for only a few days. Sutskever is now busy running his own company and is busy trying to hire OpenAI researchers.”

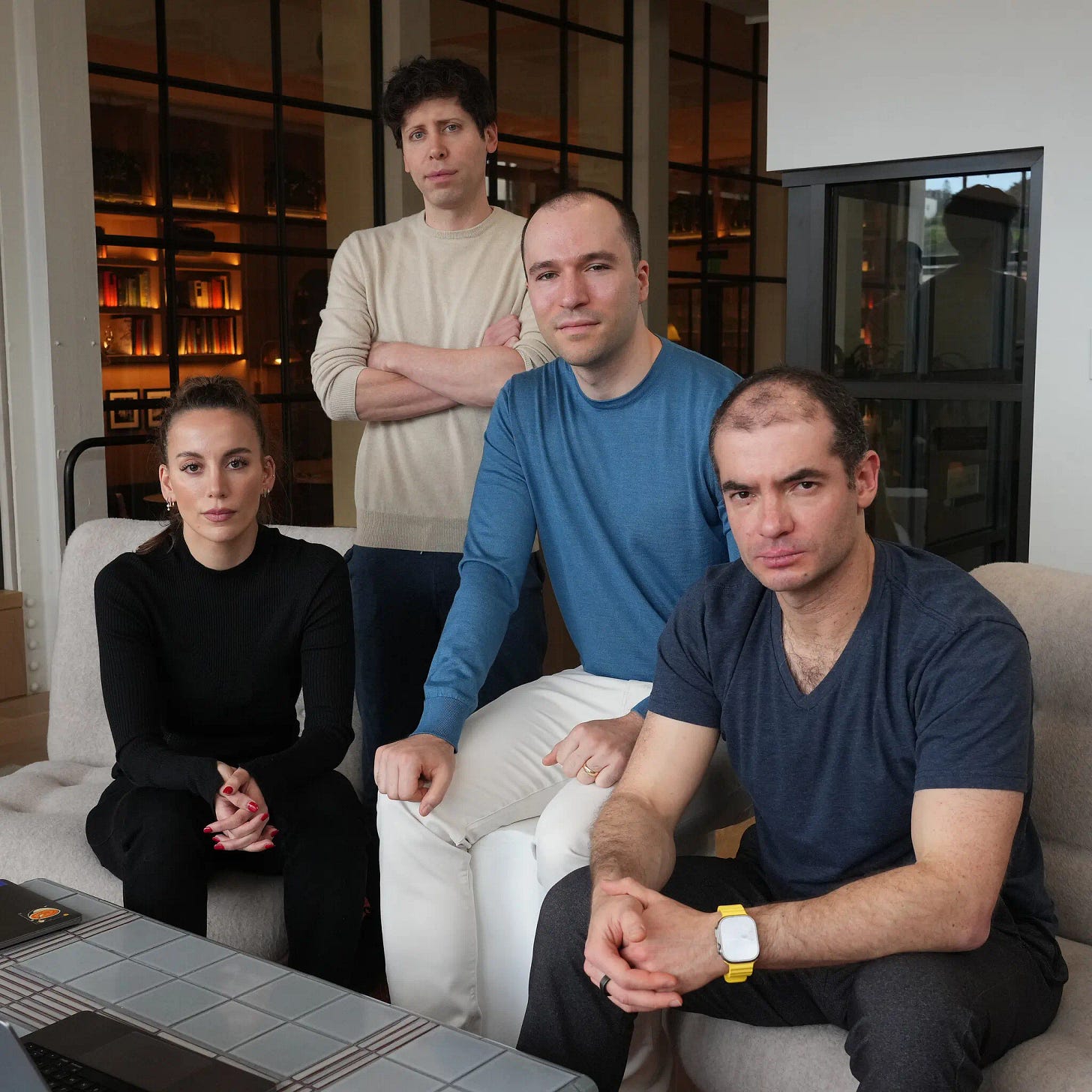

He’s praising Ilya of course (on the right above), whom I’ve written about extensively in these pages, most recently for launching ‘Safe Superintelligence’ (SSI) with over a billion dollars in fresh capital, to go up against his former company, OpenAI.

These academic politics are relevant in this AI Tech Wave because we’re seeing a faster transition from academic research in a field (AI in this case) to commercially engineered and designed products and services than in any other tech wave in history.

As a result, most founders and leaders of AI startups, small and large, will have to transition their politics from the academic to the commercial domain. The former comes in the form of the ‘shade’ above, delivered privately and/or publicly. The latter comes from vigorous competition in the markets, to scale the companies’ products and services bigger and faster than their peers.

For investors, the latter is preferable to the former.

So as AI transitions from ‘Science Projects’ to commercial AI offerings at Scale, investors have to watch this ‘emotional intelligence’ transition as well.

After all, most of the founders of Anthropic, one of the top four US LLM AI companies along with OpenAI, Google and Meta, are former researchers and executives of OpenAI. That flow continues to this day. In some cases these two companies are two emotional sides of the same AI coin, with ‘AI Safety’ as the main external differentiation device for one against the other.

The industry is seeing this shift from the academic to the commercial, and these transitions of emotional politics are but one more variable to monitor for investors both private and public. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)
