One of the most memorable movie lines comes from the 1995 film Apollo 13, in which Ed Harris, playing iconic NASA Flight Director Gene Kranz, says, ‘Let’s Stay Cool, People’.

Turns out, it applies critically to AI infrastructure too.

On Sunday I discussed Nvidia’s formidable AI hardware and software ‘Accelerated Computing’ platform, which is more than the ‘sum of its parts’ in this AI Tech Wave. AI chips are, of course, a supply-constrained and critical input for AI as it Scales in Compute AND Trust in the coming years.

But as I’ve discussed, so are Power, Talent and Data, as AI moves toward exponentially greater computing capabilities. All of these need to be optimized together for the Training, Inference, and ‘Reinforcement Learning Loops’ driving the ‘Matrix Math’ in LLM and SLM AIs (Large and Small Language Models).

A key factor there, of course, is the power needed to cool the increasingly powerful superclusters of AI computers in these AI-optimized data centers. The race is on to improve cooling technologies so they consume less power AND can cool ever more powerful AI Compute. Nvidia, of course, has the biggest stake in this race as the largest provider of AI GPU Cloud Compute Infrastructure.

So it’s important to understand this AI cooling input in more detail as we assess the capex-vs-returns debate around this AI Tech Wave investment boom.

As the WSJ outlines in detail, “Novel Ideas to Cool Data Centers: Liquid in Pipes or a Dunking Bath”:

“One of the latest innovations at artificial-intelligence chip maker Nvidia has nothing to do with bits and bytes. It involves liquid.”

“Nvidia’s coming GB200 server racks, which contain its next-generation Blackwell chips, will mainly be cooled with liquid circulated in tubes snaking through the hardware rather than by air. An Nvidia spokesman said the company was also working with suppliers on additional cooling technologies, including dunking entire drawer-sized computers in a nonconductive liquid that absorbs and dissipates heat.”

“Cooling is suddenly a hot business as engineers try to tame one of the world’s biggest electricity hogs. Global data centers—the big computer farms that handle AI calculations—are expected to gobble up 8% of total U.S. power demand by 2030, compared with about 3% currently, according to Goldman Sachs research.”

Big tech companies like Amazon, Microsoft, Google and others are rushing to lock up output from entire new power generation plants for their soon-to-be Gigawatt-powered AI data centers, as Meta founder/CEO Mark Zuckerberg recently discussed.

“The Nvidia GB200 series is likely to be sought-after as technology companies race to deploy AI in content creation, autonomous driving and more.”

That Blackwell generation of chips was, of course, delayed by a few months into early next year, as I outlined in an earlier post. As the WSJ explains:

“Nvidia’s stock took a hit early this month as investors reacted to potential delays in its Blackwell-powered products. Although the company said it was on track to ramp up production in the second half of this year, Chief Executive Charles Liang of Super Micro Computer, which makes server racks with Nvidia chips, said the timeline had been “pushed out a little bit.” Liang said he anticipated significant volumes would be ready in the first quarter of next year.”

Liquids and electronics make awkward bedfellows, as the WSJ continues to explain:

“Data centers, housing as many as tens of thousands of servers, tend to be cacophonous and chilly places. At older facilities that use fans and air conditioning, cooling accounts for up to 40% of power consumption, a proportion that could be reduced to 10% or less with more advanced technology, according to Shaolei Ren, associate professor of electrical and computer engineering at the University of California, Riverside.”

“Liquid cooling has become a common feature of high-end gaming computers, but on a larger scale has traditionally been limited to the hardest challenges, such as nuclear power plants. The upfront cost of circulating liquid through delicate electronics can be many times the cost of installing AC and fans. Some parts are in short supply.”

“Leakage is the biggest risk.”

“‘If a single drop of water falls onto a server, such as the million-dollar GB200, it could cause catastrophic damage,’ said Oliver Lien, general manager of Forcecon Technology, which works with semiconductor makers on cooling.”

“More than 95% of current data centers use air cooling because of its mature design and reliability, according to a recent Morgan Stanley report.”
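To put Professor Ren’s 40% vs 10% numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes, as a simplification, that the IT load stays the same and that everything other than cooling counts as IT load; the 60 MW figure and the function names are hypothetical, chosen only for illustration.

```python
def total_power(it_load_mw: float, cooling_share: float) -> float:
    """Total facility power if cooling is `cooling_share` of the total.

    Simplifying assumption: everything that is not cooling is IT load
    (real facilities also lose power to lighting, power conversion, etc.).
    """
    if not 0.0 <= cooling_share < 1.0:
        raise ValueError("cooling_share must be in [0, 1)")
    return it_load_mw / (1.0 - cooling_share)


def savings_fraction(old_share: float, new_share: float) -> float:
    """Fractional drop in total facility power for the same IT load."""
    it_load = 1.0  # any constant IT load works; it cancels out of the ratio
    return 1.0 - total_power(it_load, new_share) / total_power(it_load, old_share)


if __name__ == "__main__":
    it_mw = 60.0  # hypothetical IT load in megawatts
    print(f"Cooling at 40%: {total_power(it_mw, 0.40):.1f} MW total")
    print(f"Cooling at 10%: {total_power(it_mw, 0.10):.1f} MW total")
    print(f"Savings: {savings_fraction(0.40, 0.10):.0%}")
```

Under these assumptions, a 60 MW IT load drops from about 100 MW to about 67 MW of total draw, roughly a one-third saving. That is in the same range as the 30% to 40% reduction Supermicro cites for its liquid cooling systems.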

Supporting tech infrastructure companies are also racing into this opportunity:

“Super Micro Computer, commonly known as Supermicro, will use liquid cooling in about 30% of the racks it ships next year, said Liang. In June and July, the company delivered more than 1,000 liquid-cooled AI racks, representing more than 15% of new global data-center deployments, he said.”

“Nvidia both makes its own servers and supplies chips to other server makers that build devices for tech giants working on AI applications. Decisions on cooling tend to be made jointly by those companies.”

Below is a Supermicro liquid cooling tower. The company also makes server racks with Nvidia chips.

“Supermicro said its liquid cooling systems enabled data centers to reduce power consumption by 30% to 40%. Nvidia has said liquid-cooled data centers can pack twice as much computing power into the same space because the air-cooled chips require more room in a server.”

“If only air cooling is used, high-performance computers require server-room temperatures below 50 degrees Fahrenheit, said Lien of Forcecon Technology. Aside from the heavy electricity use, the fans produce dust that can hinder performance and 24-hour whirring that can annoy the neighbors.”

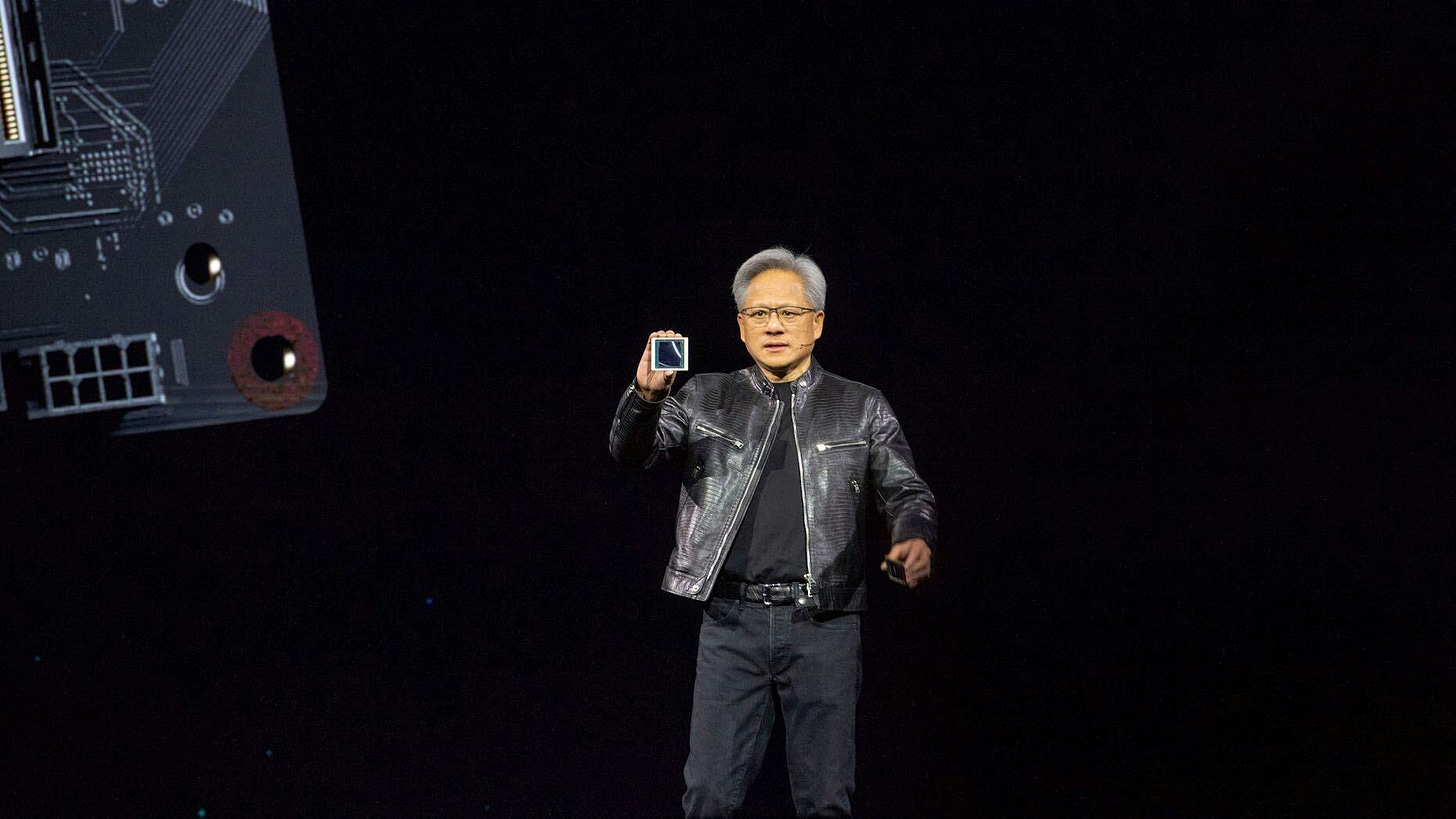

Here is Nvidia founder/CEO Jensen Huang with Supermicro CEO Charles Liang:

“‘Liquid cooling is definitely inevitable for higher-end AI applications from firms such as Nvidia, AMD or Google,’ Lien said. The liquid-cooled machines whisper instead of whirring and kick up virtually no dust.”

Liquid cooling, of course, is an ‘Extra’ cost, as I outlined in my Sunday piece on Nvidia. The WSJ gives some relative numbers:

“The liquid cooling systems for Nvidia’s GB200 high-end rack cost more than $80,000, about 15 to 20 times the cost of an air-cooling system for an existing rack with Nvidia’s H100 chips, according to Morgan Stanley estimates. It projected the market for those systems will more than double to $4.8 billion by 2027.”
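Here is a quick sketch of what those Morgan Stanley estimates imply, treating the ‘15 to 20 times’ ratio and the ‘more than double’ growth as exact multiples. That is an assumption, since the report’s underlying figures aren’t given here, and the function names are hypothetical.

```python
def implied_air_cooling_cost(liquid_cost: float, ratio: float) -> float:
    """Air-cooling cost implied if liquid cooling costs `ratio` times as much."""
    return liquid_cost / ratio


def implied_starting_market(target: float, growth_multiple: float) -> float:
    """Starting market size implied by growing `growth_multiple` times to `target`."""
    return target / growth_multiple


if __name__ == "__main__":
    liquid_rack_cost = 80_000  # "more than $80,000" per GB200 rack, so a lower bound
    for ratio in (15, 20):
        air = implied_air_cooling_cost(liquid_rack_cost, ratio)
        print(f"At {ratio}x: roughly ${air:,.0f} of air cooling per H100 rack")
    # "more than double to $4.8 billion" implies today's market is below $4.8B / 2
    print(f"Implied current market: under ${implied_starting_market(4.8, 2):.1f}B")
```

On those assumptions, air cooling for an existing H100 rack works out to roughly $4,000 to $5,300 (at least, since $80,000 is a floor), and today’s market for these liquid cooling systems comes in under about $2.4 billion.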

And of course, just as the AI chips themselves are made in Taiwan Semiconductor (TSM) fabs, many cooling system components and capabilities also come from Taiwan. That adds to the US/China Geopolitical ‘threading the needle’ challenges I’ve discussed previously.

Again, the WSJ:

“Executives said one part in tight supply is called universal quick disconnect, an item that prevents leaks when parts of the piping system are disconnected. That part is mostly made by American and European companies, but more than half of the global cooling system business is concentrated in Taiwan-based companies, according to Edward Kung, who leads Intel’s liquid cooling projects and is chairman of the Taiwan Thermal Management Association.”

“The Taiwanese companies are benefiting from their experience cooling gaming computers, much as Nvidia started as a maker of chips for games and moved into AI.”

And then, on the ‘cool tech’ innovation front (pun intended), the next-generation cooling solutions are counterintuitive:

“Many in the business think the next step could be total immersion in heat-absorbing fluid, although the technology faces skepticism because the fluid and custom tanks are costly and maintenance is messier.”

“Taiwanese companies including Cooler Master, a longtime Nvidia collaborator known to videogame enthusiasts for its high-end computer cooling hardware, are working on immersion technology for potential future Nvidia products, people familiar with those products said.”

“Last year, Nvidia Chief Executive Jensen Huang stopped by a trade-show display in which Taiwan’s Gigabyte Technology showed off its immersion cooling tank.”

“Good job,” Huang told people at the display. “This is the future.”

It reminds me of a famous scene from James Cameron’s 1989 sci-fi masterpiece ‘The Abyss’.

In it, the US government has ‘developed’ a technology that lets humans breathe while immersed in a special liquid. Here is the famous scene showing a rat breathing this new concoction.

It’s mind-blowing to think that in a few years, some of the most critical AI technology infrastructure that makes our world work may be entirely immersed in liquid, all to be more power efficient.

In any case, cooling next-generation AI data centers, which Nvidia’s Jensen Huang sees as a ‘trillion dollar’-plus opportunity over this decade, is another critical input to scaling AI in this AI Tech Wave. And the trend is already showing up in explosive growth for a new job category of ‘data technicians’ at data centers, a role that blurs traditional definitions of ‘blue and white collar’ work and pays six figures.

Of course, at this early stage, it’s important to keep in mind other AI technology innovations that may make today’s ‘brute-force’ Compute far more efficient. One example is the ‘1.5 bit AI Matrix Math’ developments I discussed a few weeks ago. As they’re perfected, developments like that may ease the power, and thus the cooling, imperatives.
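For readers curious why lower-bit ‘Matrix Math’ could ease power needs, here is a minimal sketch. It assumes the ‘1.5 bit’ work refers to ternary weights of -1, 0 or +1 (log2(3), about 1.58 bits each), the design used by research models such as BitNet b1.58. The quantization shown is a simplified ‘absmean’ rounding for illustration, not any particular library’s code, and the function names are hypothetical. The key idea: with ternary weights, each weight-times-input step becomes an add, a subtract, or a skip instead of a full multiplication.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def quantize_ternary(weights: Matrix) -> Tuple[List[List[int]], float]:
    """Map each weight to -1, 0 or +1 after dividing by the mean absolute weight.

    A simplified 'absmean' sketch of ternary (about 1.58-bit) weights;
    not any specific library's implementation.
    """
    magnitudes = [abs(w) for row in weights for w in row]
    scale = sum(magnitudes) / len(magnitudes) or 1.0  # avoid dividing by zero
    ternary = [[max(-1, min(1, round(w / scale))) for w in row] for row in weights]
    return ternary, scale


def ternary_matvec(ternary: List[List[int]], scale: float, x: List[float]) -> List[float]:
    """Matrix-vector product using only adds/subtracts per weight,
    plus one multiplication by `scale` per output row."""
    out = []
    for row in ternary:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi  # +1 weight: add the input
            elif w == -1:
                acc -= xi  # -1 weight: subtract the input
            # 0 weight: skip the input entirely
        out.append(acc * scale)
    return out


if __name__ == "__main__":
    w = [[0.9, -1.1, 0.05], [-0.4, 0.0, 1.2]]
    q, s = quantize_ternary(w)
    print(q)
    print(ternary_matvec(q, s, [1.0, 2.0, 3.0]))
```

Multiplications are generally the costlier operation in hardware, which is one reason schemes like this could cut energy per computation, and with it the heat that has to be removed.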

But in the meantime, we’ve got to make sure the Compute ‘Stays Cool’ and keeps ‘Breathing’. It’s about managing Nvidia’s Accelerated Computing AND the seemingly impossible ramps in Power consumption vs Power Production.

As Ed Harris’s Gene Kranz memorably says in Apollo 13, here too ‘Failure is not an Option’. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)