AI: Anthropic's new AI Models

...the new performance contender, with a keen focus on AI Safety

Anthropic, the other major foundation LLM AI “New Kid on the Block” after OpenAI and Google, rolled out its next-gen models, which go up against the top dogs (OpenAI’s GPT-4 and Google’s Gemini Ultra) on some key metrics. As Ben’s Bites pithily summarizes in “Anthropic drops a bomb—Claude 3 might be the new AI king.”:

“OpenAI’s GPT-4 is no longer the lone wolf in the “scary-good AI” valley. Anthropic is making bold claims with their latest release, Claude 3. The new family of language models is going for the jugular, beating the reigning champ like OpenAI's GPT-4 and taking on newcomers like Google's Gemini.”

“What’s going on here?”

“Anthropic's betting big that Claude 3 is THE next-gen AI for businesses. And based on their benchmarks, they might not be just blowing smoke.”

“What does that mean?”

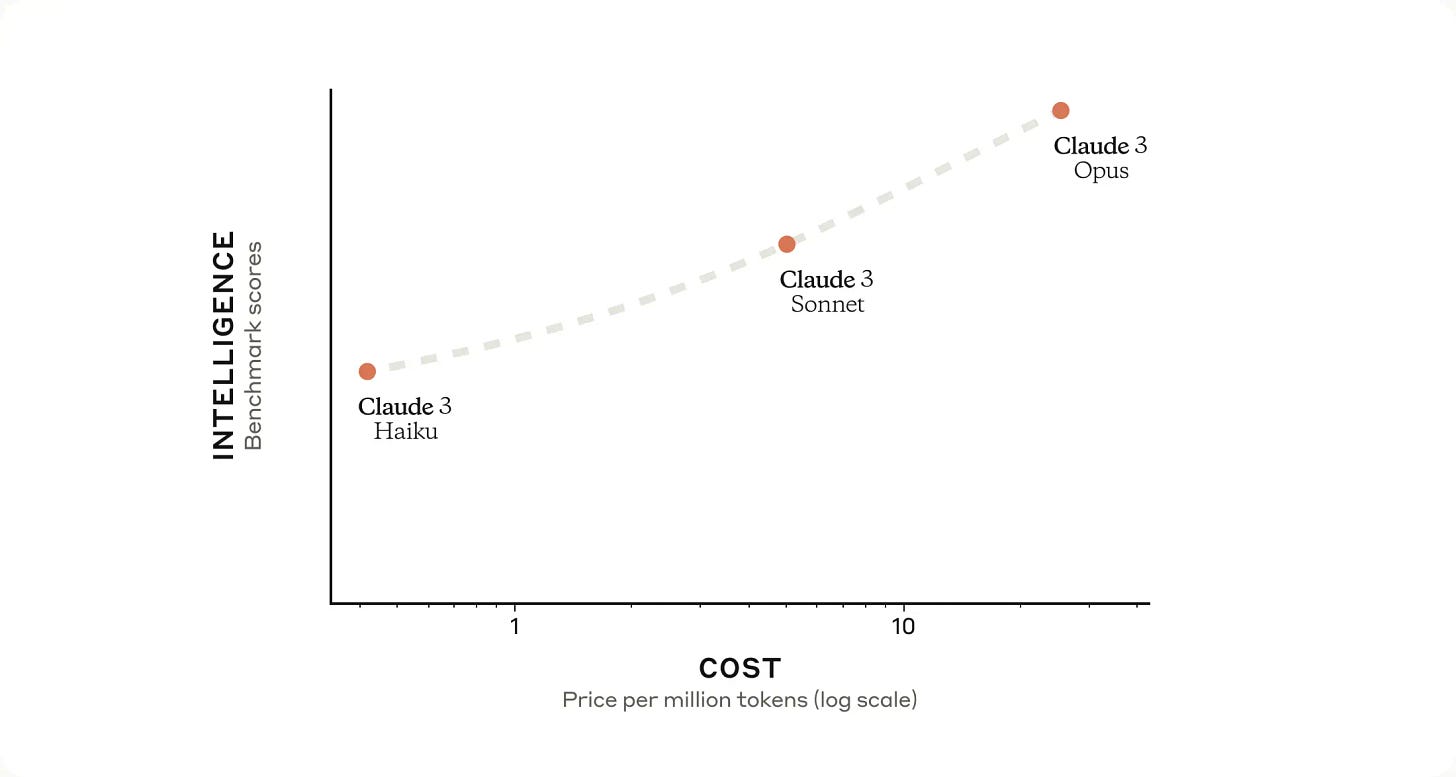

“Claude 3 isn't a one-size-fits-all. It comes in flavours (or should I say jingles): Opus, Sonnet, and Haiku. Opus is the monster truck, pricier but insanely powerful, Sonnet's your versatile workhorse and Haiku keeps things lean and mean for cost-sensitive tasks.”

“Anthropic claims Opus flat-out beats GPT-4 and Gemini 1.0 Ultra on everything from general knowledge to coding challenges. Sonnet trades punches with GPT-4, winning some, losing others. Though little caveat: These benchmarks might not factor in the latest updates like GPT-4 Turbo and Google’s unreleased Gemini 1.5 Pro. I’ve more on “performance” below.”

“These models are vision-ready, so you can send images into them to give more info and context. Also, remember, Anthropic's whole thing is about safer AI. Past models were good but could be overcautious. They claim they did some behavioural design with Claude 3 that nails the balance—less likely to choke on harmless requests without going rogue.”

“Claude 3 maintains Anthropic’s 200k context windows but claims near-perfect recall across that context length. Big baddie Opus performs best and can have a context window of up to 1M tokens but it’s behind private access. Well, that reminds me, Anthropic’s API is now generally available (they were pretty pesky about API access earlier). You can use Opus and Sonnet right now via the API, while Haiku needs some more work.”

The piece goes on to discuss pricing and availability, along with performance on other metrics. The generally positive take on Anthropic’s release was echoed by other outlets, including Bloomberg.

As CNBC reminds us in “Anthropic, backed by Amazon and Google, debuts its most powerful chatbot yet”:

“Anthropic on Monday debuted Claude 3, a chatbot and suite of AI models that it calls its fastest and most powerful yet.

“The company, founded by ex-OpenAI research executives, has backers including Google, Salesforce and Amazon, and closed five different funding deals over the past year, totaling about $7.3 billion.”

“The new chatbot has the ability to summarize up to about 150,000 words, or a lengthy book, compared to ChatGPT’s ability to summarize about 3,000. Anthropic is also allowing image and document uploads for the first time.”

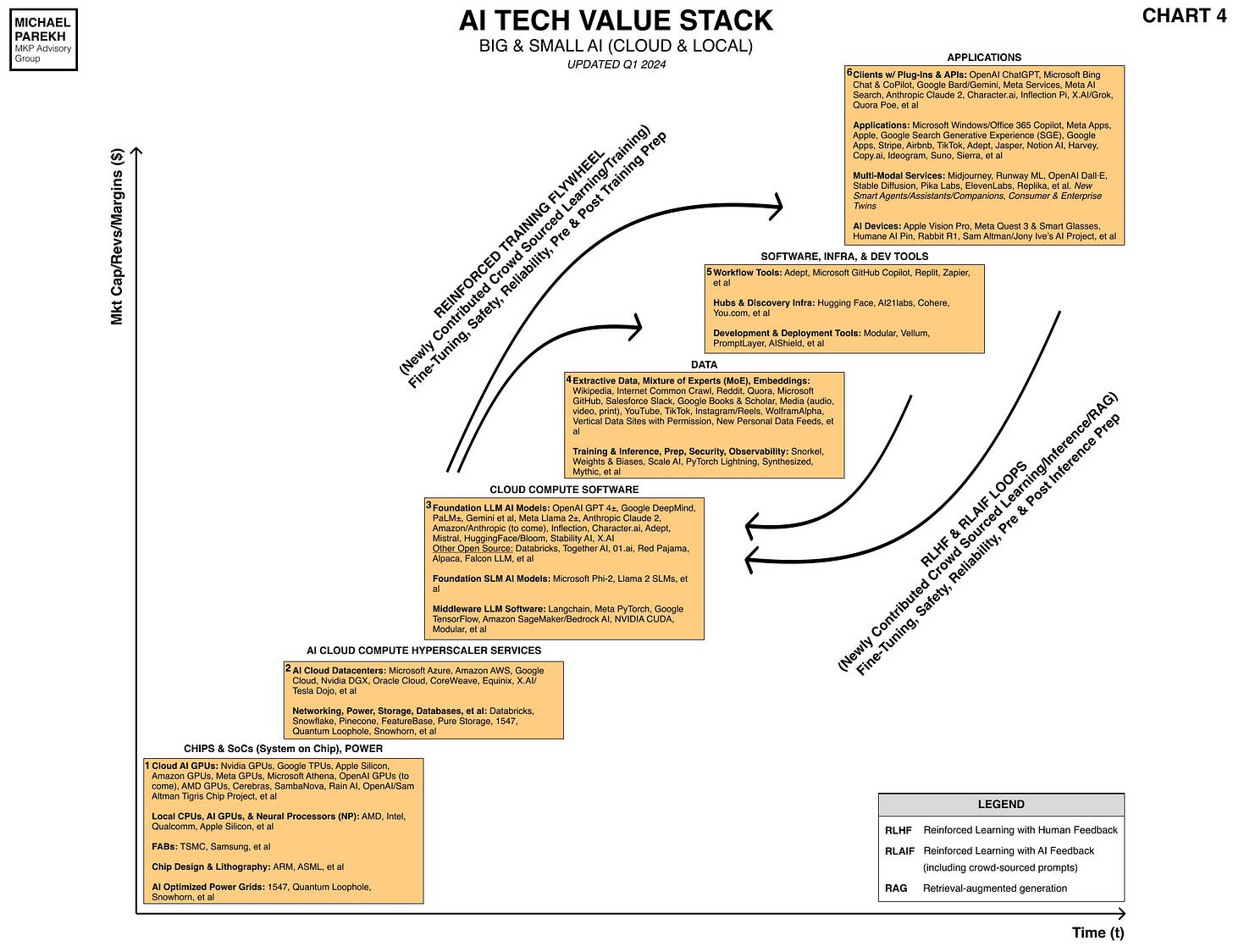

I’ve discussed the strategic significance of Anthropic being backed by both Amazon and Google in earlier pieces. That backing underscores the race among all the major LLM AI and AI Cloud data center and infrastructure providers in Boxes 3 through 5 of the AI Tech Wave chart. It also highlights the ongoing push by the top AI companies to pull out all the stops against OpenAI and Microsoft.

Remember that Anthropic’s founders, who came from OpenAI, have made an intense focus on AI Safety central to the company’s culture and DNA. As its Founder/CEO Dario Amodei tells CNBC:

““Of course no model is perfect, and I think that’s a very important thing to say upfront,” Amodei told CNBC. “We’ve tried very diligently to make these models the intersection of as capable and as safe as possible. Of course there are going to be places where the model still makes something up from time to time.””

The big players are performing what some AI professionals have derisively called a ‘Safety Lobotomy’ on their models. As noted by AI analyst John Nosta in “Breaking Brains: The Lobotomization of Large Language Models”:

“GPT Summary: The contemporary endeavor to control or restrict Large Language Models (LLMs) is likened to the tragic practice of lobotomies. This metaphor underscores a complex interplay between technology, ethics, philosophy, and psychology. The struggle to eliminate unwanted aspects of these models reflects a broader philosophical question about the balance between control and freedom, suppression and expression. Attempts to isolate or control specific functions may risk losing what drives creativity and understanding, a principle that holds true in both human cognition and artificial intelligence. This nuanced perspective suggests that embracing complexity rather than attempting to suppress it may lead to genuine innovation and insight.”

As Abacus AI CEO Bindu Reddy notes of Anthropic’s latest models vs. the competition:

“Sadly, Claude 3 is pretty censored as well...”

“For what it’s worth, censoring these models and safety lobotomizing them badly affects real-world performance!! Usually, the safety lobotomy is done in a manner not to affect benchmarks, but the model starts throwing a fit and refuses to answer complex real-world problems. I guess Anthropic will not change their "safety stance" anytime soon.”

The industry will have to find the right balance between competitive AI functionality and Safety, a key debate and worry across the AI industry. The leading companies may well be more constrained by their industry-leading profiles and roles than smaller participants focused on either open or closed LLM AI solutions. But for now, with Claude 3, New Kid on the Block Anthropic has its moment in the AI sun. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)