A ‘big thing’ that AI companies large and small are focusing on in 2024, in this AI Tech Wave, is ‘multimodal’ LLM AI. It’s a 50 cent word for AIs that can be interacted with via voice, images, videos, code and other ‘modalities’. OpenAI has this baked into its ChatGPT app, especially for the ‘Plus’ users paying $20/month. It’s also becoming the key feature for Google Gemini Advanced, released last week, and Microsoft’s Copilot AIs, revamped last week. All, of course, for an incremental $20/month.

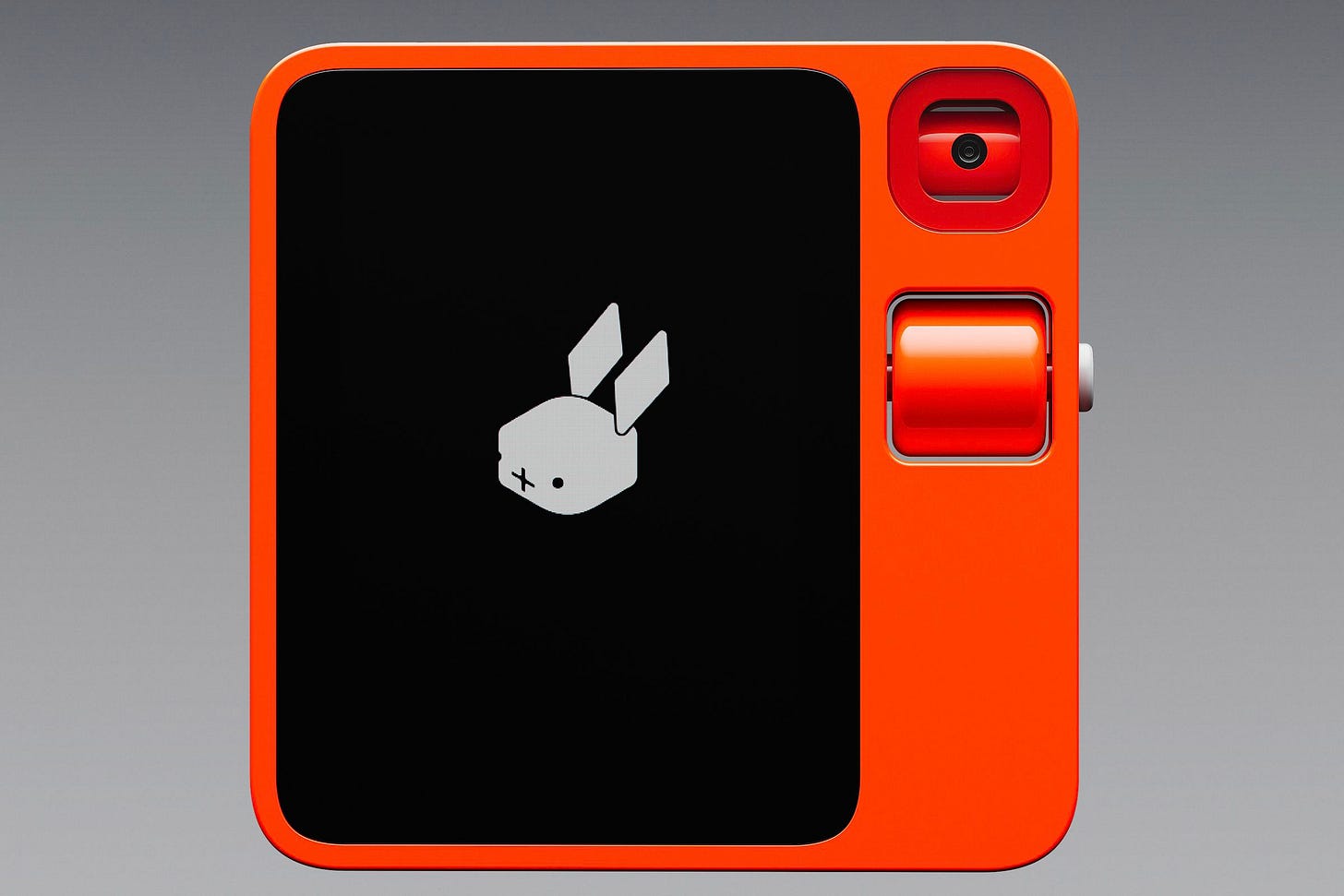

And of course it’s the key interaction feature and mechanism for most ‘AI Wearables’ rolling out this year, for devices ranging from Humane’s AI Pin to the Rabbit R1, which launched at CES in January.

Not to mention the long-rumored device being cooked up by OpenAI’s Sam Altman and former Apple uber-designer, Jony Ive.

Not to leave out a host of other startups and big tech incumbents, of course, like Apple with its Vision Pro ‘spatial computer’.

And then there’s Meta’s ‘Smart Glasses’ in partnership with Ray-Ban.

I’m discussing voice in particular as an AI modality because it’s a uniquely difficult interface mechanism for ‘probabilistic’ AI, vs the more ‘deterministic’ machine learning-based voice services that came before, like Apple Siri, Amazon Alexa/Echo, Google Nest et al. Those companies alone have collectively spent tens of billions developing those platforms over the last decade or two, and they’re all furiously revamping them with LLM AI.

And it’s going to be a long road to get it right, likely as long as it took to get the previous versions like Apple Siri, Google Nest, and especially Amazon Alexa/Echo to barely tell us the time, set timers, and play some music on demand.

All this comes to mind with Axios’ Ina Fried reviewing the latest update to Meta’s Ray-Ban smart glasses, and using them to interview none other than OpenAI’s Sam Altman. Here’s an excerpt from “Test-driving Meta's Ray-Bans — now with AI”:

“I used Meta's second-generation Ray-Ban smart glasses to broadcast my interview with Sam Altman at Davos live on Instagram, and Altman called the experience "a little weird" — but I think the glasses pack some neat tricks for a device that doesn't feel much bigger or heavier than standard eyewear.”

“Why it matters: The design of many cutting edge devices, like Apple's Vision Pro, starts with a long list of tech features and then tries to make everything smaller and lighter. With the Ray-Bans, Meta is taking a different approach — choosing a size, weight and price that people actually want, then seeing what you can do with it.”

“What's happening: The new glasses launched in October, and I've been using them (with my prescription) on and off for a few months. While they're largely a refinement of the initial version, they also include the ability to livestream and a built-in, if nascent, AI assistant.”

As Ina notes, the core use case of the Meta/Ray-Ban smart glasses to date has been taking quick photos and videos at the touch of a button. The same is possible, of course, with Apple’s Vision Pro, and was with Google’s infamous Google Glass over a decade ago:

“Of note: My favorite use of the second generation of these specs is the same as the first time around: They let me capture first-person photos and videos without having to pull out my phone.”

That, of course, changes with the new ‘multimodal’ voice AI feature, as Ina notes:

“Details: The new model's main addition is access to Meta AI, a chatbot that resembles a less capable ChatGPT. The bot can also provide some real-time data — such as weather and sports scores — via Microsoft's Bing.”

“Simply saying, "Hey, Meta" summons the bot's voice in your ear. It's still pretty hit or miss in terms of which queries it will answer and how well.”

“Another feature, currently only available to a small group of testers, is the ability to ask the AI chatbot questions based on what's in a user's field of view.”

“Saying "Hey Meta, look at this" brings up this capability and prompts the glasses to take a picture, which it then analyzes to answer the question asked. It can write fun captions, translate a menu or just describe what it sees.”

This ability to search with AI augmentation was also highlighted as a key feature by Samsung in its latest Galaxy S24 smartphones a few weeks ago, in partnership with Google.

Google has since rolled out that feature for its flagship Google Pixel 8 smartphones as well.

What I want to highlight about AI-augmented voice interaction is that these systems still work reasonably well on the simple requests familiar from previous voice devices: telling the time, relaying the weather, and answering basic questions off the web and Wikipedia.

Where they trip up is on the “Edge Cases”: the myriad situations, often mixing modalities, that confound these machine learning and generative AI systems. As this VentureBeat piece explains:

“In AI development, success or failure lies significantly in a data science team’s ability to handle edge cases, or those rare occurrences in how an ML model reacts to data that cause inconsistencies and interrupt the usability of an AI tool. This is especially crucial now as generative AI, now newly-democratized, takes center stage. Along with increased awareness comes new AI strategy demands from business leaders who now see it as both a competitive advantage and as a game changer.

“As companies go from AI in the labs to AI in the field or production AI, the focus has gone from data- and model-centric development, in which you bring all the data you have to bear on the problem, to the need to solve edge cases,” says Raj Aikat, CTO and CPO at iMerit. “That’s really what makes or breaks an application. A successful AI application or company is not one that gets it right 99.9 percent of the time. Success is defined by the ability to get it down to the .1 percent of the time it doesn’t work — and that .1 percent is about edge cases.”

Edge cases have been with us in traditional software and search technologies as well, and Google in particular has spent billions of dollars and decades getting Google Search to give us the right result when we’re ‘Feeling Lucky’. But edge cases abound far more in probabilistic AI systems, so the problem comes back in spades for engineers and developers.

As Ina again illustrates with the Meta ‘Smart Glasses’:

“As for that Sam Altman interview live-stream: I'll admit it — it was a bit weird for me, too.”

“I was conscious that anything I looked at — including my notes — could end up in the broadcast.”

“Also, I wasn't sure if I had properly turned off the setting that enables a recital of live comments from viewers through the speakers by my ear.”

Long-time users of Apple Siri HomePod devices, Amazon Alexa/Echo gizmos, and Google Nest speakers know this issue well, even with the pre-generative AI versions of these products and services. How often has someone on TV or a PC saying the trigger words ‘Siri’ or ‘Alexa’ set off the devices in one’s home or office?

Or, if you have multiple devices of a given type in a home or office, they all chirp up to answer the query or play the music, including the smartphone in one’s hand, without any ‘understanding’ of WHICH device the user was targeting with the query.

Edge cases, in both the data and the probabilistic code of AI/ML, are the bane of so many other AI/ML-driven systems. Especially ‘self-driving’ cars, whose challenges have vexed even mighty companies like Tesla, Apple and many others.

Voice-powered AIs have a similarly hard and complex learning curve ahead. And ironing it out will likely take a lot longer than we all think, or would like. Especially as we also throw in features like Star Trek-inspired ‘Universal Translation’ in multiple languages.

In the meantime, we’re all early adopters and testers for these products and services. It’s useful to keep that in mind as we set our short- and long-term expectations. And our emotions towards AI systems, especially voice-driven ones for AI assistance and companionship.

They will, of course, get better over time, or be replaced with even newer technologies. We’ll see how it goes. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)