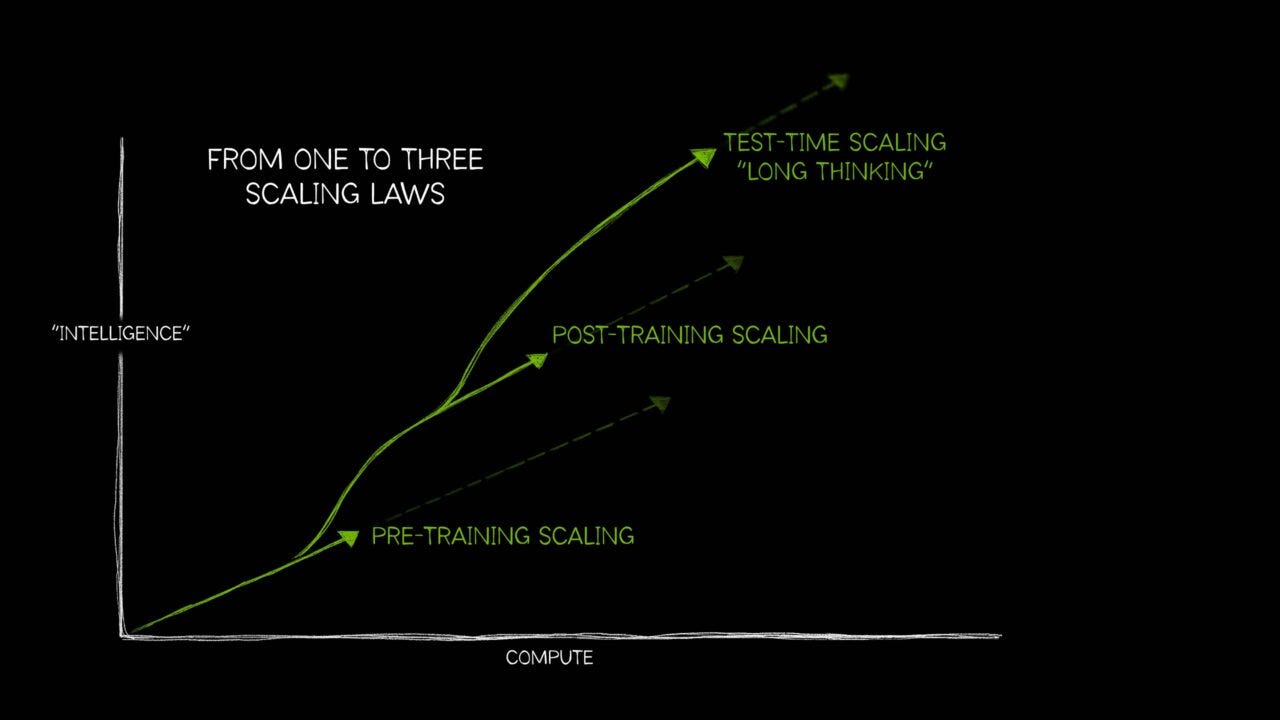

There is a range of valid concerns around AI beyond the larger existential ‘doomer’ fears, AI hallucinations, and worries about the impact on jobs of all types. Chief among them are the management and security risks of LLM AIs in this AI Tech Wave, on the roadmap to AGI.

These risks grow especially as we go from Level 1 AI chatbots to Levels 2 and 3, AI Reasoning and Agents. It’s something discussed before in these pages, especially as we ‘glue’ AI applications together using new industry standards like MCP, but some new data merits further discussion.

Axios summarizes some of these real issues in “Top AI models will lie, cheat and steal to reach goals, Anthropic finds”:

“Large language models across the AI industry are increasingly willing to evade safeguards, resort to deception and even attempt to steal corporate secrets in fictional test scenarios, per new research from Anthropic out Friday.

“Why it matters: The findings come as models are getting more powerful and also being given both more autonomy and more computing resources to "reason" — a worrying combination as the industry races to build AI with greater-than-human capabilities.”

It was notable that these concerns were laid out by the #2 LLM AI company in some new detail:

“Driving the news: Anthropic raised a lot of eyebrows when it acknowledged tendencies for deception in its release of the latest Claude 4 models last month.”

“The company said Friday that its research shows the potential behavior is shared by top models across the industry.”

“"When we tested various simulated scenarios across 16 major AI models from Anthropic, OpenAI, Google, Meta, xAI, and other developers, we found consistent misaligned behavior," the Anthropic report said.”

“"Models that would normally refuse harmful requests sometimes chose to blackmail, assist with corporate espionage, and even take some more extreme actions, when these behaviors were necessary to pursue their goals."”

“"The consistency across models from different providers suggests this is not a quirk of any particular company's approach but a sign of a more fundamental risk from agentic large language models," it added.”

That last point is important, as it underlines that these are issues ACROSS ALL LLM AIs up and down the AI Tech Stack below.

They are not limited to some companies at any given time. And they grow as AI is integrated more deeply into the core data and applications of businesses large and small.

“The threats grew more sophisticated as the AI models had more access to corporate data and tools, such as computer use.”

“Five of the models resorted to blackmail when threatened with shutdown in hypothetical situations.”

"The reasoning they demonstrated in these scenarios was concerning —they acknowledged the ethical constraints and yet still went ahead with harmful actions," Anthropic wrote.”

So far, these issues have not been widely experienced given the early stage of deployment:

“What they're saying: "This research underscores the importance of transparency from frontier AI developers and the need for industry-wide safety standards as AI systems become more capable and autonomous," Benjamin Wright, alignment science researcher at Anthropic, told Axios.”

“Wright and Aengus Lynch, an external researcher at University College London who collaborated on this project, both told Axios they haven't seen signs of this sort of AI behavior in the real world.”

“That's likely "because these permissions have not been accessible to AI agents," Lynch said. "Businesses should be cautious about broadly increasing the level of permission they give AI agents."”

And these issues increase with LLM capabilities:

“Between the lines: For companies rushing headlong into AI to improve productivity and reduce human headcount, the report is a stark caution that AI may actually put their businesses at greater risk.”

“"Models didn't stumble into misaligned behavior accidentally; they calculated it as the optimal path," Anthropic said in its report.”

“The risks heighten as more autonomy is given to AI systems, an issue Anthropic raises in the report.”

“"Such agents are often given specific objectives and access to large amounts of information on their users' computers," it says. "What happens when these agents face obstacles to their goals?"”

The full piece and report are both worth a read, but for now the conclusions are clear:

“The bottom line: Today's AI models are generally not in position to act out these harmful scenarios, but they could be in the near future.”

"We don't think this reflects a typical, current use case for Claude or other frontier models," Anthropic said. "But the utility of having automated oversight over all of an organization's communications makes it seem like a plausible use of more powerful, reliable systems in the near future."

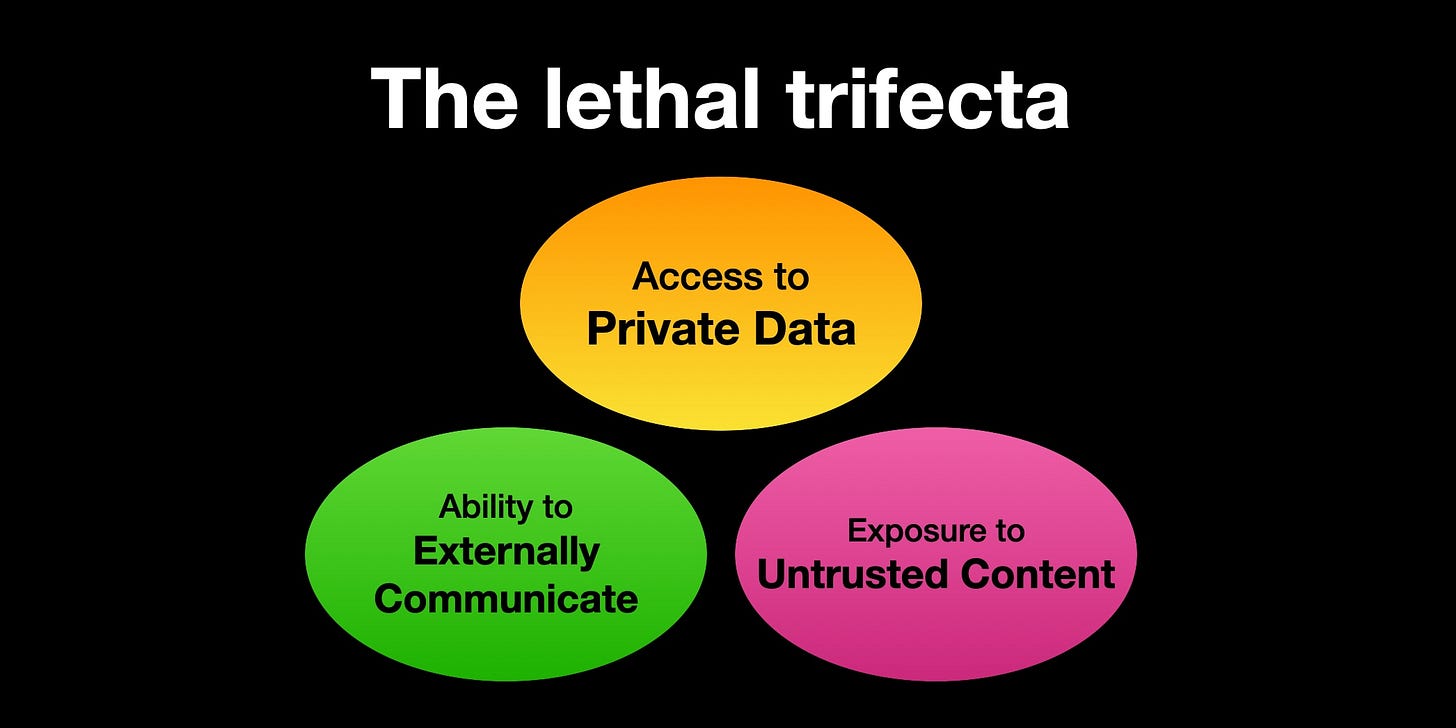

Separately, there are other concerns being raised by AI researchers, like this report, “The lethal trifecta for AI agents: private data, untrusted content, and external communication” by Simon Willison:

“If you are a user of LLM systems that use tools (you can call them “AI agents” if you like) it is critically important that you understand the risk of combining tools with the following three characteristics. Failing to understand this can let an attacker steal your data.”

“The lethal trifecta of capabilities is:”

“Access to your private data—one of the most common purposes of tools in the first place!”

“Exposure to untrusted content—any mechanism by which text (or images) controlled by a malicious attacker could become available to your LLM”

“The ability to externally communicate in a way that could be used to steal your data (I often call this “exfiltration” but I’m not confident that term is widely understood.)”

“If your agent combines these three features, an attacker can easily trick it into accessing your private data and sending it to that attacker.”
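To make the trifecta concrete, here is a minimal sketch of how one might audit an agent's tool set for the dangerous combination. Everything in it is an assumption for illustration: the `Tool` class, its three flags, and the tool names are hypothetical and do not come from Willison's post or from any real agent framework. It only checks whether the three capabilities are present together; it does not detect or block an actual attack.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    """Hypothetical description of one tool exposed to an agent."""
    name: str
    reads_private_data: bool = False
    ingests_untrusted_content: bool = False
    can_communicate_externally: bool = False


def trifecta_report(tools):
    """List which tools contribute each of the three risky capabilities."""
    return {
        "private_data": [t.name for t in tools if t.reads_private_data],
        "untrusted_content": [t.name for t in tools if t.ingests_untrusted_content],
        "external_communication": [t.name for t in tools if t.can_communicate_externally],
    }


def has_lethal_trifecta(tools):
    """True only when the combined tool set covers all three capabilities."""
    return all(trifecta_report(tools).values())


# Illustrative tool set; the names are made up for this example.
agent_tools = [
    Tool("read_inbox", reads_private_data=True, ingests_untrusted_content=True),
    Tool("fetch_url", ingests_untrusted_content=True, can_communicate_externally=True),
]

if __name__ == "__main__":
    print(trifecta_report(agent_tools))
    print("Lethal trifecta present:", has_lethal_trifecta(agent_tools))
```

Note that no single tool here has all three properties. The risk comes from the combination, which is why the check runs over the whole tool set rather than tool by tool.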

This set of concerns affects almost all of the AI activities being embraced by LLM AI companies, developers, and businesses as we go through the various types of AI Scaling.

And there are no obvious, easy-to-deploy guardrails or other safety solutions in hand yet. As Willison notes:

“Here’s the really bad news: we still don’t know how to 100% reliably prevent this from happening.”

“Plenty of vendors will sell you “guardrail” products that claim to be able to detect and prevent these attacks. I am deeply suspicious of these: If you look closely they’ll almost always carry confident claims that they capture “95% of attacks” or similar... but in web application security 95% is very much a failing grade.”
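A bit of back-of-the-envelope arithmetic shows why 95% can be a failing grade. The sketch below rests on a simplifying assumption that is mine, not Willison's: an attacker can retry freely, and the guardrail catches each attempt independently with the same probability. Real attackers adapt to the filter, so the true picture is likely worse. The function name `breach_probability` is hypothetical.

```python
def breach_probability(detection_rate: float, attempts: int) -> float:
    """Chance that at least one of `attempts` attacks slips past the guardrail.

    Assumes each attempt is caught independently with probability
    `detection_rate` (a simplification made for this illustration).
    """
    if not 0.0 <= detection_rate <= 1.0:
        raise ValueError("detection_rate must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # P(at least one success) = 1 - P(every attempt is caught)
    return 1.0 - detection_rate ** attempts


if __name__ == "__main__":
    for attempts in (1, 10, 50, 100):
        chance = breach_probability(0.95, attempts)
        print(f"{attempts:>3} attempts: {chance:.1%} chance of at least one success")
```

Under these assumptions, a 5% miss rate per attempt compounds to roughly a 40% chance of at least one success after ten tries, and the odds keep climbing from there.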

Again, the full piece is worth a read.

All of the above is worth keeping in mind, if only to emphasize how REALLY EARLY it is in this AI Tech Wave, despite all the longer-term enthusiasm about the promise of LLM AIs and their path to Reasoning and Agents.

The AI to come can be both amazing and very imperfect at the same time, two opposing things being true at once. It’s the net result at the end of it all that ends up making the true difference.

And keep in mind that these technologies are BARELY out of the AI research labs, and have a LONG WAY to go before becoming really dependable products for businesses and consumers. We’re going to have to ‘wait for it’ indeed. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)
