AI: The Fine Print around AI Encryption. RTZ #818

...a key intermediate solution to enhancing AI Privacy and Trust

I’ve discussed the accelerating challenges of AI Privacy and Trust in these pages from the beginning. And as leading LLM AI companies like OpenAI, Anthropic, Google and yes, even Apple race to figure out top-down and bottom-up AI applications and services in this AI Tech Wave, the need for better alignment of trust and privacy for end users becomes more critical than ever.

The industry is attacking this in a variety of technical ways. Note that a lot of privacy protections will also have to come via regulatory means at the state, federal and international levels. And those always lag the underlying AI technologies racing ahead.

Especially as AI becomes more deeply ingrained in daily mainstream user habits, having to do with their physical and mental health, social well-being, legal matters, and so many other domains.

For the most part, there is no one-size-fits-all solution.

But one area that could be a possible solution is AI chatbot encryption. It’s a topic that OpenAI founder/CEO Sam Altman discussed with a group of tech journalists at a San Francisco dinner a few days ago.

Axios outlines this chatbot encryption part in “OpenAI weighs encryption for temporary chats”:

“Sam Altman says OpenAI is strongly considering adding encryption to ChatGPT, likely starting with temporary chats.”

“Why it matters: Users are sharing sensitive data with ChatGPT, but those conversations lack the legal confidentiality of consultations with a doctor or lawyer.”

"We're, like, very serious about it," the OpenAI CEO said during a dinner with reporters last week. But, he added, "We don't have a timeline to ship something."

Most mainstream chatbot users don’t fret over how their queries are used and stored in AI systems, especially to further enhance the AI models for other users. The stakes rise with deeper memory-driven personalization:

“How it works: Temporary chats don't appear in history or train models, and OpenAI says it may keep a copy for up to 30 days for safety.”

“That makes temporary chats a likely first step for encryption.”

“Temporary and deleted chats are currently subject to a federal court order from May forcing OpenAI to retain the contents of these chats.”

This is where the technical and legal fine print matters. Especially as our computers and smartphones themselves ‘record and remember’ everything about us for the LLM and SLM AI models ahead (large and small language models).

This becomes even more important as almost every web browser is soon going to become an ‘AI Browser’, as I’ve discussed as well.

“Yes, but: Encrypted messaging keeps providers from reading content unless an endpoint holds the keys. With chatbots, the provider is often an endpoint, complicating true end-to-end encryption.”

“In this case, OpenAI would be a party to the conversation. Encrypting the data while it is in transit isn't enough to keep OpenAI from having sensitive information available to share with law enforcement.”
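To make that distinction concrete, here is a minimal Python sketch of my own (not anything OpenAI or Axios has described). It contrasts a messaging-style relay, which only ever sees ciphertext, with a chatbot provider, which has to hold the session key to run the model and so ends up with the plaintext. The names `keystream_xor`, `Relay`, `ChatbotProvider` and `demo` are hypothetical, and the cipher is a toy built from HMAC-SHA256 purely for illustration. It is not secure and not how any real service encrypts traffic.

```python
import hashlib
import hmac
import secrets


def keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy cipher for illustration only (NOT secure): XOR the data with an
    HMAC-SHA256 keystream. Applying it twice with the same key and nonce
    returns the original data."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        block = hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256)
        stream.extend(block.digest())
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


class Relay:
    """Messaging-style provider (hypothetical): forwards ciphertext and
    never holds the key, so it cannot read the content."""

    def __init__(self) -> None:
        self.seen: list[bytes] = []

    def forward(self, nonce: bytes, ciphertext: bytes) -> tuple[bytes, bytes]:
        self.seen.append(ciphertext)  # all the relay can store is ciphertext
        return nonce, ciphertext


class ChatbotProvider:
    """Chatbot-style provider (hypothetical): it is the other endpoint, so it
    must hold the session key and decrypt the prompt to answer it."""

    def __init__(self, session_key: bytes) -> None:
        self.session_key = session_key
        self.plaintext_log: list[bytes] = []

    def handle(self, nonce: bytes, ciphertext: bytes) -> bytes:
        prompt = keystream_xor(self.session_key, nonce, ciphertext)
        self.plaintext_log.append(prompt)  # plaintext now exists on the server
        return prompt


def demo() -> tuple[bytes, bytes, bytes]:
    message = b"my most sensitive medical question"
    key = secrets.token_bytes(32)
    nonce = secrets.token_bytes(16)
    ciphertext = keystream_xor(key, nonce, message)

    relay = Relay()
    relay.forward(nonce, ciphertext)

    provider = ChatbotProvider(key)
    provider.handle(nonce, ciphertext)

    # What the user sent, what a relay sees, what the chatbot provider sees.
    return message, relay.seen[0], provider.plaintext_log[0]
```

Calling `demo()` returns the original message, the relay's view (unreadable ciphertext), and the chatbot provider's view (the original message again). That last part is the fine print: encrypting the connection does not change what the endpoint itself can read and retain.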

Apple is unique amongst the LLM AI and Big Tech companies in its approach to what it calls ‘Apple Intelligence’:

“Apple has addressed this challenge, at least in part, with its "Private Cloud Compute" for Apple Intelligence, which allows queries to run on Apple servers without making the data broadly available to the company.”

Now here is the real rub longer term. Most mainstream users want their AI to remember lots of details about themselves, i.e., better memory. It’s a subject I’ve written a lot about. But better memory is at odds with fully encrypted AI services from centralized LLM models across the internet.

“Adding full encryption to all of ChatGPT would also pose complications as many of its services, including long-term memory, require OpenAI to maintain access to user data.”

Then there’s the issue of government regulations in the US and abroad. Especially in places like Europe and China:

“The big picture: Altman and OpenAI have advocated for some protection from government access to certain data, especially when people are relying on ChatGPT for medical and legal advice — protections that apply when you speak to a licensed professional.”

"If you can get better versions of those [medical and legal chats] from an AI, you ought to be able to have the same protections for the same reason," Altman said, echoing comments he has recently made.

The problem is nascent for now, but poised to reach billions of mainstream users imminently:

“OpenAI hasn't yet seen a large number of demands for customer data from law enforcement.” "The numbers are still very small for us, like double digits a year, but growing," he said. "It will only take one really big case for people to say, like, all right, we really do have to have a different approach here."

“Between the lines: Altman said this issue wasn't originally on his radar but that it has become a priority after he realized how people are using ChatGPT and how much sensitive data they are sharing.”

"People pour their heart out about their most sensitive medical issues or whatever to ChatGPT," Altman said. "It has radicalized me into thinking that AI privilege is a very important thing to pursue."

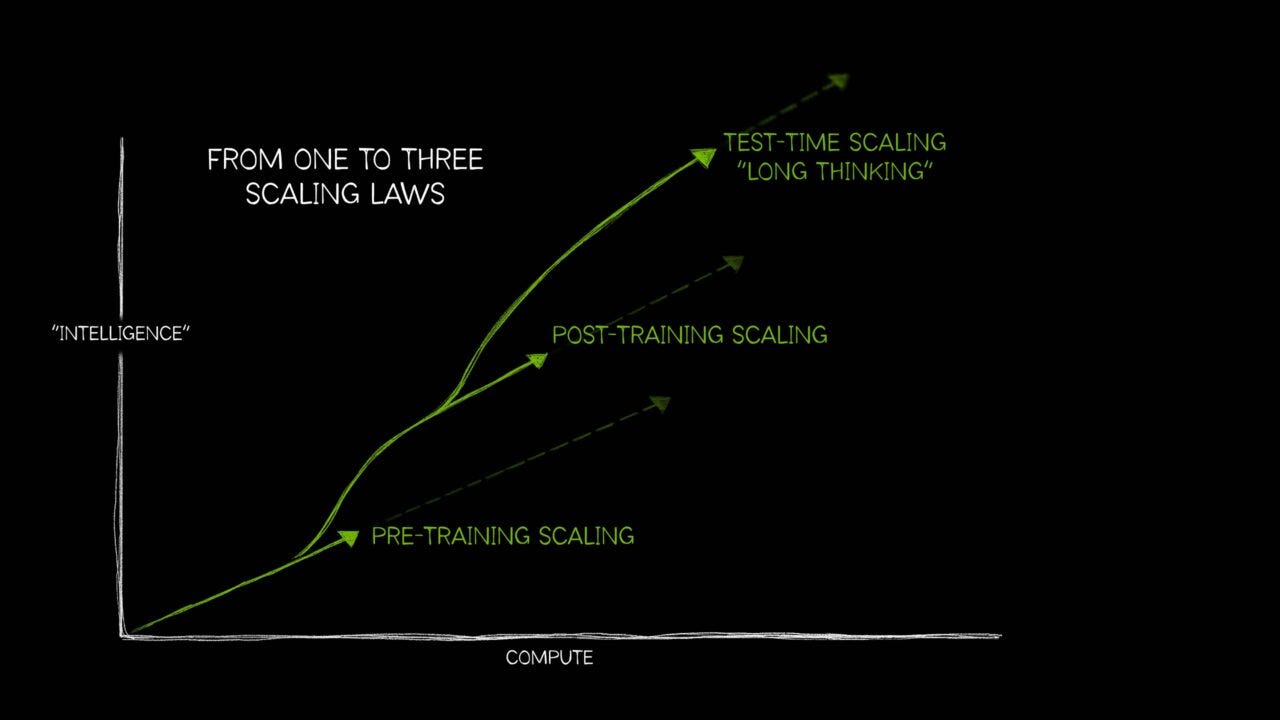

And the solutions and their timelines are vague for now, while AI is Scaling in multiple directions:

“What to watch: Altman predicted some sort of protections will emerge, adding that lawmakers have been somewhat receptive and generally favor privacy protections.”

"I don't know how long it will take," he said. "I think society has got to evolve."

“Go deeper: Generative AI's privacy problem.”

The above is a good reminder of the trust and privacy challenges coming fast in this AI Tech Wave. The solutions are not just technical but also regulatory, and they depend on what mainstream users are ultimately comfortable with as their daily habits incorporate more ‘AI’ activities.

It’s ‘just’ chatbots today, but it’s going to be a whole lot more. Sooner than we think. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)