AI: OpenAI's Intimidating AI Compute & Power Plans. RTZ #860

...setting very aggressive goalposts vs very aggressive peers

OpenAI’s founder/CEO Sam Altman continues to gaze deep into the future of AI possibilities.

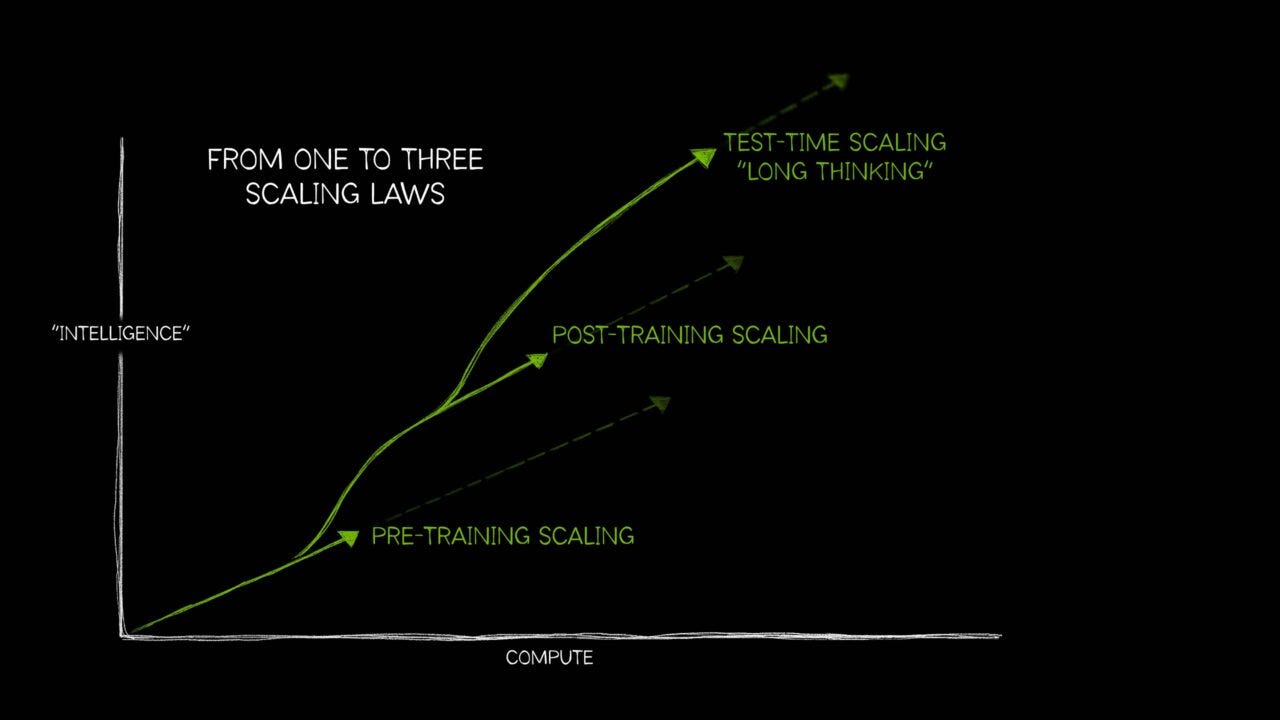

I’ve long discussed how Scaling Power goes hand-in-hand with the current AI Tech Wave aspirations to Scale AI.

And do it in a hurry.

Not in decades, but a decade or less. The reason, of course, is to generate exponential amounts of ‘Intelligence tokens’ for countless businesses and mainstream users, as they scale AI itself. And ramp countless large and small language models for AI training, inference, reinforcement learning, and boundless types of AI Reasoning, AI Agents and other AI augmenting tasks ahead.

And do it all with truly accelerated alacrity. To get to AGI and/or Superintelligence, or any other ‘AI God’ of choice that the principal backers ardently believe in.

All this means that the LLM AI and AI Cloud hyperscalers will need to raise trillions for both AI Compute AND AI Power in the Gigawatts. And the numbers are mind-boggling as we sit here in 2025. Only about a thousand days and a month after OpenAI’s ‘ChatGPT moment’ on November 30, 2022.

And OpenAI in particular is laying out the most aggressive goalposts for the AI Compute and Power builds ahead. Partly to Babe Ruth it, and partly to intimidate the other AI Apes on the field.

The Information lays it out in “Sam Altman Wants 250 Gigawatts of Power. Is That Possible?”:

“Artificial intelligence is hungry for power at a scale that defies belief.”

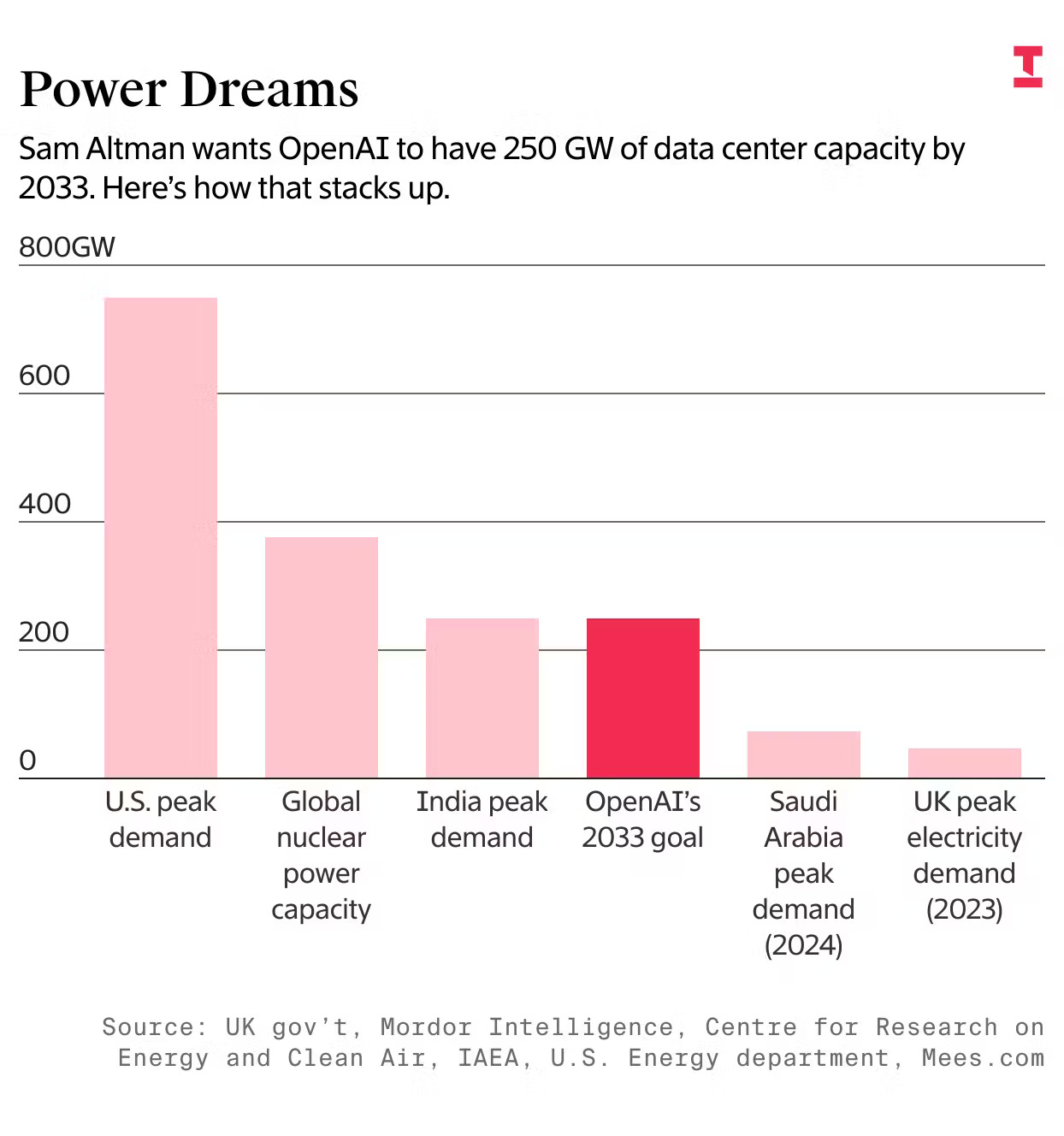

“Last week, OpenAI and Nvidia said they would work together to develop 10 gigawatts of data center capacity over an unspecified period. Inside OpenAI, Sam Altman floated an even more staggering number: 250 GW of compute in total by 2033, roughly one-third of the peak power consumption in the entire U.S.!”

I wrote about it, but the numbers still need to be digested.

“Let that sink in for a minute. A large data center used to mean 10 to 50 megawatts of power. Now, developers are pitching single campuses in the multigigawatt range—on par with the energy draw of entire cities—all to power clusters of AI chips.”

“Or think of it this way: A typical nuclear power plant generates around 1 GW of power. Altman’s target would mean the equivalent of 250 plants just to support his own company’s AI. And based on today’s cost to build a 1 GW facility (around $50 billion), 250 of them implies a cost of $12.5 trillion.”

For comparison, global GDP this year is about $125 trillion. So that is roughly 10% of today’s global GDP going into AI Compute and Power, just for OpenAI.

And the driver, of course, is competition and AGI aspirations.
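As a quick back-of-envelope check on the arithmetic above, here is a minimal Python sketch. It only uses the round numbers already quoted in this post (250 GW, roughly $50 billion per GW, and about $125 trillion of global GDP); the constant and function names are my own illustrative choices, and none of this is an independent cost estimate.

```python
# Back-of-envelope check of the figures quoted above.
# All inputs are the round numbers cited in this post, not independent estimates.
TARGET_GW = 250              # Altman's reported compute target
COST_PER_GW_USD = 50e9       # ~$50 billion per 1 GW facility, per The Information
GLOBAL_GDP_USD = 125e12      # ~$125 trillion, the GDP figure used in this post


def buildout_cost(gigawatts: float, cost_per_gw: float = COST_PER_GW_USD) -> float:
    """Return the total cost in dollars for a buildout of the given size."""
    return gigawatts * cost_per_gw


total_cost = buildout_cost(TARGET_GW)
share_of_gdp = total_cost / GLOBAL_GDP_USD

print(f"Implied cost: ${total_cost / 1e12:.1f} trillion")
print(f"Share of global GDP: {share_of_gdp:.0%}")
```

Changing `COST_PER_GW_USD` or `GLOBAL_GDP_USD` shows how sensitive the headline percentage is to either assumption.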

“We are in a compute competition against better-resourced companies,” Altman wrote to his team last week, likely referring to Google and Meta Platforms, which also have discussed or planned large, multigigawatt expansions. (XAI CEO Elon Musk also knows a thing or two about raising incredible amounts of capital.)”

“We must maintain our lead,” Altman said.”

OpenAI has already taken some giant steps from almost a standing start in just a couple of years. Especially with partners like SoftBank to build out ‘Stargate’.

“OpenAI expects to exit 2025 with about 2.4 GW of computing capacity powered by Nvidia chips, said a person with knowledge of the plan, up from 230 MW at the start of 2024.”

“Ambition is one thing. Reality is another, and it’s hard to see how the ChatGPT maker would leap from today’s level to hundreds of gigawatts within the next eight years. Obviously, that figure is aspirational.”

This includes startling even their core AI partner, Nvidia:

“Then again, OpenAI’s fast-rising server needs surprised even Nvidia executives, said people on both sides of the relationship.”

“Before the events of last week, OpenAI had contracted to have around 8 GW by 2028, almost entirely consisting of servers with Nvidia graphics processing units. That’s already a staggering jump, and OpenAI is planning to pay hundreds of billions of dollars in cash to the cloud providers who develop the sites.”

“To put it into perspective, Microsoft’s entire Azure cloud business operated at about 5 GW at the end of 2023—and that was to serve all of its customers, not just AI. (Azure is No. 2 after Amazon’s cloud business.)”
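To put the capacity figures quoted above on a common footing, here is a rough Python sketch of the implied growth rates. The gigawatt values come straight from the quotes; the year spans are my assumptions (two years from the start of 2024 to the end of 2025, and the "next eight years" the article mentions for the 250 GW target), and the names are purely illustrative.

```python
# Capacity figures as quoted from The Information, in gigawatts.
START_2024_GW = 0.23       # 230 MW at the start of 2024
EXIT_2025_GW = 2.4         # expected at the end of 2025
TARGET_2033_GW = 250.0     # Altman's floated target
AZURE_END_2023_GW = 5.0    # Microsoft Azure, all customers, end of 2023


def annual_growth_factor(start_gw: float, end_gw: float, years: float) -> float:
    """Constant yearly multiplier that turns start_gw into end_gw over `years`."""
    return (end_gw / start_gw) ** (1.0 / years)


# Assumption: start of 2024 to end of 2025 is treated as two full years.
past_factor = annual_growth_factor(START_2024_GW, EXIT_2025_GW, years=2)
# Assumption: the target is eight years out, as the quoted article frames it.
needed_factor = annual_growth_factor(EXIT_2025_GW, TARGET_2033_GW, years=8)
azure_multiples = TARGET_2033_GW / AZURE_END_2023_GW

print(f"Growth per year, 2024-2025: {past_factor:.2f}x")
print(f"Growth per year needed to reach 250 GW: {needed_factor:.2f}x")
print(f"250 GW expressed in end-2023 Azures: {azure_multiples:.0f}")
```

Under these assumptions, capacity grew a bit more than threefold per year across 2024 and 2025, and would have to keep compounding at close to 1.8x per year for eight more years. That is a slower rate than the recent past, but off a vastly larger base.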

“Bigger Is Still Better”

“Data center developers tell me most of OpenAI’s top competitors are asking for single campuses in the 8 to 10 GW range, an order of magnitude bigger than anything the industry has ever attempted to build.”

And relying on AI ‘contractors’ like Oracle, CoreWeave and many others to aggressively execute the buildouts flawlessly and at scale:

“A year and a half ago, OpenAI’s plan with Microsoft to build a single Stargate supercomputer costing $100 billion seemed like science fiction. Barring a seismic macroeconomic change, these types of projects now seem like a real possibility.”

“The rationale behind them is simple: Altman and his rivals believe that the bigger the GPU cluster, the stronger the AI model they can produce. Our team has been at the forefront of reporting on some of the limitations of this scaling law, as evidenced by the smaller step-up in quality between GPT-5 and GPT-4 than between GPT-4 and GPT-3.”

“Nevertheless, Nvidia’s fast pace of GPU improvements has strengthened the belief of Altman and his ilk that training runs conducted with Blackwell chip clusters this year and with Rubin chips next year will crack open significant gains, according to people who work for these leaders.”

Quite the comparison chart above. Especially in the context of the past.

“In the early days of the AI boom, it was hard to develop clusters of a few thousand GPUs. Now firms are stringing together 250,000, and they want to connect millions in the future.”

“That desire runs into a pretty important constraint: electricity. Companies are already trying to overcome that hurdle in unconventional ways, by building their own power plants instead of waiting for utilities to provide grid power, or by putting facilities in remote areas where energy is easier to secure.”

“Still, the gap between company announcements and the reality on the ground is enormous. Utilities by nature are conservative when it comes to adding new power generation. They won’t race to build new plants if there’s a risk of ending up with too much capacity—no matter who is asking.”

The AI Compute and Scaling plans sweep the continental US and beyond, with a global land rush underway:

“‘Activating the Full Industrial Base’”

“OpenAI’s largest cluster under development, in Abilene, Texas, currently uses grid power and natural gas turbines. But other projects it has announced in Texas will use a combination of natural gas, wind and solar.”

“Milam County, where OpenAI is planning one of its next facilities, recently approved a 5 GW solar cell plant, for instance. And gas is expected to be the biggest source of power for the planned sites, this person said.”

“To accomplish its goals, OpenAI and its partners will need the makers of gas and wind turbines to greatly expand their supply chains. That’s not an easy task, given that it involves some risk-taking on the part of the suppliers. Perhaps Nvidia’s commitment to funding OpenAI’s data centers while maintaining control of the GPUs will make those conversations easier.”

And they will need a lot of ongoing help from Washington.

“Altman told his team that obtaining boatloads of servers “means activating the full industrial base of the world—energy, manufacturing, logistics, labor, supply chain—everything upstream that will make large-scale compute possible.”

“There are other bottlenecks, such as getting enough chipmaking machines from ASML and getting enough manufacturing capacity from Taiwan Semiconductor Manufacturing Co., which produces Nvidia’s GPUs. Negotiating for that new capacity will fall to Nvidia.”

“Predicting the future is notoriously difficult, but a lot of things will need to go right for OpenAI and its peers to get all the servers they want. In the meantime, they will keep making a lot of headlines in their quest to turn the endeavor into a self-fulfilling prophecy.”

The good news thus far is that the world has bought into the above ‘vision’, and for now is eager to work with the AI industry to find clever pathways to fund this AI Compute and Power buildout, with both private and public capital, employing both debt- and equity-driven funding vehicles.

Almost every LLM AI company is racing ahead towards these AI windmills, as some might say, and doing it globally. Not to mention many countries large and small, in the name of ‘Sovereign AI’.

And no one is as aggressive about being out in front as OpenAI. Well, maybe Meta led by founder/CEO Mark Zuckerberg, and Tesla/xAI led by founder/CEO Elon Musk. And of course most of the ‘Mag 7s’, with their trillions in market caps.

But even they’re all running into the limits of their annual free cash flow and ample balance sheets, and are increasingly having to resort to clever external financing vehicles with some of the biggest financial and sovereign investors in the world.

And as I’ve articulated here many times, the funding for all this AI Compute and Power is going to run ahead of the AI revenues that will presumably follow. So investors of all types will have to keep that in mind, and wait for their rewards.

That sets the stage for the next decade in AI aspirations. It’s going to be quite the spectacle ahead in this AI Tech Wave. Incomparable to most tech waves that have come before.

With OpenAI running and shrieking hard in the lead. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)