AI: Microsoft & OpenAI chart more courses. RTZ #504

...working both together and apart on various AI fronts

I’ve been discussing how OpenAI is processing and navigating through a concurrent flow of challenges and opportunities in recent months. All as it approaches the second anniversary of its iconic ‘ChatGPT moment’ next month.

It closed a $10 billion-plus set of equity and debt fundraises at a $157 billion-plus valuation, while continuing to execute on research and product through both senior staff turnover and a governance framework transition into next year.

The other key element that OpenAI is managing of course is its pivotal $13 billion+ partnership with Microsoft over the last few years. My post last year, ‘It Takes Two to Tango’, goes into this in some detail. It’s notable that both Microsoft CEO Satya Nadella and OpenAI founder/CEO Sam Altman have been working hand in hand, leveraging each other’s strengths and assets.

And deeply driving their organizations to work closely together, defining the early stages of this AI Tech Wave.

Through some bumpy stretches. Even as the companies have been competing in some areas, and cooperating in many others. And while Microsoft has been driving its own AI Copilot priorities, and potential AI backup plans. All the while working together on big long term AI infrastructure plans like the $100 billion ‘Stargate’ AI data center efforts.

But as both companies lean into AI next year and beyond, there are bound to be areas where each would rather go it alone. It’s also a topic I’ve discussed before, and it looks like more developments may be underway on the pivotal AI data center infrastructure front.

The Information’s piece “OpenAI Eases Away From Microsoft Data Centers”:

“OpenAI and Microsoft soared to new heights by developing and sharing artificial intelligence, specialized servers and product revenue with each other. Microsoft’s cash has paid for nearly all of that work.”

“After marching in lockstep, their relationship is changing. Last week, after OpenAI CEO Sam Altman and Chief Financial Officer Sarah Friar raised $6.6 billion in capital, mostly from a slew of financial firms, they told some employees OpenAI would play a greater role in lining up data centers and AI chips to develop technology rather than relying solely on Microsoft, according to a person who heard the remarks.”

“Friar previously told some shareholders Microsoft hadn’t moved fast enough to supply OpenAI with enough computing power—hence the need to pursue other data center deals, which it had started to do, said a person who was involved in the conversation. For instance, OpenAI’s growing impatience with Microsoft recently prompted it to arrange an unusual data center deal in Texas with one of Microsoft’s rivals.”

The core area of course is around the ‘AI Table Stakes’ going into 2025: much larger AI data center and power infrastructure than any big tech company has today. I discussed that priority for the AI industry yesterday. The Information explains:

“The companies previously included a provision in their contract that gave OpenAI room to work on such deals, despite agreeing to make Microsoft its exclusive cloud server provider, said a different person with knowledge of their agreement.”

“Taking more control of data center plans could help OpenAI stay ahead of rivals such as Anthropic and xAI, which aren’t locked into a single cloud provider.”

“The comments from OpenAI leaders last week reflected what’s been happening behind the scenes in recent months: While OpenAI lowers its dependency on Microsoft data centers, Microsoft has aimed to lessen its reliance on OpenAI technology as they increasingly compete in selling AI products and as Microsoft tries to lower the cost of running its products.”

Both companies of course emphasize that the core partnership remains intact:

“A Microsoft spokesperson did not have a comment on Altman’s and Friar’s remarks. An OpenAI spokesperson didn’t address those remarks and said its “strategic cloud relationship with Microsoft is unchanged.””

Part of the catalyst of course is OpenAI’s upcoming transition from a non-profit to a PBC (Public Benefit Corp) structure:

“Now, as OpenAI moves forward with a plan to convert to a for-profit from a nonprofit, it is negotiating various financial details with Microsoft, such as the size of the stake Microsoft would have. Currently, in exchange for investing more than $13 billion in OpenAI, Microsoft has the rights to 75% of OpenAI’s future profits until the software company’s principal investment of more than $13 billion is repaid, and 49% of profits after that up to a theoretical cap.”

“Because of Microsoft’s outsize rights to future OpenAI profits under the current nonprofit configuration, it could end up with a significant portion of OpenAI’s shares after it turns into a for-profit. The nonprofit currently overseeing OpenAI also will get a sizable stake in the new for-profit firm—likely at least 25%, said a person who has spoken to company leaders.”
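For readers who like to see the arithmetic, the profit-sharing terms quoted above can be sketched roughly as a two-tier waterfall. This is only an illustration under my own simplifying assumptions: the function name `microsoft_payout` and its parameters are hypothetical, the principal is rounded to $13 billion, and the "theoretical cap" is left as an optional input because its size is not given in the piece.

```python
def microsoft_payout(cumulative_profit, principal=13.0,
                     early_rate=0.75, late_rate=0.49, cap=None):
    """Rough sketch of Microsoft's cumulative payout, in $ billions.

    Assumptions (not from the article): principal rounded to 13.0,
    profits treated as one cumulative figure, and the cap supplied
    by the caller since the article does not state its size.
    """
    # Cumulative profit OpenAI must earn before the 75% tier
    # has returned the full principal to Microsoft.
    breakeven_profit = principal / early_rate

    if cumulative_profit <= breakeven_profit:
        # Tier 1: Microsoft takes 75% of every profit dollar.
        payout = early_rate * cumulative_profit
    else:
        # Tier 2: principal is repaid, so Microsoft takes 49%
        # of profit earned beyond the breakeven point.
        payout = principal + late_rate * (cumulative_profit - breakeven_profit)

    # Apply the cap only if the caller supplies one.
    if cap is not None:
        payout = min(payout, cap)
    return payout


if __name__ == "__main__":
    print(microsoft_payout(10.0))  # 7.5
```

Under these assumptions, OpenAI would need roughly $17.3 billion in cumulative profits (13 / 0.75) before the 49% tier kicks in.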

And core interests for both are still very much aligned:

“The Microsoft-OpenAI partnership will still be critical for both firms. Microsoft can use OpenAI’s technology in perpetuity for its own products and OpenAI pays Microsoft to run its AI business in its Azure data centers. OpenAI pays a 20% commission to Microsoft when it sells access to AI models to other businesses through an application programming interface, and Microsoft pays OpenAI a 20% commission from reselling OpenAI models to Azure customers through a Microsoft API.”

Just like Microsoft has been building its independent AI strategy, OpenAI is also focusing on similar priorities, especially in the context of AI Compute Infrastructure, as OpenAI scales AI to GPT-5 ‘Orion’ and beyond.

“Lately, Altman has sought to make the companies’ extensive computing partnership more flexible.”

“He’s become concerned about Microsoft’s ability to provide servers to OpenAI fast enough to stay ahead of new rivals such as Elon Musk’s xAI, according to four people working on data center projects for OpenAI. Altman’s urgency around securing more data center space picked up after Musk took a public victory lap about creating one of the largest clusters of Nvidia AI chips in the world, these people said. Musk co-founded OpenAI with Altman and others.”

“Microsoft, for its part, effectively paid $650 million to hire a team of technologists from a startup called Inflection to try to develop software that might compete with OpenAI’s.”

Microsoft also has more internal AI mouths to feed, as well as its aspirations to build global AI infrastructure for its cloud business Azure’s enterprise customers, and monetize AI Copilot across Microsoft’s global customer base:

“And Microsoft has become more cautious about paying for ever-bigger server clusters for OpenAI as the cloud giant aims to ensure it won’t take a loss on costly data centers that may not generate consistent revenue in the coming decades. Part of Microsoft’s concern is that clusters optimized for training AI models aren’t as cost effective when used for other purposes, such as powering AI apps, known as inference. It may be hard to predict the demand for large-scale training in the coming decade, which makes it risky to build expensive computing clusters that may sit idle for stretches of time.”

OpenAI, on its side, is trying something different in Texas:

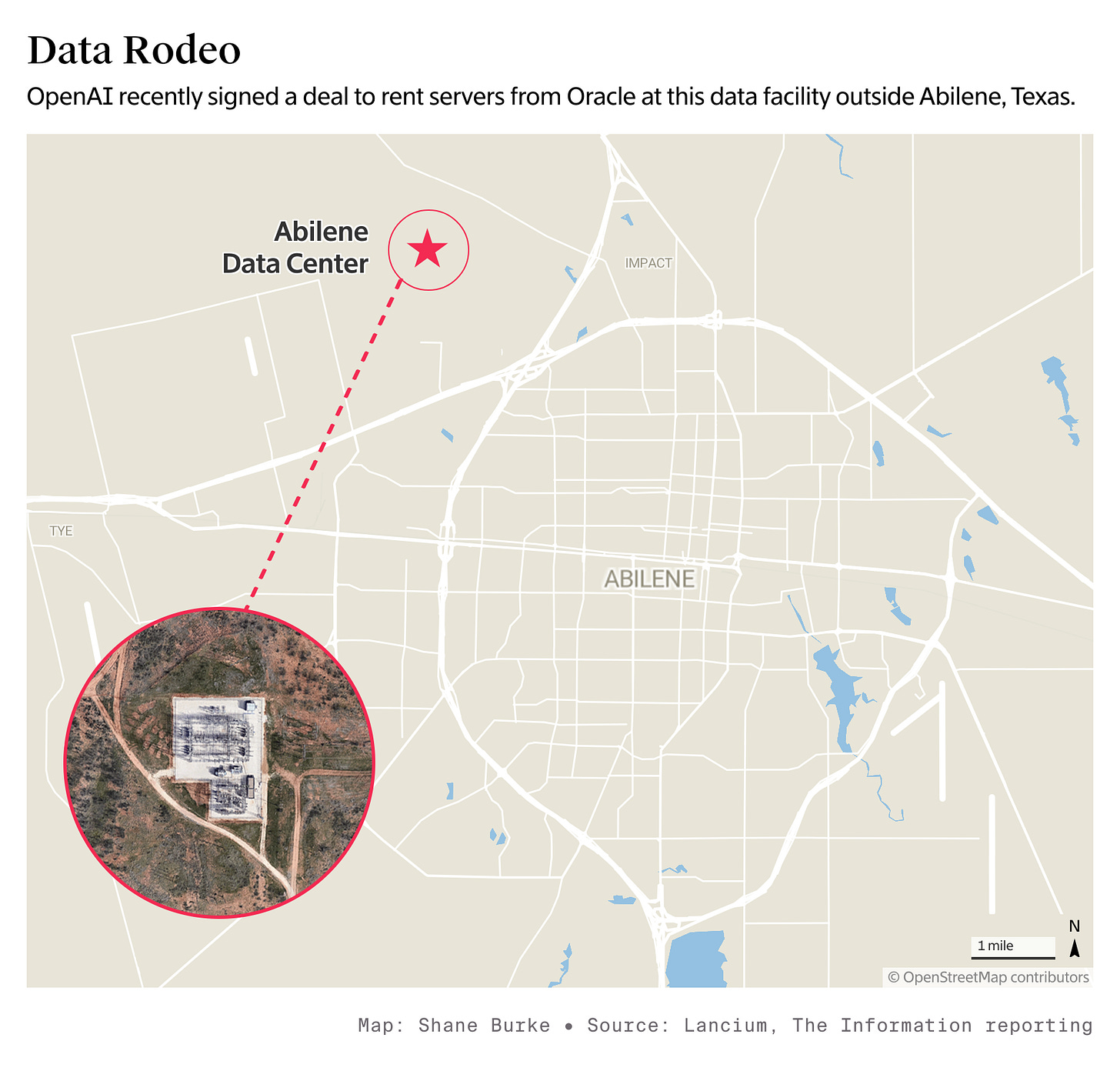

“One prominent example of how OpenAI’s relationship with Microsoft is changing involves a data center under construction in Abilene, Texas. OpenAI recently signed a deal to rent servers from the facility, which Oracle—a cloud rival to Microsoft—and other firms are developing and which they expect will be one of the largest in the world. Previously, Microsoft handled all data center affairs for OpenAI.”

“In the new deal, OpenAI negotiated directly with Oracle. Microsoft was informed about the negotiations and included in a press release about the deal, but in reality it has had relatively little involvement, according to two people working on it. Microsoft likely blessed the deal, given that Microsoft previously negotiated the right to block any arrangements OpenAI made with other cloud providers, according to someone who reviewed the contract.”

And new cloud intermediaries like Oracle are being used:

“Importantly for Microsoft, because Azure is the exclusive cloud provider to OpenAI, Microsoft is technically Oracle’s customer on OpenAI’s behalf, and can still count OpenAI’s use of the Oracle clusters as revenue for Azure, according to another person who spoke to a key Microsoft executive about the deal. (OpenAI said in a June post on X that it would “use the Azure AI platform on [Oracle] infrastructure,” without elaborating.)”

“Going Big in Abilene”

“OpenAI is now in talks with Oracle to lease the entire Abilene data center site, which could eventually grow to 2 gigawatts if Oracle is able to access more power at the site, according to one of the people involved in the deal. That amount of power could light up multiple big cities. The site is on track to draw a little under 1 gigawatt of power by mid-2026, meaning it could house several hundred thousand Nvidia AI chips.”

That would put the data center in the top tiers of the ones fielded by Microsoft, Google, Meta, Amazon and others. All buying their AI chips and infrastructure of course from Nvidia in this AI Gold Rush.

These developments mean current and future AI infrastructure plans between the two companies may also potentially shift:

“The changing relationship also means OpenAI could leave Microsoft out of more ambitious data center plans. The companies have long discussed how they could work together on bigger and bigger facilities, including a $100 billion supercomputing server cluster, which Microsoft code-named Mercury and OpenAI leaders referred to as Stargate.”

But other initiatives may remain intact and on track:

“The companies are discussing the next phase of data center expansion, a project known as Fairwater. Microsoft plans to give OpenAI access to around 300,000 of Nvidia’s latest graphics processing units, the GB200s, from two data center sites in Wisconsin and Atlanta by the end of next year, according to the database and someone working on the plans. OpenAI will have access to a smaller number of GB200s earlier in the year in the Phoenix area as part of an expansion of an existing Microsoft data center it already uses.”

The whole piece is worth reading for deeper details. But the big takeaway of course is that the two leading companies in this AI Tech Wave so far are each charting additional paths ahead.

The industry remains far more dynamic and competitive than any prior tech wave I’ve seen at this early a stage, with far bigger dollars at stake, far earlier. Lots of twists and turns to come. And unimaginable amounts of ‘processing’ to come. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)