AI: Ilya's back with Super Funding for 'Safe Superintelligence'. RTZ #471

...aka SSI, the new big LLM AI hyperscaler

It could be argued that much of what has transpired thus far in this AI Tech Wave has been driven by Ilya Sutskever, former OpenAI co-founder and chief scientist. He is also now famous for his role in OpenAI’s Governance drama back last November, and for his ‘Feel the AGI’ charges at the company (aka ‘Feel the ASI’).

Long journey indeed from there to here.

He subsequently departed to found Safe Superintelligence (SSI), to pursue a truly ‘safer’ AGI (Artificial General Intelligence), on its way to ASI (Artificial Superintelligence) at some point. Safer even, presumably, than the efforts towards a ‘Safer AI’ at current LLM AI #2 Anthropic, itself founded by former OpenAI researchers.

Now the details around SSI’s funding and direction are coming into focus. As Reuters reports in “OpenAI co-founder Sutskever's new safety-focused AI startup SSI raises $1 billion”:

The highlights:

“Three-month-old SSI valued at $5 billion, sources say”

“Funds will be used to acquire computing power, top talent”

“Investors include Andreessen Horowitz, Sequoia Capital”

“Safe Superintelligence (SSI), newly co-founded by OpenAI's former chief scientist Ilya Sutskever, has raised $1 billion in cash to help develop safe artificial intelligence systems that far surpass human capabilities, company executives told Reuters.”

“SSI, which currently has 10 employees, plans to use the funds to acquire computing power and hire top talent. It will focus on building a small highly trusted team of researchers and engineers split between Palo Alto, California and Tel Aviv, Israel.”

And two of OpenAI’s investors, a16z and Sequoia, are of course also part of the cap table of SSI:

“Investors included top venture capital firms Andreessen Horowitz, Sequoia Capital, and DST Global. NFDG, an investment partnership run by Nat Friedman and SSI's Chief Executive Daniel Gross, also participated.”

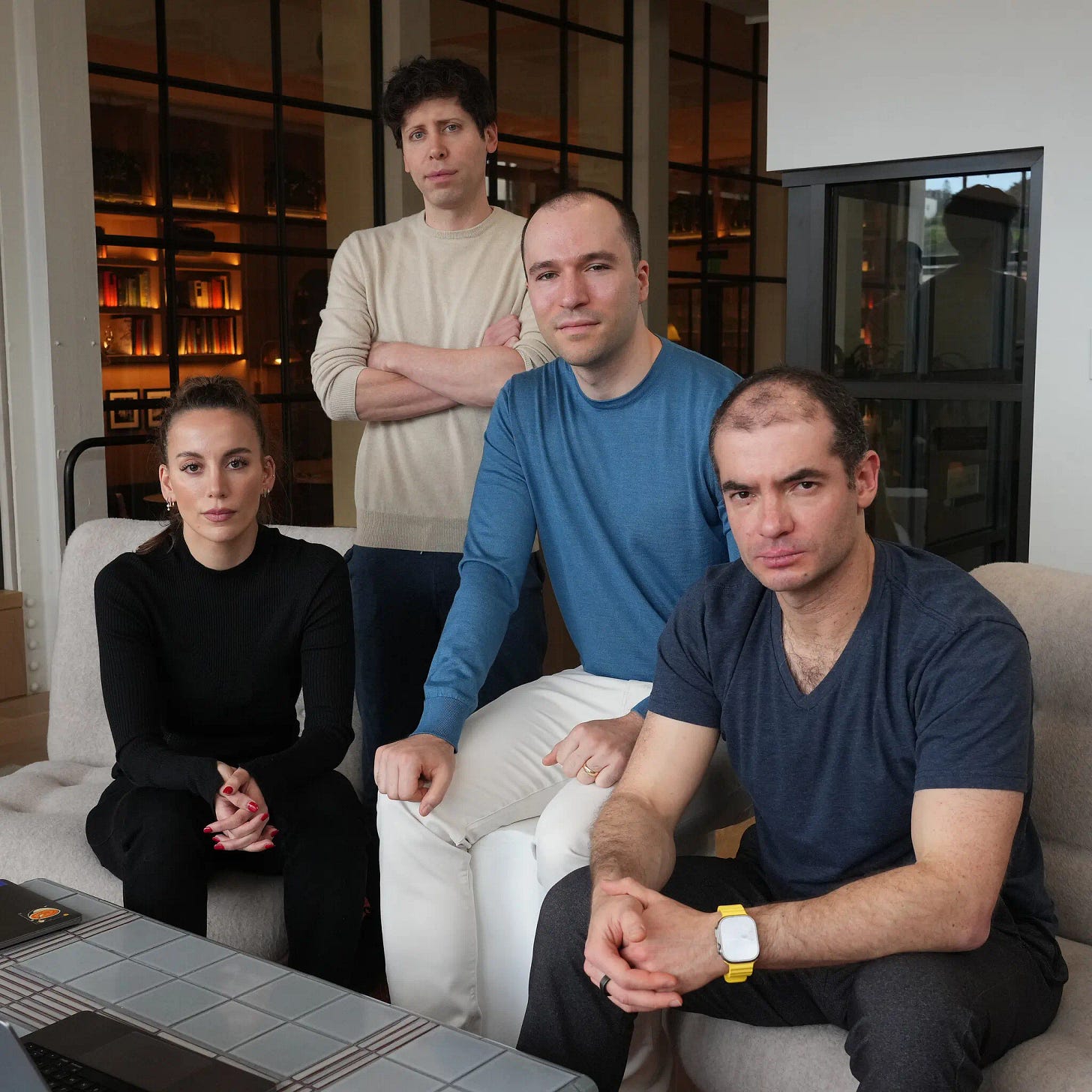

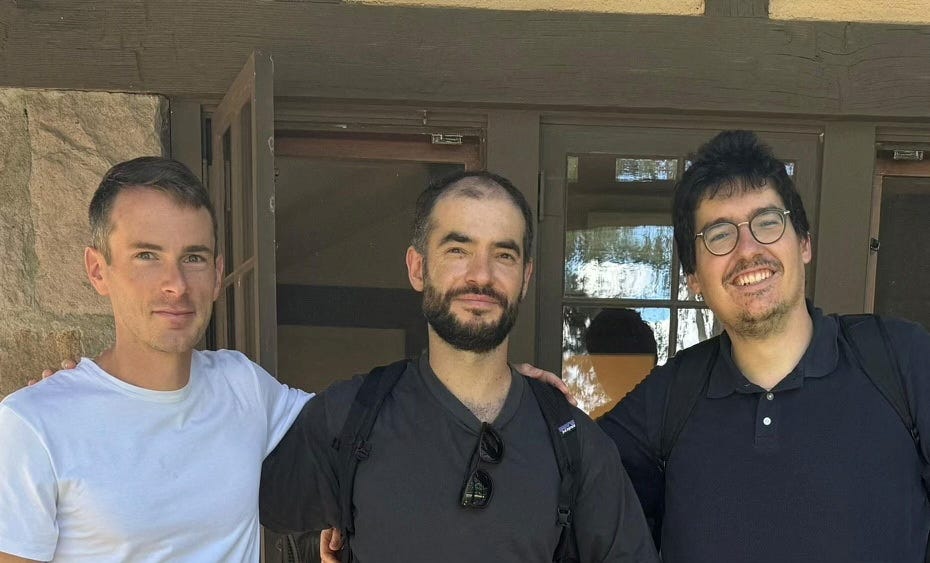

“Ilya Sutskever (middle) is chief scientist and Daniel Levy (right) is principal scientist, while Daniel Gross (left) is responsible for computing power and fundraising.”

“After Sutskever's departure, the company dismantled his "Superalignment" team, which worked to ensure AI stays aligned with human values to prepare for a day when AI exceeds human intelligence.”

This time, Ilya plans to do things differently:

“Unlike OpenAI's unorthodox corporate structure, implemented for AI safety reasons but which made Altman's ouster possible, SSI has a regular for-profit structure.”

“SSI is currently very much focused on hiring people who will fit in with its culture.”

“Gross said they spend hours vetting if candidates have "good character", and are looking for people with extraordinary capabilities rather than overemphasizing credentials and experience in the field.”

A billion in starting capital three months after founding is impressive. But as we’ve seen thus far, the ante to scale AI to the next few levels is likely to be tens and soon hundreds of billions in additional capital, well before there are clear pathways to profitable returns on that investment.

The funds were raised at a $5 billion valuation, which The Information puts in context as follows:

“This ranks SSI as among the most highly valued AI startups around, judging by our ranking of generative AI startups.”

“To be sure, at this very early stage of SSI’s life, the valuation that its investors ascribe to it is presumably a totally arbitrary number. There’s no revenue to put a multiple against. Maybe the investors are putting a multiple on Sutskever—who worked at Google before co-founding OpenAI—by comparing him to Character co-founders Noam Shazeer and Daniel De Freitas.”

That’s the fourth AI ‘Acquihire’ I discussed recently. They continue:

“Google paid $2.7 billion to get both Shazeer and Freitas when the tech giant agreed to hire most of Character’s staff last month. In other words, SSI’s valuation implies Sutskever is worth nearly two times what Shazeer and De Freitas are. It surely helped that tech investor Daniel Gross, who we profiled here, is a co-founder of SSI with Sutskever.”

SSI of course views this as the first in a series of funding steps:

“SSI says it plans to partner with cloud providers and chip companies to fund its computing power needs but hasn't yet decided which firms it will work with. AI startups often work with companies such as Microsoft and Nvidia to address their infrastructure needs.”

And of course the goal is SAFE AI SCALING:

“Sutskever was an early advocate of scaling, a hypothesis that AI models would improve in performance given vast amounts of computing power. The idea and its execution kicked off a wave of AI investment in chips, data centers and energy, laying the groundwork for generative AI advances like ChatGPT.”

“Sutskever said he will approach scaling in a different way than his former employer, without sharing details.”

"Everyone just says scaling hypothesis. Everyone neglects to ask, what are we scaling?" he said.”

"Some people can work really long hours and they'll just go down the same path faster. It's not so much our style. But if you do something different, then it becomes possible for you to do something special."

Those are some high-level hints that Ilya and team’s path to Safe AI is likely to differ from the approaches currently being pursued by peers large and small.

For now, we have a new horse in the US LLM AI hyperscaler race, joining OpenAI, Anthropic, Google, Amazon, Meta, Elon’s xAI/Tesla, and soon possibly Apple, amongst many others here, in China, and globally.

The More the Merrier. One can’t be too careful with AI, as is currently presumed. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)