AI: Google's TPUs making Nvidia GPUs a better competitor. RTZ #921

...and likely expanding the AI market for all

The Bigger Picture, Sunday, November 30, 2025

This week saw a double-pronged set of successes by Google's vertical AI stack vs both OpenAI and Nvidia, setting a new pace in this AI Tech Wave. As I discussed earlier in the week, Google’s Gemini 3 in various iterations is outperforming OpenAI’s latest and greatest GPT and ChatGPT iterations.

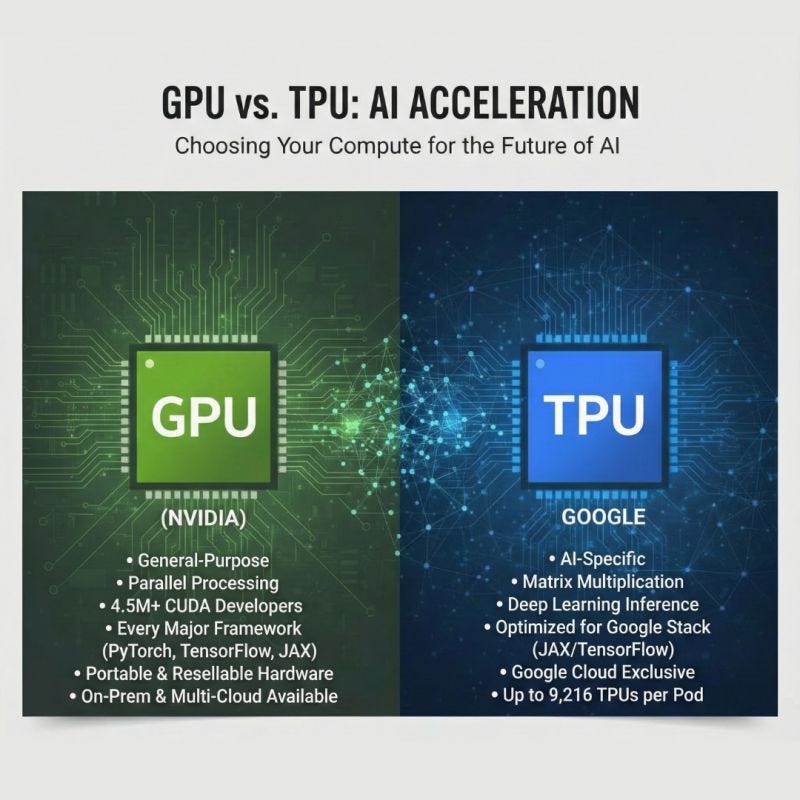

The other Google success is Meta now considering Google TPUs as an alternative to Nvidia’s leading AI GPUs. This builds on Google’s success with OpenAI also using Google TPUs. The Bigger Picture I’d like to discuss this Sunday is Google’s growing success with TPUs vs Nvidia, and how they’re BOTH expanding the AI market together.

As The Information puts it pithily, “Google Further Encroaches on Nvidia’s Turf With New AI Chip Push”:

“Google is talking to Meta and other cloud customers about letting them run Google’s TPU chips in their data centers. The effort could help expand the appeal of Google’s alternative to Nvidia’s AI chips.”

“Google is seeking to expand its TPU business by giving customers the option of on-premise use.”

“Meta Platforms is considering spending billions of dollars on Google TPUs, including for Meta data centers.”

“Google has discussed aiming for 10% of Nvidia’s revenue with its TPU chip business.”

The answer to who comes out on top in these headline questions isn’t as binary as one would like. A deeper analysis makes it clear that, for now, a more aggressive Google will likely make a great Nvidia better, benefit the AI ecosystem at large, and make the market opportunities bigger.

Semianalysis lays out this competitive dynamic well in “TPUv7: Google Takes a Swing at the King”, posed as a key question in investor minds today:

“The two best models in the world, Anthropic’s Claude 4.5 Opus and Google’s Gemini 3 have the majority of their training and inference infrastructure on Google’s TPUs and Amazon’s Trainium. Now Google is selling TPUs physically to multiple firms. Is this the end of Nvidia’s dominance?”

The answer seems to be “not quite”, and the situation remains dynamic:

“The dawn of the AI era is here, and it is crucial to understand that the cost structure of AI-driven software deviates considerably from traditional software. Chip microarchitecture and system architecture play a vital role in the development and scalability of these innovative new forms of software.”

The answers are fairly complex, given the many ways AI training and inference can be tweaked on leading LLMs for a vast and growing set of AI token calculations and configurations:

“The hardware infrastructure on which AI software runs has a notably larger impact on Capex and Opex, and subsequently the gross margins, in contrast to earlier generations of software, where developer costs were relatively larger. Consequently, it is even more crucial to devote considerable attention to optimizing your AI infrastructure to be able to deploy AI software. Firms that have an advantage in infrastructure will also have an advantage in the ability to deploy …”

“We’ve long believed that the TPU is among the world’s best systems for AI training and inference, neck and neck with king of the jungle Nvidia. 2.5 years ago we wrote about TPU supremacy, and this thesis has proven to be very correct.”

And Google TPU benefits for Google itself are being noticed by OpenAI and Nvidia:

“TPU performance has clearly caught the attention of its rivals. Sam Altman has acknowledged “rough vibes” ahead for OpenAI as Gemini has stolen the thunder from OpenAI.”

“Nvidia even put out a reassuring PR telling everyone to keep calm and carry on — we are well ahead of the competition.”

Semianalysis goes on to count Google’s many successes to date on these dual tracks:

“These past few months have been win after win for the Google Deepmind, GCP, and TPU complex. The huge upwards revisions to TPU production volumes, Anthropic’s >1GW TPU buildout, SOTA models Gemini 3 and Opus 4.5 trained on TPU, and now an expanding list of clients being targeted (Meta, SSI, xAI, OAI) lining up for TPUs. This has driven a huge re-rating of the Google and TPU supply chain at the expense of the Nvidia GPU-focused supply chain.”

Google had long used the TPU for its own internal AI workloads, and is only now deploying it more aggressively externally:

“The TPU stack has long rivaled Nvidia’s AI hardware, yet it has mostly supported Google’s internal workloads. In typical Google fashion, it never fully commercialized the TPU even after making it available to GCP customers in 2018. That is starting to change. Over the past few months, Google has mobilized efforts across the whole stack to bring TPUs to external customers through GCP or by selling complete TPU systems as a merchant vendor. The search giant is leveraging its strong in-house silicon design capabilities to become a truly differentiated cloud provider.”

Both Google and Nvidia are combining technical improvements on their AI chip roadmaps with their substantial capabilities to support customers and partners across various iterations. The whole piece is worth reading for that nuance and detail, supported by useful charts and graphs.

But the Bigger Picture to take away, for the next couple of years at least, is that both offerings expand the opportunities for the ecosystem’s customers and partners:

“Now that Google has gotten their act together on TPU and is selling them externally for people to put in their own datacenters, what are the implications on Nvidia’s business? Does Nvidia finally have a legitimate competitor that will put its market share and margins at threat?”

“While Google’s Ironwood TPU is a proper competitor to Blackwell, Nvidia once again hits back with Vera Rubin. Vera Rubin will deliver huge performance uplifts across compute, memory and network with TPU v8 seeing much smaller improvements.”

“The 8th generation Google TPUs will be available in 2027 and will be competing against Nvidia Vera Rubin.”

“While TPU (and Amazon’s Trainium) have found ways to thread the performance/TCO [total cost of ownership] needle in Anthropic’s favor, Nvidia always runs fast and delivers innovation at breakneck pace that is simply too hard to ignore.”

The bottom line is that a more competitive Google AI chip roadmap makes Nvidia better as well, especially as both companies are responding actively and dynamically to the rapid innovations underway in AI training, inference and a fast-growing set of AI applications and services in this AI Tech Wave.

And that is a Bigger Picture worth keeping in mind for now. Stay tuned.

(Update: The Information has a good Q&A style Google TPU vs Nvidia GPU discussion worth a read)

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)
