AI: Doing the Reasoning Math Reliably

...crossing the probabilistic and deterministic streams

Ultimately, the AI Holy Grail is about ‘crossing the streams’, to paraphrase ‘Ghostbusters’.

In a piece titled “OpenAI made an AI breakthrough before Altman firing, stoking excitement and concern”, The Information provides color on an OpenAI research development that addresses the holy grail issue of AI software vs traditional software in these early days of the AI Tech Wave. It potentially crosses (fuses) the probabilistic (aka stochastic) approach of the former with the deterministic approach of the latter.

Here’s the context:

“One day before he was fired by OpenAI’s board last week, Sam Altman alluded to a recent technical advance the company had made that allowed it to “push the veil of ignorance back and the frontier of discovery forward.” The cryptic remarks at the APEC CEO Summit went largely unnoticed as the company descended into turmoil.”

“But some OpenAI employees believe Altman’s comments referred to an innovation by the company’s researchers earlier this year that would allow them to develop far more powerful artificial intelligence models, a person familiar with the matter said. The technical breakthrough, spearheaded by OpenAI chief scientist Ilya Sutskever, raised concerns among some staff that the company didn’t have proper safeguards in place to commercialize such advanced AI models, this person said.”

Here’s the relevant technology significance, filtering out the corporate drama:

“In the following months, senior OpenAI researchers used the innovation to build systems that could solve basic math problems, a difficult task for existing AI models. Jakub Pachocki and Szymon Sidor, two top researchers, used Sutskever’s work to build a model called Q* (pronounced “Q-Star”) that was able to solve math problems that it hadn’t seen before, an important technical milestone. A demo of the model circulated within OpenAI in recent weeks, and the pace of development alarmed some researchers focused on AI safety.”

“The work of Sutskever’s team, which has not previously been reported, and the concern inside the organization, suggest that tensions within OpenAI about the pace of its work will continue even after Altman was reinstated as CEO Tuesday night, and highlights a potential divide among executives. (See more about the safety conflicts at OpenAI.)”

“Last week, Pachocki and Sidor were among the first senior employees to resign following Altman’s ouster. Details of Sutskever’s breakthrough, and his concerns about AI safety, help explain his participation in Altman’s high-profile ouster, as well as why Sidor and Pachocki resigned quickly after Altman was fired. The two returned to the company after Altman’s reinstatement.”

OpenAI’s organizational drama aside, this work is notable for the AI industry even at this early stage:

“Lukasz Kaiser, one of the co-authors of the groundbreaking Transformers research paper, which describes an invention that paved the way for more sophisticated AI models. Among the techniques the team experimented with was a machine-learning concept known as “test-time computation,” which is meant to boost language models’ problem-solving abilities.”
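The Information doesn’t say what form OpenAI’s “test-time computation” took. One widely discussed form is simply to spend more compute at answer time: sample many answers to the same question and keep the most common one (often called majority voting or self-consistency). The Python sketch below illustrates that general idea with a made-up toy model. `toy_model_answer`, its 40% hit rate and its candidate answers are all hypothetical, and nothing here is OpenAI’s method.

```python
import random
from collections import Counter


def toy_model_answer(rng: random.Random) -> int:
    """Hypothetical toy 'model' answering 17 * 24.

    Returns the right answer (408) 40% of the time, otherwise one of
    several plausible-looking wrong answers, each equally likely.
    """
    if rng.random() < 0.4:
        return 408
    return rng.choice([398, 418, 428, 4080, 308])


def answer_with_test_time_compute(n_samples: int, rng: random.Random) -> int:
    """Spend more inference-time compute: sample n answers, return the most common."""
    votes = Counter(toy_model_answer(rng) for _ in range(n_samples))
    return votes.most_common(1)[0][0]


def accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of trials in which majority voting lands on the right answer."""
    rng = random.Random(seed)
    hits = sum(
        answer_with_test_time_compute(n_samples, rng) == 408 for _ in range(trials)
    )
    return hits / trials


if __name__ == "__main__":
    for n in (1, 5, 25, 101):
        print(f"{n:>3} samples per question -> accuracy {accuracy(n):.2f}")
```

The toy model never gets any smarter, yet accuracy climbs as more samples are drawn, because the correct answer is more likely than any single wrong one. If one wrong answer were more likely than the right one, voting would amplify the error instead, which is why this kind of extra compute is only part of the story.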

I realize this goes a bit into the math weeds of computing. But here’s why it’s important aside from the political drama and intrigue.

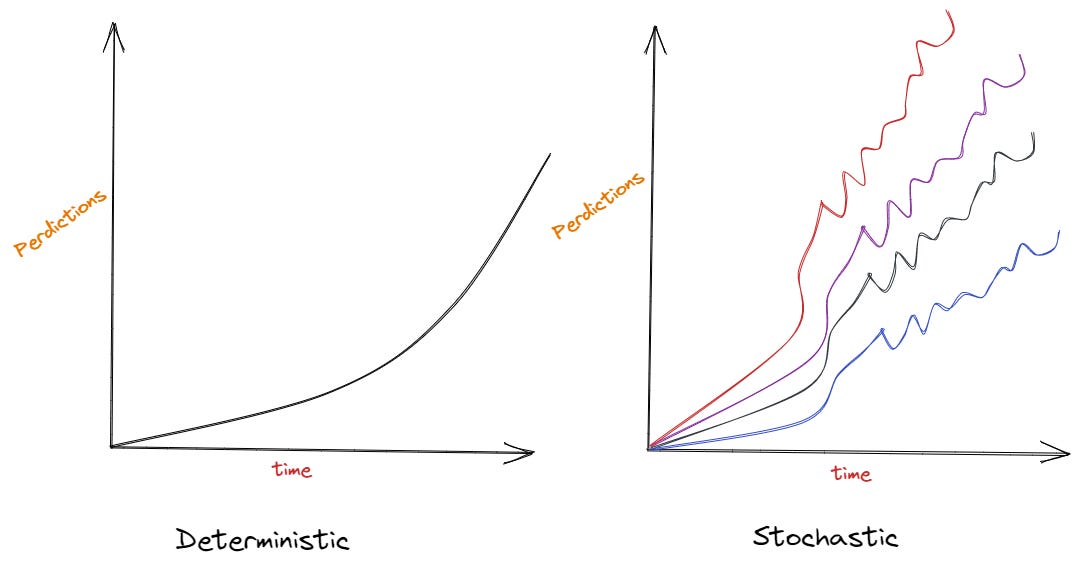

The current holy grail of LLM AI research is fusing the uniquely probabilistic way AI produces its results with the deterministic way computers have long produced reliable answers, doing math with 0s and 1s. In a piece titled “AI: Can’t see how it works” back in June, I underlined:

“One of the hardest things for regular folks to get their head around is how AI software is different from traditional software. Why is AI eating software?”

“The way I’ve tried to explain AI software before is to highlight that traditional software is based on deterministic code vs AI software being run as probabilistic code. Of course on super fast parallel chips (GPUs), using massive amounts of reinforcement learning feedback loops, constantly feeding on all our Data ever put online, to make the eventual results of the Foundation LLM AI models more reliable and relevant. Less hallucinatory.”

“And how all this makes this AI Tech wave far more potent in human opportunities than any other tech wave before it like the PC or the Internet.”

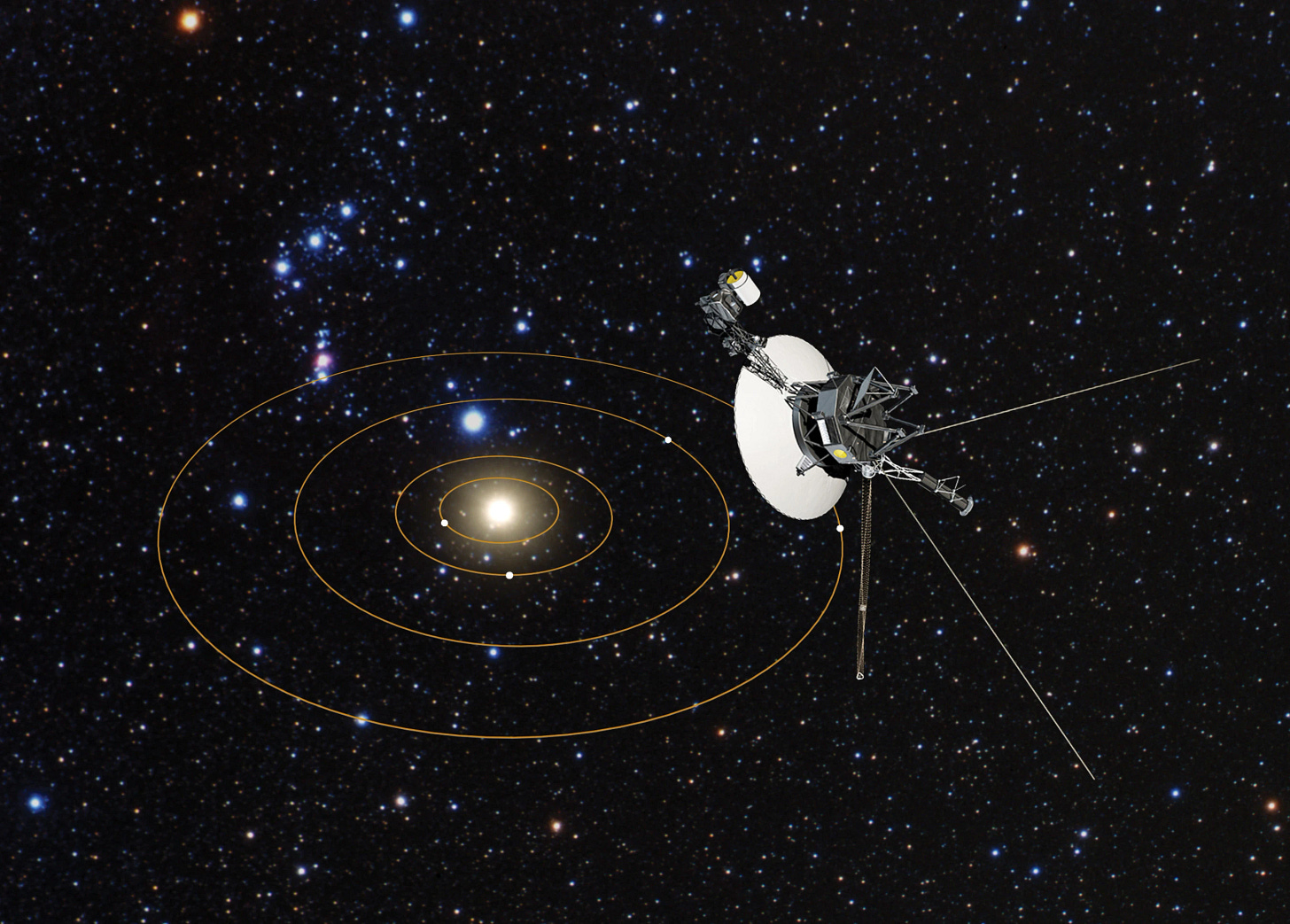

Computer software and hardware have traditionally done rock-solid math: utterly reliable and repeatable, giving the same answer to the same decimal place every single time.

The way humans have done math for centuries.

Indeed, it was math done with slide rules that got the two Voyager spacecraft, launched in 1977, to become the farthest-traveling human-made objects, now journeying at the edges of our Solar System.

AI software does math in probabilities, generating answers that seem accurate but are not always so. Indeed, even our best LLMs today can produce inaccurate results a meaningful share of the time. A much-needed area of improvement indeed.
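To make the contrast concrete, here is a toy Python sketch, assuming a made-up distribution over answers to “17 × 24”. `deterministic_multiply` returns 408 every time; `probabilistic_answer` samples from hypothetical weights in `TOY_ANSWER_DISTRIBUTION`, loosely mimicking how a generative model samples its output. Real LLMs sample tokens rather than whole numbers, so treat this as an analogy, not a model of how they actually work.

```python
import random
from collections import Counter

# Hypothetical toy "model": a probability distribution over candidate
# answers to 17 * 24. The weights are made up for illustration only.
TOY_ANSWER_DISTRIBUTION = {408: 0.70, 398: 0.15, 418: 0.10, 4080: 0.05}


def deterministic_multiply(a: int, b: int) -> int:
    """Traditional software: the same inputs always give the same answer."""
    return a * b


def probabilistic_answer(rng: random.Random) -> int:
    """Sample one answer in proportion to the toy weights."""
    answers = list(TOY_ANSWER_DISTRIBUTION)
    weights = list(TOY_ANSWER_DISTRIBUTION.values())
    return rng.choices(answers, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    print("deterministic:", [deterministic_multiply(17, 24) for _ in range(5)])
    samples = [probabilistic_answer(rng) for _ in range(1000)]
    counts = Counter(samples)
    print("probabilistic:", counts.most_common())
    print("share correct:", counts[408] / len(samples))
```

The deterministic function prints 408 five times; the sampled answers are right most of the time but not every time, which is exactly the reliability gap described above.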

Reuters provides more context on the OpenAI development:

“Some at OpenAI believe Q* (pronounced Q-Star) could be a breakthrough in the startup's search for what's known as artificial general intelligence (AGI), one of the people told Reuters. OpenAI defines AGI as autonomous systems that surpass humans in most economically valuable tasks.”

“Given vast computing resources, the new model was able to solve certain mathematical problems.”

“Researchers consider math to be a frontier of generative AI development. Currently, generative AI is good at writing and language translation by statistically predicting the next word, and answers to the same question can vary widely. But conquering the ability to do math — where there is only one right answer — implies AI would have greater reasoning capabilities resembling human intelligence. This could be applied to novel scientific research, for instance, AI researchers believe.”

“Unlike a calculator that can solve a limited number of operations, AGI can generalize, learn and comprehend.”

The math being done with this approach is still at a nascent level. But it’s a promising step toward fusing the two ways we use computers, so that they reason better and help humans do math better. By crossing the streams.
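One simple way to picture “crossing the streams” is generate-and-verify: a probabilistic component proposes answers, and a deterministic checker accepts only those that provably work. The Python sketch below assumes a hypothetical `toy_proposer` that guesses small integers and a deterministic `verify_root` for the equation x^2 - 5x + 6 = 0. It illustrates the general pattern only; neither The Information nor Reuters details how Q* works.

```python
import random
from typing import Callable, Optional


def verify_root(x: int) -> bool:
    """Deterministic check: is x a root of x^2 - 5x + 6 = 0?"""
    return x * x - 5 * x + 6 == 0


def toy_proposer(rng: random.Random) -> int:
    """Hypothetical probabilistic 'model': guesses an integer in [-10, 10]."""
    return rng.randint(-10, 10)


def generate_and_verify(
    propose: Callable[[random.Random], int],
    verify: Callable[[int], bool],
    budget: int,
    rng: random.Random,
) -> Optional[int]:
    """Draw up to `budget` proposals; return the first one that passes the check."""
    for _ in range(budget):
        candidate = propose(rng)
        if verify(candidate):
            return candidate
    # No verified answer within the budget: return nothing rather than a guess.
    return None


if __name__ == "__main__":
    rng = random.Random(7)
    result = generate_and_verify(toy_proposer, verify_root, budget=200, rng=rng)
    print("verified answer:", result)
```

Whatever the proposer’s error rate, any answer that comes back has passed the deterministic check. The cost of unreliable guessing is paid in compute (the `budget`), and the function returns `None` instead of an unverified answer.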

The new approaches promise to fuse probabilities and possibilities in a way far beyond the slide rules that got us to the edges of the solar system. And that’s the drama to be thankful for and to focus on going forward.

Happy Thanksgiving all.

Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)